Liang Lin

Adversarial Reinforced Instruction Attacker for Robust Vision-Language Navigation

Jul 23, 2021

Abstract: Language instruction plays an essential role in natural language grounded navigation tasks. However, navigators trained with limited human-annotated instructions may have difficulty accurately capturing key information from a complicated instruction at different timesteps, leading to poor navigation performance. In this paper, we aim to train a more robust navigator that is capable of dynamically extracting crucial factors from a long instruction by using an adversarial attacking paradigm. Specifically, we propose a Dynamic Reinforced Instruction Attacker (DR-Attacker), which learns to mislead the navigator into moving to the wrong target by destroying the most instructive information in instructions at different timesteps. By formulating the perturbation generation as a Markov Decision Process, DR-Attacker is optimized by a reinforcement learning algorithm to generate perturbed instructions sequentially during navigation, according to a learnable attack score. Then, the perturbed instructions, which serve as hard samples, are used to improve the robustness of the navigator with an effective adversarial training strategy and an auxiliary self-supervised reasoning task. Experimental results on both Vision-and-Language Navigation (VLN) and Navigation from Dialog History (NDH) tasks show the superiority of our proposed method over state-of-the-art methods. Moreover, the visualization analysis shows the effectiveness of the proposed DR-Attacker, which can successfully attack crucial information in the instructions at different timesteps. Code is available at https://github.com/expectorlin/DR-Attacker.

* Accepted by TPAMI 2021

Hybrid and dynamic policy gradient optimization for bipedal robot locomotion

Jul 05, 2021

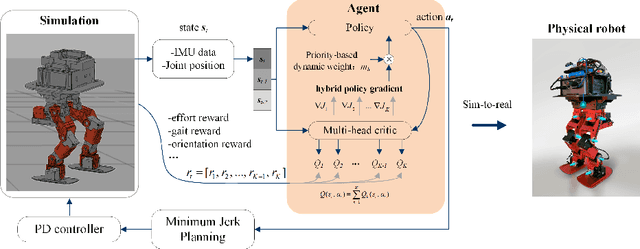

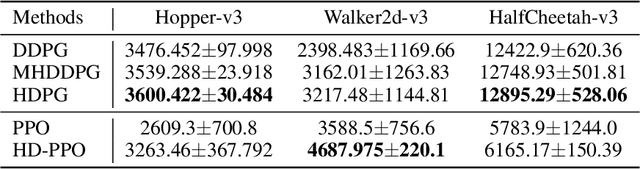

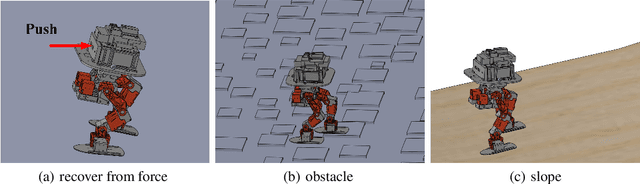

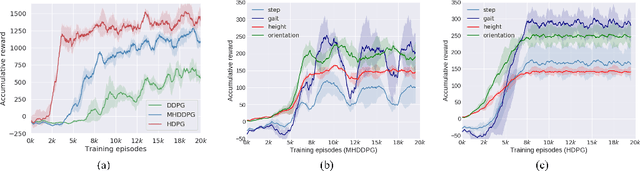

Abstract: Controlling a bipedal robot that is not statically stable is challenging due to the complex dynamics and multi-criterion optimization involved. Recent works have demonstrated the effectiveness of deep reinforcement learning (DRL) for simulated and physically implemented bipeds. In these methods, the rewards from different criteria are normally summed to learn a single value function. However, this may cause the loss of dependency information between hybrid rewards and lead to a sub-optimal policy. In this work, we propose a novel policy gradient reinforcement learning method for biped locomotion, allowing the control policy to be simultaneously optimized by multiple criteria using a dynamic mechanism. Our proposed method applies a multi-head critic to learn a separate value function for each component reward function. This also leads to hybrid policy gradients. We further propose dynamic weights for the hybrid policy gradients to optimize the policy with different priorities. This hybrid and dynamic policy gradient (HDPG) design makes the agent learn more efficiently. We show that the proposed method outperforms summed-up-reward approaches and is able to transfer to physical robots. The MuJoCo results further demonstrate the effectiveness and generalization of our HDPG.
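
The idea of combining per-criterion critics with weights can be illustrated with a minimal Python sketch. This is not the authors' implementation: the abstract does not specify how the dynamic weights are computed, so the softmax over hypothetical `priorities` scores, the one-step TD advantage, and the function names `dynamic_weights` and `hybrid_advantage` are all assumptions made for illustration.

```python
import math


def dynamic_weights(priorities, temperature=1.0):
    """Turn per-component priority scores into weights that sum to 1.

    ASSUMPTION: a softmax is used here only as a placeholder rule;
    the abstract does not say how HDPG derives its weights.
    """
    top = max(priorities)  # subtract the max for numerical stability
    exps = [math.exp((p - top) / temperature) for p in priorities]
    total = sum(exps)
    return [e / total for e in exps]


def hybrid_advantage(rewards, values, next_values, weights, gamma=0.99):
    """Weighted sum of one-step TD advantages, one per critic head.

    rewards[k], values[k], next_values[k] belong to reward component k.
    """
    advantages = [
        r + gamma * nv - v
        for r, v, nv in zip(rewards, values, next_values)
    ]
    return sum(w * a for w, a in zip(weights, advantages))


if __name__ == "__main__":
    # Two hypothetical reward components, e.g. forward speed and balance.
    w = dynamic_weights([0.0, 0.0])
    print(w, hybrid_advantage([1.0, 2.0], [0.0, 0.0], [0.0, 0.0], w))
```

In contrast, a summed-reward baseline would compute a single advantage from `sum(rewards)` and one value estimate, so the per-component terms could not be weighted separately.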

Neural-Symbolic Solver for Math Word Problems with Auxiliary Tasks

Jul 03, 2021

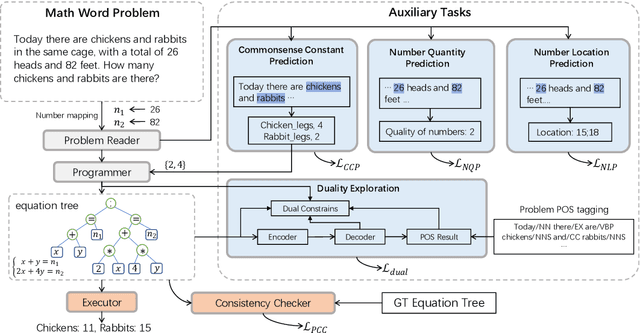

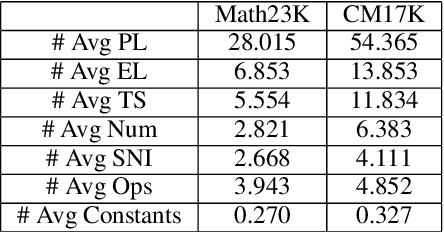

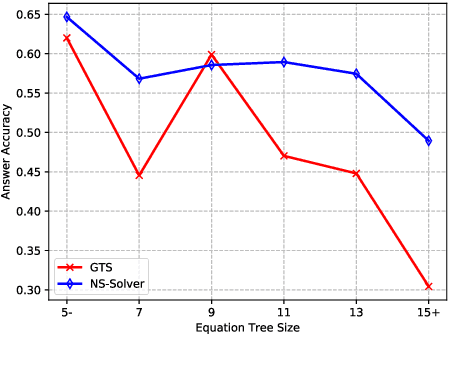

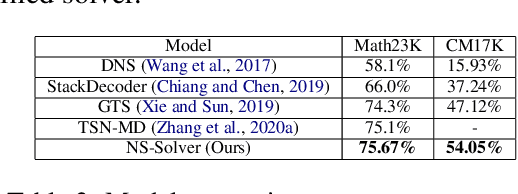

Abstract: Previous math word problem solvers following the encoder-decoder paradigm fail to explicitly incorporate essential math symbolic constraints, leading to unexplainable and unreasonable predictions. Herein, we propose the Neural-Symbolic Solver (NS-Solver) to explicitly and seamlessly incorporate different levels of symbolic constraints through auxiliary tasks. Our NS-Solver consists of a problem reader to encode problems, a programmer to generate symbolic equations, and a symbolic executor to obtain answers. Along with target expression supervision, our solver is also optimized via four new auxiliary objectives to enforce different kinds of symbolic reasoning: a) a self-supervised number prediction task that predicts both number quantity and number locations; b) a commonsense constant prediction task that predicts what prior knowledge (e.g., how many legs a chicken has) is required; c) a program consistency checker that computes the semantic loss between the predicted equation and the target equation to ensure reasonable equation mapping; d) a duality exploiting task that exploits the quasi-duality between symbolic equation generation and the problem's part-of-speech generation to enhance the understanding ability of a solver. In addition, to provide a more realistic and challenging benchmark for developing a universal and scalable solver, we construct a new large-scale MWP benchmark, CM17K, consisting of four kinds of MWPs (arithmetic, one-unknown linear, one-unknown non-linear, equation set) with more than 17K samples. Extensive experiments on Math23K and our CM17K demonstrate the superiority of our NS-Solver compared to state-of-the-art methods.

Prototypical Graph Contrastive Learning

Jun 17, 2021

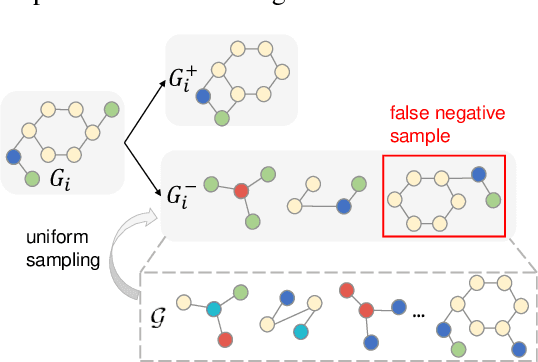

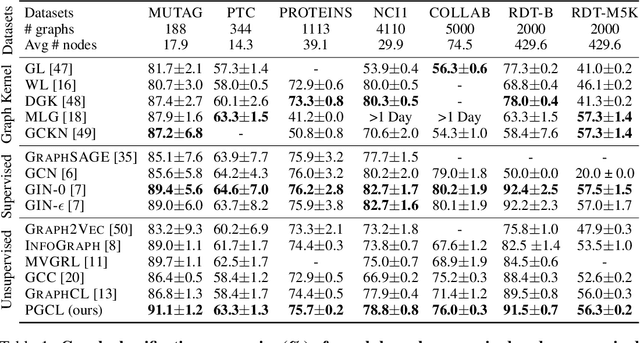

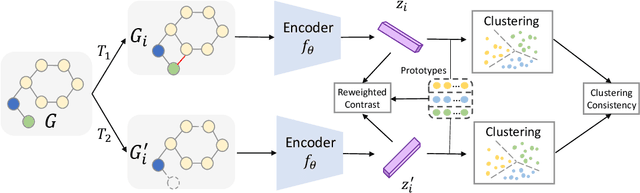

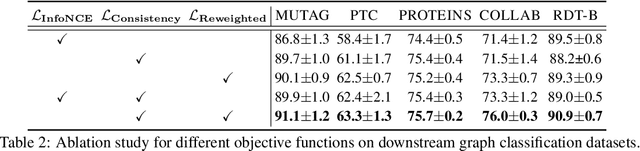

Abstract: Graph-level representations are critical in various real-world applications, such as predicting the properties of molecules. In practice, however, precise graph annotations are generally very expensive and time-consuming to obtain. To address this issue, graph contrastive learning constructs an instance discrimination task that pulls together positive pairs (augmentation pairs of the same graph) and pushes away negative pairs (augmentation pairs of different graphs) for unsupervised representation learning. However, since the negatives for a query are uniformly sampled from all graphs, existing methods suffer from a critical sampling bias issue, i.e., the negatives are likely to have the same semantic structure as the query, leading to performance degradation. To mitigate this sampling bias issue, we propose a Prototypical Graph Contrastive Learning (PGCL) approach. Specifically, PGCL models the underlying semantic structure of the graph data by clustering semantically similar graphs into the same group, and simultaneously encourages clustering consistency for different augmentations of the same graph. Then, given a query, it performs negative sampling by drawing graphs from clusters that differ from the cluster of the query, which ensures the semantic difference between the query and its negative samples. Moreover, for a query, PGCL further reweights its negative samples based on the distance between their prototypes (cluster centroids) and the query prototype, such that negatives with a moderate prototype distance receive relatively large weights. This reweighting strategy is proved to be more effective than uniform sampling. Experimental results on various graph benchmarks demonstrate the advantages of our PGCL over state-of-the-art methods.
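
The cluster-aware negative sampling and reweighting described above can be sketched in a few lines of Python. This is a hedged illustration, not the PGCL code: the abstract only says that negatives with a moderate prototype distance get relatively large weights, so the Gaussian bump centered on the mean distance, the `sigma` parameter, and the function name `weighted_negatives` are assumptions. Cluster assignments and prototypes are taken as given inputs.

```python
import math


def weighted_negatives(query_idx, cluster_of, prototypes, sigma=1.0):
    """Return {graph index: weight} for the negatives of one query graph.

    cluster_of[i] is the cluster id of graph i; prototypes[c] is the
    centroid (a list of floats) of cluster c.
    """
    q_cluster = cluster_of[query_idx]
    q_proto = prototypes[q_cluster]

    # Negatives come only from clusters other than the query's cluster.
    candidates = [i for i, c in enumerate(cluster_of) if c != q_cluster]
    if not candidates:
        return {}

    # Distance between each negative's prototype and the query prototype.
    dists = {i: math.dist(prototypes[cluster_of[i]], q_proto) for i in candidates}

    # ASSUMPTION: favor "moderate" distances with a Gaussian bump
    # centered on the mean distance; the paper's exact rule may differ.
    mean_d = sum(dists.values()) / len(dists)
    raw = {i: math.exp(-((d - mean_d) ** 2) / (2 * sigma ** 2)) for i, d in dists.items()}
    total = sum(raw.values())
    return {i: w / total for i, w in raw.items()}


if __name__ == "__main__":
    clusters = [0, 0, 1, 2, 3]  # graphs 0 and 1 share a cluster
    protos = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0]]
    print(weighted_negatives(0, clusters, protos))
```

In this toy input, graph 1 is never returned as a negative for graph 0 because both sit in the same cluster, and the graph whose prototype lies at the middle distance receives the largest weight.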

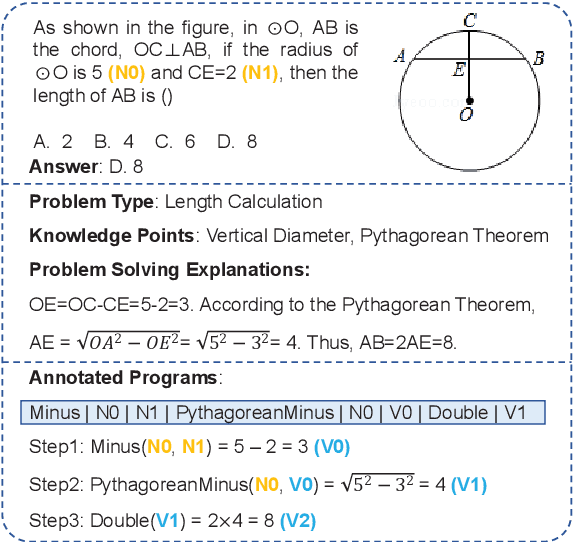

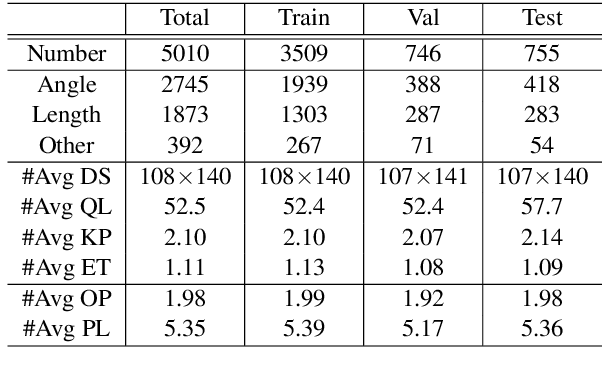

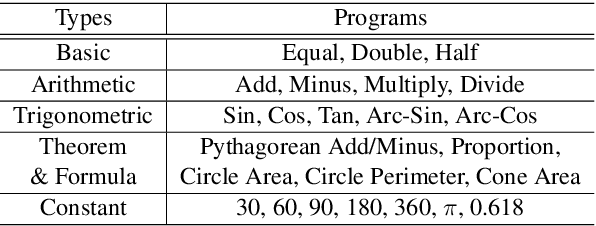

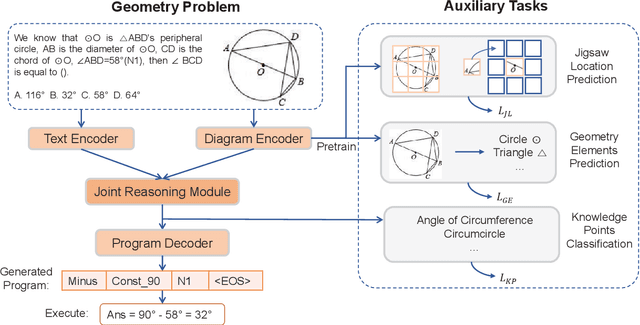

GeoQA: A Geometric Question Answering Benchmark Towards Multimodal Numerical Reasoning

Jun 08, 2021

Abstract: Automatic math problem solving has recently attracted increasing attention as a long-standing AI benchmark. In this paper, we focus on solving geometric problems, which requires a comprehensive understanding of textual descriptions, visual diagrams, and theorem knowledge. However, existing methods depend heavily on handcrafted rules and have been evaluated only on small-scale datasets. Therefore, we propose a Geometric Question Answering dataset, GeoQA, containing 5,010 geometric problems with corresponding annotated programs, which illustrate the solving process of the given problems. Compared with another publicly available dataset, GeoS, GeoQA is 25 times larger, and its program annotations can provide a practical testbed for future research on explicit and explainable numerical reasoning. Moreover, we introduce a Neural Geometric Solver (NGS) to address geometric problems by comprehensively parsing multimodal information and generating interpretable programs. We further add multiple self-supervised auxiliary tasks to NGS to enhance cross-modal semantic representation. Extensive experiments on GeoQA validate the effectiveness of our proposed NGS and auxiliary tasks. However, the results are still significantly lower than human performance, which leaves considerable room for future research. Our benchmark and code are released at https://github.com/chen-judge/GeoQA.

Towards Quantifiable Dialogue Coherence Evaluation

Jun 01, 2021

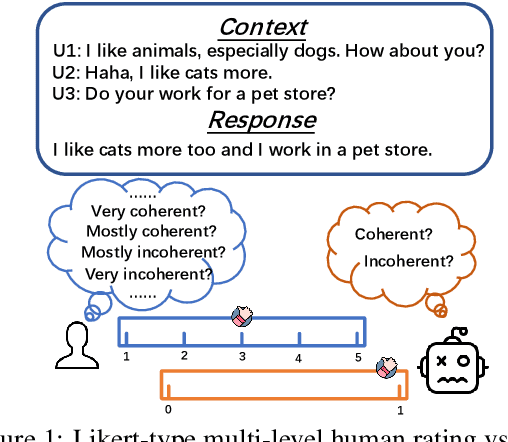

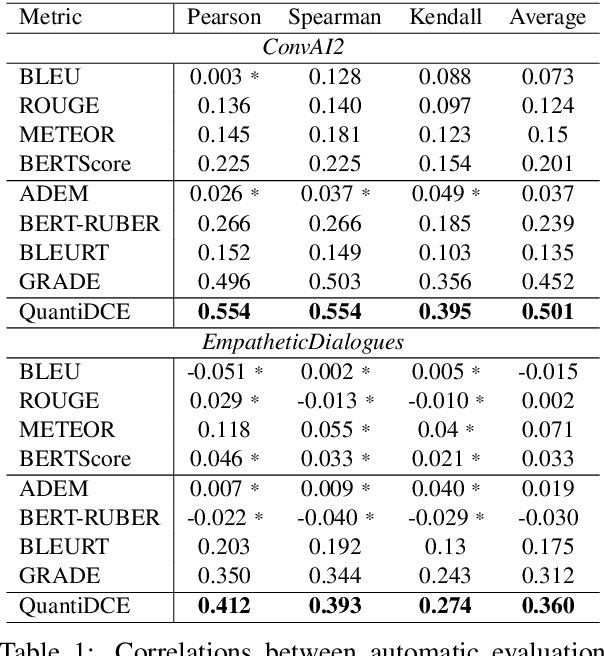

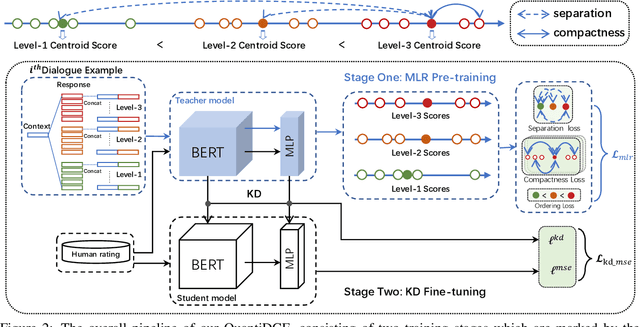

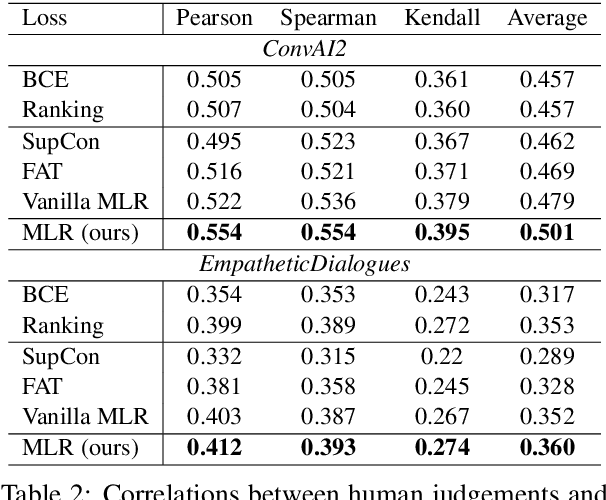

Abstract: Automatic dialogue coherence evaluation has attracted increasing attention and is crucial for developing promising dialogue systems. However, existing metrics have two major limitations: (a) they are mostly trained in a simplified two-level setting (coherent vs. incoherent), while humans give Likert-type multi-level coherence scores, dubbed "quantifiable"; (b) their predicted coherence scores cannot align with actual human rating standards due to the absence of human guidance during training. To address these limitations, we propose Quantifiable Dialogue Coherence Evaluation (QuantiDCE), a novel framework that aims to train a quantifiable dialogue coherence metric that can reflect actual human rating standards. Specifically, QuantiDCE includes two training stages: Multi-Level Ranking (MLR) pre-training and Knowledge Distillation (KD) fine-tuning. During MLR pre-training, a new MLR loss is proposed to enable the model to learn a coarse judgement of coherence degrees. Then, during KD fine-tuning, the pretrained model is further fine-tuned to learn the actual human rating standards with only a small amount of human-annotated data. To promote generalizability even with limited fine-tuning data, a novel KD regularization is introduced to retain the knowledge learned at the pre-training stage. Experimental results show that the model trained by QuantiDCE presents stronger correlations with human judgements than other state-of-the-art metrics.

Solving Inefficiency of Self-supervised Representation Learning

Apr 18, 2021

Abstract: Self-supervised learning has attracted great interest due to its tremendous potential for learning discriminative representations in an unsupervised manner. Along this direction, contrastive learning achieves current state-of-the-art performance. Despite the acknowledged successes, existing contrastive learning methods suffer from very low learning efficiency, e.g., taking about ten times more training epochs than supervised learning for comparable recognition accuracy. In this paper, we discover two contradictory phenomena in contrastive learning that we call the under-clustering and over-clustering problems, which are major obstacles to learning efficiency. Under-clustering means that the model cannot efficiently learn to discover the dissimilarity between inter-class samples when the negative sample pairs for contrastive learning are insufficient to differentiate all the actual object categories. Over-clustering implies that the model cannot efficiently learn the feature representation from excessive negative sample pairs, which include many outliers and thus force the model to over-cluster samples of the same actual category into different clusters. To simultaneously overcome these two problems, we propose a novel self-supervised learning framework using a median triplet loss. Precisely, we employ a triplet loss that tends to maximize the relative distance between the positive pair and negative pairs to address the under-clustering problem; and we construct the negative pair by selecting the negative sample with the median similarity score from all negative samples to avoid the over-clustering problem, guaranteed by the Bernoulli Distribution model. We extensively evaluate our proposed framework on several large-scale benchmarks (e.g., ImageNet, SYSU-30k, and COCO). The results demonstrate the superior performance of our model over the latest state-of-the-art methods by a clear margin.
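
A minimal Python sketch of the median-negative selection and triplet loss described above follows. It is an illustration under stated assumptions rather than the paper's implementation: cosine similarity, the hinge form with a `margin` of 0.5, taking the upper-middle element when the number of negatives is even, and the names `median_negative` and `median_triplet_loss` are all choices made here, not details given in the abstract.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def median_negative(query, negatives):
    """Pick the negative whose similarity to the query is the median.

    With an even number of negatives, the upper-middle one is taken.
    """
    ranked = sorted(negatives, key=lambda n: cosine(query, n))
    return ranked[len(ranked) // 2]


def median_triplet_loss(query, positive, negatives, margin=0.5):
    """Hinge triplet loss using only the median-similarity negative.

    ASSUMPTION: the loss is zero once the positive similarity exceeds
    the median negative similarity by at least `margin`.
    """
    negative = median_negative(query, negatives)
    return max(0.0, margin - cosine(query, positive) + cosine(query, negative))


if __name__ == "__main__":
    q = [1.0, 0.0]
    negs = [[1.0, 0.1], [0.0, 1.0], [-1.0, 0.0]]  # very similar, orthogonal, opposite
    print(median_negative(q, negs))
    print(median_triplet_loss(q, [0.0, 1.0], negs))
```

Selecting the median skips both the hardest negative (possibly a same-category sample mistaken for a negative) and the easiest one (which carries little training signal), which is the intuition the abstract gives for avoiding over-clustering.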

Joint Learning of Neural Transfer and Architecture Adaptation for Image Recognition

Mar 31, 2021

Abstract: Current state-of-the-art visual recognition systems usually rely on the following pipeline: (a) pretraining a neural network on a large-scale dataset (e.g., ImageNet) and (b) fine-tuning the network weights on a smaller, task-specific dataset. Such a pipeline assumes that weight adaptation alone is able to transfer the network capability from one domain to another, based on the strong assumption that a fixed architecture is appropriate for all domains. However, each domain with a distinct recognition target may need different levels or paths of the feature hierarchy, where some neurons may become redundant and others are re-activated to form new network structures. In this work, we prove that dynamically adapting network architectures tailored to each domain task, along with weight fine-tuning, improves both efficiency and effectiveness compared to the existing image recognition pipeline that only tunes the weights regardless of the architecture. Our method can be easily generalized to an unsupervised paradigm by replacing supernet training with self-supervised learning in the source domain tasks and performing linear evaluation in the downstream tasks. This further improves the search efficiency of our method. Moreover, we provide principled and empirical analysis to explain why our approach works by investigating the ineffectiveness of existing neural architecture search. We find that preserving the joint distribution of the network architecture and weights is important. This analysis not only benefits image recognition but also provides insights for crafting neural networks. Experiments on five representative image recognition tasks (person re-identification, age estimation, gender recognition, image classification, and unsupervised domain adaptation) demonstrate the effectiveness of our method.

Learning Class-Agnostic Pseudo Mask Generation for Box-Supervised Semantic Segmentation

Mar 09, 2021

Abstract: Recently, several weakly supervised learning methods have been devoted to utilizing bounding box supervision for training deep semantic segmentation models. Most existing methods leverage generic proposal generators (e.g., dense CRF and MCG) to produce enhanced segmentation masks for further training segmentation models. These proposal generators, however, are generic and not specifically designed for box-supervised semantic segmentation, thereby leaving some leeway for improving segmentation performance. In this paper, we seek a more accurate learning-based class-agnostic pseudo mask generator tailored to box-supervised semantic segmentation. To this end, we resort to a pixel-level annotated auxiliary dataset whose class labels do not overlap with those of the box-annotated dataset. To learn the pseudo mask generator from the auxiliary dataset, we present a bi-level optimization formulation. In particular, the lower subproblem is used to learn box-supervised semantic segmentation, while the upper subproblem is used to learn an optimal class-agnostic pseudo mask generator. The learned pseudo segmentation mask generator can then be deployed to the box-annotated dataset to improve weakly supervised semantic segmentation. Experiments on the PASCAL VOC 2012 dataset show that the learned pseudo mask generator is effective in boosting segmentation performance, and our method can further close the performance gap between box-supervised and fully-supervised models. Our code will be made publicly available at https://github.com/Vious/LPG_BBox_Segmentation.

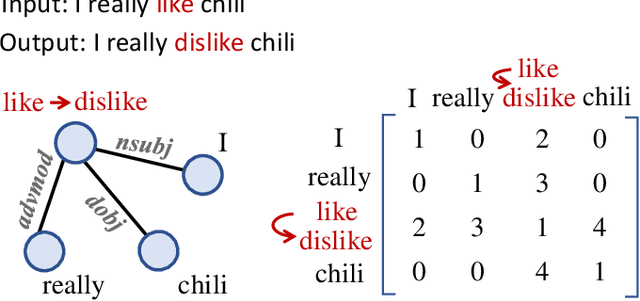

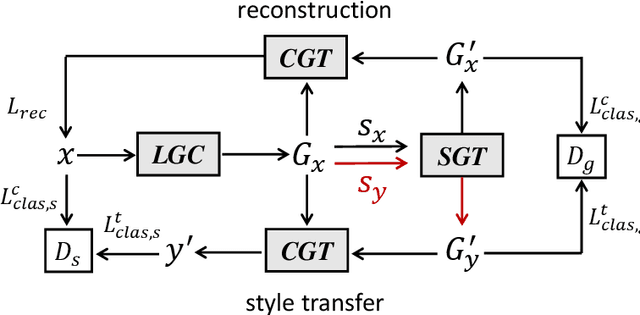

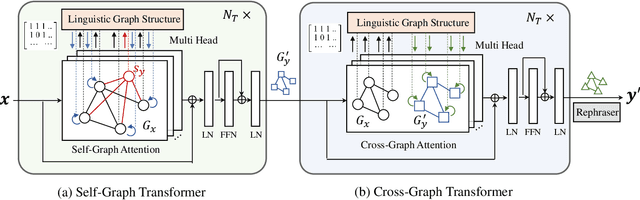

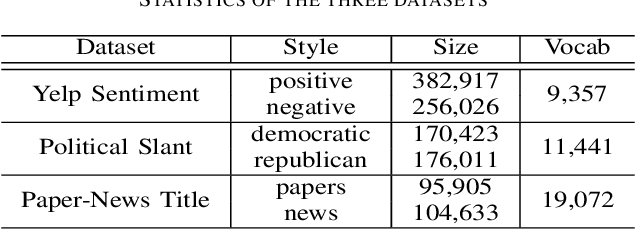

GTAE: Graph-Transformer based Auto-Encoders for Linguistic-Constrained Text Style Transfer

Feb 01, 2021

Abstract: Non-parallel text style transfer has attracted increasing research interest in recent years. Despite successes in transferring style based on the encoder-decoder framework, current approaches still lack the ability to preserve the content and even the logic of original sentences, mainly due to a large unconstrained model space or overly simplified assumptions about the latent embedding space. Since language itself is an intelligent product of humans with certain grammars and has a limited rule-based model space by nature, relieving this problem requires reconciling the model capacity of deep neural networks with the intrinsic model constraints from human linguistic rules. To this end, we propose a method called Graph Transformer based Auto-Encoder (GTAE), which models a sentence as a linguistic graph and performs feature extraction and style transfer at the graph level, to maximally retain the content and the linguistic structure of original sentences. Quantitative experimental results on three non-parallel text style transfer tasks show that our model outperforms state-of-the-art methods in content preservation, while achieving comparable performance on transfer accuracy and sentence naturalness.
