Jing Jin

Two Pathways to Truthfulness: On the Intrinsic Encoding of LLM Hallucinations

Jan 12, 2026

Abstract: Despite their impressive capabilities, large language models (LLMs) frequently generate hallucinations. Previous work shows that their internal states encode rich signals of truthfulness, yet the origins and mechanisms of these signals remain unclear. In this paper, we demonstrate that truthfulness cues arise from two distinct information pathways: (1) a Question-Anchored pathway that depends on question-answer information flow, and (2) an Answer-Anchored pathway that derives self-contained evidence from the generated answer itself. First, we validate and disentangle these pathways through attention knockout and token patching. Afterwards, we uncover notable and intriguing properties of these two mechanisms. Further experiments reveal that (1) the two mechanisms are closely associated with LLM knowledge boundaries; and (2) internal representations are aware of their distinctions. Finally, building on these insightful findings, two applications are proposed to enhance hallucination detection performance. Overall, our work provides new insight into how LLMs internally encode truthfulness, offering directions for more reliable and self-aware generative systems.
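As a rough illustration of the attention knockout mentioned in the abstract, the sketch below blocks selected query-to-key links before the softmax so that no information flows along them. It is a generic toy version, not the paper's implementation: the function names, the `blocked` argument, and the plain-list score matrix are all assumptions made for this example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(scores, blocked=()):
    """Return row-wise attention weights with selected links knocked out.

    scores[i][j] is the raw score from query position i to key position j.
    blocked is a collection of (i, j) pairs whose score is set to -inf, so
    the corresponding weight becomes exactly zero after the softmax.
    Assumption: every row keeps at least one unblocked key.
    """
    blocked = set(blocked)
    weights = []
    for i, row in enumerate(scores):
        masked = [(-math.inf if (i, j) in blocked else s)
                  for j, s in enumerate(row)]
        weights.append(softmax(masked))
    return weights


# Hypothetical usage: stop position 1 (an "answer" token) from attending
# to position 0 (a "question" token).
weights = attention_weights([[0.0, 0.0], [0.0, 0.0]], blocked=[(1, 0)])
```

Comparing a model's behavior with and without such blocked links is the general idea behind testing whether a signal depends on question-to-answer information flow.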

Dynamic Residual Encoding with Slide-Level Contrastive Learning for End-to-End Whole Slide Image Representation

Nov 07, 2025

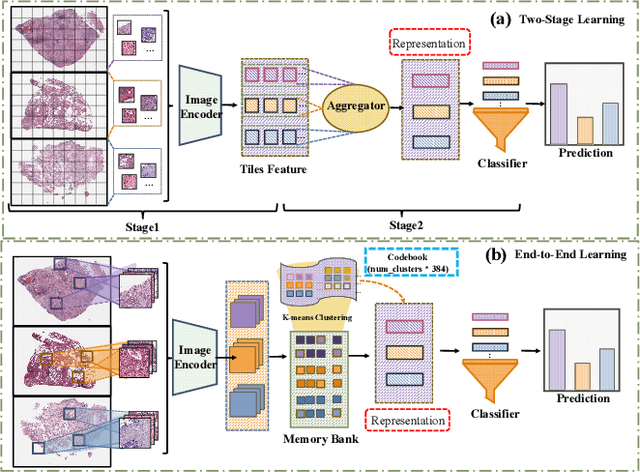

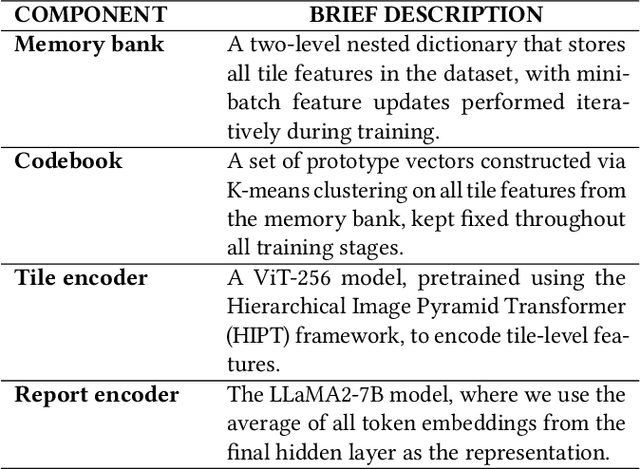

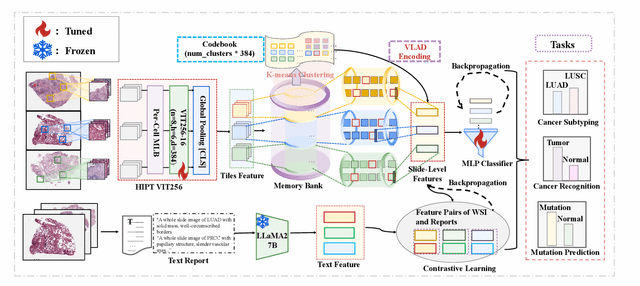

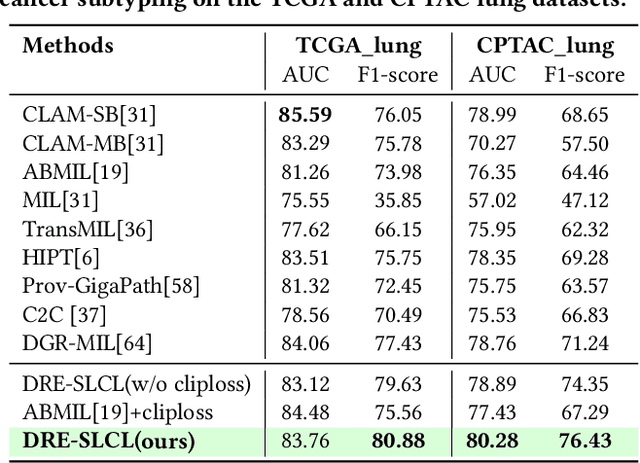

Abstract: Whole Slide Image (WSI) representation is critical for cancer subtyping, cancer recognition and mutation prediction. Training an end-to-end WSI representation model poses significant challenges, as a standard gigapixel slide can contain tens of thousands of image tiles, making it difficult to compute gradients of all tiles in a single mini-batch due to current GPU limitations. To address this challenge, we propose a method of dynamic residual encoding with slide-level contrastive learning (DRE-SLCL) for end-to-end WSI representation. Our approach utilizes a memory bank to store the features of tiles across all WSIs in the dataset. During training, a mini-batch usually contains multiple WSIs. For each WSI in the batch, a subset of tiles is randomly sampled and their features are computed using a tile encoder. Then, additional tile features from the same WSI are selected from the memory bank. The representation of each individual WSI is generated using a residual encoding technique that incorporates both the sampled features and those retrieved from the memory bank. Finally, the slide-level contrastive loss is computed based on the representations and histopathology reports of the WSIs within the mini-batch. Experiments conducted over cancer subtyping, cancer recognition, and mutation prediction tasks demonstrate the effectiveness of the proposed DRE-SLCL method.

* 8 pages, 3 figures, published in the ACM Digital Library
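The training step described in the abstract (encode a random subset of tiles, pull further tile features from a memory bank, then aggregate) can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the DRE-SLCL implementation: the abstract does not specify the residual encoding, so a VLAD-style hard-assignment variant is swapped in, and `encode_tile`, `codebook`, and all other names are hypothetical.

```python
import math
import random


def l2_normalize(vec):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def residual_encode(features, codebook):
    """VLAD-style encoding (an assumed stand-in for the paper's technique).

    Each feature is hard-assigned to its nearest codeword, and the residuals
    (feature minus codeword) are summed per codeword, flattened, and
    L2-normalized.
    """
    dim = len(codebook[0])
    acc = [[0.0] * dim for _ in codebook]
    for f in features:
        k = min(range(len(codebook)),
                key=lambda i: sum((a - b) ** 2 for a, b in zip(f, codebook[i])))
        for d in range(dim):
            acc[k][d] += f[d] - codebook[k][d]
    return l2_normalize([x for row in acc for x in row])


def slide_representation(slide_id, n_tiles, encode_tile, memory_bank,
                         codebook, n_sampled, n_from_bank, rng):
    """Build one slide vector from fresh tile features plus cached ones."""
    sampled = rng.sample(range(n_tiles), n_sampled)
    fresh = []
    for t in sampled:
        feat = encode_tile(slide_id, t)    # hypothetical tile encoder call
        memory_bank[(slide_id, t)] = feat  # refresh the cached feature
        fresh.append(feat)
    # Candidates from the bank: tiles of the same slide not sampled this step.
    others = [t for t in range(n_tiles)
              if t not in sampled and (slide_id, t) in memory_bank]
    picked = rng.sample(others, min(n_from_bank, len(others)))
    cached = [memory_bank[(slide_id, t)] for t in picked]
    return residual_encode(fresh + cached, codebook)
```

In an actual training loop, only the freshly encoded tiles would presumably carry gradients, while the cached features act as fixed context; the resulting slide vectors would then feed a contrastive loss against report embeddings, which this sketch leaves out.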

Performance Analysis of Cooperative Integrated Sensing and Communications for 6G Networks

May 14, 2025

Abstract: In this work, we aim to effectively characterize the performance of cooperative integrated sensing and communication (ISAC) networks and to reveal how performance metrics relate to network parameters. To this end, we introduce a generalized stochastic geometry framework to model the cooperative ISAC networks, which approximates the spatial randomness of the network deployment. Based on this framework, we derive analytical expressions for key performance metrics in both communication and sensing domains, with a particular focus on communication coverage probability and radar information rate. The analytical expressions derived explicitly highlight how performance metrics depend on network parameters, thereby offering valuable insights into the deployment and design of cooperative ISAC networks. In the end, we validate the theoretical performance analysis through Monte Carlo simulation results. Our results demonstrate that increasing the number of cooperative base stations (BSs) significantly improves both metrics, while increasing the BS deployment density has a limited impact on communication coverage probability but substantially enhances the radar information rate. Additionally, increasing the number of transmit antennas is effective when the total number of transmit antennas is relatively small. The incremental performance gain reduces with the increase of the number of transmit antennas, suggesting that indiscriminately increasing antennas is not an efficient strategy to improve the performance of the system in cooperative ISAC networks.

Large Language Model Psychometrics: A Systematic Review of Evaluation, Validation, and Enhancement

May 13, 2025

Abstract: The rapid advancement of large language models (LLMs) has outpaced traditional evaluation methodologies. It presents novel challenges, such as measuring human-like psychological constructs, navigating beyond static and task-specific benchmarks, and establishing human-centered evaluation. These challenges intersect with Psychometrics, the science of quantifying the intangible aspects of human psychology, such as personality, values, and intelligence. This survey introduces and synthesizes an emerging interdisciplinary field of LLM Psychometrics, which leverages psychometric instruments, theories, and principles to evaluate, understand, and enhance LLMs. We systematically explore the role of Psychometrics in shaping benchmarking principles, broadening evaluation scopes, refining methodologies, validating results, and advancing LLM capabilities. This paper integrates diverse perspectives to provide a structured framework for researchers across disciplines, enabling a more comprehensive understanding of this nascent field. Ultimately, we aim to provide actionable insights for developing future evaluation paradigms that align with human-level AI and promote the advancement of human-centered AI systems for societal benefit. A curated repository of LLM psychometric resources is available at https://github.com/valuebyte-ai/Awesome-LLM-Psychometrics.

Movable Antenna-Aided Cooperative ISAC Network with Time Synchronization error and Imperfect CSI

Jan 26, 2025

Abstract: Cooperative-integrated sensing and communication (C-ISAC) networks have emerged as promising solutions for communication and target sensing. However, imperfect channel state information (CSI) estimation and time synchronization (TS) errors degrade performance, affecting communication and sensing accuracy. This paper addresses these challenges by employing movable antennas (MAs) to enhance C-ISAC robustness. We analyze the impact of CSI errors on achievable rates and introduce a hybrid Cramér-Rao lower bound (HCRLB) to evaluate the effect of TS errors on target localization accuracy. Based on these models, we derive the worst-case achievable rate and sensing precision under such errors. We optimize cooperative beamforming, base station (BS) selection factor and MA position to minimize power consumption while ensuring accuracy. We then propose a constrained deep reinforcement learning (C-DRL) approach to solve this non-convex optimization problem, using a modified deep deterministic policy gradient (DDPG) algorithm with a Wolpertinger architecture for efficient training under complex constraints. Simulation results show that the proposed method significantly improves system robustness against CSI and TS errors, where robustness means reliable data transmission under poor channel conditions. These findings demonstrate the potential of MA technology to reduce power consumption in imperfect CSI and TS environments.

LLMs are Biased Evaluators But Not Biased for Retrieval Augmented Generation

Oct 28, 2024

Abstract: Recent studies have demonstrated that large language models (LLMs) exhibit significant biases in evaluation tasks, particularly in preferentially rating and favoring self-generated content. However, the extent to which this bias manifests in fact-oriented tasks, especially within retrieval-augmented generation (RAG) frameworks, where keyword extraction and factual accuracy take precedence over stylistic elements, remains unclear. Our study addresses this knowledge gap by simulating two critical phases of the RAG framework. In the first phase, we assess the suitability of human-authored versus model-generated passages, emulating the pointwise reranking process. The second phase involves conducting pairwise reading comprehension tests to simulate the generation process. Contrary to previous findings indicating a self-preference in rating tasks, our results reveal no significant self-preference effect in RAG frameworks. Instead, we observe that factual accuracy significantly influences LLMs' output, even in the absence of prior knowledge. Our research contributes to the ongoing discourse on LLM biases and their implications for RAG-based systems, offering insights that may inform the development of more robust and unbiased LLM systems.

DIVESPOT: Depth Integrated Volume Estimation of Pile of Things Based on Point Cloud

Jul 07, 2024

Abstract: Non-contact volume estimation of pile-type objects has considerable potential in industrial scenarios, including grain, coal, mining, and stone materials. However, using existing methods in these scenarios is challenged by unstable measurement poses, significant light interference, the difficulty of training data collection, and the computational burden brought by large piles. To address the above issues, we propose the Depth Integrated Volume EStimation of Pile Of Things (DIVESPOT) based on point cloud technology in this study. To handle unstable measurement poses, a point cloud pose correction and filtering algorithm is designed based on Random Sample Consensus (RANSAC) and Hierarchical Density-Based Spatial Clustering of Applications with Noise (HDBSCAN). To cope with light interference and to avoid relying on training data, a height-distribution-based ground feature extraction algorithm is proposed to achieve RGB independence. To reduce the computational burden, a storage space optimization strategy is developed, such that accurate estimation can be obtained using compressed voxels. Experimental results demonstrate that the DIVESPOT method enables non-data-driven, RGB-independent segmentation of pile point clouds, maintaining a volume calculation relative error within 2%. Even with 90% compression of the voxel mesh, the average error of the results can be under 3%.
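To make the idea of height-based ground extraction followed by volume integration concrete, here is a minimal sketch. It is not the DIVESPOT algorithm: it assumes the point cloud is already pose-corrected so that the ground is horizontal, it takes the most populated height bin as the ground level (an assumed heuristic), and it integrates the highest point per grid cell. The function names and the `cell` and `bin_size` parameters are hypothetical.

```python
from collections import Counter


def estimate_ground_height(points, bin_size=0.01):
    """Return the center of the most populated height bin.

    Assumed heuristic: in a pose-corrected scan, more points lie on the
    flat ground than at any single height on the pile.
    """
    bins = Counter(round(z / bin_size) for _, _, z in points)
    return bins.most_common(1)[0][0] * bin_size


def pile_volume(points, cell=0.1, bin_size=0.01):
    """Approximate the pile volume from (x, y, z) points in meters.

    The xy-plane is split into square cells of side `cell`; each cell
    contributes (highest z in the cell - ground height) * cell area.
    """
    ground = estimate_ground_height(points, bin_size)
    top = {}
    for x, y, z in points:
        key = (int(x // cell), int(y // cell))
        top[key] = max(top.get(key, ground), z)
    return sum((h - ground) * cell * cell for h in top.values())


# Hypothetical usage: a 1 m x 1 m x 0.5 m box resting on flat ground,
# sampled on a 5 cm grid.
cloud = []
for i in range(40):
    for j in range(40):
        on_box = 10 <= i < 30 and 10 <= j < 30
        cloud.append((i * 0.05 + 0.025, j * 0.05 + 0.025, 0.5 if on_box else 0.0))
volume = pile_volume(cloud)
```

A coarser `cell` lowers memory use at the cost of accuracy, which loosely mirrors the trade-off the abstract reports for compressed voxels.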

A Label-Free and Non-Monotonic Metric for Evaluating Denoising in Event Cameras

Jun 13, 2024

Abstract: Event cameras are renowned for their high efficiency due to outputting a sparse, asynchronous stream of events. However, they are plagued by noisy events, especially in low-light conditions. Denoising is an essential task for event cameras, but evaluating denoising performance is challenging. Label-dependent denoising metrics involve artificially adding noise to clean sequences, complicating evaluations. Moreover, the majority of these metrics are monotonic, which can inflate scores by removing substantial noise and valid events. To overcome these limitations, we propose the first label-free and non-monotonic evaluation metric, the area of the continuous contrast curve (AOCC), which utilizes the area enclosed by event frame contrast curves across different time intervals. This metric is inspired by how events capture the edge contours of scenes or objects with high temporal resolution. An effective denoising method removes noise without eliminating these edge-contour events, thus preserving the contrast of event frames. Consequently, contrast across various time ranges serves as a metric to assess denoising effectiveness. As the time interval lengthens, the curve will initially rise and then fall. The proposed metric is validated through both theoretical and experimental evidence.
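The general idea of a contrast-versus-interval curve and the area under it can be sketched as below. This is not the paper's AOCC definition: the contrast measure (population standard deviation of per-pixel event counts), the single accumulation window starting at `t_start`, and the trapezoidal integration are all assumptions chosen to keep the example short, and every function name is hypothetical.

```python
import statistics


def frame_contrast(events, t0, dt, width, height):
    """Accumulate events with t0 <= t < t0 + dt into a count frame and
    return the population standard deviation of the pixel counts
    (an assumed contrast measure)."""
    frame = [0] * (width * height)
    for t, x, y in events:
        if t0 <= t < t0 + dt:
            frame[y * width + x] += 1
    return statistics.pstdev(frame)


def contrast_curve(events, intervals, width, height, t_start=0.0):
    """Contrast of the accumulated frame for each interval length."""
    return [frame_contrast(events, t_start, dt, width, height)
            for dt in intervals]


def area_under_curve(xs, ys):
    """Trapezoidal area under the points (xs[i], ys[i])."""
    return sum((xs[i + 1] - xs[i]) * (ys[i] + ys[i + 1]) / 2
               for i in range(len(xs) - 1))


# Hypothetical usage: events are (timestamp, x, y) on a 2x1 sensor.
events = [(0.0, 0, 0), (1.5, 0, 0)]
intervals = [1.0, 2.0]
curve = contrast_curve(events, intervals, width=2, height=1)
area = area_under_curve(intervals, curve)
```

Running the same computation on raw and denoised event streams would give two areas to compare, which is the kind of label-free comparison the abstract describes.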

Iterative Causal Segmentation: Filling the Gap between Market Segmentation and Marketing Strategy

May 23, 2024

Abstract: The field of causal Machine Learning (ML) has made significant strides in recent years. Notable breakthroughs include methods such as meta learners (arXiv:1706.03461v6) and heterogeneous doubly robust estimators (arXiv:2004.14497) introduced in the last five years. Despite these advancements, the field still faces challenges, particularly in managing tightly coupled systems where both the causal treatment variable and a confounding covariate must serve as key decision-making indicators. This scenario is common in applications of causal ML for marketing, such as marketing segmentation and incremental marketing uplift. In this work, we present our formally proven algorithm, iterative causal segmentation, to address this issue.

InstructPipe: Building Visual Programming Pipelines with Human Instructions

Dec 15, 2023

Abstract: Visual programming provides beginner-level programmers with a coding-free experience to build their customized pipelines. Existing systems require users to build a pipeline entirely from scratch, implying that novice users need to set up and link appropriate nodes all by themselves, starting from a blank workspace. We present InstructPipe, an AI assistant that enables users to start prototyping machine learning (ML) pipelines with text instructions. We designed two LLM modules and a code interpreter to execute our solution. LLM modules generate pseudocode of a target pipeline, and the interpreter renders a pipeline in the node-graph editor for further human-AI collaboration. Technical evaluations reveal that InstructPipe reduces user interactions by 81.1% compared to traditional methods. Our user study (N=16) showed that InstructPipe empowers novice users to streamline their workflow in creating desired ML pipelines, reduce their learning curve, and spark innovative ideas with open-ended commands.
