Jie Zheng

CellVTA: Enhancing Vision Foundation Models for Accurate Cell Segmentation and Classification

Apr 01, 2025

Abstract:Cell instance segmentation is a fundamental task in digital pathology with broad clinical applications. Recently, vision foundation models, which are predominantly based on Vision Transformers (ViTs), have achieved remarkable success in pathology image analysis. However, their improvements in cell instance segmentation remain limited. A key challenge arises from the tokenization process in ViTs, which substantially reduces the spatial resolution of input images, leading to suboptimal segmentation quality, especially for small and densely packed cells. To address this problem, we propose CellVTA (Cell Vision Transformer with Adapter), a novel method that improves the performance of vision foundation models for cell instance segmentation by incorporating a CNN-based adapter module. This adapter extracts high-resolution spatial information from input images and injects it into the ViT through a cross-attention mechanism. Our method preserves the core architecture of ViT, ensuring seamless integration with pretrained foundation models. Extensive experiments show that CellVTA achieves 0.538 mPQ on the CoNIC dataset and 0.506 mPQ on the PanNuke dataset, which significantly outperforms the state-of-the-art cell segmentation methods. Ablation studies confirm the superiority of our approach over other fine-tuning strategies, including decoder-only fine-tuning and full fine-tuning. Our code and models are publicly available at https://github.com/JieZheng-ShanghaiTech/CellVTA.
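
As a rough illustration of the injection step described in the abstract, the following minimal sketch lets ViT tokens (queries) attend to CNN-derived feature vectors (keys and values) and adds the result back to the tokens. It is not the CellVTA implementation: the function name `cross_attention_inject`, the `gate` parameter, and the omission of learned projection matrices are all assumptions made to keep the example short.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention_inject(vit_tokens, cnn_features, gate=1.0):
    """Add CNN-derived spatial detail to ViT tokens via cross-attention.

    vit_tokens:   list of N query vectors of dimension d
    cnn_features: list of M key/value vectors of dimension d
    gate:         hypothetical scalar controlling how much is injected
    Learned query/key/value projections are omitted (identity) for brevity.
    """
    d = len(vit_tokens[0])
    injected = []
    for q in vit_tokens:
        # Scaled dot-product score between this token and every CNN feature.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in cnn_features]
        weights = softmax(scores)
        # Attention-weighted sum of the CNN features.
        attended = [sum(w * v[j] for w, v in zip(weights, cnn_features))
                    for j in range(d)]
        # Residual addition keeps the token count and dimension unchanged.
        injected.append([qi + gate * ai for qi, ai in zip(q, attended)])
    return injected
```

Because the output has the same number of tokens and the same dimension as the input, a step of this shape can sit between unmodified transformer blocks, which is consistent with the abstract's point about preserving the core ViT architecture.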

Interpretable High-order Knowledge Graph Neural Network for Predicting Synthetic Lethality in Human Cancers

Mar 08, 2025

Abstract:Synthetic lethality (SL) is a promising gene interaction for cancer therapy. Recent SL prediction methods integrate knowledge graphs (KGs) into graph neural networks (GNNs) and employ attention mechanisms to extract local subgraphs as explanations for target gene pairs. However, attention mechanisms often lack fidelity, typically generate a single explanation per gene pair, and fail to ensure trustworthy high-order structures in their explanations. To overcome these limitations, we propose Diverse Graph Information Bottleneck for Synthetic Lethality (DGIB4SL), a KG-based GNN that generates multiple faithful explanations for the same gene pair and effectively encodes high-order structures. Specifically, we introduce a novel DGIB objective, which integrates a Determinantal Point Process (DPP) constraint into the standard IB objective, and employ 13 motif-based adjacency matrices to capture high-order structures in gene representations. Experimental results show that DGIB4SL outperforms state-of-the-art baselines and provides multiple explanations for SL prediction, revealing diverse biological mechanisms underlying SL inference.
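
The intuition behind a DPP-style diversity term can be sketched as follows: the determinant of a similarity kernel over a set of items is large when the items are dissimilar and approaches zero when they are near-duplicates. This is only a generic illustration, not the DGIB objective from the paper; the cosine kernel, the function names, and the use of plain vectors as stand-ins for explanation embeddings are all assumptions.

```python
import math


def cosine_kernel(vectors):
    """Gram matrix of L2-normalised vectors (entries are cosine similarities)."""
    normed = []
    for v in vectors:
        norm = math.sqrt(sum(x * x for x in v))
        normed.append([x / norm for x in v])
    return [[sum(a * b for a, b in zip(u, w)) for w in normed] for u in normed]


def determinant(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            return 0.0  # singular: at least two items are linearly dependent
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def dpp_diversity(vectors):
    """Hypothetical diversity score: det of the cosine-similarity kernel."""
    return determinant(cosine_kernel(vectors))


# Orthogonal "explanations" score higher than nearly identical ones.
diverse = dpp_diversity([[1.0, 0.0], [0.0, 1.0]])
redundant = dpp_diversity([[1.0, 0.0], [0.9, 0.1]])
```

A training objective could reward a higher score of this kind so that the explanations produced for one gene pair do not collapse onto the same subgraph.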

StarWhisper Telescope: Agent-Based Observation Assistant System to Approach AI Astrophysicist

Dec 09, 2024

Abstract:With the rapid advancement of Large Language Models (LLMs), LLM-based agents have introduced convenient and user-friendly methods for leveraging tools across various domains. In the field of astronomical observation, the construction of new telescopes has significantly increased astronomers' workload. Deploying LLM-powered agents can effectively alleviate this burden and reduce the costs associated with training personnel. Within the Nearby Galaxy Supernovae Survey (NGSS) project, which encompasses eight telescopes across three observation sites and aims to find transients in galaxies within 50 Mpc, we have developed the StarWhisper Telescope System to manage the entire observation process. This system automates tasks such as generating observation lists, conducting observations, analyzing data, and providing feedback to the observer. Observation lists are customized for different sites and strategies to ensure comprehensive coverage of celestial objects. After manual verification, these lists are uploaded to the telescopes via the agents in the system, which initiate observations in response to natural-language instructions. The observed images are analyzed in real time, and detected transients are promptly communicated to the observer. The agent converts them into a real-time follow-up observation proposal, sends it to the Xinglong observatory group chat, and adds them to the next-day observation lists. Additionally, the integration of AI agents within the system provides online accessibility, saving astronomers' time and encouraging greater participation from amateur astronomers in the NGSS project.

Tissue-Contrastive Semi-Masked Autoencoders for Segmentation Pretraining on Chest CT

Jul 12, 2024

Abstract:Existing Masked Image Modeling (MIM) depends on a spatial patch-based masking-reconstruction strategy to perceive objects' features from unlabeled images, which may face two limitations when applied to chest CT: 1) inefficient feature learning due to the complex anatomical details present in CT images, and 2) suboptimal knowledge transfer owing to the input disparity between upstream and downstream models. To address these issues, we propose a new MIM method named Tissue-Contrastive Semi-Masked Autoencoder (TCS-MAE) for modeling chest CT images. Our method has two novel designs: 1) a tissue-based masking-reconstruction strategy to capture more fine-grained anatomical features, and 2) a dual-AE architecture with contrastive learning between the masked and original image views to bridge the gap between the upstream and downstream models. To validate our method, we systematically investigate representative contrastive, generative, and hybrid self-supervised learning methods on tasks involving the segmentation of pneumonia, mediastinal tumors, and various organs. The results demonstrate that, compared to existing methods, our TCS-MAE more effectively learns tissue-aware representations, thereby significantly enhancing segmentation performance across all tasks.

Credentials in the Occupation Ontology

Apr 30, 2024

Abstract:The term credential encompasses educational certificates, degrees, certifications, and government-issued licenses. An occupational credential is a verification of an individual's qualification or competence issued by a third party with relevant authority. Job seekers often leverage such credentials as evidence that desired qualifications are satisfied by their holders. Many U.S. education and workforce development organizations have recognized the importance of credentials for employment and the challenges of understanding the value of credentials. In this study, we identified and ontologically defined credential and credential-related terms at the textual and semantic levels based on the Occupation Ontology (OccO), a BFO-based ontology. Different credential types and their authorization logic are modeled. We additionally defined a high-level hierarchy of credential-related terms and relations among many terms. This work was initiated in concert with the Alabama Talent Triad (ATT) program, which aims to connect learners, earners, employers, and education/training providers through credentials and skills. To our knowledge, our research provides the first systematic ontological modeling of the important domain of credentials and related contents, supporting enhanced credential data and knowledge integration in the future.

Large Language Models for Automated Open-domain Scientific Hypotheses Discovery

Sep 06, 2023

Abstract:Hypothetical induction is recognized as the main reasoning type when scientists make observations about the world and try to propose hypotheses to explain those observations. Past research on hypothetical induction has been limited in two ways: (1) the observation annotations of the dataset are not raw web corpora but manually selected sentences (resulting in a closed-domain setting); and (2) the ground-truth hypothesis annotations are mostly commonsense knowledge, making the task less challenging. In this work, we propose the first NLP dataset for social science academic hypothesis discovery, consisting of 50 recent papers published in top social science journals. Raw web corpora that are necessary for developing the hypotheses in the published papers are also collected in the dataset, with the final goal of creating a system that automatically generates valid, novel, and helpful (to human researchers) hypotheses, given only a pile of raw web corpora. The new dataset addresses the previous problems because it requires a system to (1) use raw web corpora as observations; and (2) propose hypotheses that are new even to humanity. A multi-module framework is developed for the task, along with three different feedback mechanisms that empirically show performance gains over the base framework. Finally, our framework exhibits high performance in terms of both GPT-4-based evaluation and social science expert evaluation.

Biological Factor Regulatory Neural Network

Apr 11, 2023

Abstract:Genes are fundamental for analyzing biological systems, and many recent works have proposed using gene expression for various biological tasks with deep learning models. Despite their promising performance, it is hard for deep neural networks to provide biological insights for humans due to their black-box nature. Recently, some works have integrated biological knowledge with neural networks to improve the transparency and performance of their models. However, these methods can only incorporate partial biological knowledge, leading to suboptimal performance. In this paper, we propose the Biological Factor Regulatory Neural Network (BFReg-NN), a generic framework to model relations among biological factors in cell systems. BFReg-NN starts from gene expression data and is capable of merging most existing biological knowledge into the model, including the regulatory relations among genes or proteins (e.g., gene regulatory networks (GRN), protein-protein interaction networks (PPI)) and the hierarchical relations among genes, proteins, and pathways (e.g., several genes/proteins are contained in a pathway). Moreover, BFReg-NN can provide new biologically meaningful insights because of its white-box characteristics. Experimental results on different gene expression-based tasks verify the superiority of BFReg-NN compared with baselines. Our case studies also show that the key insights found by BFReg-NN are consistent with the biological literature.

Constrained Reinforcement Learning for Short Video Recommendation

May 26, 2022

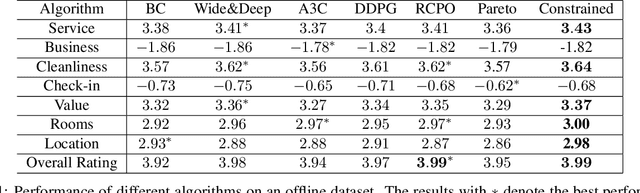

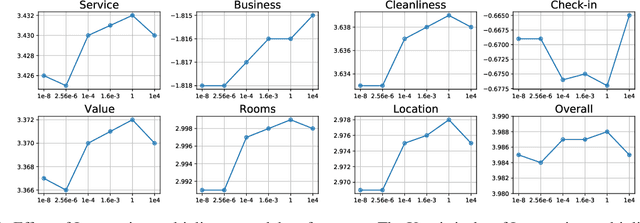

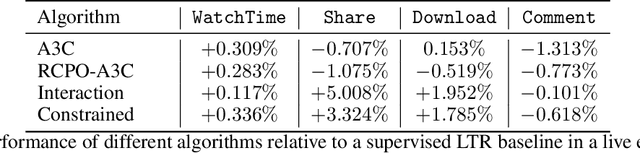

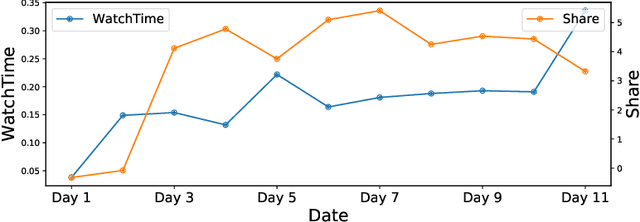

Abstract:The wide popularity of short videos on social media poses new opportunities and challenges for optimizing recommender systems on video-sharing platforms. Users provide complex and multi-faceted responses to recommendations, including watch time and various types of interactions with videos. As a result, established recommendation algorithms that concern a single objective are not adequate to meet this new demand of optimizing comprehensive user experiences. In this paper, we formulate the problem of short video recommendation as a constrained Markov Decision Process (MDP), where platforms want to optimize the main goal of user watch time in the long term, subject to the constraint of accommodating auxiliary user-interaction responses such as sharing or downloading videos. To solve the constrained MDP, we propose a two-stage reinforcement learning approach based on the actor-critic framework. At stage one, we learn individual policies to optimize each auxiliary response. At stage two, we learn a policy to (i) optimize the main response and (ii) stay close to the policies learned at the first stage, which effectively guarantees the performance of this main policy on the auxiliaries. Through extensive simulations, we demonstrate the effectiveness of our approach over alternatives both in optimizing the main goal and in balancing the others. We further show the advantage of our approach in live experiments on short video recommendation, where it significantly outperforms other baselines in terms of watch time and interactions from video views. Our approach has been fully launched in the production system to optimize user experiences on the platform.

Exploring the Impact of Negative Samples of Contrastive Learning: A Case Study of Sentence Embedding

Mar 16, 2022

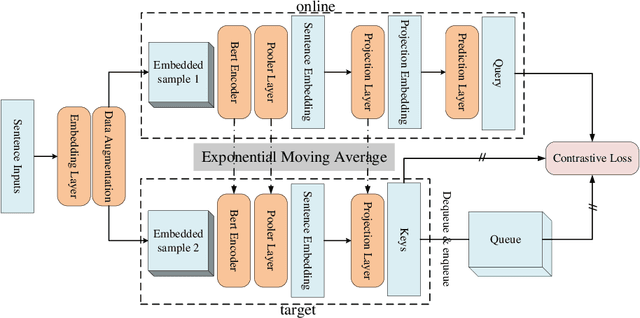

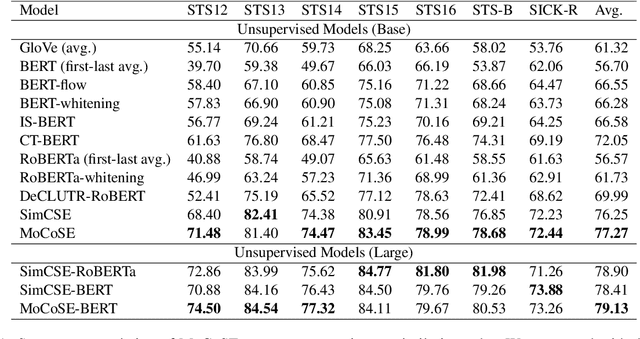

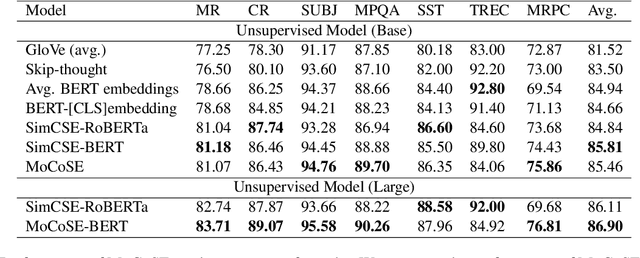

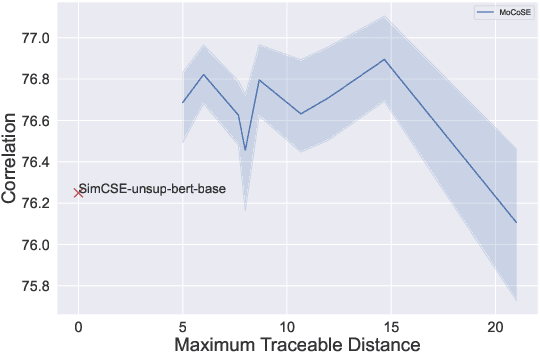

Abstract:Contrastive learning is emerging as a powerful technique for extracting knowledge from unlabeled data. This technique requires a balanced mixture of two ingredients: positive (similar) and negative (dissimilar) samples. This is typically achieved by maintaining a queue of negative samples during training. Prior work in the area typically uses a fixed-length negative sample queue, but how the negative sample size affects model performance remains unclear. This unclear impact of the number of negative samples on the performance of contrastive learning motivated our in-depth exploration. This paper presents a momentum contrastive learning model with a negative sample queue for sentence embedding, namely MoCoSE. We add a prediction layer to the online branch to make the model asymmetric, which, together with the EMA update mechanism of the target branch, prevents the model from collapsing. We define a maximum traceable distance metric, through which we learn to what extent text contrastive learning benefits from the historical information of negative samples. Our experiments find that the best results are obtained when the maximum traceable distance is within a certain range, demonstrating that there is an optimal range of historical information for a negative sample queue. We evaluate the proposed unsupervised MoCoSE on the semantic text similarity (STS) task and obtain an average Spearman's correlation of 77.27%. Source code is available at https://github.com/xbdxwyh/mocose.
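
The three mechanisms named in the abstract (an EMA-updated target branch, a fixed-length negative sample queue, and a contrastive loss) can be sketched generically as below. This is not the MoCoSE code: the function names, the momentum and temperature values, and the use of short plain vectors in place of sentence embeddings are assumptions chosen for illustration.

```python
import math
from collections import deque


def ema_update(target, online, momentum=0.99):
    """Move target-branch weights slowly toward the online-branch weights."""
    return [momentum * t + (1.0 - momentum) * o for t, o in zip(target, online)]


def info_nce(query, positive, negatives, temperature=0.05):
    """InfoNCE loss: cross-entropy with the positive pair as the correct class."""
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    logits = [dot(query, positive) / temperature]
    logits += [dot(query, n) / temperature for n in negatives]
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[0]


# Fixed-length FIFO queue: the oldest negatives are dropped automatically.
negative_queue = deque(maxlen=2)
for embedding in ([0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]):
    negative_queue.append(embedding)

loss = info_nce([1.0, 0.0], [1.0, 0.0], list(negative_queue))
target_weights = ema_update([1.0, 1.0], [0.0, 0.0], momentum=0.9)
```

The queue length controls how far back in training the retained negatives reach, which is the quantity the abstract relates to model performance.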

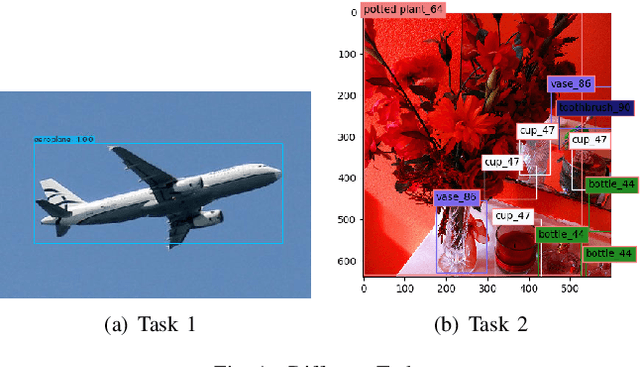

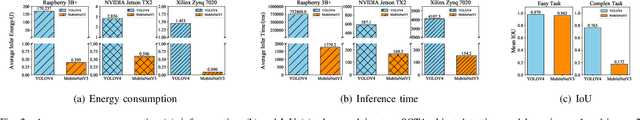

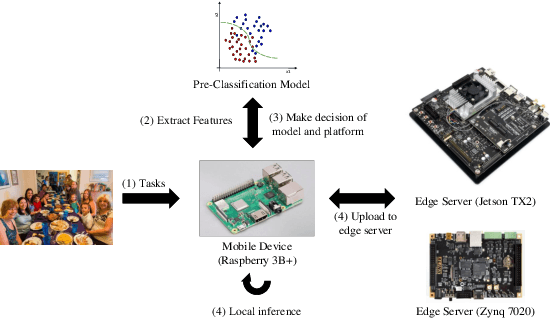

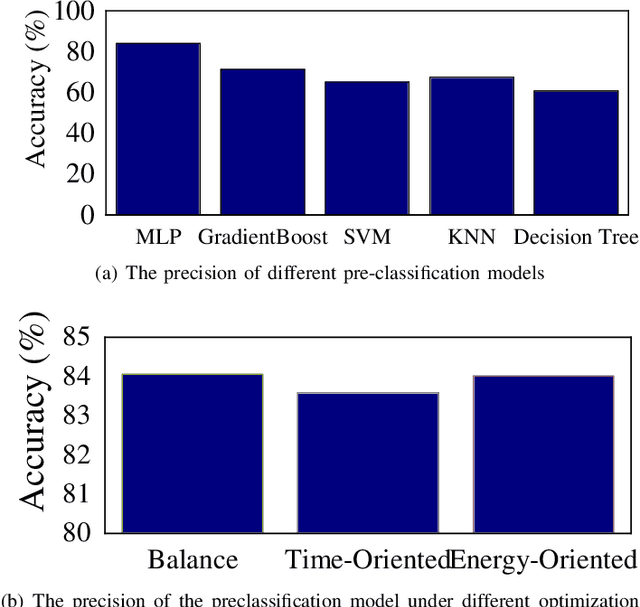

ESOD: Edge-based Task Scheduling for Object Detection

Oct 20, 2021

Abstract:Object detection on mobile systems is challenging in many respects. Many object detection models have been designed, and most of them concentrate on precision. However, the computational burden of those models on mobile systems is unacceptable. Researchers have designed some lightweight networks for mobile devices by sacrificing precision. We present a novel edge-based task scheduling framework for object detection (termed ESOD). In detail, we train a DNN model (termed the pre-model) to predict, from physical characteristics of the image task (e.g., brightness, saturation), which object detection model to use for the incoming task and which edge server to offload it to. The results show that, compared with the SOTA DETR model, ESOD reduces latency and energy consumption by an average of 22.13% and 29.60%, respectively, and improves the mAP to 45.8 (a gain of 0.9 mAP).
