Hao Tang

RoRA: Efficient Fine-Tuning of LLM with Reliability Optimization for Rank Adaptation

Jan 08, 2025

Abstract: Fine-tuning helps large language models (LLMs) recover degraded information and enhance task performance. Although Low-Rank Adaptation (LoRA) is widely used and effective for fine-tuning, we have observed that its scaling factor can limit or even reduce performance as the rank size increases. To address this issue, we propose RoRA (Rank-adaptive Reliability Optimization), a simple yet effective method for optimizing LoRA's scaling factor. By replacing $\alpha/r$ with $\alpha/\sqrt{r}$, RoRA ensures improved performance as rank size increases. Moreover, RoRA enhances low-rank adaptation in fine-tuning uncompressed models and excels in the more challenging task of accuracy recovery when fine-tuning pruned models. Extensive experiments demonstrate the effectiveness of RoRA in fine-tuning both uncompressed and pruned models. RoRA surpasses the state-of-the-art (SOTA) in average accuracy and robustness on LLaMA-7B/13B, LLaMA2-7B, and LLaMA3-8B, specifically outperforming LoRA and DoRA by 6.5% and 2.9% on LLaMA-7B, respectively. In pruned model fine-tuning, RoRA shows significant advantages; for SHEARED-LLAMA-1.3, a LLaMA-7B with 81.4% pruning, RoRA achieves 5.7% higher average accuracy than LoRA and 3.9% higher than DoRA.
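To make the change of scaling factor concrete, the sketch below compares the two expressions named in the abstract, $\alpha/r$ and $\alpha/\sqrt{r}$, across several ranks. The function names and the value of `alpha` are illustrative assumptions and are not taken from the paper; this only evaluates the two formulas and is not the authors' implementation.

```python
import math


def lora_scale(alpha: float, rank: int) -> float:
    """Scaling factor alpha / r, as used by standard LoRA."""
    return alpha / rank


def rora_scale(alpha: float, rank: int) -> float:
    """Scaling factor alpha / sqrt(r), as described in the abstract."""
    return alpha / math.sqrt(rank)


if __name__ == "__main__":
    alpha = 16.0  # illustrative value only, not from the paper
    for rank in (4, 16, 64, 256):
        # Print both factors side by side for each rank.
        print(rank, lora_scale(alpha, rank), rora_scale(alpha, rank))
```

With `alpha = 16`, moving from rank 4 to rank 256 shrinks $\alpha/r$ by a factor of 64 but $\alpha/\sqrt{r}$ only by a factor of 8, so the low-rank update is damped far less at high rank.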

End-to-End Long Document Summarization using Gradient Caching

Jan 03, 2025

Abstract: Training transformer-based encoder-decoder models for long document summarization poses a significant challenge due to the quadratic memory consumption during training. Several approaches have been proposed to extend the input length at test time, but training with these approaches is still difficult, requiring truncation of input documents and causing a mismatch between training and test conditions. In this work, we propose CachED (Gradient $\textbf{Cach}$ing for $\textbf{E}$ncoder-$\textbf{D}$ecoder models), an approach that enables end-to-end training of existing transformer-based encoder-decoder models, using the entire document without truncation. Specifically, we apply non-overlapping sliding windows to input documents, followed by fusion in the decoder. During backpropagation, the gradients are cached at the decoder and are passed through the encoder in chunks by re-computing the hidden vectors, similar to gradient checkpointing. In the experiments on long document summarization, we extend BART to CachED BART, processing more than 500K tokens during training and achieving superior performance without using any additional parameters.

PolSAM: Polarimetric Scattering Mechanism Informed Segment Anything Model

Dec 17, 2024

Abstract: PolSAR data presents unique challenges due to its rich and complex characteristics. Existing data representations, such as complex-valued data, polarimetric features, and amplitude images, are widely used. However, these formats often face issues related to usability, interpretability, and data integrity. Most feature extraction networks for PolSAR are small, limiting their ability to capture features effectively. To address these issues, we propose the Polarimetric Scattering Mechanism-Informed SAM (PolSAM), an enhanced Segment Anything Model (SAM) that integrates domain-specific scattering characteristics and a novel prompt generation strategy. PolSAM introduces Microwave Vision Data (MVD), a lightweight and interpretable data representation derived from polarimetric decomposition and semantic correlations. We propose two key components: the Feature-Level Fusion Prompt (FFP), which fuses visual tokens from pseudo-colored SAR images and MVD to address modality incompatibility in the frozen SAM encoder, and the Semantic-Level Fusion Prompt (SFP), which refines sparse and dense segmentation prompts using semantic information. Experimental results on the PhySAR-Seg datasets demonstrate that PolSAM significantly outperforms existing SAM-based and multimodal fusion models, improving segmentation accuracy, reducing data storage, and accelerating inference time. The source code and datasets will be made publicly available at https://github.com/XAI4SAR/PolSAM.

Divide-and-Conquer: Confluent Triple-Flow Network for RGB-T Salient Object Detection

Dec 02, 2024

Abstract: RGB-Thermal Salient Object Detection aims to pinpoint prominent objects within aligned pairs of visible and thermal infrared images. Traditional encoder-decoder architectures, while designed for cross-modality feature interactions, may not have adequately considered the robustness against noise originating from defective modalities. Inspired by hierarchical human visual systems, we propose ConTriNet, a robust Confluent Triple-Flow Network employing a Divide-and-Conquer strategy. Specifically, ConTriNet comprises three flows: two modality-specific flows explore cues from RGB and Thermal modalities, and a third modality-complementary flow integrates cues from both modalities. ConTriNet presents several notable advantages. It incorporates a Modality-induced Feature Modulator in the modality-shared union encoder to minimize inter-modality discrepancies and mitigate the impact of defective samples. Additionally, a foundational Residual Atrous Spatial Pyramid Module in the separated flows enlarges the receptive field, allowing for the capture of multi-scale contextual information. Furthermore, a Modality-aware Dynamic Aggregation Module in the modality-complementary flow dynamically aggregates saliency-related cues from both modality-specific flows. Leveraging the proposed parallel triple-flow framework, we further refine saliency maps derived from different flows through a flow-cooperative fusion strategy, yielding a high-quality, full-resolution saliency map for the final prediction. To evaluate the robustness and stability of our approach, we collect a comprehensive RGB-T SOD benchmark, VT-IMAG, covering various real-world challenging scenarios. Extensive experiments on public benchmarks and our VT-IMAG dataset demonstrate that ConTriNet consistently outperforms state-of-the-art competitors in both common and challenging scenarios.

Multimodal Alignment and Fusion: A Survey

Nov 26, 2024

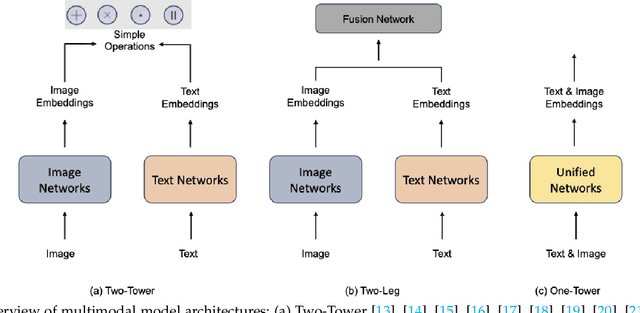

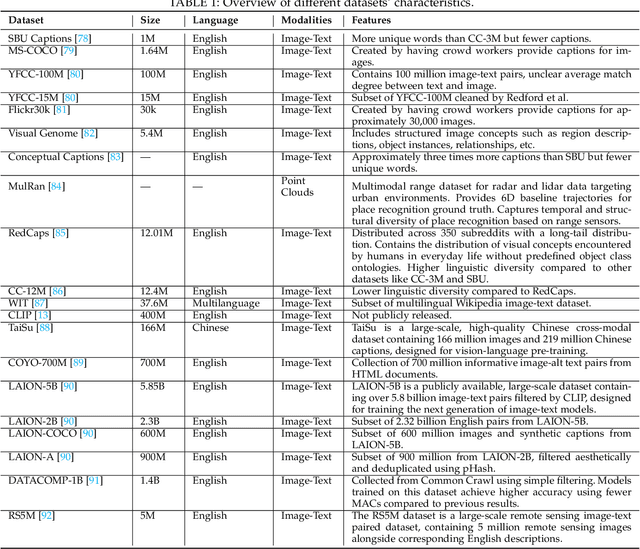

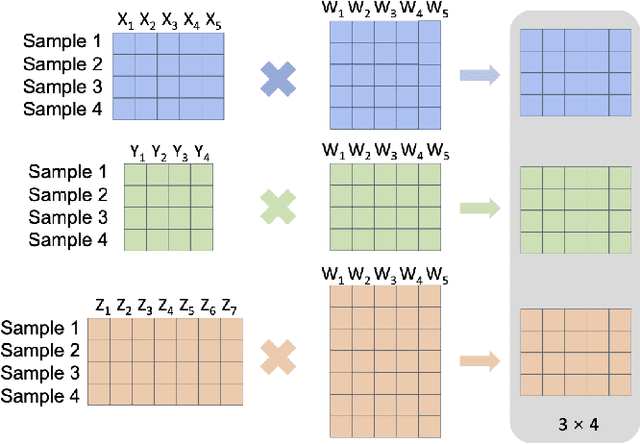

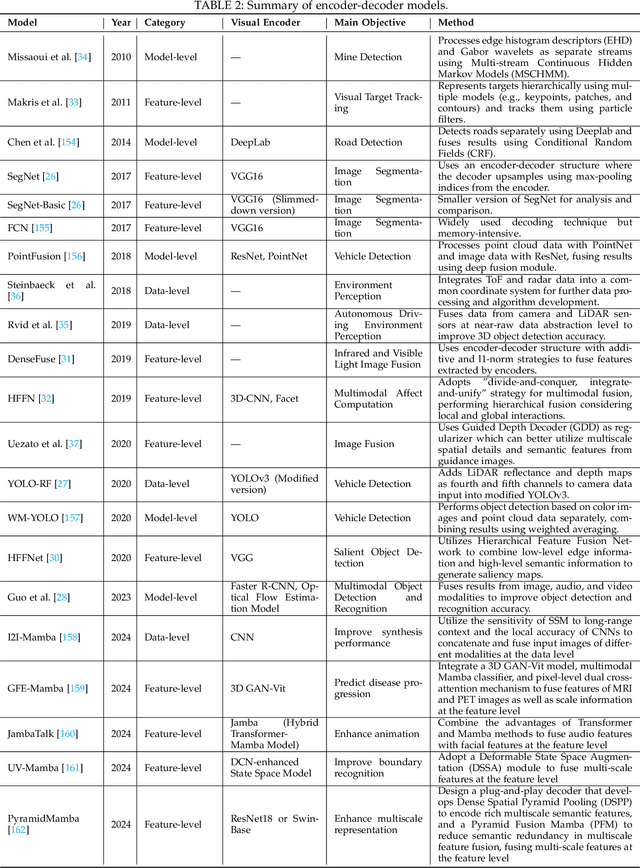

Abstract: This survey offers a comprehensive review of recent advancements in multimodal alignment and fusion within machine learning, spurred by the growing diversity of data types such as text, images, audio, and video. Multimodal integration enables improved model accuracy and broader applicability by leveraging complementary information across different modalities, as well as facilitating knowledge transfer in situations with limited data. We systematically categorize and analyze existing alignment and fusion techniques, drawing insights from an extensive review of more than 200 relevant papers. Furthermore, this survey addresses the challenges of multimodal data integration - including alignment issues, noise resilience, and disparities in feature representation - while focusing on applications in domains like social media analysis, medical imaging, and emotion recognition. The insights provided are intended to guide future research towards optimizing multimodal learning systems to enhance their scalability, robustness, and generalizability across various applications.

Network Inversion and Its Applications

Nov 26, 2024

Abstract: Neural networks have emerged as powerful tools across various applications, yet their decision-making process often remains opaque, leading to them being perceived as "black boxes." This opacity raises concerns about their interpretability and reliability, especially in safety-critical scenarios. Network inversion techniques offer a solution by allowing us to peek inside these black boxes, revealing the features and patterns learned by the networks behind their decision-making processes and thereby providing valuable insights into how neural networks arrive at their conclusions, making them more interpretable and trustworthy. This paper presents a simple yet effective approach to network inversion using a meticulously conditioned generator that learns the data distribution in the input space of the trained neural network, enabling the reconstruction of inputs that would most likely lead to the desired outputs. To capture the diversity in the input space for a given output, instead of simply revealing the conditioning labels to the generator, we encode the conditioning label information into vectors and intermediate matrices and further minimize the cosine similarity between features of the generated images. Additionally, we incorporate feature orthogonality as a regularization term to boost image diversity; this term penalises the deviations of the Gram matrix of the features from the identity matrix, ensuring orthogonality and promoting distinct, non-redundant representations for each label. The paper concludes by exploring immediate applications of the proposed network inversion approach in interpretability, out-of-distribution detection, and training data reconstruction.
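The orthogonality regularizer mentioned in this abstract, a penalty on how far the Gram matrix of the features deviates from the identity, can be sketched as follows. This is a minimal illustration and not the authors' code: the function names are hypothetical, and L2-normalizing each (non-zero) feature vector and using the squared Frobenius norm are assumptions made here so that the Gram entries are cosine similarities.

```python
import math


def normalize(vector):
    """Scale a non-zero vector to unit L2 norm (assumption: norm > 0)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def gram_matrix(features):
    """Gram matrix of unit-normalized features; entry (i, j) is a cosine similarity."""
    unit = [normalize(f) for f in features]
    return [[sum(a * b for a, b in zip(u, w)) for w in unit] for u in unit]


def orthogonality_penalty(features):
    """Squared Frobenius distance between the Gram matrix and the identity."""
    gram = gram_matrix(features)
    n = len(gram)
    return sum(
        (gram[i][j] - (1.0 if i == j else 0.0)) ** 2
        for i in range(n)
        for j in range(n)
    )
```

Under these assumptions the penalty is zero for mutually orthogonal features such as `[[1, 0], [0, 1]]` and grows as features align; two identical features give a penalty of 2, because both off-diagonal cosine similarities equal 1.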

Text-to-Image Synthesis: A Decade Survey

Nov 25, 2024

Abstract: When humans read a specific text, they often visualize the corresponding images, and we hope that computers can do the same. Text-to-image synthesis (T2I), which focuses on generating high-quality images from textual descriptions, has become a significant aspect of Artificial Intelligence Generated Content (AIGC) and a transformative direction in artificial intelligence research. Foundation models play a crucial role in T2I. In this survey, we review over 440 recent works on T2I. We start by briefly introducing how GANs, autoregressive models, and diffusion models have been used for image generation. Building on this foundation, we discuss the development of these models for T2I, focusing on their generative capabilities and diversity when conditioned on text. We also explore cutting-edge research on various aspects of T2I, including performance, controllability, personalized generation, safety concerns, and consistency in content and spatial relationships. Furthermore, we summarize the datasets and evaluation metrics commonly used in T2I research. Finally, we discuss the potential applications of T2I within AIGC, along with the challenges and future research opportunities in this field.

ConsistentAvatar: Learning to Diffuse Fully Consistent Talking Head Avatar with Temporal Guidance

Nov 23, 2024

Abstract: Diffusion models have shown impressive potential for talking head generation. While plausible appearance and talking effects are achieved, these methods still suffer from temporal, 3D, or expression inconsistency due to error accumulation and the inherent limitations of single-image generation. In this paper, we propose ConsistentAvatar, a novel framework for fully consistent and high-fidelity talking avatar generation. Instead of directly applying multi-modal conditions to the diffusion process, our method learns to first model the temporal representation for stability between adjacent frames. Specifically, we propose a Temporally-Sensitive Detail (TSD) map containing high-frequency features and contours that vary significantly along the time axis. Using a temporally consistent diffusion module, we learn to align the TSD of the initial result to that of the video frame ground truth. The final avatar is generated by a fully consistent diffusion module, conditioned on the aligned TSD, rough head normal, and emotion prompt embedding. We find that the aligned TSD, which represents the temporal patterns, constrains the diffusion process to generate a temporally stable talking head. Further, its reliable guidance compensates for the inaccuracy of other conditions, suppressing the accumulated error while improving consistency in various aspects. Extensive experiments demonstrate that ConsistentAvatar outperforms the state-of-the-art methods on the generated appearance, 3D, expression and temporal consistency. Project page: https://njust-yang.github.io/ConsistentAvatar.github.io/

Hierarchical Cross-Attention Network for Virtual Try-On

Nov 23, 2024

Abstract: In this paper, we present an innovative solution for the challenges of the virtual try-on task: our novel Hierarchical Cross-Attention Network (HCANet). HCANet is crafted with two primary stages: geometric matching and try-on, each playing a crucial role in delivering realistic virtual try-on outcomes. A key feature of HCANet is the incorporation of a novel Hierarchical Cross-Attention (HCA) block into both stages, enabling the effective capture of long-range correlations between individual and clothing modalities. The HCA block enhances the depth and robustness of the network. By adopting a hierarchical approach, it facilitates a nuanced representation of the interaction between the person and clothing, capturing intricate details essential for an authentic virtual try-on experience. Our experiments establish the prowess of HCANet. The results showcase its performance across both quantitative metrics and subjective evaluations of visual realism. HCANet stands out as a state-of-the-art solution, demonstrating its capability to generate virtual try-on results that excel in accuracy and realism. This marks a significant step in advancing virtual try-on technologies.

AllRestorer: All-in-One Transformer for Image Restoration under Composite Degradations

Nov 16, 2024

Abstract: Image restoration models often face the simultaneous interaction of multiple degradations in real-world scenarios. Existing approaches typically handle single or composite degradations based on scene descriptors derived from text or image embeddings. However, due to the varying proportions of different degradations within an image, these scene descriptors may not accurately differentiate between degradations, leading to suboptimal restoration in practical applications. To address this issue, we propose a novel Transformer-based restoration framework, AllRestorer. In AllRestorer, we enable the model to adaptively consider all image impairments, thereby avoiding errors from scene descriptor misdirection. Specifically, we introduce an All-in-One Transformer Block (AiOTB), which adaptively removes all degradations present in a given image by modeling the relationships between all degradations and the image embedding in latent space. To accurately address different variations potentially present within the same type of degradation and minimize ambiguity, AiOTB utilizes a composite scene descriptor consisting of both image and text embeddings to define the degradation. Furthermore, AiOTB includes an adaptive weight for each degradation, allowing for precise control of the restoration intensity. By leveraging AiOTB, AllRestorer avoids misdirection caused by inaccurate scene descriptors, achieving a 5.00 dB increase in PSNR compared to the baseline on the CDD-11 dataset.
