music generation

Music generation is the task of generating music or music-like sounds from a model or algorithm.

Papers and Code

TCSinger 2: Customizable Multilingual Zero-shot Singing Voice Synthesis

May 20, 2025

Customizable multilingual zero-shot singing voice synthesis (SVS) has various potential applications in music composition and short video dubbing. However, existing SVS models depend heavily on phoneme and note boundary annotations, which limits their robustness in zero-shot scenarios and produces poor transitions between phonemes and notes. They also lack effective multi-level style control via diverse prompts. To overcome these challenges, we introduce TCSinger 2, a multi-task multilingual zero-shot SVS model with style transfer and style control based on various prompts. TCSinger 2 includes three key modules: 1) a Blurred Boundary Content (BBC) Encoder, which predicts duration, extends content embedding, and applies masking to the boundaries to enable smooth transitions; 2) a Custom Audio Encoder, which uses contrastive learning to extract aligned representations from singing, speech, and textual prompts; and 3) a Flow-based Custom Transformer, which leverages Cus-MOE with F0 supervision to enhance both the synthesis quality and the style modeling of the generated singing voice. Experimental results show that TCSinger 2 outperforms baseline models in both subjective and objective metrics across multiple related tasks.

Evaluating the Impact of AI-Powered Audiovisual Personalization on Learner Emotion, Focus, and Learning Outcomes

May 05, 2025

Independent learners often struggle with sustaining focus and emotional regulation in unstructured or distracting settings. Although some rely on ambient aids such as music, ASMR, or visual backgrounds to support concentration, these tools are rarely integrated into cohesive, learner-centered systems. Moreover, existing educational technologies focus primarily on content adaptation and feedback, overlooking the emotional and sensory context in which learning takes place. Large language models have demonstrated powerful multimodal capabilities including the ability to generate and adapt text, audio, and visual content. Educational research has yet to fully explore their potential in creating personalized audiovisual learning environments. To address this gap, we introduce an AI-powered system that uses LLMs to generate personalized multisensory study environments. Users select or generate customized visual themes (e.g., abstract vs. realistic, static vs. animated) and auditory elements (e.g., white noise, ambient ASMR, familiar vs. novel sounds) to create immersive settings aimed at reducing distraction and enhancing emotional stability. Our primary research question investigates how combinations of personalized audiovisual elements affect learner cognitive load and engagement. Using a mixed-methods design that incorporates biometric measures and performance outcomes, this study evaluates the effectiveness of LLM-driven sensory personalization. The findings aim to advance emotionally responsive educational technologies and extend the application of multimodal LLMs into the sensory dimension of self-directed learning.

Detect All-Type Deepfake Audio: Wavelet Prompt Tuning for Enhanced Auditory Perception

Apr 09, 2025

The rapid advancement of audio generation technologies has escalated the risks of malicious deepfake audio across speech, sound, singing voice, and music, threatening multimedia security and trust. While existing countermeasures (CMs) perform well in single-type audio deepfake detection (ADD), their performance declines in cross-type scenarios. This paper is dedicated to studying the all-type ADD task. We are the first to comprehensively establish an all-type ADD benchmark to evaluate current CMs, incorporating cross-type deepfake detection across speech, sound, singing voice, and music. Then, we introduce the prompt tuning self-supervised learning (PT-SSL) training paradigm, which optimizes the SSL frontend by learning specialized prompt tokens for ADD, requiring 458x fewer trainable parameters than fine-tuning (FT). Considering the auditory perception of different audio types, we propose the wavelet prompt tuning (WPT)-SSL method to capture type-invariant auditory deepfake information from the frequency domain without requiring additional training parameters, thereby enhancing performance over FT in the all-type ADD task. To achieve a universal CM, we utilize all types of deepfake audio for co-training. Experimental results demonstrate that WPT-XLSR-AASIST achieved the best performance, with an average EER of 3.58% across all evaluation sets. The code is available online.

A Computational Cognitive Model for Processing Repetitions of Hierarchical Relations

Apr 14, 2025

Patterns are fundamental to human cognition, enabling the recognition of structure and regularity across diverse domains. In this work, we focus on structural repeats, patterns that arise from the repetition of hierarchical relations within sequential data, and develop a candidate computational model of how humans detect and understand such structural repeats. Based on a weighted deduction system, our model infers the minimal generative process of a given sequence in the form of a Template program, a formalism that enriches the context-free grammar with repetition combinators. Such representation efficiently encodes the repetition of sub-computations in a recursive manner. As a proof of concept, we demonstrate the expressiveness of our model on short sequences from music and action planning. The proposed model offers broader insights into the mental representations and cognitive mechanisms underlying human pattern recognition.

Coupling the Heart to Musical Machines

May 05, 2025

Biofeedback is being used more recently as a general control paradigm for human-computer interfaces (HCIs). While biofeedback especially from breath has seen increasing uptake as a controller for novel musical interfaces, new interfaces for musical expression (NIMEs), the community has not given as much attention to the heart. The heart is just as intimate a part of music as breath and it is argued that the heart determines our perception of time and so indirectly our perception of music. Inspired by this I demonstrate a photoplethysmogram (PPG)-based NIME controller using heart rate as a 1D control parameter to transform the qualities of sounds in real-time over a Bluetooth wireless HCI. I apply time scaling to "warp" audio buffers inbound to the sound card, and play these transformed audio buffers back to the listener wearing the PPG sensor, creating a hypothetical perceptual biofeedback loop: changes in sound change heart rate to change PPG measurements to change sound. I discuss how a sound-heart-PPG biofeedback loop possibly affords greater control and/or variety of movements with a 1D controller, how controlling the space and/or time scale of sound playback with biofeedback makes for possibilities in performance ambience, and I briefly discuss generative latent spaces as a possible way to extend a 1D PPG control space.

Solving Copyright Infringement on Short Video Platforms: Novel Datasets and an Audio Restoration Deep Learning Pipeline

Apr 30, 2025

Short video platforms like YouTube Shorts and TikTok face significant copyright compliance challenges, as infringers frequently embed arbitrary background music (BGM) to obscure original soundtracks (OST) and evade content originality detection. To tackle this issue, we propose a novel pipeline that integrates Music Source Separation (MSS) and cross-modal video-music retrieval (CMVMR). Our approach effectively separates arbitrary BGM from the original OST, enabling the restoration of authentic video audio tracks. To support this work, we introduce two domain-specific datasets: OASD-20K for audio separation and OSVAR-160 for pipeline evaluation. OASD-20K contains 20,000 audio clips featuring mixed BGM and OST pairs, while OSVAR-160 is a unique benchmark dataset comprising 1,121 video and mixed-audio pairs, specifically designed for short video restoration tasks. Experimental results demonstrate that our pipeline not only removes arbitrary BGM with high accuracy but also restores OSTs, ensuring content integrity. This approach provides an ethical and scalable solution to copyright challenges in user-generated content on short video platforms.

Kimi-Audio Technical Report

Apr 25, 2025

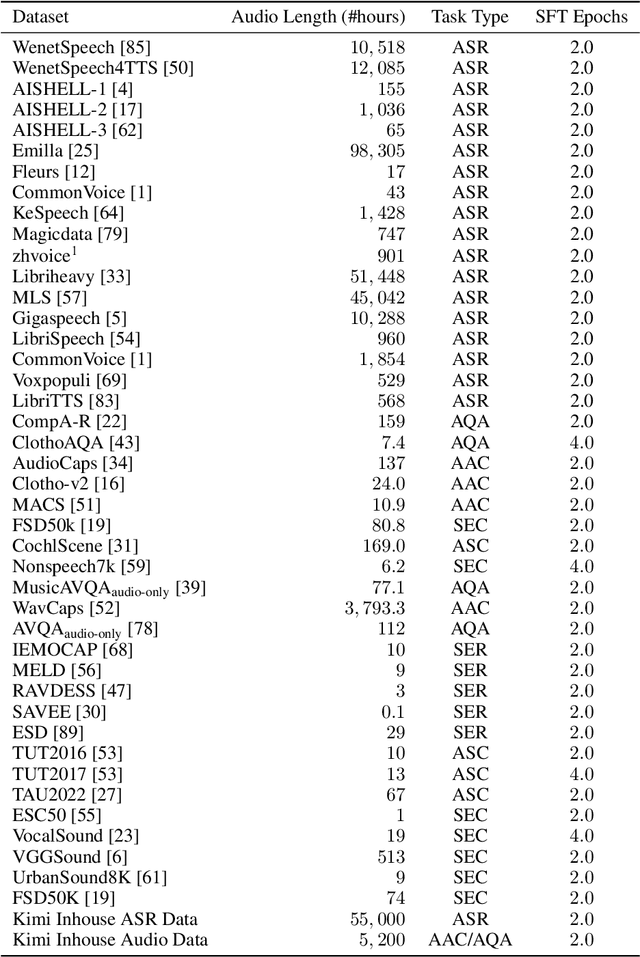

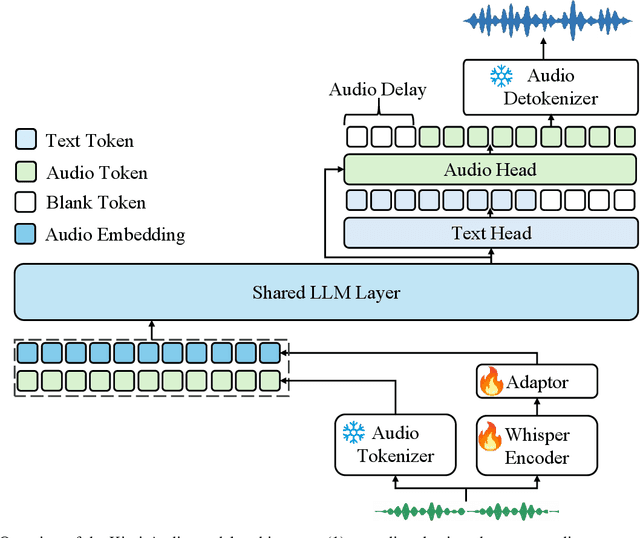

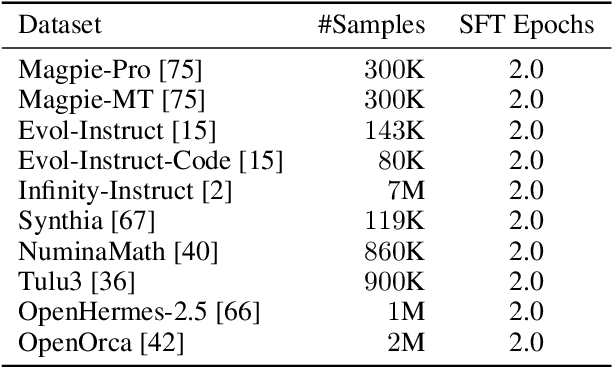

We present Kimi-Audio, an open-source audio foundation model that excels in audio understanding, generation, and conversation. We detail the practices in building Kimi-Audio, including model architecture, data curation, training recipe, inference deployment, and evaluation. Specifically, we leverage a 12.5Hz audio tokenizer, design a novel LLM-based architecture with continuous features as input and discrete tokens as output, and develop a chunk-wise streaming detokenizer based on flow matching. We curate a pre-training dataset that consists of more than 13 million hours of audio data covering a wide range of modalities including speech, sound, and music, and build a pipeline to construct high-quality and diverse post-training data. Initialized from a pre-trained LLM, Kimi-Audio is continually pre-trained on both audio and text data with several carefully designed tasks, and then fine-tuned to support a diverse range of audio-related tasks. Extensive evaluation shows that Kimi-Audio achieves state-of-the-art performance on a range of audio benchmarks including speech recognition, audio understanding, audio question answering, and speech conversation. We release the code, model checkpoints, and evaluation toolkits at https://github.com/MoonshotAI/Kimi-Audio.

Réduire le bruit grâce à la réalité augmentée sonore -- Auditory Concealer

Apr 08, 2025

This report presents the work done during a 22-week internship within the Sound Perception and Design team of the Sciences and Technologies of Music and Sound (STMS) laboratory at the Institute for Research and Coordination in Acoustics/Music (IRCAM). As part of the launch of the project Reducing Noise with Augmented Reality (ReNAR), which aims to create a tool to reduce in real time the cognitive impact of sounds perceived as unpleasant or annoying in indoor environments, an initial study was conducted to validate the feasibility and effectiveness of a new masking approach called concealer. The main hypothesis is that the concealer approach could provide better results than a masker approach in terms of perceived pleasantness. Mixtures of two noise sources (ventilation) and five masking sounds (water sounds) were generated using both approaches at various levels. The evaluation of the perceived pleasantness of these mixtures showed that the masker approach remains more effective than the concealer approach, regardless of the noise source, water sound, or level used.

Unsupervised Estimation of Nonlinear Audio Effects: Comparing Diffusion-Based and Adversarial approaches

Apr 07, 2025

Accurately estimating nonlinear audio effects without access to paired input-output signals remains a challenging problem. This work studies unsupervised probabilistic approaches for solving this task. We introduce a method, novel for this application, based on diffusion generative models for blind system identification, enabling the estimation of unknown nonlinear effects using black- and gray-box models. This study compares this method with a previously proposed adversarial approach, analyzing the performance of both methods under different parameterizations of the effect operator and varying lengths of available effected recordings. Through experiments on guitar distortion effects, we show that the diffusion-based approach provides more stable results and is less sensitive to data availability, while the adversarial approach is superior at estimating more pronounced distortion effects. Our findings contribute to the robust unsupervised blind estimation of audio effects, demonstrating the potential of diffusion models for system identification in music technology.

M2D2: Exploring General-purpose Audio-Language Representations Beyond CLAP

Mar 28, 2025

Contrastive language-audio pre-training (CLAP) has addressed audio-language tasks such as audio-text retrieval by aligning audio and text in a common feature space. While CLAP addresses general audio-language tasks, its audio features do not generalize well in audio tasks. In contrast, self-supervised learning (SSL) models learn general-purpose audio features that perform well in diverse audio tasks. We pursue representation learning that can be widely used in audio applications and hypothesize that a method that learns both general audio features and CLAP features should achieve our goal, which we call a general-purpose audio-language representation. To implement our hypothesis, we propose M2D2, a second-generation masked modeling duo (M2D) that combines an SSL M2D and CLAP. M2D2 learns two types of features using two modalities (audio and text) in a two-stage training process. It also utilizes advanced LLM-based sentence embeddings in CLAP training for powerful semantic supervision. In the first stage, M2D2 learns generalizable audio features from M2D and CLAP, where CLAP aligns the features with the fine LLM-based semantic embeddings. In the second stage, it learns CLAP features using the audio features learned from the LLM-based embeddings. Through these pre-training stages, M2D2 should enhance generalizability and performance in its audio and CLAP features. Experiments validated that M2D2 achieves effective general-purpose audio-language representation, highlighted with SOTA fine-tuning mAP of 49.0 for AudioSet, SOTA performance in music tasks, and top-level performance in audio-language tasks.
