Zhi Gao

School of Remote Sensing and Information Engineering, Wuhan University, Wuhan, China

Global-Local MAV Detection under Challenging Conditions based on Appearance and Motion

Dec 18, 2023

Abstract: Visual detection of micro aerial vehicles (MAVs) has received increasing research attention in recent years due to its importance in many applications. However, the existing approaches based on either appearance or motion features of MAVs still face challenges when the background is complex, the MAV target is small, or the computation resource is limited. In this paper, we propose a global-local MAV detector that can fuse both motion and appearance features for MAV detection under challenging conditions. This detector first searches for the MAV target using a global detector and then switches to a local detector, which works in an adaptive search region to enhance accuracy and efficiency. Additionally, a detector switcher is applied to coordinate the global and local detectors. A new dataset is created to train the proposed detector and verify its effectiveness. This dataset contains more challenging scenarios that can occur in practice. Extensive experiments on three challenging datasets show that the proposed detector outperforms the state-of-the-art ones in terms of detection accuracy and computational efficiency. In particular, this detector can run at a near real-time frame rate on NVIDIA Jetson NX Xavier, which demonstrates the usefulness of our approach for real-world applications. The dataset is available at https://github.com/WestlakeIntelligentRobotics/GLAD. In addition, a video summarizing this work is available at https://youtu.be/Tv473mAzHbU.

CLOVA: A Closed-Loop Visual Assistant with Tool Usage and Update

Dec 18, 2023

Abstract: Leveraging large language models (LLMs) to integrate off-the-shelf tools (e.g., visual models and image processing functions) is a promising research direction to build powerful visual assistants for solving diverse visual tasks. However, the learning capability is rarely explored in existing methods, as they freeze the used tools after deployment, thereby limiting the generalization to new environments requiring specific knowledge. In this paper, we propose CLOVA, a Closed-LOop Visual Assistant to address this limitation, which encompasses inference, reflection, and learning phases in a closed-loop framework. During inference, LLMs generate programs and execute corresponding tools to accomplish given tasks. The reflection phase introduces a multimodal global-local reflection scheme to analyze whether and which tool needs to be updated based on environmental feedback. Lastly, the learning phase uses three flexible manners to collect training data in real-time and introduces a novel prompt tuning scheme to update the tools, enabling CLOVA to efficiently learn specific knowledge for new environments without human involvement. Experiments show that CLOVA outperforms tool-usage methods by 5% in visual question answering and multiple-image reasoning tasks, by 10% in knowledge tagging tasks, and by 20% in image editing tasks, highlighting the significance of the learning capability for general visual assistants.

Exploring Data Geometry for Continual Learning

Apr 08, 2023

Abstract: Continual learning aims to efficiently learn from a non-stationary stream of data while avoiding forgetting the knowledge of old data. In many practical applications, data complies with non-Euclidean geometry. As such, the commonly used Euclidean space cannot gracefully capture non-Euclidean geometric structures of data, leading to inferior results. In this paper, we study continual learning from a novel perspective by exploring data geometry for the non-stationary stream of data. Our method dynamically expands the geometry of the underlying space to match growing geometric structures induced by new data, and prevents forgetting by taking geometric structures of old data into account. To do so, we make use of the mixed curvature space and propose an incremental search scheme, through which the growing geometric structures are encoded. Then, we introduce an angular-regularization loss and a neighbor-robustness loss to train the model, which penalize the change of global geometric structures and local geometric structures, respectively. Experiments show that our method achieves better performance than baseline methods designed in Euclidean space.

Meta-causal Learning for Single Domain Generalization

Apr 07, 2023

Abstract: Single domain generalization aims to learn a model from a single training domain (source domain) and apply it to multiple unseen test domains (target domains). Existing methods focus on expanding the distribution of the training domain to cover the target domains, but without estimating the domain shift between the source and target domains. In this paper, we propose a new learning paradigm, namely simulate-analyze-reduce, which first simulates the domain shift by building an auxiliary domain as the target domain, then learns to analyze the causes of domain shift, and finally learns to reduce the domain shift for model adaptation. Under this paradigm, we propose a meta-causal learning method to learn meta-knowledge, that is, how to infer the causes of domain shift between the auxiliary and source domains during training. We use the meta-knowledge to analyze the shift between the target and source domains during testing. Specifically, we perform multiple transformations on source data to generate the auxiliary domain, perform counterfactual inference to learn to discover the causal factors of the shift between the auxiliary and source domains, and incorporate the inferred causality into factor-aware domain alignments. Extensive experiments on several benchmarks of image classification show the effectiveness of our method.

Imbalance Knowledge-Driven Multi-modal Network for Land-Cover Semantic Segmentation Using Images and LiDAR Point Clouds

Mar 28, 2023

Abstract: Despite the good results that have been achieved in unimodal segmentation, the inherent limitations of individual data increase the difficulty of achieving breakthroughs in performance. For that reason, multi-modal learning is increasingly being explored within the field of remote sensing. The present multi-modal methods usually map high-dimensional features to low-dimensional spaces as a preprocessing step before feature extraction to address the non-negligible domain gap, which inevitably leads to information loss. To address this issue, in this paper we present our novel Imbalance Knowledge-Driven Multi-modal Network (IKD-Net) to extract features from raw multi-modal heterogeneous data directly. IKD-Net is capable of mining imbalance information across modalities while utilizing a strong modality to drive the feature map refinement of the weaker ones in the global and categorical perspectives by way of two sophisticated plug-and-play modules: the Global Knowledge-Guided (GKG) and Class Knowledge-Guided (CKG) gated modules. The whole network is then optimized using a holistic loss function. While we were developing IKD-Net, we also established a new dataset called the National Agriculture Imagery Program and 3D Elevation Program Combined dataset in California (N3C-California), which provides a particular benchmark for multi-modal joint segmentation tasks. In our experiments, IKD-Net outperformed the benchmarks and state-of-the-art methods on both the N3C-California and the small-scale ISPRS Vaihingen datasets. IKD-Net has been ranked first on the real-time leaderboard for the GRSS DFC 2018 challenge evaluation until this paper's submission.

SyreaNet: A Physically Guided Underwater Image Enhancement Framework Integrating Synthetic and Real Images

Feb 16, 2023

Abstract: Underwater image enhancement (UIE) is vital for high-level vision-related underwater tasks. Although learning-based UIE methods have made remarkable achievements in recent years, it is still challenging for them to consistently deal with various underwater conditions, for two possible reasons: 1) the use of the simplified atmospheric image formation model in UIE may result in severe errors; 2) a network trained solely with synthetic images might have difficulty generalizing to real underwater images. In this work, we, for the first time, propose a framework, SyreaNet, for UIE that integrates both synthetic and real data under the guidance of the revised underwater image formation model and novel domain adaptation (DA) strategies. First, an underwater image synthesis module based on the revised model is proposed. Then, a physically guided disentangled network is designed to predict the clear images by combining both synthetic and real underwater images. The intra- and inter-domain gaps are bridged by fully exchanging the domain knowledge. Extensive experiments demonstrate the superiority of our framework over other state-of-the-art (SOTA) learning-based UIE methods qualitatively and quantitatively. The code and dataset are publicly available at https://github.com/RockWenJJ/SyreaNet.git.

TJ-FlyingFish: Design and Implementation of an Aerial-Aquatic Quadrotor with Tiltable Propulsion Units

Feb 07, 2023

Abstract: Aerial-aquatic vehicles are capable of moving in the two most dominant fluids, making them more promising for a wide range of applications. We propose a prototype with special designs for propulsion and thruster configuration to cope with the vast differences in the fluid properties of water and air. For propulsion, the operating range is switched for the different mediums by the dual-speed propulsion unit, providing sufficient thrust and also ensuring output efficiency. For thruster configuration, thrust vectoring is realized by the rotation of the propulsion unit around the mount arm, thus enhancing the underwater maneuverability. This paper presents a quadrotor prototype of this concept, together with its design details and practical realization.

DynaVIG: Monocular Vision/INS/GNSS Integrated Navigation and Object Tracking for AGV in Dynamic Scenes

Nov 26, 2022

Abstract: Visual-Inertial Odometry (VIO) usually suffers from drift over long runs, and its accuracy is easily affected by dynamic objects. We propose DynaVIG, a navigation and object tracking system based on the integration of Monocular Vision, Inertial Navigation System (INS), and Global Navigation Satellite System (GNSS). Our system aims to provide an accurate global estimation of the navigation states and object poses for the automated ground vehicle (AGV) in dynamic scenes. Due to the scale ambiguity of the object, a prior height model is proposed to initialize the object pose, and the scale is continuously estimated with the aid of GNSS and INS. To precisely track an object with complex motion, we establish an accurate dynamics model according to its motion state. Then the multi-sensor observations are optimized in a unified framework. Experiments on the KITTI dataset demonstrate that the multi-sensor fusion can effectively improve the accuracy of navigation and object tracking, compared to state-of-the-art methods. In addition, the proposed system achieves good estimation of objects that change speed or direction.

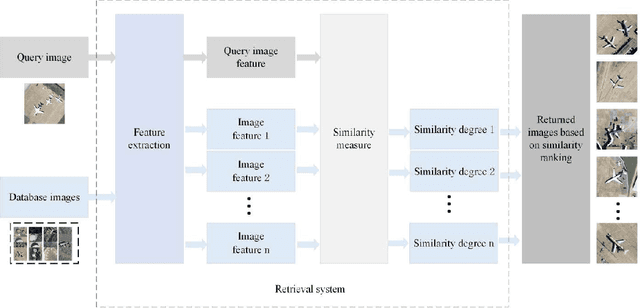

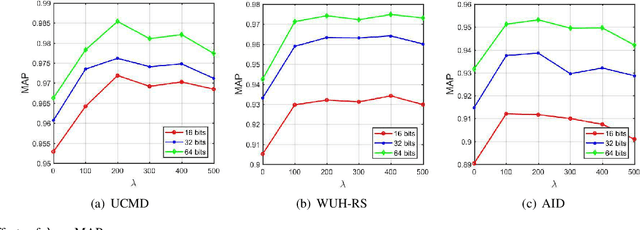

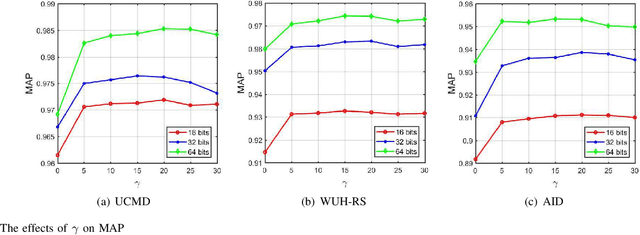

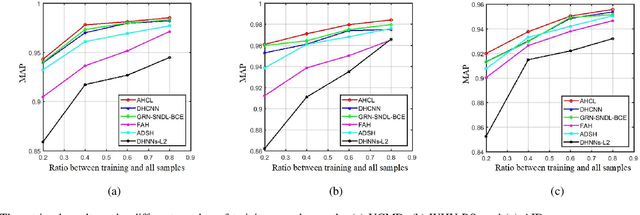

Asymmetric Hash Code Learning for Remote Sensing Image Retrieval

Jan 15, 2022

Abstract: Remote sensing image retrieval (RSIR), which aims to search for a set of items similar to a given query image, is a very important task in remote sensing applications. Deep hashing learning, as the current mainstream method, has achieved satisfactory retrieval performance. On one hand, various deep neural networks are used to extract semantic features of remote sensing images. On the other hand, hashing techniques are subsequently adopted to map the high-dimensional deep features to low-dimensional binary codes. Such methods attempt to learn one hash function for both the query and database samples in a symmetric way. However, as the number of database samples increases, it is typically time-consuming to generate the hash codes of large-scale database images. In this paper, we propose a novel deep hashing method, named asymmetric hash code learning (AHCL), for RSIR. The proposed AHCL generates the hash codes of query and database images in an asymmetric way. In more detail, the hash codes of query images are obtained by binarizing the output of the network, while the hash codes of database images are directly learned by solving the designed objective function. In addition, we combine the semantic information of each image and the similarity information of pairs of images as supervised information to train a deep hashing network, which improves the representation ability of deep features and hash codes. The experimental results on three public datasets demonstrate that the proposed method outperforms symmetric methods in terms of retrieval accuracy and efficiency. The source code is available at https://github.com/weiweisong415/Demo AHCL for TGRS2022.
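The query side of the retrieval described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `binarize`, `hamming_distance`, and `retrieve` are hypothetical, the network output is stood in for by a plain list of floats, and the database codes (which the abstract says are learned by solving an objective function) are simply assumed to be given.

```python
from typing import List, Sequence


def binarize(features: Sequence[float]) -> List[int]:
    """Turn real-valued network outputs into a {-1, +1} code by sign.

    Assumption: zero maps to +1; the abstract does not specify this.
    """
    return [1 if x >= 0 else -1 for x in features]


def hamming_distance(a: Sequence[int], b: Sequence[int]) -> int:
    """Count the positions at which two equal-length codes differ."""
    if len(a) != len(b):
        raise ValueError("codes must have the same length")
    return sum(1 for x, y in zip(a, b) if x != y)


def retrieve(
    query_features: Sequence[float],
    database_codes: Sequence[Sequence[int]],
    top_k: int = 3,
) -> List[int]:
    """Return indices of the top_k database codes closest to the query.

    Only the query is binarized here; the database codes are assumed to
    have been learned beforehand (the asymmetric part of the scheme).
    """
    query_code = binarize(query_features)
    ranked = sorted(
        range(len(database_codes)),
        key=lambda i: hamming_distance(query_code, database_codes[i]),
    )
    return ranked[:top_k]


if __name__ == "__main__":
    # Toy database of three 3-bit codes (illustrative values only).
    db = [[1, 1, 1], [1, -1, 1], [-1, 1, -1]]
    print(retrieve([0.5, -0.5, 0.5], db, top_k=2))
```

Because only query images pass through the network at retrieval time, the cost of encoding a large database is paid once during training rather than at query time, which is the efficiency argument the abstract makes.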

Generating Multivariate Load States Using a Conditional Variational Autoencoder

Oct 21, 2021

Abstract: For the planning of power systems and for the calibration of operational tools, it is essential to analyse system performance in a large range of representative scenarios. When the available historical data is limited, generative models are a promising solution, but modelling high-dimensional dependencies is challenging. In this paper, a multivariate load state generating model on the basis of a conditional variational autoencoder (CVAE) neural network is proposed. Going beyond common CVAE implementations, the model includes stochastic variation of output samples under given latent vectors and co-optimizes the parameters for this output variability. It is shown that this improves statistical properties of the generated data. The quality of generated multivariate loads is evaluated using univariate and multivariate performance metrics. A generation adequacy case study on the European network is used to illustrate the model's ability to generate realistic tail distributions. The experiments demonstrate that the proposed generator outperforms other data generating mechanisms.
