Mehrtash Harandi

Segment Any Tumour: An Uncertainty-Aware Vision Foundation Model for Whole-Body Analysis

Nov 11, 2025

Abstract:Prompt-driven vision foundation models, such as the Segment Anything Model, have recently demonstrated remarkable adaptability in computer vision. However, their direct application to medical imaging remains challenging due to heterogeneous tissue structures, imaging artefacts, and low-contrast boundaries, particularly in tumours and cancer primaries, leading to suboptimal segmentation in ambiguous or overlapping lesion regions. Here, we present Segment Any Tumour 3D (SAT3D), a lightweight volumetric foundation model designed to enable robust and generalisable tumour segmentation across diverse medical imaging modalities. SAT3D integrates a shifted-window vision transformer for hierarchical volumetric representation with an uncertainty-aware training pipeline that explicitly incorporates uncertainty estimates as prompts to guide reliable boundary prediction in low-contrast regions. Adversarial learning further enhances model performance in ambiguous pathological regions. We benchmark SAT3D against three recent vision foundation models and nnUNet across 11 publicly available datasets, encompassing 3,884 tumour and cancer cases for training and 694 cases for in-distribution evaluation. Trained on 17,075 3D volume-mask pairs across multiple modalities and cancer primaries, SAT3D demonstrates strong generalisation and robustness. To facilitate practical use and clinical translation, we developed a 3D Slicer plugin that enables interactive, prompt-driven segmentation and visualisation using the trained SAT3D model. Extensive experiments highlight its effectiveness in improving segmentation accuracy under challenging and out-of-distribution scenarios, underscoring its potential as a scalable foundation model for medical image analysis.

Modality Alignment across Trees on Heterogeneous Hyperbolic Manifolds

Oct 31, 2025

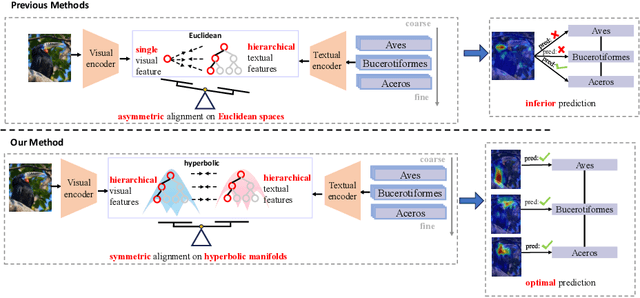

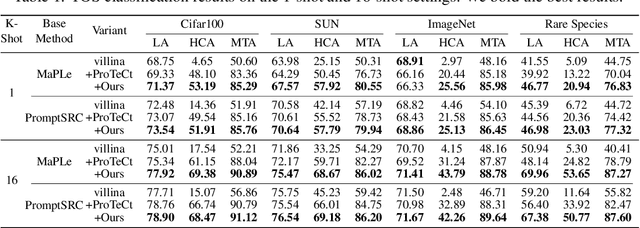

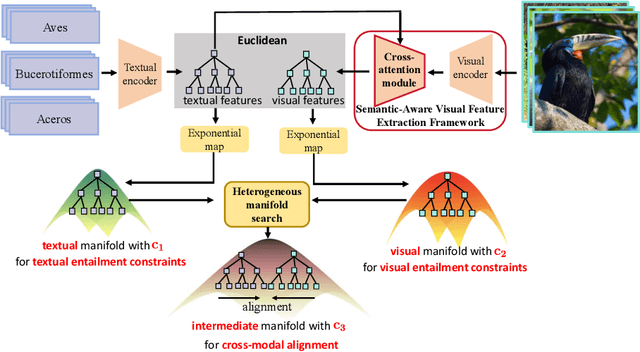

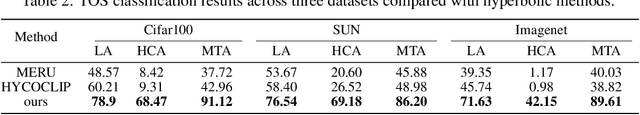

Abstract:Modality alignment is critical for vision-language models (VLMs) to effectively integrate information across modalities. However, existing methods extract hierarchical features from text while representing each image with a single feature, leading to asymmetric and suboptimal alignment. To address this, we propose Alignment across Trees, a method that constructs and aligns tree-like hierarchical features for both image and text modalities. Specifically, we introduce a semantic-aware visual feature extraction framework that applies a cross-attention mechanism to visual class tokens from intermediate Transformer layers, guided by textual cues to extract visual features with coarse-to-fine semantics. We then embed the feature trees of the two modalities into hyperbolic manifolds with distinct curvatures to effectively model their hierarchical structures. To align across the heterogeneous hyperbolic manifolds with different curvatures, we formulate a KL distance measure between distributions on heterogeneous manifolds, and learn an intermediate manifold for manifold alignment by minimizing the distance. We prove the existence and uniqueness of the optimal intermediate manifold. Experiments on taxonomic open-set classification tasks across multiple image datasets demonstrate that our method consistently outperforms strong baselines under few-shot and cross-domain settings.
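To make "hyperbolic manifolds with distinct curvatures" concrete, the following minimal sketch computes the geodesic distance on a Poincaré ball of curvature -c using the standard closed form. The function name `poincare_distance`, the example points, and the curvature values are hypothetical and chosen only for illustration; this is not the paper's KL distance measure or its alignment procedure, only the underlying geometry.

```python
import math


def poincare_distance(x, y, c):
    """Geodesic distance between x and y on the Poincare ball of curvature -c (c > 0).

    Uses d_c(x, y) = (1 / sqrt(c)) * arcosh(1 + 2c|x - y|^2 / ((1 - c|x|^2)(1 - c|y|^2))).
    """
    def sq_norm(v):
        return sum(t * t for t in v)

    if c <= 0:
        raise ValueError("c must be positive (the curvature is -c)")
    if c * sq_norm(x) >= 1.0 or c * sq_norm(y) >= 1.0:
        raise ValueError("points must lie strictly inside the ball of radius 1/sqrt(c)")

    diff = sq_norm([a - b for a, b in zip(x, y)])
    denom = (1.0 - c * sq_norm(x)) * (1.0 - c * sq_norm(y))
    return math.acosh(1.0 + 2.0 * c * diff / denom) / math.sqrt(c)


if __name__ == "__main__":
    # Hypothetical embeddings of two tree nodes.
    x, y = [0.1, 0.2], [0.4, -0.3]
    # The same pair of points is separated by different distances
    # depending on the curvature of the manifold it is embedded in.
    for c in (0.5, 1.0, 2.0):
        print(f"c={c}: d={poincare_distance(x, y, c):.4f}")
```

Because the distance depends on c, features embedded in balls of different curvature are not directly comparable, which is the kind of mismatch an alignment across heterogeneous manifolds has to resolve.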

Curvature Learning for Generalization of Hyperbolic Neural Networks

Aug 24, 2025

Abstract:Hyperbolic neural networks (HNNs) have demonstrated notable efficacy in representing real-world data with hierarchical structures via exploiting the geometric properties of hyperbolic spaces characterized by negative curvatures. Curvature plays a crucial role in optimizing HNNs. Inappropriate curvatures may cause HNNs to converge to suboptimal parameters, degrading overall performance. So far, the theoretical foundation of the effect of curvatures on HNNs has not been developed. In this paper, we derive a PAC-Bayesian generalization bound of HNNs, highlighting the role of curvatures in the generalization of HNNs via their effect on the smoothness of the loss landscape. Driven by the derived bound, we propose a sharpness-aware curvature learning method to smooth the loss landscape, thereby improving the generalization of HNNs. In our method, we design a scope sharpness measure for curvatures, which is minimized through a bi-level optimization process. Then, we introduce an implicit differentiation algorithm that efficiently solves the bi-level optimization by approximating gradients of curvatures. We present the approximation error and convergence analyses of the proposed method, showing that the approximation error is upper-bounded, and the proposed method can converge by bounding gradients of HNNs. Experiments on four settings: classification, learning from long-tailed data, learning from noisy data, and few-shot learning show that our method can improve the performance of HNNs.

Exemplar-Free Continual Learning for State Space Models

May 24, 2025

Abstract:State-Space Models (SSMs) excel at capturing long-range dependencies with structured recurrence, making them well-suited for sequence modeling. However, their evolving internal states pose challenges in adapting them under Continual Learning (CL). This is particularly difficult in exemplar-free settings, where the absence of prior data leaves updates to the dynamic SSM states unconstrained, resulting in catastrophic forgetting. To address this, we propose Inf-SSM, a novel and simple geometry-aware regularization method that utilizes the geometry of the infinite-dimensional Grassmannian to constrain state evolution during CL. Unlike classical continual learning methods that constrain weight updates, Inf-SSM regularizes the infinite-horizon evolution of SSMs encoded in their extended observability subspace. We show that enforcing this regularization requires solving a matrix equation known as the Sylvester equation, which typically incurs $\mathcal{O}(n^3)$ complexity. We develop a $\mathcal{O}(n^2)$ solution by exploiting the structure and properties of SSMs. This leads to an efficient regularization mechanism that can be seamlessly integrated into existing CL methods. Comprehensive experiments on challenging benchmarks, including ImageNet-R and Caltech-256, demonstrate a significant reduction in forgetting while improving accuracy across sequential tasks.
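One way structure can reduce the cost of a Sylvester equation is sketched below, under the assumption (ours, not stated in the abstract) that both coefficient matrices are diagonal, as state matrices often are in SSMs. For $AX + XB = C$ with $A = \mathrm{diag}(a)$ and $B = \mathrm{diag}(b)$, each entry decouples as $X_{ij} = C_{ij} / (a_i + b_j)$, which costs $\mathcal{O}(n^2)$ in total. The function name `solve_sylvester_diagonal` is hypothetical, and this is not claimed to be the derivation used in Inf-SSM.

```python
def solve_sylvester_diagonal(a, b, C):
    """Solve A X + X B = C when A = diag(a) and B = diag(b).

    With diagonal coefficients the (i, j) entry of the equation reads
    a[i] * X[i][j] + X[i][j] * b[j] = C[i][j], so every entry is solved
    independently. Requires a[i] + b[j] != 0 for all i, j.
    """
    n, m = len(a), len(b)
    X = []
    for i in range(n):
        row = []
        for j in range(m):
            denom = a[i] + b[j]
            if denom == 0:
                raise ZeroDivisionError("a[i] + b[j] must be nonzero for a unique solution")
            row.append(C[i][j] / denom)
        X.append(row)
    return X


if __name__ == "__main__":
    a, b = [1.0, 2.0], [3.0, 4.0]
    C = [[4.0, 10.0], [10.0, 12.0]]
    X = solve_sylvester_diagonal(a, b, C)
    # Check the residual of A X + X B - C entry by entry.
    residual = max(
        abs(a[i] * X[i][j] + X[i][j] * b[j] - C[i][j])
        for i in range(2) for j in range(2)
    )
    print(X, residual)
```

A general dense solver would instead need a Schur-type decomposition of the coefficients, which is where the cubic cost typically comes from.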

Unleashing Diffusion Transformers for Visual Correspondence by Modulating Massive Activations

May 24, 2025

Abstract:Pre-trained stable diffusion models (SD) have shown great advances in visual correspondence. In this paper, we investigate the capabilities of Diffusion Transformers (DiTs) for accurate dense correspondence. Distinct from SD, DiTs exhibit a critical phenomenon in which very few feature activations exhibit significantly larger values than others, known as massive activations, leading to uninformative representations and significant performance degradation for DiTs. The massive activations consistently concentrate at very few fixed dimensions across all image patch tokens, holding little local information. We trace these dimension-concentrated massive activations and find that such concentration can be effectively localized by the zero-initialized Adaptive Layer Norm (AdaLN-zero). Building on these findings, we propose Diffusion Transformer Feature (DiTF), a training-free framework designed to extract semantic-discriminative features from DiTs. Specifically, DiTF employs AdaLN to adaptively localize and normalize massive activations with channel-wise modulation. In addition, we develop a channel discard strategy to further eliminate the negative impacts from massive activations. Experimental results demonstrate that our DiTF outperforms both DINO and SD-based models and establishes a new state-of-the-art performance for DiTs in different visual correspondence tasks (e.g., with +9.4% on Spair-71k and +4.4% on AP-10K-C.S.).

MoKD: Multi-Task Optimization for Knowledge Distillation

May 13, 2025

Abstract:Compact models can be effectively trained through Knowledge Distillation (KD), a technique that transfers knowledge from larger, high-performing teacher models. Two key challenges in KD are: 1) balancing learning from the teacher's guidance and the task objective, and 2) handling the disparity in knowledge representation between teacher and student models. To address these, we propose Multi-Task Optimization for Knowledge Distillation (MoKD). MoKD tackles two main gradient issues: a) Gradient Conflicts, where task-specific and distillation gradients are misaligned, and b) Gradient Dominance, where one objective's gradient dominates, causing imbalance. MoKD reformulates KD as a multi-objective optimization problem, enabling better balance between objectives. Additionally, it introduces a subspace learning framework to project feature representations into a high-dimensional space, improving knowledge transfer. Extensive experiments on image classification using the ImageNet-1K dataset and object detection using the COCO dataset show that MoKD outperforms existing methods, achieving state-of-the-art performance with greater efficiency. To the best of our knowledge, MoKD models also achieve state-of-the-art performance compared to models trained from scratch.
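The two gradient issues named above can be checked numerically. The sketch below is a diagnostic only, not MoKD's optimization procedure: it flags a conflict when the cosine similarity between the task gradient and the distillation gradient is negative, and flags dominance when one gradient norm exceeds the other by a chosen factor. The function name `diagnose_gradients` and the `dominance_ratio` threshold of 10 are hypothetical choices, and both gradients are assumed to be nonzero flat vectors.

```python
import math


def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def diagnose_gradients(g_task, g_kd, dominance_ratio=10.0):
    """Report conflict and dominance between two nonzero gradient vectors.

    conflict:  the gradients point in opposing directions (cosine < 0).
    dominance: one gradient norm is more than `dominance_ratio` times the other.
    """
    n_task = math.sqrt(_dot(g_task, g_task))
    n_kd = math.sqrt(_dot(g_kd, g_kd))
    if n_task == 0.0 or n_kd == 0.0:
        raise ValueError("both gradients must be nonzero")
    cosine = _dot(g_task, g_kd) / (n_task * n_kd)
    ratio = max(n_task, n_kd) / min(n_task, n_kd)
    return {
        "cosine": cosine,
        "norm_ratio": ratio,
        "conflict": cosine < 0.0,
        "dominance": ratio > dominance_ratio,
    }


if __name__ == "__main__":
    # Opposing directions with similar magnitude: conflict without dominance.
    print(diagnose_gradients([1.0, 0.0], [-1.0, 0.1]))
    # Same direction but very different magnitude: dominance without conflict.
    print(diagnose_gradients([1.0, 0.0], [100.0, 0.0]))
```

In a training loop, such a check would be applied to the flattened gradients of the two losses with respect to the shared student parameters.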

Optimizing Specific and Shared Parameters for Efficient Parameter Tuning

Apr 04, 2025

Abstract:Foundation models, with a vast number of parameters and pretraining on massive datasets, achieve state-of-the-art performance across various applications. However, efficiently adapting them to downstream tasks with minimal computational overhead remains a challenge. Parameter-Efficient Transfer Learning (PETL) addresses this by fine-tuning only a small subset of parameters while preserving pre-trained knowledge. In this paper, we propose SaS, a novel PETL method that effectively mitigates distributional shifts during fine-tuning. SaS integrates (1) a shared module that captures common statistical characteristics across layers using low-rank projections and (2) a layer-specific module that employs hypernetworks to generate tailored parameters for each layer. This dual design ensures an optimal balance between performance and parameter efficiency while introducing less than 0.05% additional parameters, making it significantly more compact than existing methods. Extensive experiments on diverse downstream tasks, few-shot settings and domain generalization demonstrate that SaS significantly enhances performance while maintaining superior parameter efficiency compared to existing methods, highlighting the importance of capturing both shared and layer-specific information in transfer learning. Code and data are available at https://anonymous.4open.science/r/SaS-PETL-3565.

PCGS: Progressive Compression of 3D Gaussian Splatting

Mar 11, 2025

Abstract:3D Gaussian Splatting (3DGS) achieves impressive rendering fidelity and speed for novel view synthesis. However, its substantial data size poses a significant challenge for practical applications. While many compression techniques have been proposed, they fail to efficiently utilize existing bitstreams in on-demand applications due to their lack of progressivity, which wastes resources. To address this issue, we propose PCGS (Progressive Compression of 3D Gaussian Splatting), which adaptively controls both the quantity and quality of Gaussians (or anchors) to enable effective progressivity for on-demand applications. Specifically, for quantity, we introduce a progressive masking strategy that incrementally incorporates new anchors while refining existing ones to enhance fidelity. For quality, we propose a progressive quantization approach that gradually reduces quantization step sizes to achieve finer modeling of Gaussian attributes. Furthermore, to compact the incremental bitstreams, we leverage existing quantization results to refine probability prediction, improving entropy coding efficiency across progressive levels. Overall, PCGS achieves progressivity while maintaining compression performance comparable to SoTA non-progressive methods. Code available at: github.com/YihangChen-ee/PCGS.
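The idea of gradually reducing quantization step sizes can be illustrated with a scalar toy example. In the sketch below, each level quantizes the residual left by the previous levels with a smaller step, so a decoder holding only the first few levels still gets a coarse value and can refine it as more symbols arrive. The residual-refinement scheme, the function name `progressive_quantize`, and the step sizes are assumptions made for illustration; the abstract does not specify PCGS at this level of detail.

```python
def progressive_quantize(value, steps):
    """Quantize `value` level by level with decreasing step sizes.

    At each level the remaining residual is rounded to the nearest multiple
    of the current step, so the reconstruction error after that level is at
    most step / 2. Returns the per-level integer symbols and the running
    reconstructions.
    """
    recon = 0.0
    symbols, recons = [], []
    for step in steps:
        symbol = round((value - recon) / step)  # integer symbol sent at this level
        recon += symbol * step                  # decoder-side refinement
        symbols.append(symbol)
        recons.append(recon)
    return symbols, recons


if __name__ == "__main__":
    value = 0.73                 # hypothetical Gaussian attribute
    steps = [0.4, 0.2, 0.1]      # hypothetical coarse-to-fine step sizes
    symbols, recons = progressive_quantize(value, steps)
    for step, sym, rec in zip(steps, symbols, recons):
        print(f"step={step}: symbol={sym}, recon={rec:.3f}, error={abs(value - rec):.3f}")
```

Only the new symbols need to be transmitted at each level, which is what lets an existing bitstream be reused rather than replaced.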

Learning from Noisy Labels with Contrastive Co-Transformer

Mar 04, 2025

Abstract:Deep learning with noisy labels is an interesting challenge in weakly supervised learning. Despite their significant learning capacity, CNNs tend to overfit in the presence of samples with noisy labels. To alleviate this issue, we use the well-known Co-Training framework as the basis for our work. In this paper, we introduce a Contrastive Co-Transformer framework, which is simple and fast, yet able to improve the performance by a large margin compared to the state-of-the-art approaches. We argue that transformers are robust when dealing with label noise. Our Contrastive Co-Transformer approach is able to utilize all samples in the dataset, irrespective of whether they are clean or noisy. Transformers are trained by a combination of contrastive loss and classification loss. Extensive experimental results on corrupted data from six standard benchmark datasets, including Clothing1M, demonstrate that our Contrastive Co-Transformer is superior to existing state-of-the-art methods.

HAC++: Towards 100X Compression of 3D Gaussian Splatting

Jan 21, 2025

Abstract:3D Gaussian Splatting (3DGS) has emerged as a promising framework for novel view synthesis, boasting rapid rendering speed with high fidelity. However, the large number of Gaussians and their associated attributes necessitates effective compression techniques, and the sparse and unorganized nature of the point cloud of Gaussians (or anchors in our paper) presents challenges for compression. To achieve a compact size, we propose HAC++, which leverages the relationships between unorganized anchors and a structured hash grid, utilizing their mutual information for context modeling. Additionally, HAC++ captures intra-anchor contextual relationships to further enhance compression performance. To facilitate entropy coding, we utilize Gaussian distributions to precisely estimate the probability of each quantized attribute, where an adaptive quantization module is proposed to enable high-precision quantization of these attributes for improved fidelity restoration. Moreover, we incorporate an adaptive masking strategy to eliminate invalid Gaussians and anchors. Overall, HAC++ achieves a remarkable size reduction of over 100X compared to vanilla 3DGS when averaged on all datasets, while simultaneously improving fidelity. It also delivers more than 20X size reduction compared to Scaffold-GS. Our code is available at https://github.com/YihangChen-ee/HAC-plus.
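Estimating the probability of a quantized attribute with a Gaussian distribution is commonly done by integrating the density over the quantization bin. The sketch below follows that standard construction: for a quantized value q with step size Δ, the probability is Φ((q + Δ/2 − μ)/σ) − Φ((q − Δ/2 − μ)/σ), and the ideal code length is its negative base-2 logarithm. The function names, the example parameters, and the probability floor of 1e-12 are hypothetical; in HAC++ the mean, scale, and step would come from the learned context model, which is not reproduced here.

```python
import math


def gaussian_cdf(x, mu, sigma):
    """Cumulative distribution function of N(mu, sigma^2)."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def quantized_probability(q, mu, sigma, step):
    """Probability mass that N(mu, sigma^2) assigns to the bin [q - step/2, q + step/2]."""
    upper = gaussian_cdf(q + step / 2.0, mu, sigma)
    lower = gaussian_cdf(q - step / 2.0, mu, sigma)
    return upper - lower


def estimated_bits(q, mu, sigma, step, floor=1e-12):
    """Ideal entropy-coding cost in bits, with a floor to avoid log(0)."""
    p = max(quantized_probability(q, mu, sigma, step), floor)
    return -math.log2(p)


if __name__ == "__main__":
    # A well-predicted value (close to mu) is cheap; a badly predicted one is expensive.
    print(estimated_bits(q=0.0, mu=0.0, sigma=1.0, step=1.0))
    print(estimated_bits(q=4.0, mu=0.0, sigma=1.0, step=1.0))
```

The closer the predicted mean is to the actual quantized value, and the tighter the predicted scale, the fewer bits the entropy coder needs, which is why better context modeling translates directly into smaller files.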
