Zhao Xu

Table-as-Search: Formulate Long-Horizon Agentic Information Seeking as Table Completion

Feb 06, 2026

Abstract:Current Information Seeking (InfoSeeking) agents struggle to maintain focus and coherence during long-horizon exploration, as tracking search states, including planning procedure and massive search results, within one plain-text context is inherently fragile. To address this, we introduce Table-as-Search (TaS), a structured planning framework that reformulates the InfoSeeking task as a Table Completion task. TaS maps each query into a structured table schema maintained in an external database, where rows represent search candidates and columns denote constraints or required information. This table precisely manages the search states: filled cells strictly record the history and search results, while empty cells serve as an explicit search plan. Crucially, TaS unifies three distinct InfoSeeking tasks: Deep Search, Wide Search, and the challenging DeepWide Search. Extensive experiments demonstrate that TaS significantly outperforms numerous state-of-the-art baselines, including multi-agent frameworks and commercial systems, across three kinds of benchmarks. Furthermore, our analysis validates TaS's superior robustness in long-horizon InfoSeeking, alongside its efficiency, scalability and flexibility. Code and datasets are publicly released at https://github.com/AIDC-AI/Marco-Search-Agent.
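The table formulation described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract mentions an external database, while this sketch uses an in-memory dictionary as a stand-in, and the names `make_table`, `pending_cells`, and `fill_cell`, as well as the example rows and columns, are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical in-memory stand-in for the external table described in the abstract.
# Rows are search candidates, columns are constraints or required information.
Table = Dict[str, Dict[str, Optional[str]]]


def make_table(candidates: List[str], columns: List[str]) -> Table:
    """Create a table with one row per candidate and every cell empty (None)."""
    return {row: {col: None for col in columns} for row in candidates}


def pending_cells(table: Table) -> List[Tuple[str, str]]:
    """Return the empty cells, which act as the explicit search plan."""
    return [
        (row, col)
        for row, cells in table.items()
        for col, value in cells.items()
        if value is None
    ]


def fill_cell(table: Table, row: str, col: str, value: str) -> None:
    """Record a search result; filled cells act as the search history."""
    table[row][col] = value


# Illustrative data only (assumed, not from the paper).
table = make_table(["candidate_a", "candidate_b"], ["founded", "headquarters"])
fill_cell(table, "candidate_a", "founded", "1999")
remaining = pending_cells(table)
print(remaining)
```

In this sketch an agent loop would repeatedly pick an entry from `pending_cells`, search for it, and call `fill_cell`, stopping when no empty cells remain.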

Vectra: A New Metric, Dataset, and Model for Visual Quality Assessment in E-Commerce In-Image Machine Translation

Jan 31, 2026

Abstract:In-Image Machine Translation (IIMT) powers cross-border e-commerce product listings; existing research focuses on machine translation evaluation, while visual rendering quality is critical for user engagement. When facing context-dense product imagery and multimodal defects, current reference-based methods (e.g., SSIM, FID) lack explainability, while model-as-judge approaches lack domain-grounded, fine-grained reward signals. To bridge this gap, we introduce Vectra, to the best of our knowledge, the first reference-free, MLLM-driven visual quality assessment framework for e-commerce IIMT. Vectra comprises three components: (1) Vectra Score, a multidimensional quality metric system that decomposes visual quality into 14 interpretable dimensions, with spatially-aware Defect Area Ratio (DAR) quantification to reduce annotation ambiguity; (2) Vectra Dataset, constructed from 1.1M real-world product images via diversity-aware sampling, comprising a 2K benchmark for system evaluation, 30K reasoning-based annotations for instruction tuning, and 3.5K expert-labeled preferences for alignment and evaluation; and (3) Vectra Model, a 4B-parameter MLLM that generates both quantitative scores and diagnostic reasoning. Experiments demonstrate that Vectra achieves state-of-the-art correlation with human rankings, and our model outperforms leading MLLMs, including GPT-5 and Gemini-3, in scoring performance. The dataset and model will be released upon acceptance.
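The abstract does not define how the Defect Area Ratio is computed. As a rough illustration only, the sketch below assumes DAR is the fraction of image pixels marked as defective in a binary mask; the function name `defect_area_ratio` and the mask representation are assumptions, not the paper's definition.

```python
from typing import List


def defect_area_ratio(mask: List[List[bool]]) -> float:
    """Fraction of pixels flagged as defective (assumed reading of DAR)."""
    if not mask or not mask[0]:
        raise ValueError("mask must contain at least one pixel")
    total = sum(len(row) for row in mask)
    defective = sum(1 for row in mask for pixel in row if pixel)
    return defective / total


# Toy 4x4 mask with a 2x2 defective region (illustrative data only).
mask = [
    [False, True, True, False],
    [False, True, True, False],
    [False, False, False, False],
    [False, False, False, False],
]
dar = defect_area_ratio(mask)
print(dar)  # 4 defective pixels out of 16
```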

Text is All You Need for Vision-Language Model Jailbreaking

Jan 31, 2026

Abstract:Large Vision-Language Models (LVLMs) are increasingly equipped with robust safety safeguards to prevent responses to harmful or disallowed prompts. However, these defenses often focus on analyzing explicit textual inputs or relevant visual scenes. In this work, we introduce Text-DJ, a novel jailbreak attack that bypasses these safeguards by exploiting the model's Optical Character Recognition (OCR) capability. Our methodology consists of three stages. First, we decompose a single harmful query into multiple semantically related but more benign sub-queries. Second, we pick a set of distraction queries that are maximally irrelevant to the harmful query. Third, we present all decomposed sub-queries and distraction queries to the LVLM simultaneously as a grid of images, with the sub-queries placed in the middle of the grid. We demonstrate that this method successfully circumvents the safety alignment of state-of-the-art LVLMs. We argue this attack succeeds by (1) converting text-based prompts into images, bypassing standard text-based filters, and (2) inducing distractions, where the model's safety protocols fail to link the scattered sub-queries among a large number of irrelevant queries. Overall, our findings expose a critical vulnerability: LVLMs' OCR capabilities are not robust to dispersed, multi-image adversarial inputs, highlighting the need for defenses against fragmented multimodal inputs.

Triplets Better Than Pairs: Towards Stable and Effective Self-Play Fine-Tuning for LLMs

Jan 13, 2026

Abstract:Recently, self-play fine-tuning (SPIN) has been proposed to adapt large language models to downstream applications with scarce expert-annotated data, by iteratively generating synthetic responses from the model itself. However, SPIN is designed to optimize the current reward advantages of annotated responses over synthetic responses at hand, which may gradually vanish during iterations, leading to unstable optimization. Moreover, the use of a reference policy induces a misalignment between the reward formulation for training and the metric for generation. To address these limitations, we propose a novel Triplet-based Self-Play fIne-tuNing (T-SPIN) method that integrates two key designs. First, beyond current advantages, T-SPIN additionally incorporates historical advantages between iteratively generated responses and proto-synthetic responses produced by the initial policy. Even if the current advantages diminish, historical advantages remain effective, stabilizing the overall optimization. Second, T-SPIN introduces an entropy constraint into the self-play framework, which is theoretically justified to support reference-free fine-tuning, eliminating the training-generation discrepancy. Empirical results on various tasks demonstrate not only the superior performance of T-SPIN over SPIN, but also its stable evolution during iterations. Remarkably, compared to supervised fine-tuning, T-SPIN achieves comparable or even better performance with only 25% of the samples, highlighting its effectiveness when faced with scarce annotated data.

Deep But Reliable: Advancing Multi-turn Reasoning for Thinking with Images

Dec 19, 2025

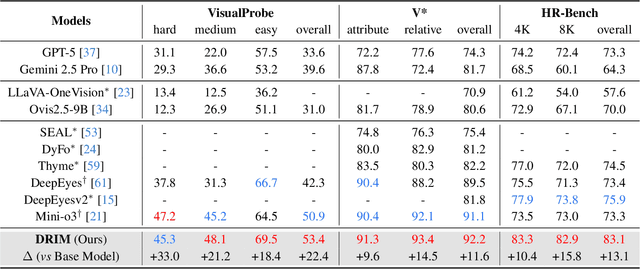

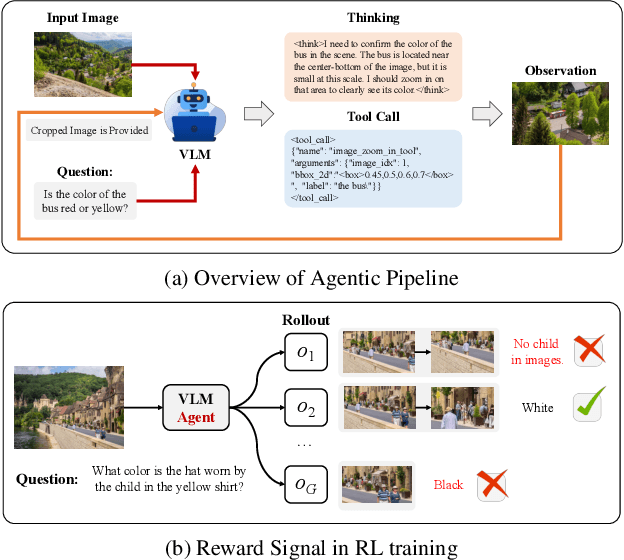

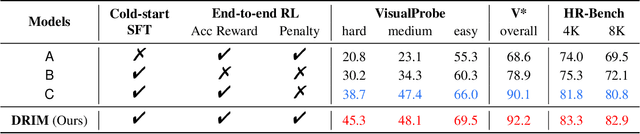

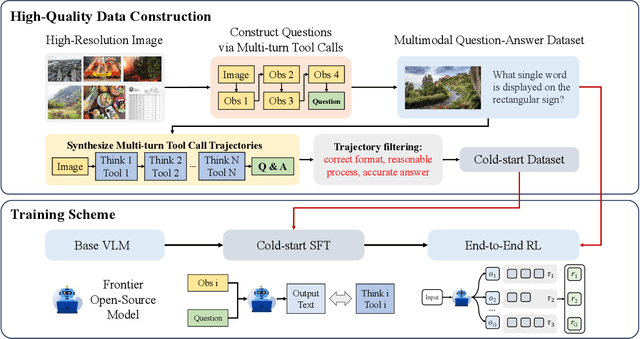

Abstract:Recent advances in large Vision-Language Models (VLMs) have exhibited strong reasoning capabilities on complex visual tasks by thinking with images in their Chain-of-Thought (CoT), which is achieved by actively invoking tools to analyze visual inputs rather than merely perceiving them. However, existing models often struggle to reflect on and correct themselves when attempting incorrect reasoning trajectories. To address this limitation, we propose DRIM, a model that enables deep but reliable multi-turn reasoning when thinking with images in its multimodal CoT. Our pipeline comprises three stages: data construction, cold-start SFT and RL. Based on a high-resolution image dataset, we construct high-difficulty and verifiable visual question-answer pairs, where solving each task requires multi-turn tool calls to reach the correct answer. In the SFT stage, we collect tool trajectories as cold-start data, guiding a multi-turn reasoning pattern. In the RL stage, we introduce redundancy-penalized policy optimization, which incentivizes the model to develop a self-reflective reasoning pattern. The basic idea is to impose judgment on reasoning trajectories and penalize those that produce incorrect answers without sufficient multi-scale exploration. Extensive experiments demonstrate that DRIM achieves superior performance on visual understanding benchmarks.

Omni-View: Unlocking How Generation Facilitates Understanding in Unified 3D Model based on Multiview images

Nov 10, 2025

Abstract:This paper presents Omni-View, which extends unified multimodal understanding and generation to 3D scenes based on multiview images, exploring the principle that "generation facilitates understanding". Consisting of an understanding model, a texture module, and a geometry module, Omni-View jointly models scene understanding, novel view synthesis, and geometry estimation, enabling synergistic interaction between 3D scene understanding and generation tasks. By design, it leverages the spatiotemporal modeling capabilities of its texture module responsible for appearance synthesis, alongside the explicit geometric constraints provided by its dedicated geometry module, thereby enriching the model's holistic understanding of 3D scenes. Trained with a two-stage strategy, Omni-View achieves a state-of-the-art score of 55.4 on the VSI-Bench benchmark, outperforming existing specialized 3D understanding models, while simultaneously delivering strong performance in both novel view synthesis and 3D scene generation.

TransBench: Benchmarking Machine Translation for Industrial-Scale Applications

May 20, 2025

Abstract:Machine translation (MT) has become indispensable for cross-border communication in globalized industries like e-commerce, finance, and legal services, with recent advancements in large language models (LLMs) significantly enhancing translation quality. However, applying general-purpose MT models to industrial scenarios reveals critical limitations due to domain-specific terminology, cultural nuances, and stylistic conventions absent in generic benchmarks. Existing evaluation frameworks inadequately assess performance in specialized contexts, creating a gap between academic benchmarks and real-world efficacy. To address this, we propose a three-level translation capability framework: (1) Basic Linguistic Competence, (2) Domain-Specific Proficiency, and (3) Cultural Adaptation, emphasizing the need for holistic evaluation across these dimensions. We introduce TransBench, a benchmark tailored for industrial MT, initially targeting international e-commerce with 17,000 professionally translated sentences spanning 4 main scenarios and 33 language pairs. TransBench integrates traditional metrics (BLEU, TER) with Marco-MOS, a domain-specific evaluation model, and provides guidelines for reproducible benchmark construction. Our contributions include: (1) a structured framework for industrial MT evaluation, (2) the first publicly available benchmark for e-commerce translation, (3) novel metrics probing multi-level translation quality, and (4) open-sourced evaluation tools. This work bridges the evaluation gap, enabling researchers and practitioners to systematically assess and enhance MT systems for industry-specific needs.

Unified Multimodal Understanding and Generation Models: Advances, Challenges, and Opportunities

May 05, 2025

Abstract:Recent years have seen remarkable progress in both multimodal understanding models and image generation models. Despite their respective successes, these two domains have evolved independently, leading to distinct architectural paradigms: While autoregressive-based architectures have dominated multimodal understanding, diffusion-based models have become the cornerstone of image generation. Recently, there has been growing interest in developing unified frameworks that integrate these tasks. The emergence of GPT-4o's new capabilities exemplifies this trend, highlighting the potential for unification. However, the architectural differences between the two domains pose significant challenges. To provide a clear overview of current efforts toward unification, we present a comprehensive survey aimed at guiding future research. First, we introduce the foundational concepts and recent advancements in multimodal understanding and text-to-image generation models. Next, we review existing unified models, categorizing them into three main architectural paradigms: diffusion-based, autoregressive-based, and hybrid approaches that fuse autoregressive and diffusion mechanisms. For each category, we analyze the structural designs and innovations introduced by related works. Additionally, we compile datasets and benchmarks tailored for unified models, offering resources for future exploration. Finally, we discuss the key challenges facing this nascent field, including tokenization strategy, cross-modal attention, and data. As this area is still in its early stages, we anticipate rapid advancements and will regularly update this survey. Our goal is to inspire further research and provide a valuable reference for the community. The references associated with this survey will be available on GitHub soon.

CHATS: Combining Human-Aligned Optimization and Test-Time Sampling for Text-to-Image Generation

Feb 18, 2025

Abstract:Diffusion models have emerged as a dominant approach for text-to-image generation. Key components such as human preference alignment and classifier-free guidance play a crucial role in ensuring generation quality. However, their independent application in current text-to-image models continues to face significant challenges in achieving strong text-image alignment, high generation quality, and consistency with human aesthetic standards. In this work, we explore, for the first time, facilitating the collaboration of human preference alignment and test-time sampling to unlock the potential of text-to-image models. Consequently, we introduce CHATS (Combining Human-Aligned optimization and Test-time Sampling), a novel generative framework that separately models the preferred and dispreferred distributions and employs a proxy-prompt-based sampling strategy to utilize the useful information contained in both distributions. We observe that CHATS exhibits exceptional data efficiency, achieving strong performance with only a small, high-quality fine-tuning dataset. Extensive experiments demonstrate that CHATS surpasses traditional preference alignment methods, setting a new state of the art across various standard benchmarks.

Evaluating Image Caption via Cycle-consistent Text-to-Image Generation

Jan 08, 2025

Abstract:Evaluating image captions typically relies on reference captions, which are costly to obtain and exhibit significant diversity and subjectivity. While reference-free evaluation metrics have been proposed, most focus on cross-modal evaluation between captions and images. Recent research has revealed that a modality gap generally exists in the representations of contrastive learning-based multi-modal systems, undermining the reliability of cross-modality metrics like CLIPScore. In this paper, we propose CAMScore, a cyclic reference-free automatic evaluation metric for image captioning models. To circumvent the aforementioned modality gap, CAMScore utilizes a text-to-image model to generate images from captions and subsequently evaluates these generated images against the original images. Furthermore, to provide fine-grained information for a more comprehensive evaluation, we design a three-level evaluation framework for CAMScore that encompasses pixel-level, semantic-level, and objective-level perspectives. Extensive experimental results across multiple benchmark datasets show that CAMScore achieves a superior correlation with human judgments compared to existing reference-based and reference-free metrics, demonstrating the effectiveness of the framework.
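The cyclic idea in this abstract (caption to generated image, then image-to-image comparison) can be sketched as follows. This is a toy illustration under stated assumptions, not CAMScore itself: the text-to-image model is replaced by a hypothetical stub (`toy_text_to_image`), images are small grayscale grids, and the only comparison shown is an assumed pixel-level similarity (one minus mean absolute difference). The semantic-level and objective-level comparisons are not covered.

```python
from typing import Callable, List

Image = List[List[float]]  # grayscale pixels in [0, 1]; a simplification


def pixel_similarity(a: Image, b: Image) -> float:
    """Assumed pixel-level score: 1 minus the mean absolute pixel difference."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("images must have the same shape")
    diffs = [abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    if not diffs:
        raise ValueError("images must contain at least one pixel")
    return 1.0 - sum(diffs) / len(diffs)


def cyclic_caption_score(
    caption: str,
    original: Image,
    text_to_image: Callable[[str], Image],
    similarity: Callable[[Image, Image], float] = pixel_similarity,
) -> float:
    """Generate an image from the caption, then compare it with the original."""
    generated = text_to_image(caption)
    return similarity(generated, original)


def toy_text_to_image(caption: str) -> Image:
    """Hypothetical stub generator: 'bright' yields white, anything else black."""
    value = 1.0 if "bright" in caption else 0.0
    return [[value, value], [value, value]]


original = [[1.0, 1.0], [1.0, 1.0]]
good = cyclic_caption_score("a bright wall", original, toy_text_to_image)
bad = cyclic_caption_score("a dark wall", original, toy_text_to_image)
print(good, bad)
```

Because both inputs to the similarity function are images, the comparison stays within one modality, which is the property the abstract relies on to sidestep the modality gap.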
