Ruimao Zhang

TouchGuide: Inference-Time Steering of Visuomotor Policies via Touch Guidance

Jan 28, 2026

Abstract:Fine-grained and contact-rich manipulation remain challenging for robots, largely due to the underutilization of tactile feedback. To address this, we introduce TouchGuide, a novel cross-policy visuo-tactile fusion paradigm that fuses modalities within a low-dimensional action space. Specifically, TouchGuide operates in two stages to guide a pre-trained diffusion or flow-matching visuomotor policy at inference time. First, the policy produces a coarse, visually-plausible action using only visual inputs during early sampling. Second, a task-specific Contact Physical Model (CPM) provides tactile guidance to steer and refine the action, ensuring it aligns with realistic physical contact conditions. Trained through contrastive learning on limited expert demonstrations, the CPM provides a tactile-informed feasibility score to steer the sampling process toward refined actions that satisfy physical contact constraints. Furthermore, to facilitate TouchGuide training with high-quality and cost-effective data, we introduce TacUMI, a data collection system. TacUMI achieves a favorable trade-off between precision and affordability; by leveraging rigid fingertips, it obtains direct tactile feedback, thereby enabling the collection of reliable tactile data. Extensive experiments on five challenging contact-rich tasks, such as shoe lacing and chip handover, show that TouchGuide consistently and significantly outperforms state-of-the-art visuo-tactile policies.
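The two-stage sampling described in this abstract can be illustrated with a toy sketch. Everything below is an assumption made for illustration: `toy_policy_denoise`, `toy_feasibility_grad`, the quadratic score, and the step sizes are hypothetical stand-ins, not the TouchGuide policy or its Contact Physical Model. The sketch only shows the general pattern of running unguided steps first and then adding the gradient of a feasibility score during later steps.

```python
import numpy as np

def toy_policy_denoise(action, visual_target, step_size=0.2):
    """Hypothetical stand-in for one sampling step of a visuomotor policy:
    pull the action toward a visually plausible target."""
    return action + step_size * (visual_target - action)

def toy_feasibility_grad(action, contact_target):
    """Gradient of a toy feasibility score s(a) = -0.5 * ||a - contact_target||^2.
    A learned tactile model would replace this in practice."""
    return contact_target - action

def guided_sample(visual_target, contact_target, num_steps=20,
                  guide_from=10, guide_scale=0.3, seed=0):
    """Run vision-only steps first, then add score-gradient guidance."""
    rng = np.random.default_rng(seed)
    action = rng.normal(size=visual_target.shape)  # start from noise
    for step in range(num_steps):
        action = toy_policy_denoise(action, visual_target)
        if step >= guide_from:  # second stage: steer with the feasibility score
            action = action + guide_scale * toy_feasibility_grad(action, contact_target)
    return action

visual_target = np.array([1.0, 0.0])   # what vision alone suggests
contact_target = np.array([1.0, 0.2])  # what contact feasibility prefers
guided = guided_sample(visual_target, contact_target)
unguided = guided_sample(visual_target, contact_target, guide_scale=0.0)
```

In this toy setting the guided action settles between the two targets, closer to the contact-feasible one, while the unguided action converges to the visual target.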

Advances and Innovations in the Multi-Agent Robotic System (MARS) Challenge

Jan 26, 2026

Abstract:Recent advancements in multimodal large language models and vision-language-action models have significantly driven progress in Embodied AI. As the field transitions toward more complex task scenarios, multi-agent system frameworks are becoming essential for achieving scalable, efficient, and collaborative solutions. This shift is fueled by three primary factors: increasing agent capabilities, enhancing system efficiency through task delegation, and enabling advanced human-agent interactions. To address the challenges posed by multi-agent collaboration, we propose the Multi-Agent Robotic System (MARS) Challenge, held at the NeurIPS 2025 Workshop on SpaVLE. The competition focuses on two critical areas: planning and control, where participants explore multi-agent embodied planning using vision-language models (VLMs) to coordinate tasks and policy execution to perform robotic manipulation in dynamic environments. By evaluating solutions submitted by participants, the challenge provides valuable insights into the design and coordination of embodied multi-agent systems, contributing to the future development of advanced collaborative AI systems.

CDP: Towards Robust Autoregressive Visuomotor Policy Learning via Causal Diffusion

Jun 17, 2025

Abstract:Diffusion Policy (DP) enables robots to learn complex behaviors by imitating expert demonstrations through action diffusion. However, in practical applications, hardware limitations often degrade data quality, while real-time constraints restrict model inference to instantaneous state and scene observations. These limitations seriously reduce the efficacy of learning from expert demonstrations, resulting in failures in object localization, grasp planning, and long-horizon task execution. To address these challenges, we propose Causal Diffusion Policy (CDP), a novel transformer-based diffusion model that enhances action prediction by conditioning on historical action sequences, thereby enabling more coherent and context-aware visuomotor policy learning. To further mitigate the computational cost associated with autoregressive inference, a caching mechanism is also introduced to store attention key-value pairs from previous timesteps, substantially reducing redundant computations during execution. Extensive experiments in both simulated and real-world environments, spanning diverse 2D and 3D manipulation tasks, demonstrate that CDP uniquely leverages historical action sequences to achieve significantly higher accuracy than existing methods. Moreover, even when faced with degraded input observation quality, CDP maintains remarkable precision by reasoning through temporal continuity, which highlights its practical robustness for robotic control under realistic, imperfect conditions.
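The key-value caching idea mentioned in this abstract can be sketched generically. The code below is a minimal single-head example under stated assumptions: the `KVCache` class, the weight matrices, and the dimensions are hypothetical and are not taken from the CDP implementation. It only demonstrates that attending over cached keys and values at each new timestep reproduces full causal attention without recomputing earlier projections.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax over the last axis."""
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def full_causal_attention(X, Wq, Wk, Wv):
    """Reference: recompute attention over the whole sequence with a causal mask."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    future = np.triu(np.ones_like(scores, dtype=bool), k=1)  # positions after t
    scores = np.where(future, -np.inf, scores)
    return softmax(scores) @ V

class KVCache:
    """Hypothetical cache: stores one key and one value vector per past timestep."""
    def __init__(self):
        self.keys, self.values = [], []

    def step(self, x_t, Wq, Wk, Wv):
        # Project only the new token; earlier keys/values are reused as-is.
        self.keys.append(x_t @ Wk)
        self.values.append(x_t @ Wv)
        K, V = np.stack(self.keys), np.stack(self.values)
        q = x_t @ Wq
        weights = softmax(q @ K.T / np.sqrt(K.shape[-1]))
        return weights @ V

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 4))                       # 5 timesteps, 4 features
Wq, Wk, Wv = (rng.normal(size=(4, 4)) for _ in range(3))

cache = KVCache()
incremental = np.stack([cache.step(x_t, Wq, Wk, Wv) for x_t in X])
reference = full_causal_attention(X, Wq, Wk, Wv)
```

Each cached step projects one token instead of the full history, which is where the savings during autoregressive execution come from.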

WeThink: Toward General-purpose Vision-Language Reasoning via Reinforcement Learning

Jun 09, 2025

Abstract:Building on the success of text-based reasoning models like DeepSeek-R1, extending these capabilities to multimodal reasoning holds great promise. While recent works have attempted to adapt DeepSeek-R1-style reinforcement learning (RL) training paradigms to multimodal large language models (MLLM), focusing on domain-specific tasks like math and visual perception, a critical question remains: How can we achieve general-purpose visual-language reasoning through RL? To address this challenge, we make three key efforts: (1) A novel Scalable Multimodal QA Synthesis pipeline that autonomously generates context-aware, reasoning-centric question-answer (QA) pairs directly from the given images. (2) The open-source WeThink dataset containing over 120K multimodal QA pairs with annotated reasoning paths, curated from 18 diverse dataset sources and covering various question domains. (3) A comprehensive exploration of RL on our dataset, incorporating a hybrid reward mechanism that combines rule-based verification with model-based assessment to optimize RL training efficiency across various task domains. Across 14 diverse MLLM benchmarks, we demonstrate that our WeThink dataset significantly enhances performance, from mathematical reasoning to diverse general multimodal tasks. Moreover, we show that our automated data pipeline can continuously increase data diversity to further improve model performance.
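One way to picture a hybrid reward that mixes rule-based verification with model-based assessment is sketched below. The abstract does not say how the two signals are combined, so the routing used here (exact match when an answer is verifiable, a judge score otherwise) is an assumption, and `toy_judge` is a hypothetical token-overlap stand-in for a learned reward model.

```python
def rule_based_reward(prediction: str, reference: str) -> float:
    """Exact-match check after light normalization (suits verifiable answers)."""
    return 1.0 if prediction.strip().lower() == reference.strip().lower() else 0.0

def toy_judge(prediction: str, reference: str) -> float:
    """Hypothetical stand-in for a model-based judge: Jaccard token overlap."""
    pred, ref = set(prediction.lower().split()), set(reference.lower().split())
    if not pred and not ref:
        return 1.0
    return len(pred & ref) / len(pred | ref)

def hybrid_reward(prediction, reference, verifiable, judge=toy_judge):
    """Assumed routing: rule check for verifiable answers, judge score otherwise."""
    if verifiable:
        return rule_based_reward(prediction, reference)
    return float(min(1.0, max(0.0, judge(prediction, reference))))

math_reward = hybrid_reward(" 42 ", "42", verifiable=True)
open_reward = hybrid_reward("a red car", "a red truck", verifiable=False)
```

Routing by verifiability keeps the cheap, exact check wherever it applies and reserves the softer judge score for open-ended answers.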

DFVO: Learning Darkness-free Visible and Infrared Image Disentanglement and Fusion All at Once

May 07, 2025

Abstract:Visible and infrared image fusion is one of the most crucial tasks in the field of image fusion, aiming to generate fused images with clear structural information and high-quality texture features for high-level vision tasks. However, when faced with severe illumination degradation in visible images, the fusion results of existing image fusion methods often exhibit blurry and dim visual effects, posing major challenges for autonomous driving. To this end, a Darkness-Free network is proposed to handle Visible and infrared image disentanglement and fusion all at Once (DFVO), which employs a cascaded multi-task approach to replace the traditional two-stage cascaded training (enhancement and fusion), addressing the issue of information entropy loss caused by hierarchical data transmission. Specifically, we construct a latent-common feature extractor (LCFE) to obtain latent features for the cascaded tasks strategy. Firstly, a details-extraction module (DEM) is devised to acquire high-frequency semantic information. Secondly, we design a hyper cross-attention module (HCAM) to extract low-frequency information and preserve texture features from source images. Finally, a relevant loss function is designed to guide the holistic network learning, thereby achieving better image fusion. Extensive experiments demonstrate that our proposed approach outperforms state-of-the-art alternatives in terms of qualitative and quantitative evaluations. Particularly, DFVO can generate clearer, more informative, and more evenly illuminated fusion results in dark environments, achieving the best performance on the LLVIP dataset with 63.258 dB PSNR and 0.724 CC, providing more effective information for high-level vision tasks. Our code is publicly accessible at https://github.com/DaVin-Qi530/DFVO.

RoboFactory: Exploring Embodied Agent Collaboration with Compositional Constraints

Mar 20, 2025

Abstract:Designing effective embodied multi-agent systems is critical for solving complex real-world tasks across domains. Due to the complexity of multi-agent embodied systems, existing methods fail to automatically generate safe and efficient training data for such systems. To this end, we propose the concept of compositional constraints for embodied multi-agent systems, addressing the challenges arising from collaboration among embodied agents. We design various interfaces tailored to different types of constraints, enabling seamless interaction with the physical world. Leveraging compositional constraints and specifically designed interfaces, we develop an automated data collection framework for embodied multi-agent systems and introduce the first benchmark for embodied multi-agent manipulation, RoboFactory. Based on the RoboFactory benchmark, we adapt and evaluate imitation learning methods and analyze their performance on agent tasks of varying difficulty. Furthermore, we explore the architectures and training strategies for multi-agent imitation learning, aiming to build safe and efficient embodied multi-agent systems.

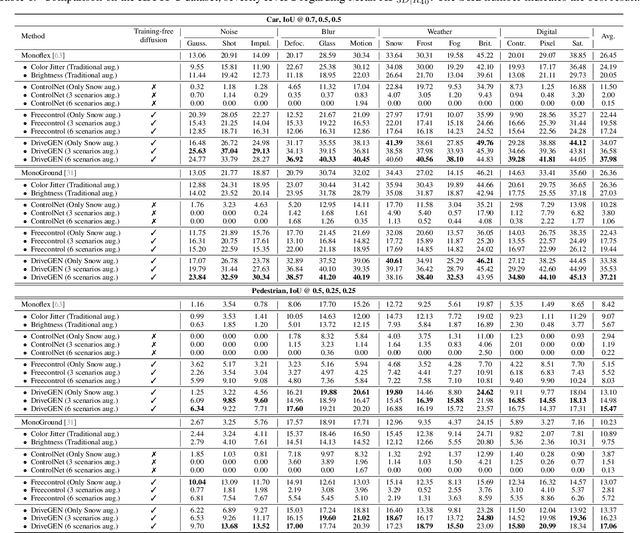

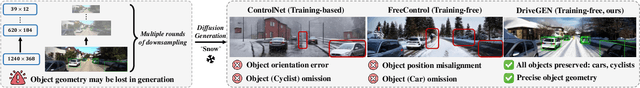

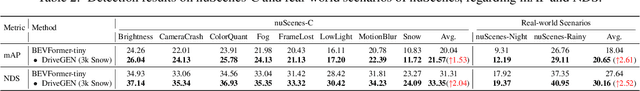

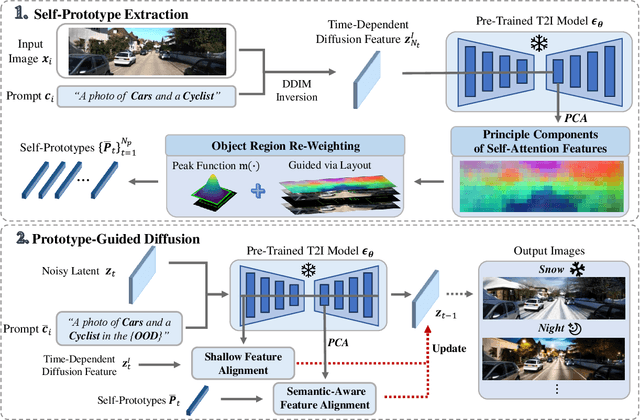

DriveGEN: Generalized and Robust 3D Detection in Driving via Controllable Text-to-Image Diffusion Generation

Mar 14, 2025

Abstract:In autonomous driving, vision-centric 3D detection aims to identify 3D objects from images. However, high data collection costs and diverse real-world scenarios limit the scale of training data. Once distribution shifts occur between training and test data, existing methods often suffer from performance degradation, known as Out-of-Distribution (OOD) problems. To address this, controllable Text-to-Image (T2I) diffusion offers a potential solution for training data enhancement, which is required to generate diverse OOD scenarios with precise 3D object geometry. Nevertheless, existing controllable T2I approaches are restricted by the limited scale of training data or struggle to preserve all annotated 3D objects. In this paper, we present DriveGEN, a method designed to improve the robustness of 3D detectors in Driving via Training-Free Controllable Text-to-Image Diffusion Generation. Without extra diffusion model training, DriveGEN consistently preserves objects with precise 3D geometry across diverse OOD generations, consisting of 2 stages: 1) Self-Prototype Extraction: We empirically find that self-attention features are semantic-aware but require accurate region selection for 3D objects. Thus, we extract precise object features via layouts to capture 3D object geometry, termed self-prototypes. 2) Prototype-Guided Diffusion: To preserve objects across various OOD scenarios, we perform semantic-aware feature alignment and shallow feature alignment during denoising. Extensive experiments demonstrate the effectiveness of DriveGEN in improving 3D detection. The code is available at https://github.com/Hongbin98/DriveGEN.

Unlock the Power of Unlabeled Data in Language Driving Model

Mar 13, 2025

Abstract:Recent Vision-based Large Language Models (VisionLLMs) for autonomous driving have seen rapid advancements. However, such progress depends heavily on large-scale, high-quality annotated data, which is costly and labor-intensive to obtain. To address this issue, we propose unlocking the value of abundant yet unlabeled data to improve the language-driving model in a semi-supervised learning manner. Specifically, we first introduce a series of template-based prompts to extract scene information, generating questions that create pseudo-answers for the unlabeled data based on a model trained with limited labeled data. Next, we propose a Self-Consistency Refinement method to improve the quality of these pseudo-annotations, which are later used for further training. By utilizing a pre-trained VisionLLM (e.g., InternVL), we build a strong Language Driving Model (LDM) for driving scene question-answering, outperforming previous state-of-the-art methods. Extensive experiments on the DriveLM benchmark show that our approach performs well with just 5% labeled data, achieving competitive performance against models trained with full datasets. In particular, our LDM achieves 44.85% performance with limited labeled data, increasing to 54.27% when using unlabeled data, while models trained with full datasets reach 60.68% on the DriveLM benchmark.

Semantic-Supervised Spatial-Temporal Fusion for LiDAR-based 3D Object Detection

Mar 13, 2025

Abstract:LiDAR-based 3D object detection presents significant challenges due to the inherent sparsity of LiDAR points. A common solution involves long-term temporal LiDAR data to densify the inputs. However, efficiently leveraging spatial-temporal information remains an open problem. In this paper, we propose a novel Semantic-Supervised Spatial-Temporal Fusion (ST-Fusion) method, which introduces a novel fusion module to relieve the spatial misalignment caused by the object motion over time and a feature-level semantic supervision to sufficiently unlock the capacity of the proposed fusion module. Specifically, the ST-Fusion consists of a Spatial Aggregation (SA) module and a Temporal Merging (TM) module. The SA module employs a convolutional layer with progressively expanding receptive fields to aggregate the object features from the local regions to alleviate the spatial misalignment, while the TM module dynamically extracts object features from the preceding frames based on the attention mechanism for a comprehensive sequential presentation. Besides, in the semantic supervision, we propose a Semantic Injection method to enrich the sparse LiDAR data by injecting point-wise semantic labels, using the enriched data to train a teacher model and to provide a reconstruction target at the feature level supervised by the proposed object-aware loss. Extensive experiments on various LiDAR-based detectors demonstrate the effectiveness and universality of our proposal, yielding an improvement of approximately +2.8% in NDS based on the nuScenes benchmark.

NavigateDiff: Visual Predictors are Zero-Shot Navigation Assistants

Feb 19, 2025

Abstract:Navigating unfamiliar environments presents significant challenges for household robots, requiring the ability to recognize and reason about novel decoration and layout. Existing reinforcement learning methods cannot be directly transferred to new environments, as they typically rely on extensive mapping and exploration, which makes them time-consuming and inefficient. To address these challenges, we try to transfer the logical knowledge and the generalization ability of pre-trained foundation models to zero-shot navigation. By integrating a large vision-language model with a diffusion network, our approach, named NavigateDiff, constructs a visual predictor that continuously predicts the agent's potential observations in the next step, which helps robots generate robust actions. Furthermore, to adapt to the temporal nature of navigation, we introduce temporal historical information to ensure that the predicted image is aligned with the navigation scene. We then carefully design an information fusion framework that embeds the predicted future frames as guidance into a goal-reaching policy to solve downstream image navigation tasks. This approach enhances navigation control and generalization across both simulated and real-world environments. Through extensive experimentation, we demonstrate the robustness and versatility of our method, showcasing its potential to improve the efficiency and effectiveness of robotic navigation in diverse settings.
