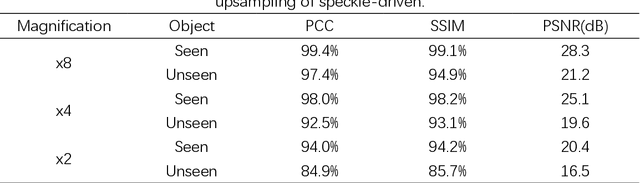

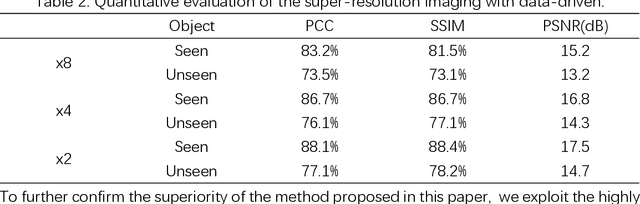

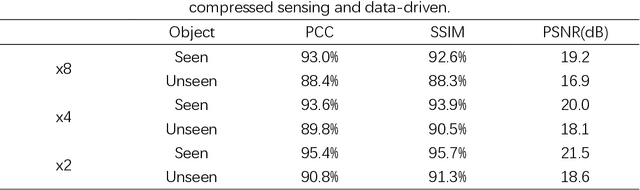

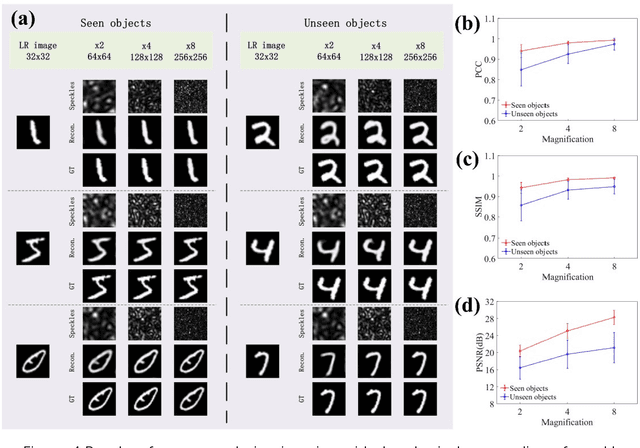

Super-resolution imaging through a multimode fiber: the physical upsampling of speckle-driven

Jul 11, 2023 · Chuncheng Zhang, Tingting Liu, Zhihua Xie, Yu Wang, Tong Liu, Qian Chen, Xiubao Sui

Following recent advancements in multimode fiber (MMF), miniaturization of imaging endoscopes has proven crucial for minimally invasive surgery in vivo. Recent progress enabled by super-resolution imaging methods with a data-driven deep learning (DL) framework has balanced the relationship between the core size and resolution. However, most DL approaches pay little attention to the physical properties of the speckle, which are crucial for reconciling the magnification of super-resolution imaging with reconstruction quality. In this paper, we find that the interferometric process of speckle formation is an essential basis for creating DL models for super-resolution imaging. It physically realizes the upsampling of low-resolution (LR) images and enhances the perceptual capabilities of the models. This finding experimentally validates the role played by speckle-driven physical upsampling, which effectively complements the information missing from purely data-driven approaches. Experimentally, we overcome the poor reconstruction quality at high magnification by feeding the model a speckle of the same size as the high-resolution (HR) image. The guidance our research offers for endoscopic imaging may accelerate the further development of minimally invasive surgery.

FedBone: Towards Large-Scale Federated Multi-Task Learning

Jun 30, 2023 · Yiqiang Chen, Teng Zhang, Xinlong Jiang, Qian Chen, Chenlong Gao, Wuliang Huang

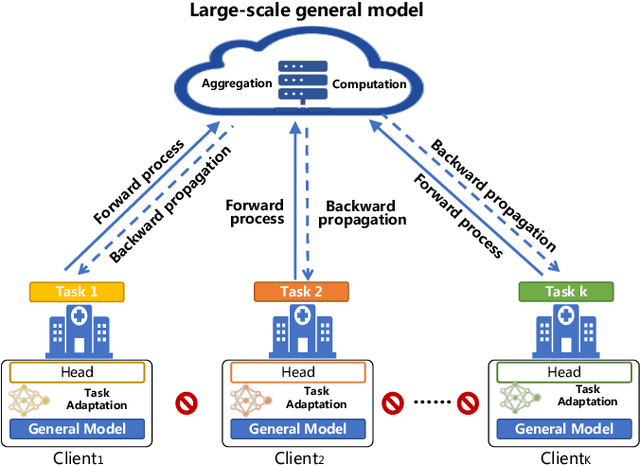

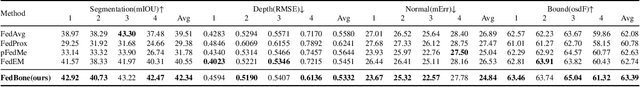

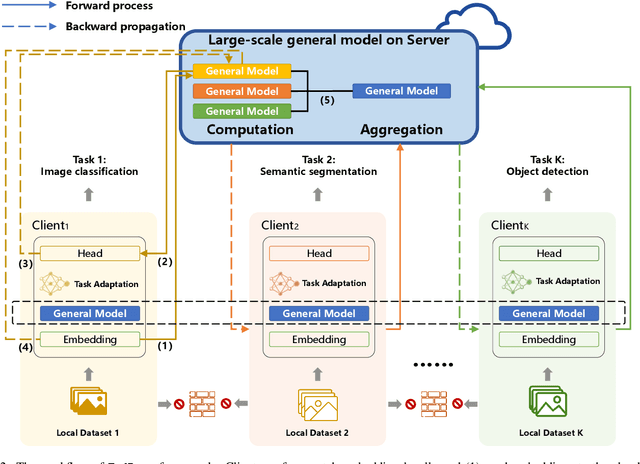

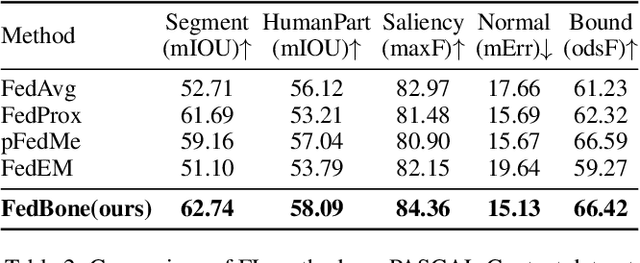

Heterogeneous federated multi-task learning (HFMTL) is a federated learning technique that combines heterogeneous tasks of different clients to achieve more accurate, comprehensive predictions. In real-world applications, visual and natural language tasks typically require large-scale models to extract high-level abstract features. However, large-scale models cannot be directly applied to existing federated multi-task learning methods. Existing HFMTL methods also disregard the impact of gradient conflicts on multi-task optimization during the federated aggregation process. In this work, we propose an innovative framework called FedBone, which enables the construction of large-scale models with better generalization from the perspective of server-client split learning and gradient projection. We split the entire model into two components: a large-scale general model (referred to as the general model) on the cloud server and multiple task-specific models (referred to as the client model) on edge clients, solving the problem of insufficient computing power on edge clients. The conflicting gradient projection technique is used to enhance the generalization of the large-scale general model between different tasks. The proposed framework is evaluated on two benchmark datasets and a real ophthalmic dataset. Comprehensive results demonstrate that FedBone efficiently adapts to heterogeneous local tasks of each client and outperforms existing federated learning algorithms in most dense prediction and classification tasks with off-the-shelf computational resources on the client side.
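The abstract does not spell out the projection rule, so the following is only a minimal sketch of one common way to resolve conflicting task gradients (a PCGrad-style projection), not FedBone's actual procedure. The function names `project_conflicts` and `aggregate` are hypothetical, gradients are plain Python lists, and the tasks are visited in a fixed order for reproducibility. When two task gradients have a negative inner product, the conflicting component is removed by projecting one gradient onto the normal plane of the other before averaging.

```python
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def project_conflicts(grads: List[Vector]) -> List[Vector]:
    """Return copies of the task gradients with pairwise conflicts removed.

    Assumption: PCGrad-style rule. If g_i . g_j < 0, replace g_i with
    g_i - (g_i . g_j / ||g_j||^2) * g_j, using the original g_j.
    """
    projected = [list(g) for g in grads]
    for i, g_i in enumerate(projected):
        for j, g_j in enumerate(grads):
            if i == j:
                continue
            inner = dot(g_i, g_j)
            norm_sq = dot(g_j, g_j)
            if inner < 0 and norm_sq > 0:
                scale = inner / norm_sq
                # Update g_i in place so later comparisons see the projection.
                for k in range(len(g_i)):
                    g_i[k] -= scale * g_j[k]
    return projected


def aggregate(grads: List[Vector]) -> Vector:
    """Average the de-conflicted task gradients into one update direction."""
    projected = project_conflicts(grads)
    return [sum(column) / len(projected) for column in zip(*projected)]


if __name__ == "__main__":
    task_grads = [[1.0, 0.0], [-1.0, 1.0]]  # these two gradients conflict
    print(project_conflicts(task_grads))
    print(aggregate(task_grads))
```

Gradients that do not conflict pass through unchanged, so in that case the sketch reduces to plain averaging.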

3D-Speaker: A Large-Scale Multi-Device, Multi-Distance, and Multi-Dialect Corpus for Speech Representation Disentanglement

Jun 28, 2023 · Siqi Zheng, Luyao Cheng, Yafeng Chen, Hui Wang, Qian Chen

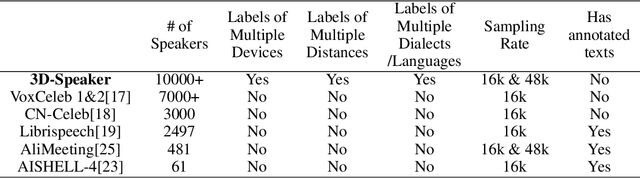

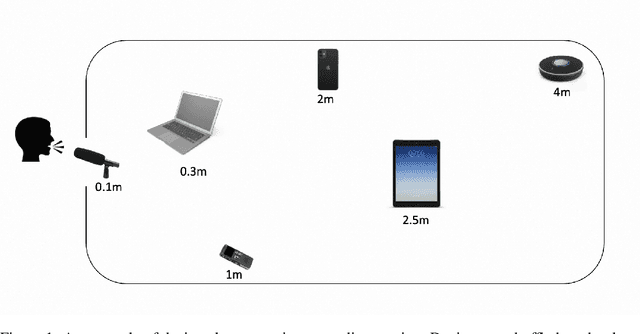

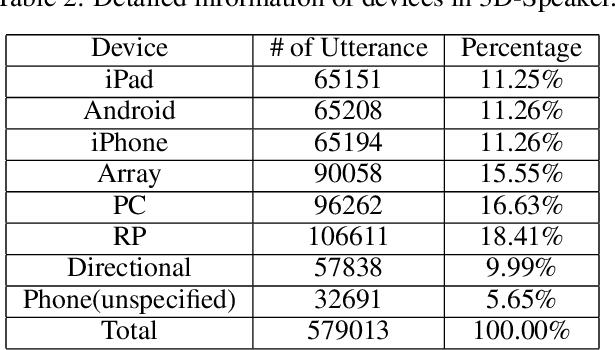

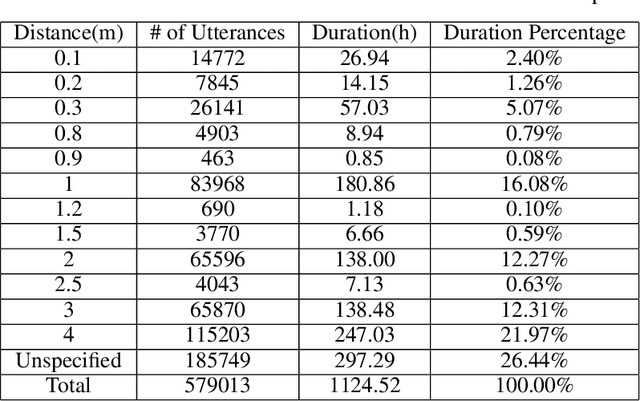

Disentangling uncorrelated information in speech utterances is a crucial research topic within the speech community. Different speech-related tasks focus on extracting distinct speech representations while minimizing the effects of other uncorrelated information. We present a large-scale speech corpus to facilitate research on speech representation disentanglement. 3D-Speaker contains over 10,000 speakers, each of whom is simultaneously recorded by multiple Devices located at different Distances, and some speakers speak multiple Dialects. The controlled combinations of multi-dimensional audio data yield a matrix of a diverse blend of speech representation entanglement, thereby motivating intriguing methods to untangle them. The multi-domain nature of 3D-Speaker also makes it a suitable resource for evaluating large universal speech models and experimenting with methods of out-of-domain learning and self-supervised learning. https://3dspeaker.github.io/

Exploiting Correlations Between Contexts and Definitions with Multiple Definition Modeling

May 24, 2023 · Linhan Zhang, Qian Chen, Wen Wang, Yuxin Jiang, Bing Li, Wei Wang, Xin Cao

Definition modeling is an important task in advanced natural language applications such as understanding and conversation. Since its introduction, it has focused on generating one definition for a target word or phrase in a given context, which we refer to as Single Definition Modeling (SDM). However, this approach does not adequately model the correlations and patterns among different contexts and definitions of words. In addition, the creation of a training dataset for SDM requires significant human expertise and effort. In this paper, we carefully design a new task called Multiple Definition Modeling (MDM) that pools together all contexts and definitions of target words. We demonstrate the ease of creating a model as well as multiple training sets automatically. In the experiments, we demonstrate and analyze the benefits of MDM, including improving SDM's performance by using MDM as the pretraining task and its comparable performance in the zero-shot setting.

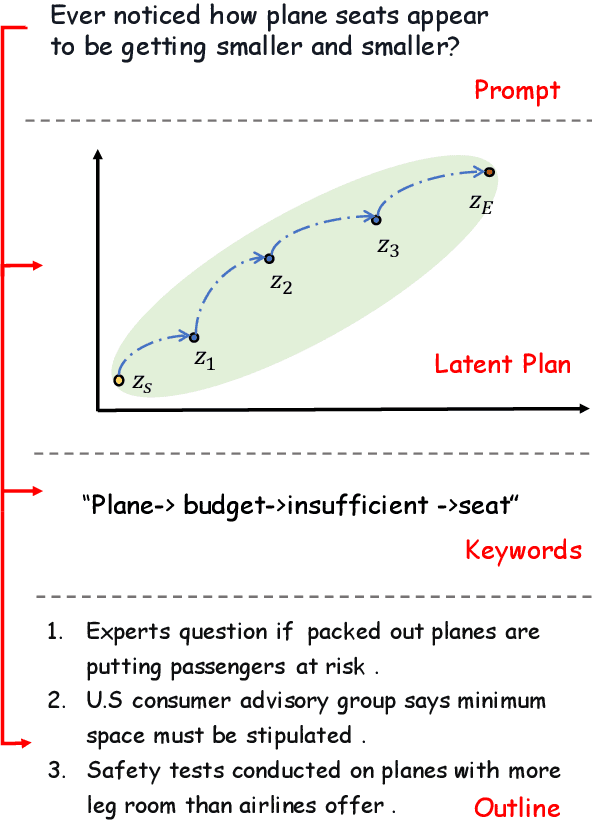

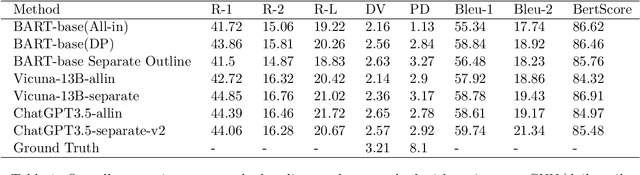

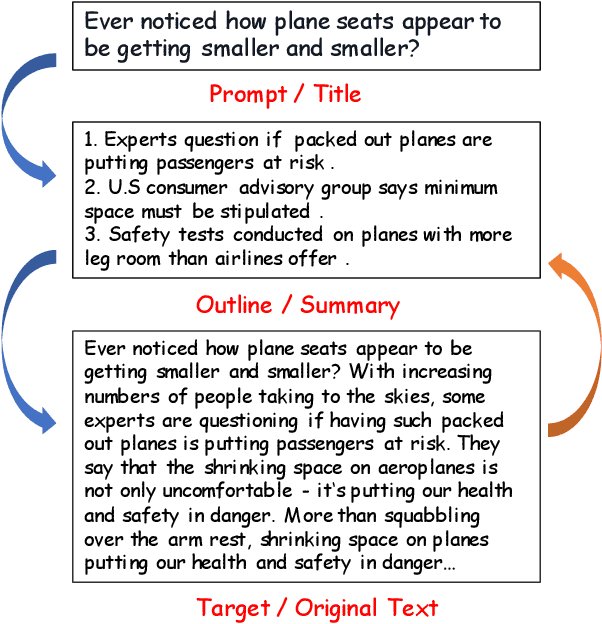

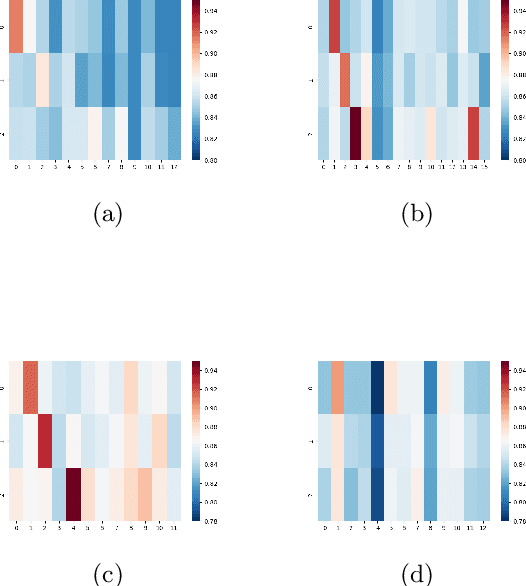

Enhancing Generation through Summarization Duality and Explicit Outline Control

May 23, 2023 · Yunzhe Li, Qian Chen, Weixiang Yan, Wen Wang, Qinglin Zhang, Hari Sundaram

Automatic open-ended long text generation poses significant challenges due to semantic incoherence and plot implausibility. Previous works usually alleviate this problem through outlines in the form of short phrases or abstractive signals by designing unsupervised tasks, which tend to be unstable and weakly interpretable. Assuming that a summary serves as a mature outline, we introduce a two-stage, summary-enhanced outline supervised generation framework. This framework leverages the dual characteristics of the summarization task to improve outline prediction, resulting in more explicit and plausible outlines. Furthermore, we identify an underutilization issue in outline-based generation with both standard pretrained language models (e.g., GPT-2, BART) and large language models (e.g., Vicuna, ChatGPT). To address this, we propose a novel explicit outline control method for more effective utilization of generated outlines.

BA-SOT: Boundary-Aware Serialized Output Training for Multi-Talker ASR

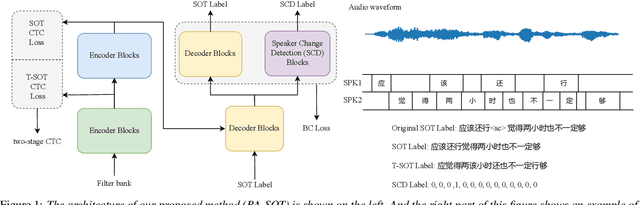

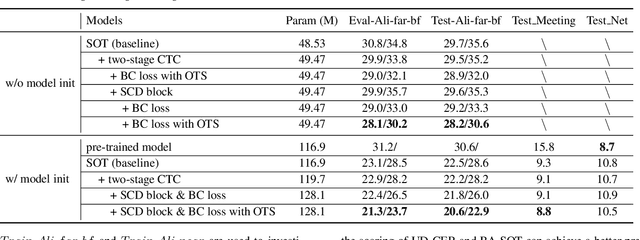

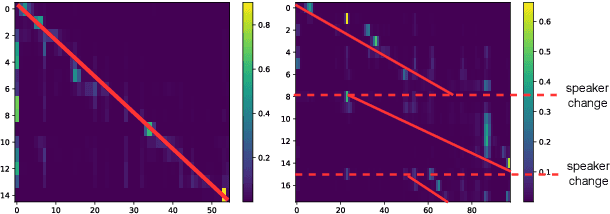

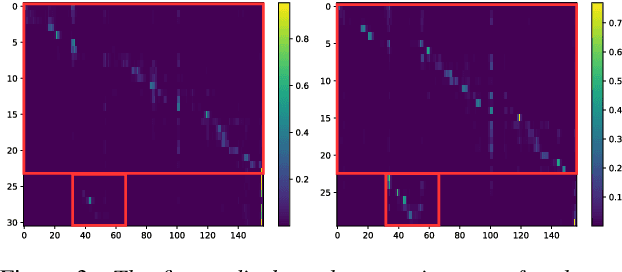

May 23, 2023 · Yuhao Liang, Fan Yu, Yangze Li, Pengcheng Guo, Shiliang Zhang, Qian Chen, Lei Xie

The recently proposed serialized output training (SOT) simplifies multi-talker automatic speech recognition (ASR) by generating speaker transcriptions separated by a special token. However, frequent speaker changes can make speaker change prediction difficult. To address this, we propose boundary-aware serialized output training (BA-SOT), which explicitly incorporates boundary knowledge into the decoder via a speaker change detection task and boundary constraint loss. We also introduce a two-stage connectionist temporal classification (CTC) strategy that incorporates token-level SOT CTC to restore temporal context information. Besides the typical character error rate (CER), we introduce utterance-dependent character error rate (UD-CER) to further measure the precision of speaker change prediction. Compared to the original SOT, BA-SOT reduces CER/UD-CER by 5.1%/14.0%, and leveraging a pre-trained ASR model for BA-SOT model initialization further reduces CER/UD-CER by 8.4%/19.9%.
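For readers unfamiliar with the metric, here is a minimal sketch of the standard character error rate: the character-level edit distance between reference and hypothesis, divided by the reference length. It does not implement UD-CER, which the abstract does not define. The helper `relative_reduction` is a hypothetical illustration of how a relative error reduction is computed; the abstract does not state whether its reported reductions are relative or absolute.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance (substitutions, insertions, deletions) over characters."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character error rate: edit distance normalized by reference length."""
    if not ref:
        raise ValueError("reference must be non-empty")
    return edit_distance(ref, hyp) / len(ref)


def relative_reduction(baseline: float, improved: float) -> float:
    """Fraction by which `improved` lowers the `baseline` error rate."""
    return (baseline - improved) / baseline


if __name__ == "__main__":
    print(cer("abcd", "abxd"))
    print(relative_reduction(0.20, 0.15))
```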

Exploring Speaker-Related Information in Spoken Language Understanding for Better Speaker Diarization

May 22, 2023 · Luyao Cheng, Siqi Zheng, Zhang Qinglin, Hui Wang, Yafeng Chen, Qian Chen

Speaker diarization (SD) is a classic task in speech processing and is crucial in multi-party scenarios such as meetings and conversations. Current mainstream speaker diarization approaches consider acoustic information only, which results in performance degradation when encountering adverse acoustic conditions. In this paper, we propose methods to extract speaker-related information from semantic content in multi-party meetings, which, as we will show, can further benefit speaker diarization. We introduce two sub-tasks, Dialogue Detection and Speaker-Turn Detection, in which we effectively extract speaker information from conversational semantics. We also propose a simple yet effective algorithm to jointly model acoustic and semantic information and obtain speaker-identified texts. Experiments on both AISHELL-4 and AliMeeting datasets show that our method achieves consistent improvements over acoustic-only speaker diarization systems.

An Enhanced Res2Net with Local and Global Feature Fusion for Speaker Verification

May 22, 2023 · Yafeng Chen, Siqi Zheng, Hui Wang, Luyao Cheng, Qian Chen, Jiajun Qi

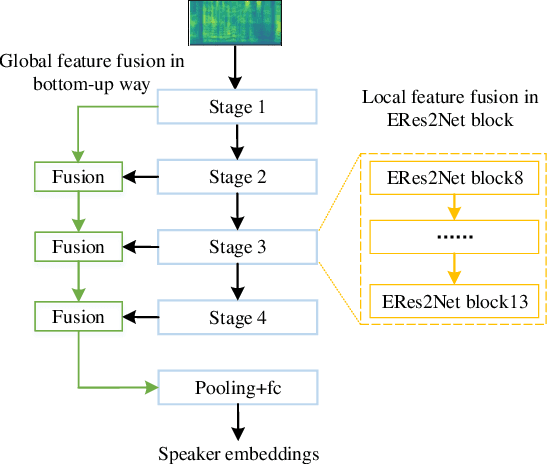

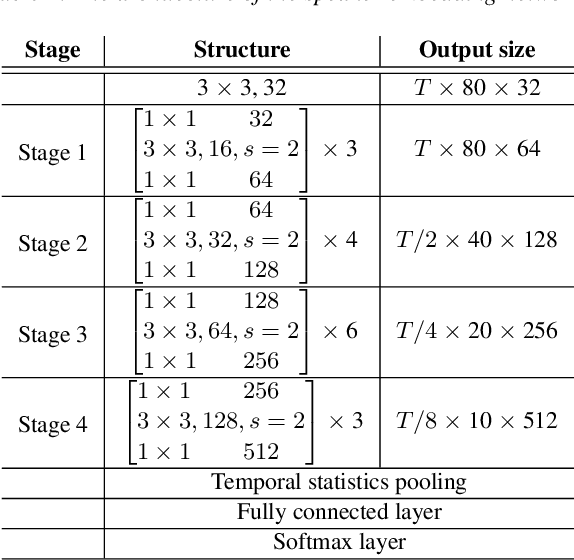

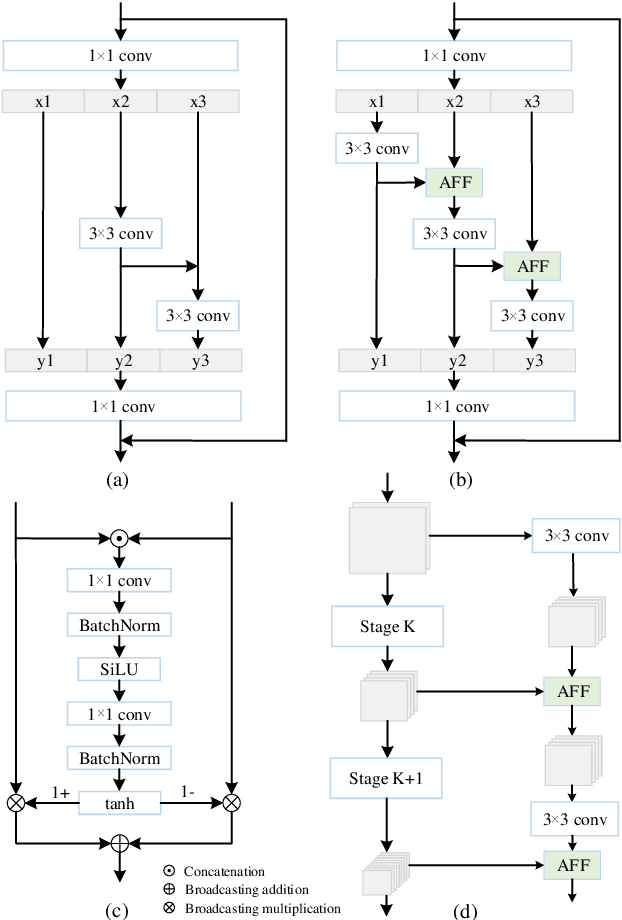

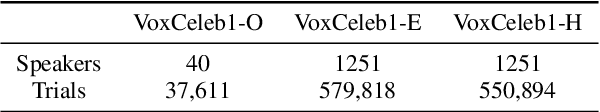

Effective fusion of multi-scale features is crucial for improving speaker verification performance. However, most existing methods aggregate multi-scale features in a layer-wise manner via simple operations, such as summation or concatenation. This paper proposes a novel architecture called Enhanced Res2Net (ERes2Net), which incorporates both local and global feature fusion techniques to improve performance. The local feature fusion (LFF) fuses the features within a single residual block to extract the local signal. The global feature fusion (GFF) takes acoustic features of different scales as input to aggregate the global signal. To facilitate effective feature fusion in both LFF and GFF, an attentional feature fusion module is employed in the ERes2Net architecture, replacing summation or concatenation operations. A range of experiments conducted on the VoxCeleb datasets demonstrate the superiority of ERes2Net in speaker verification.

CASA-ASR: Context-Aware Speaker-Attributed ASR

May 21, 2023 · Mohan Shi, Zhihao Du, Qian Chen, Fan Yu, Yangze Li, Shiliang Zhang, Jie Zhang, Li-Rong Dai

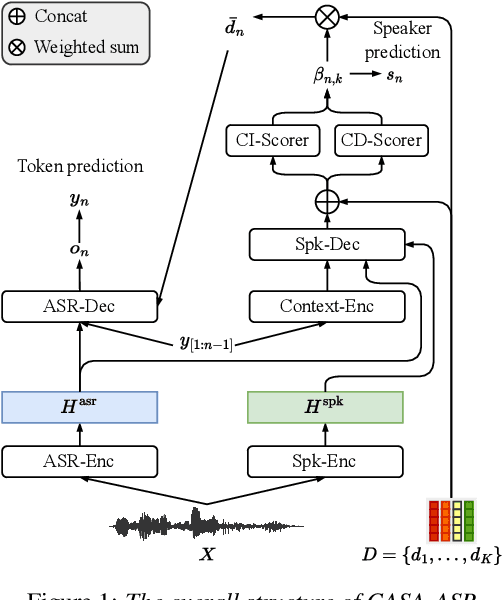

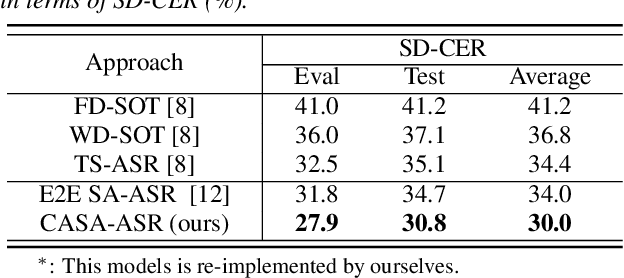

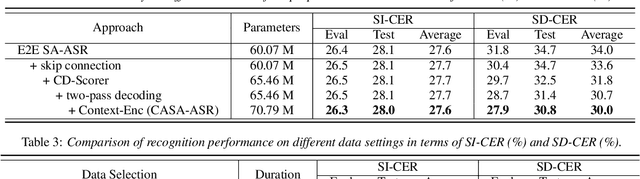

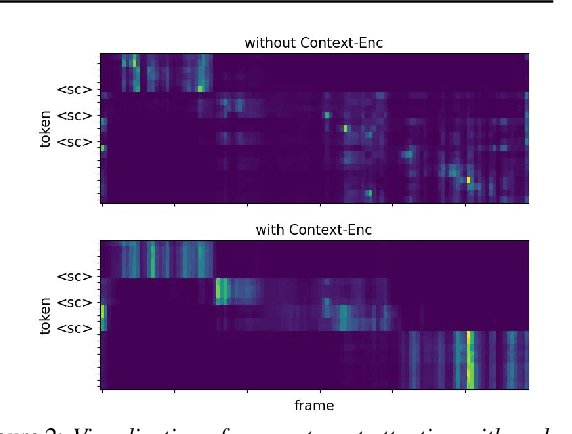

Recently, speaker-attributed automatic speech recognition (SA-ASR), which aims at answering the question "who spoke what", has attracted wide attention. Different from modular systems, end-to-end (E2E) SA-ASR minimizes the speaker-dependent recognition errors directly and shows promising applicability. In this paper, we propose a context-aware SA-ASR (CASA-ASR) model by enhancing the contextual modeling ability of E2E SA-ASR. Specifically, in CASA-ASR, a contextual text encoder is used to aggregate the semantic information of the whole utterance, and a context-dependent scorer is employed to model the speaker discriminability by contrasting with speakers in the context. In addition, a two-pass decoding strategy is proposed to fully leverage the contextual modeling ability, resulting in better recognition performance. Experimental results on the AliMeeting corpus show that the proposed CASA-ASR model outperforms the original E2E SA-ASR system with a relative improvement of 11.76% in terms of speaker-dependent character error rate.

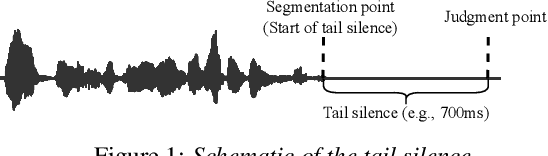

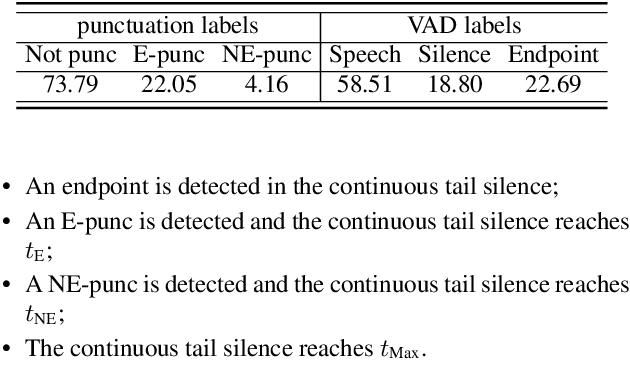

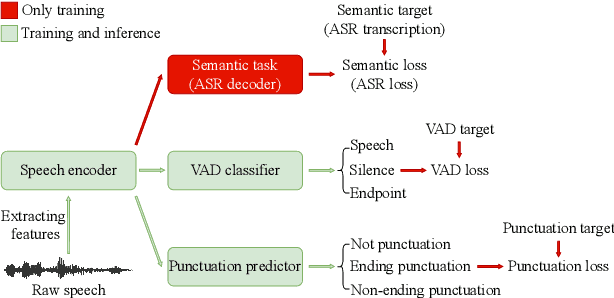

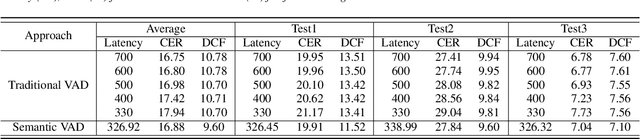

Semantic VAD: Low-Latency Voice Activity Detection for Speech Interaction

May 21, 2023 · Mohan Shi, Yuchun Shu, Lingyun Zuo, Qian Chen, Shiliang Zhang, Jie Zhang, Li-Rong Dai

For speech interaction, voice activity detection (VAD) is often used as a front-end. However, traditional VAD algorithms usually need to wait for a continuous tail silence to reach a preset maximum duration before segmentation, resulting in a large latency that affects user experience. In this paper, we propose a novel semantic VAD for low-latency segmentation. Different from existing methods, a frame-level punctuation prediction task is added to the semantic VAD, and the artificial endpoint is included in the classification category in addition to the often-used speech presence and absence. To enhance the semantic information of the model, we also incorporate an automatic speech recognition (ASR) related semantic loss. Evaluations on an internal dataset show that the proposed method can reduce the average latency by 53.3% without significant deterioration of character error rate in the back-end ASR compared to the traditional VAD approach.
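The latency problem of the traditional approach can be illustrated with a minimal sketch of tail-silence endpointing. This shows only the baseline behavior described in the abstract, not the proposed semantic VAD. The function name `tail_silence_endpoint`, the boolean per-frame speech decisions, and the `max_tail_frames` threshold are assumptions made for illustration.

```python
from typing import Optional, Sequence


def tail_silence_endpoint(frames: Sequence[bool], max_tail_frames: int) -> Optional[int]:
    """Return the frame index at which a segment endpoint is declared.

    `frames[i]` is True when frame i is classified as speech. The endpoint
    fires only after `max_tail_frames` consecutive non-speech frames follow
    some speech, so the decision always lags the true end of speech by
    `max_tail_frames` frames. Returns None if no endpoint is reached.
    """
    seen_speech = False
    silence_run = 0
    for idx, is_speech in enumerate(frames):
        if is_speech:
            seen_speech = True
            silence_run = 0  # any speech frame resets the tail-silence counter
        elif seen_speech:
            silence_run += 1
            if silence_run >= max_tail_frames:
                return idx
    return None


if __name__ == "__main__":
    # Speech ends at frame 2; the endpoint is declared three frames later.
    decisions = [False, True, True, False, False, False, False]
    print(tail_silence_endpoint(decisions, max_tail_frames=3))
```

In this sketch the delay between the last speech frame and the declared endpoint equals the preset tail length, which is the latency the abstract says the semantic VAD is designed to reduce.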
