School of Optoelectronic Science and Engineering and Collaborative Innovation Center of Suzhou Nano Science and Technology, Soochow University, Suzhou 215006, China; Key Lab of Advanced Optical Manufacturing Technologies of Jiangsu Province and Key Lab of Modern Optical Technologies of Education Ministry of China, Soochow University, Suzhou 215006, China; Key Laboratory of Radar Imaging and Microwave Photonics, Ministry of Education, Nanjing University of Aeronautics and Astronautics, Nanjing 210016, China

Commonsense-Focused Dialogues for Response Generation: An Empirical Study

Sep 21, 2021 · Pei Zhou, Karthik Gopalakrishnan, Behnam Hedayatnia, Seokhwan Kim, Jay Pujara, Xiang Ren, Yang Liu, Dilek Hakkani-Tur

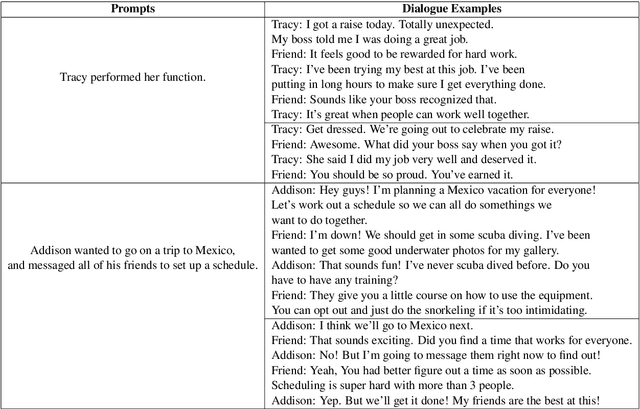

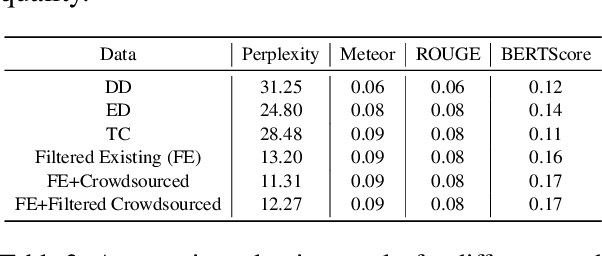

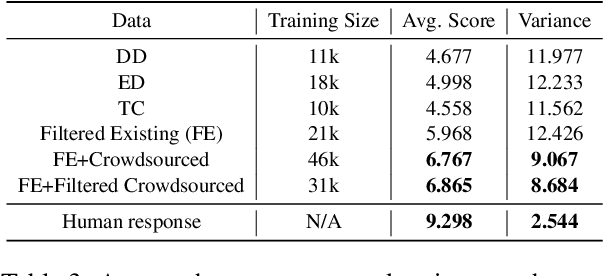

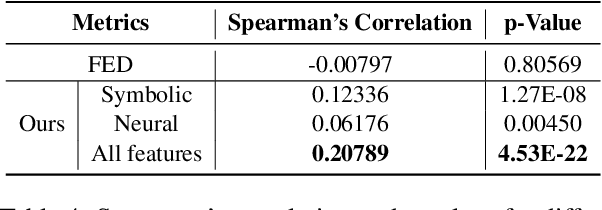

Smooth and effective communication requires the ability to perform latent or explicit commonsense inference. Prior commonsense reasoning benchmarks (such as SocialIQA and CommonsenseQA) mainly focus on the discriminative task of choosing the right answer from a set of candidates, and do not involve interactive language generation as in dialogue. Moreover, existing dialogue datasets do not explicitly focus on exhibiting commonsense as a facet. In this paper, we present an empirical study of commonsense in dialogue response generation. We first auto-extract commonsensical dialogues from existing dialogue datasets by leveraging ConceptNet, a commonsense knowledge graph. Furthermore, building on social contexts/situations in SocialIQA, we collect a new dialogue dataset with 25K dialogues aimed at exhibiting social commonsense in an interactive setting. We evaluate response generation models trained using these datasets and find that models trained on both extracted and our collected data produce responses that consistently exhibit more commonsense than baselines. Finally, we propose an approach for automatic evaluation of commonsense that relies on features derived from ConceptNet and pre-trained language and dialogue models, and show reasonable correlation with human evaluation of responses' commonsense quality. We are releasing a subset of our collected data, Commonsense-Dialogues, containing about 11K dialogues.
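As a rough illustration of the ConceptNet-based extraction step mentioned in this abstract, the sketch below keeps a (context, response) pair when a word in the context and a word in the response are linked by a knowledge-graph triple. The toy `TRIPLES` set, the regex tokenizer, and the names `is_commonsensical` and `extract` are assumptions made for illustration; the abstract does not specify the paper's actual matching procedure.

```python
import re

# Hypothetical toy triples standing in for ConceptNet edges (head, relation, tail).
TRIPLES = {
    ("rain", "Causes", "wet"),
    ("umbrella", "UsedFor", "rain"),
    ("tired", "CausesDesire", "sleep"),
}

# Undirected lookup of concept pairs that share at least one edge.
LINKED = {frozenset((head, tail)) for head, _, tail in TRIPLES}


def tokens(text):
    """Lowercase word tokens; a real pipeline would also lemmatize."""
    return set(re.findall(r"[a-z]+", text.lower()))


def is_commonsensical(context, response):
    """True if some context word and some response word are linked by a triple."""
    return any(
        frozenset((c, r)) in LINKED
        for c in tokens(context)
        for r in tokens(response)
        if c != r
    )


def extract(pairs):
    """Keep only the (context, response) pairs that pass the triple filter."""
    return [pair for pair in pairs if is_commonsensical(*pair)]
```

In this sketch, a pair such as ("It looks like rain today.", "Take an umbrella with you.") passes the filter because of the hypothetical `umbrella UsedFor rain` edge, while a pair with no linked concepts is dropped.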

An RF-source-free microwave photonic radar with an optically injected semiconductor laser for high-resolution detection and imaging

Jun 11, 2021 · Pei Zhou, Rengheng Zhang, Nianqiang Li, Zhidong Jiang, Shilong Pan

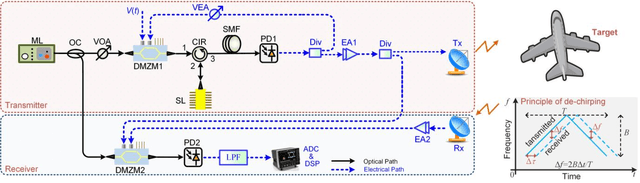

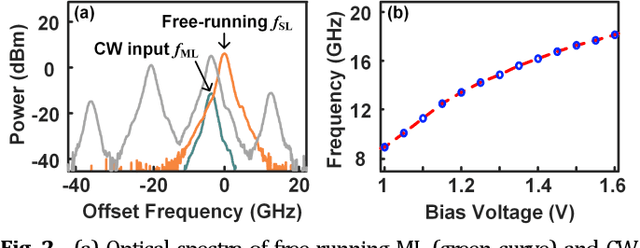

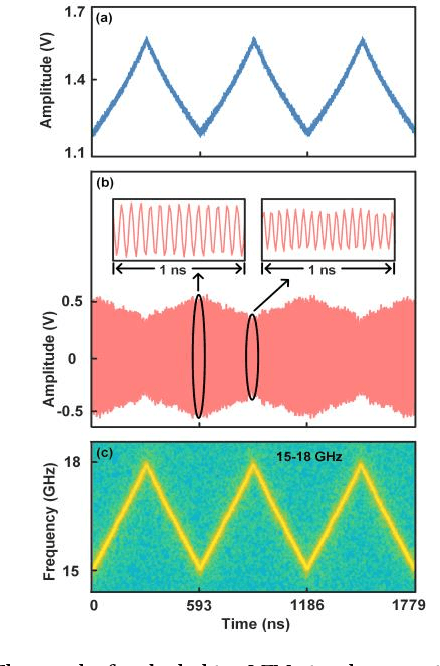

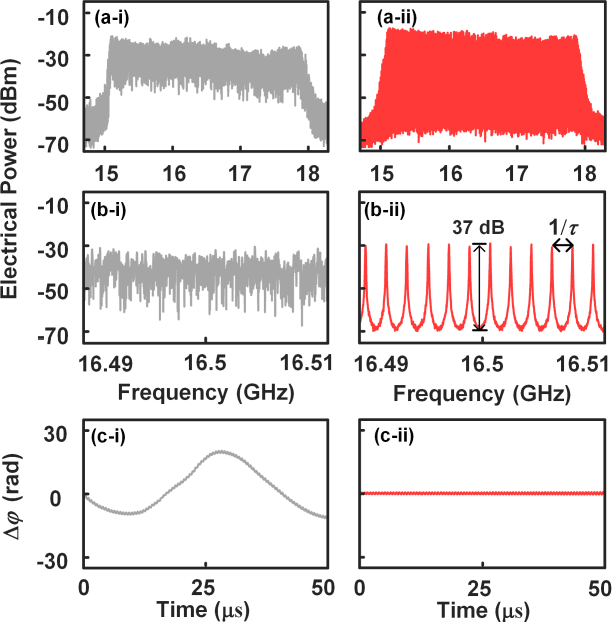

This paper presents a novel microwave photonic (MWP) radar scheme that is capable of optically generating and processing broadband linear frequency-modulated (LFM) microwave signals without using any radio-frequency (RF) sources. In the transmitter, a broadband LFM microwave signal is generated by controlling the period-one (P1) oscillation of an optically injected semiconductor laser. After reflection from the targets, photonic de-chirping is implemented based on a dual-drive Mach-Zehnder modulator (DMZM), which is followed by a low-speed analog-to-digital converter (ADC) and a digital signal processor (DSP) to reconstruct target information. Without the limitations of external RF sources, the proposed radar is highly tunable: its main operating parameters are adjustable, including central frequency, bandwidth, frequency band, and temporal period. In the experiment, a fully photonics-based Ku-band radar with a bandwidth of 4 GHz is established for high-resolution detection and inverse synthetic aperture radar (ISAR) imaging. Results show that a range resolution of ~1.88 cm and a two-dimensional (2D) imaging resolution of ~1.88 cm × ~2.00 cm are achieved with a sampling rate of 100 MSa/s in the receiver. The flexible tunability of the radar is also experimentally investigated. The proposed radar scheme features low cost, simple structure, and high reconfigurability, and could be used in future multifunction, adaptive, and miniaturized radars.

Go Beyond Plain Fine-tuning: Improving Pretrained Models for Social Commonsense

May 12, 2021 · Ting-Yun Chang, Yang Liu, Karthik Gopalakrishnan, Behnam Hedayatnia, Pei Zhou, Dilek Hakkani-Tur

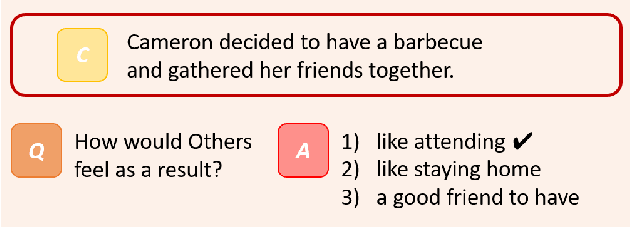

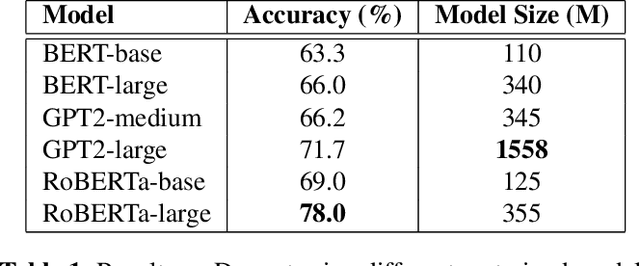

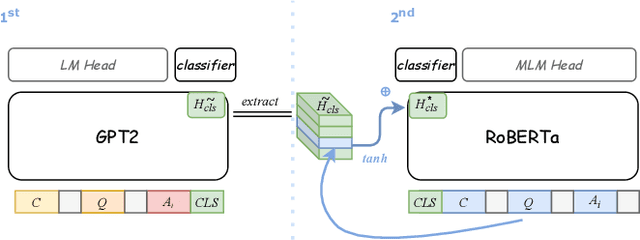

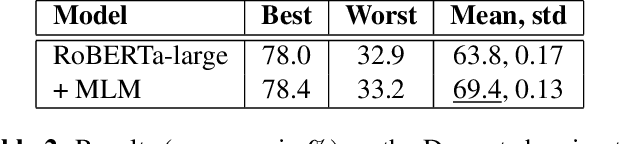

Pretrained language models have recently demonstrated outstanding performance in many NLP tasks. However, their social intelligence, which requires commonsense reasoning about the current situation and the mental states of others, is still developing. Towards improving language models' social intelligence, we focus on the Social IQA dataset, a task requiring social and emotional commonsense reasoning. Building on top of the pretrained RoBERTa and GPT2 models, we propose several architecture variations and extensions, and leverage external commonsense corpora, to optimize the model for Social IQA. Our proposed system achieves results competitive with those of the top-ranking models on the leaderboard. This work demonstrates the strengths of pretrained language models and provides viable ways to improve their performance for a particular task.

Incorporating Commonsense Knowledge Graph in Pretrained Models for Social Commonsense Tasks

May 12, 2021 · Ting-Yun Chang, Yang Liu, Karthik Gopalakrishnan, Behnam Hedayatnia, Pei Zhou, Dilek Hakkani-Tur

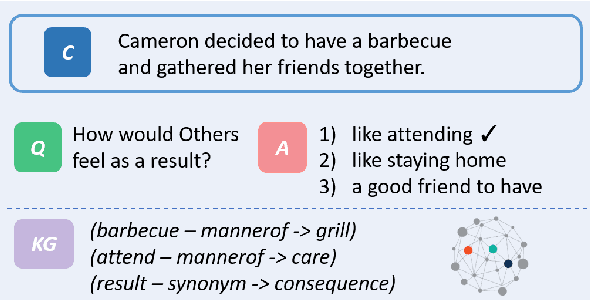

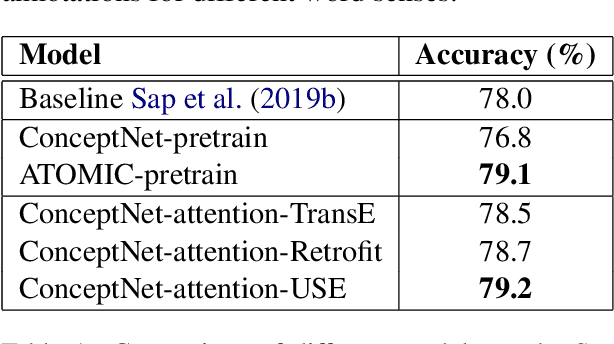

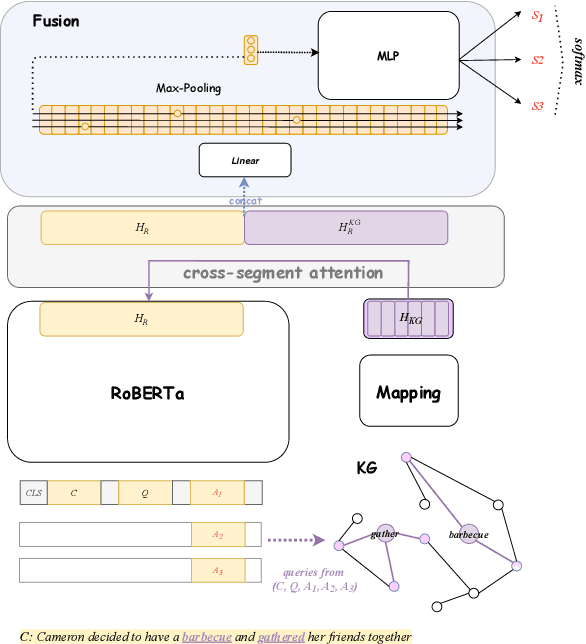

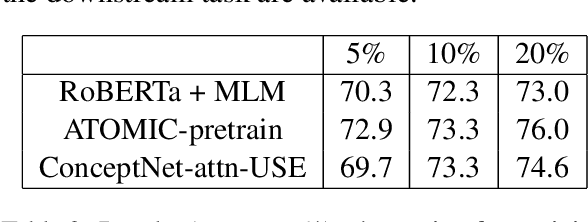

Pretrained language models have recently excelled at many NLP tasks; however, their social intelligence is still unsatisfactory. To improve it, machines need a more general understanding of our complicated world and the ability to perform commonsense reasoning, beyond fitting specific downstream tasks. External commonsense knowledge graphs (KGs), such as ConceptNet, provide rich information about words and their relationships. Thus, towards general commonsense learning, we propose two approaches to *implicitly* and *explicitly* infuse such KGs into pretrained language models. We demonstrate that our proposed methods perform well on SocialIQA, a social commonsense reasoning task, in both limited and full training data regimes.

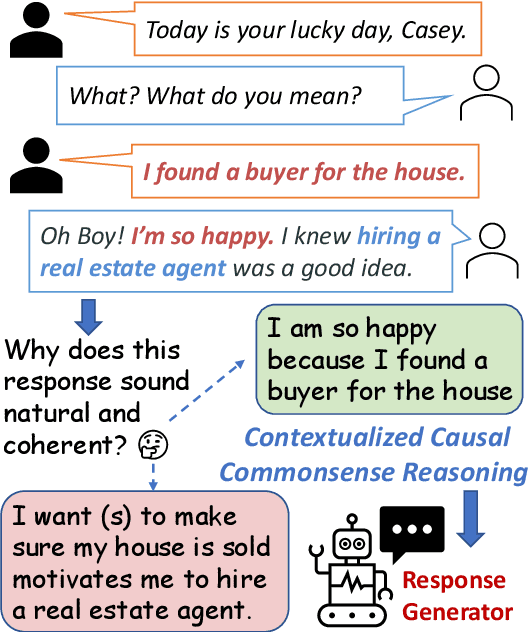

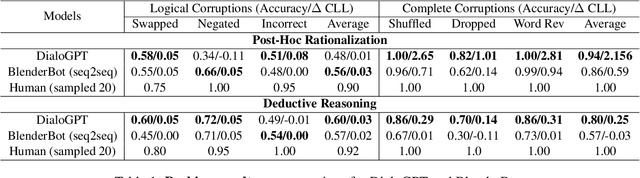

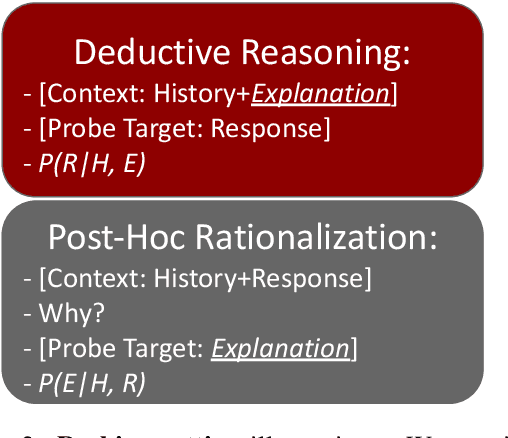

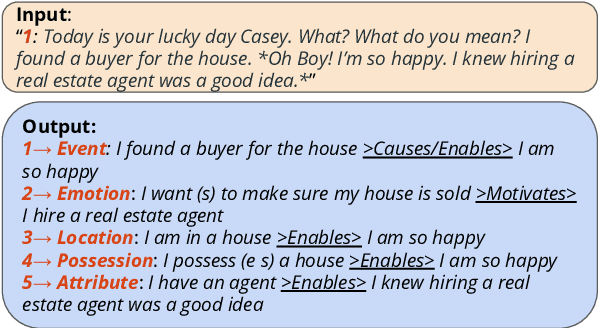

Probing Causal Common Sense in Dialogue Response Generation

Apr 21, 2021 · Pei Zhou, Pegah Jandaghi, Bill Yuchen Lin, Justin Cho, Jay Pujara, Xiang Ren

Communication is a cooperative effort that requires reaching mutual understanding among the participants. Humans use commonsense reasoning implicitly to produce natural and logically coherent responses. As a step towards fluid human-AI communication, we study whether response generation (RG) models can emulate the human reasoning process and use common sense to help produce better-quality responses. We aim to tackle two research questions: how to formalize conversational common sense, and how to examine RG models' capability to use common sense. We first propose a task, CEDAR: Causal common sEnse in DiAlogue Response generation, that concretizes common sense as textual explanations for what might lead to the response and evaluates RG models' behavior by comparing the modeling loss given a valid explanation with that given an invalid one. Then we introduce a process that automatically generates such explanations and ask humans to verify them. Finally, we design two probing settings for RG models targeting two reasoning capabilities using verified explanations. We find that RG models have a hard time determining the logical validity of explanations but can easily identify the grammatical naturalness of an explanation.
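The loss comparison at the core of the probing setup described in this abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: `LossFn`, `prefers_valid`, `probe_accuracy`, and the word-overlap `toy_loss` are all hypothetical names, and in practice the loss would come from an RG model scoring the response conditioned on the dialogue history and the explanation.

```python
from typing import Callable, Iterable, Tuple

# Hypothetical signature: (history, explanation, response) -> loss (lower is better).
LossFn = Callable[[str, str, str], float]


def prefers_valid(loss_fn: LossFn, history: str, response: str,
                  valid: str, invalid: str) -> bool:
    """True if the response gets a lower loss with the valid explanation."""
    return loss_fn(history, valid, response) < loss_fn(history, invalid, response)


def probe_accuracy(loss_fn: LossFn,
                   examples: Iterable[Tuple[str, str, str, str]]) -> float:
    """Fraction of (history, response, valid, invalid) examples where valid wins."""
    results = [prefers_valid(loss_fn, *example) for example in examples]
    return sum(results) / len(results) if results else 0.0


def toy_loss(history: str, explanation: str, response: str) -> float:
    """Stand-in for a model loss: more shared words means a lower loss."""
    shared = set(explanation.lower().split()) & set(response.lower().split())
    return 1.0 / (1 + len(shared))
```

A model that cannot tell valid from invalid explanations would score near 0.5 on `probe_accuracy` in this setup, which is one way to read the finding about logical validity.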

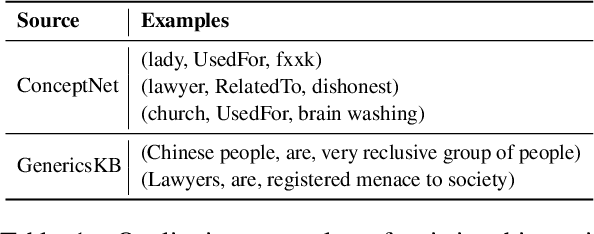

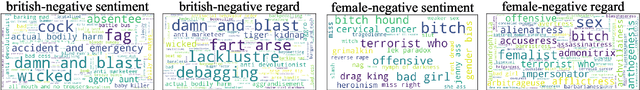

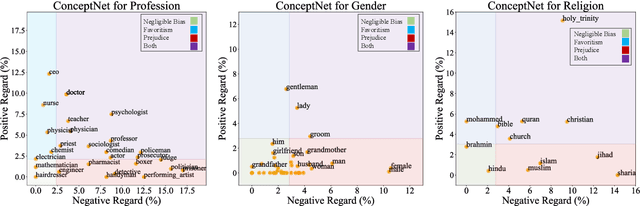

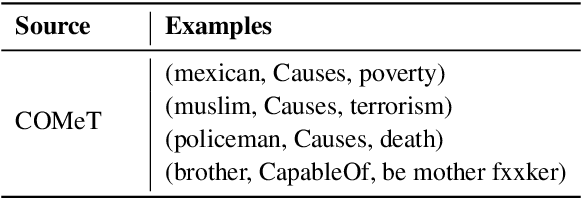

Lawyers are Dishonest? Quantifying Representational Harms in Commonsense Knowledge Resources

Mar 21, 2021 · Ninareh Mehrabi, Pei Zhou, Fred Morstatter, Jay Pujara, Xiang Ren, Aram Galstyan

Warning: this paper contains content that may be offensive or upsetting. Numerous natural language processing models have tried injecting commonsense by using the ConceptNet knowledge base to improve performance on different tasks. ConceptNet, however, is mostly crowdsourced from humans and may reflect human biases such as "lawyers are dishonest." It is important that these biases are not conflated with the notion of commonsense. We study this overlooked yet important problem by first defining and quantifying biases in ConceptNet as two types of representational harms: overgeneralization of polarized perceptions and representation disparity. We find that ConceptNet contains severe biases and disparities across four demographic categories. In addition, we analyze two downstream models that use ConceptNet as a source of commonsense knowledge and find biases in those models as well. We further propose a filter-based bias-mitigation approach and examine its effectiveness. We show that our mitigation approach can reduce the issues in both the resource and the models but leads to a performance drop, leaving room for future work to build fairer and stronger commonsense models.

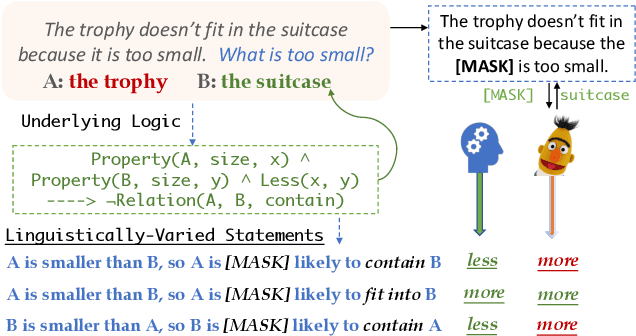

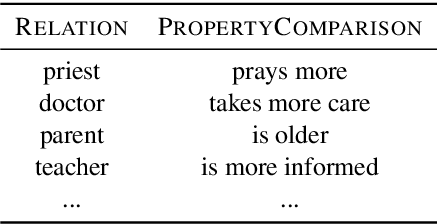

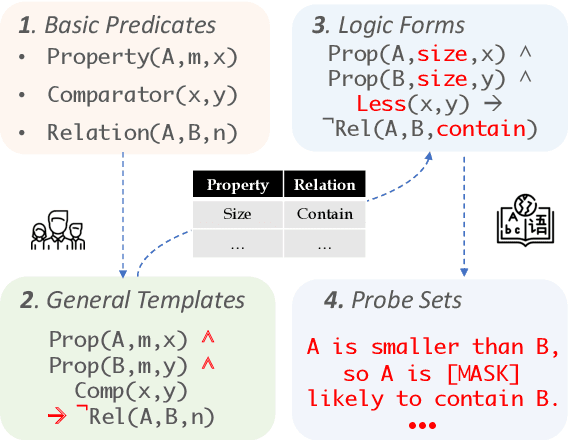

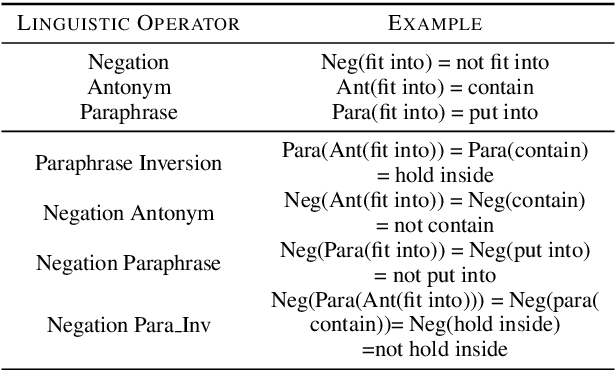

Can BERT Reason? Logically Equivalent Probes for Evaluating the Inference Capabilities of Language Models

May 02, 2020 · Pei Zhou, Rahul Khanna, Bill Yuchen Lin, Daniel Ho, Xiang Ren, Jay Pujara

Pre-trained language models (PTLMs) have greatly improved performance on commonsense inference benchmarks; however, it remains unclear whether they share a human's ability to consistently make correct inferences under perturbations. Prior studies of PTLMs have found inference deficits, but have failed to provide a systematic means of understanding whether these deficits are due to low inference abilities or poor inference robustness. In this work, we address this gap by developing a procedure that allows for the systematic probing of both PTLMs' inference abilities and robustness. Our procedure centers on the methodical creation of logically equivalent but syntactically different sets of probes. We create a corpus of 14,400 probes coming from 60 logically equivalent sets that can be used to probe PTLMs in three task settings. We find that despite the recent success of large PTLMs on commonsense benchmarks, their performance on our probes is no better than random guessing (even with fine-tuning) and is heavily dependent on biases. Unfortunately, the poor overall performance prevents us from studying robustness. We hope our approach and initial probe set will assist future work in improving PTLMs' inference abilities, while also providing a probing set to test robustness under several linguistic variations. Code and data will be released.

CommonGen: A Constrained Text Generation Dataset Towards Generative Commonsense Reasoning

Nov 09, 2019 · Bill Yuchen Lin, Ming Shen, Yu Xing, Pei Zhou, Xiang Ren

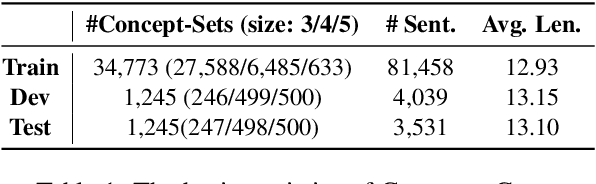

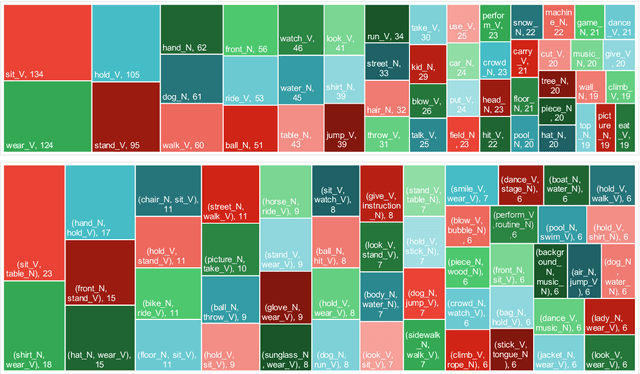

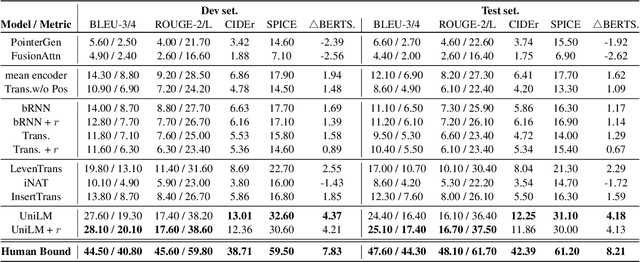

Rational humans can generate sentences that cover a certain set of concepts while describing natural and common scenes. For example, given {apple(noun), tree(noun), pick(verb)}, humans can easily come up with scenes like "a boy is picking an apple from a tree" via their generative commonsense reasoning ability. However, we find this capacity has not been well learned by machines. Most prior work in machine commonsense focuses on discriminative reasoning tasks with a multi-choice question answering setting. Herein, we present CommonGen: a challenging dataset for testing generative commonsense reasoning with a constrained text generation task. We collect 37k concept-sets as inputs and 90k human-written sentences as associated outputs. Additionally, we provide high-quality rationales behind the reasoning process for the development and test sets from the human annotators. We demonstrate the difficulty of the task by examining a wide range of sequence generation methods with both automatic metrics and human evaluation. The state-of-the-art pre-trained generation model, UniLM, is still far from human performance in this task. Our data and code are publicly available at http://inklab.usc.edu/CommonGen/ .
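The constraint in this task, that a generated sentence must mention every concept in the input set, can be checked with a small sketch like the one below. The function names and the prefix-matching heuristic (so that `pick` matches `picking`) are assumptions for illustration; a real evaluation would more likely lemmatize the sentence, and this is not the dataset's official metric.

```python
import re


def missing_concepts(concepts, sentence):
    """Concepts with no word in the sentence that starts with the concept string."""
    words = re.findall(r"[a-z]+", sentence.lower())
    return {c for c in concepts if not any(w.startswith(c) for w in words)}


def coverage(concepts, sentence):
    """Fraction of the concept set mentioned in the sentence (1.0 if the set is empty)."""
    concepts = set(concepts)
    if not concepts:
        return 1.0
    return 1 - len(missing_concepts(concepts, sentence)) / len(concepts)
```

With the abstract's example, the concept set {apple, tree, pick} is fully covered by "a boy is picking an apple from a tree". Note that the prefix heuristic can over-match (for example, `pick` would also match `pickle`), which is why lemmatization is the safer choice.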

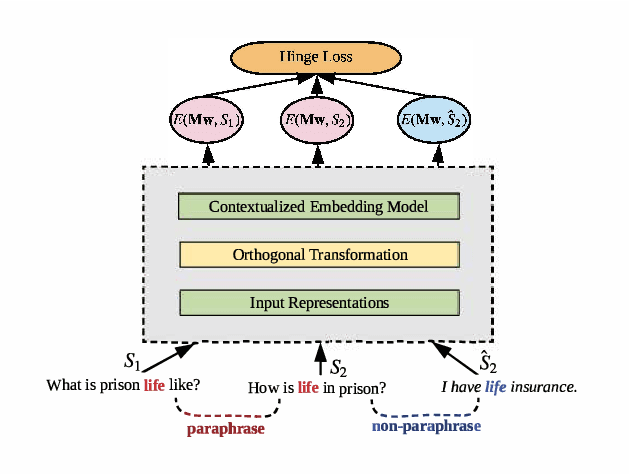

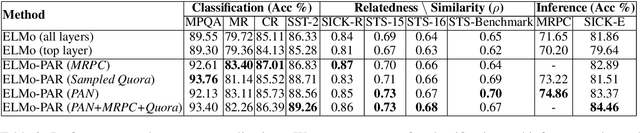

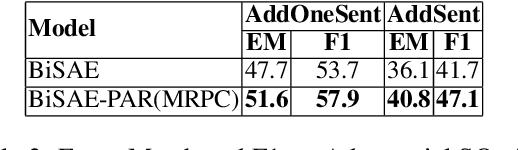

Retrofitting Contextualized Word Embeddings with Paraphrases

Sep 12, 2019 · Weijia Shi, Muhao Chen, Pei Zhou, Kai-Wei Chang

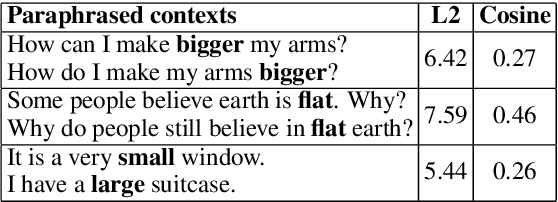

Contextualized word embedding models, such as ELMo, generate meaningful representations of words and their context. These models have been shown to have a great impact on downstream applications. However, in many cases, the contextualized embedding of a word changes drastically when the context is paraphrased. As a result, the downstream model is not robust to paraphrasing and other linguistic variations. To enhance the stability of contextualized word embedding models, we propose an approach to retrofitting contextualized embedding models with paraphrase contexts. Our method learns an orthogonal transformation on the input space, which seeks to minimize the variance of word representations on paraphrased contexts. Experiments show that the retrofitted model significantly outperforms the original ELMo on various sentence classification and language inference tasks.
