Ting Li

Designing Time Series Experiments in A/B Testing with Transformer Reinforcement Learning

Feb 02, 2026

Abstract:A/B testing has become a gold standard for modern technological companies to conduct policy evaluation. Yet, its application to time series experiments, where policies are sequentially assigned over time, remains challenging. Existing designs suffer from two limitations: (i) they do not fully leverage the entire history for treatment allocation; (ii) they rely on strong assumptions to approximate the objective function (e.g., the mean squared error of the estimated treatment effect) for optimizing the design. We first establish an impossibility theorem showing that failure to condition on the full history leads to suboptimal designs, due to the dynamic dependencies in time series experiments. To address both limitations simultaneously, we next propose a transformer reinforcement learning (RL) approach which leverages transformers to condition allocation on the entire history and employs RL to directly optimize the MSE without relying on restrictive assumptions. Empirical evaluations on synthetic data, a publicly available dispatch simulator, and a real-world ridesharing dataset demonstrate that our proposal consistently outperforms existing designs.
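
To make the design objective concrete, the sketch below estimates by Monte Carlo the MSE of a difference-in-means treatment effect estimator under a few fixed allocation designs. It is only an illustration of the objective, not the transformer RL method from the abstract. The outcome model (a direct effect, a one-period carryover effect, AR(1) noise), the alternating "switchback" designs, and every function name and parameter value are assumptions made for this example.

```python
import random


def make_switchback(horizon, block):
    """Fixed design: alternate control (0) and treatment (1) every `block` periods."""
    return [(t // block) % 2 for t in range(horizon)]


def simulate_outcomes(design, rng, direct=1.0, carryover=0.5, rho=0.8):
    """Toy model (an assumption): Y_t = direct*A_t + carryover*A_{t-1} + AR(1) noise."""
    outcomes, noise, prev = [], 0.0, 0
    for a in design:
        noise = rho * noise + rng.gauss(0.0, 1.0)
        outcomes.append(direct * a + carryover * prev + noise)
        prev = a
    return outcomes


def diff_in_means(design, outcomes):
    """Mean outcome over treated periods minus mean outcome over control periods."""
    treated = [y for a, y in zip(design, outcomes) if a == 1]
    control = [y for a, y in zip(design, outcomes) if a == 0]
    return sum(treated) / len(treated) - sum(control) / len(control)


def monte_carlo_mse(design, n_rep=2000, seed=0, direct=1.0, carryover=0.5):
    """Monte Carlo estimate of the estimator's MSE under a given design."""
    rng = random.Random(seed)
    true_effect = direct + carryover  # always-treat versus never-treat
    squared_errors = [
        (diff_in_means(design, simulate_outcomes(design, rng, direct, carryover))
         - true_effect) ** 2
        for _ in range(n_rep)
    ]
    return sum(squared_errors) / n_rep


# Compare designs that switch every 1, 5, or 25 periods.
for block in (1, 5, 25):
    design = make_switchback(horizon=100, block=block)
    print(block, round(monte_carlo_mse(design), 3))
```

In this toy model, frequent switching lets carryover contaminate the comparison while infrequent switching leaves more correlated noise in each arm, which is the kind of trade-off a design has to balance. These designs ignore the observed history entirely, whereas the abstract argues for conditioning allocation on it.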

Systematic validation of time-resolved diffuse optical simulators via non-contact SPAD-based measurements

Nov 17, 2025

Abstract:Objective: Time-domain diffuse optical imaging (DOI) requires accurate forward models for photon propagation in scattering media. However, existing simulators lack comprehensive experimental validation, especially for non-contact configurations with oblique illumination. This study rigorously evaluates three widely used open-source simulators, namely MMC, NIRFASTer, and Toast++, using time-resolved experimental data. Approach: All simulations employed a unified mesh and point-source illumination. Virtual source correction was applied to FEM solvers for oblique incidence. A time-resolved DOI system with a 32 $\times$ 32 single-photon avalanche diode (SPAD) array acquired transmission-mode data from 16 standardized phantoms with varying absorption coefficient $\mu_a$ and reduced scattering coefficient $\mu_s'$. The simulation results were quantified across five metrics: spatial-domain (SD) precision, time-domain (TD) precision, oblique beam accuracy, computational speed, and mesh-density independence. Results: Among the three simulators, MMC achieves superior accuracy in SD and TD metrics and shows robustness across all optical properties. NIRFASTer and Toast++ demonstrate comparable overall performance. In general, MMC is optimal for accuracy-critical TD-DOI applications, while NIRFASTer and Toast++ suit scenarios prioritizing speed with sufficiently large $\mu_s'$. In addition, virtual source correction is essential for non-contact FEM modeling, reducing average errors by more than 34% in large-angle scenarios. Significance: This work provides benchmarked guidelines for simulator selection during the development phase of next-generation TD-DOI systems. Our work represents the first study to systematically validate TD simulators against SPAD array-based data under clinically relevant non-contact conditions, bridging a critical gap in biomedical optical simulation standards.

Knowledge-Augmented Question Error Correction for Chinese Question Answer System with QuestionRAG

Nov 05, 2025

Abstract:Input errors in question-answering (QA) systems often lead to incorrect responses. Large language models (LLMs) struggle with this task, frequently failing to interpret user intent (misinterpretation) or unnecessarily altering the original question's structure (over-correction). We propose QuestionRAG, a framework that tackles these problems. To address misinterpretation, it enriches the input with external knowledge (e.g., search results, related entities). To prevent over-correction, it uses reinforcement learning (RL) to align the model's objective with precise correction, not just paraphrasing. Our results demonstrate that knowledge augmentation is critical for understanding faulty questions. Furthermore, RL-based alignment proves significantly more effective than traditional supervised fine-tuning (SFT), boosting the model's ability to follow instructions and generalize. By integrating these two strategies, QuestionRAG unlocks the full potential of LLMs for the question correction task.

DeepSuM: Deep Sufficient Modality Learning Framework

Mar 03, 2025

Abstract:Multimodal learning has become a pivotal approach in developing robust learning models with applications spanning multimedia, robotics, large language models, and healthcare. The efficiency of multimodal systems is a critical concern, given the varying costs and resource demands of different modalities. This underscores the necessity for effective modality selection to balance performance gains against resource expenditures. In this study, we propose a novel framework for modality selection that independently learns the representation of each modality. This approach allows for the assessment of each modality's significance within its unique representation space, enabling the development of tailored encoders and facilitating the joint analysis of modalities with distinct characteristics. Our framework aims to enhance the efficiency and effectiveness of multimodal learning by optimizing modality integration and selection.

Baichuan-Omni-1.5 Technical Report

Jan 26, 2025

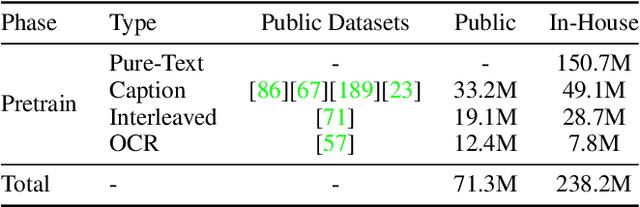

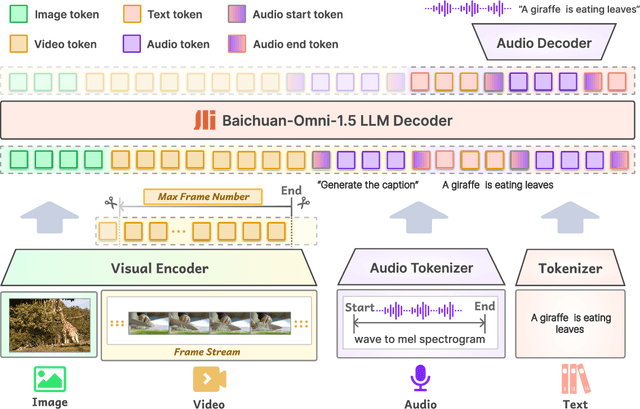

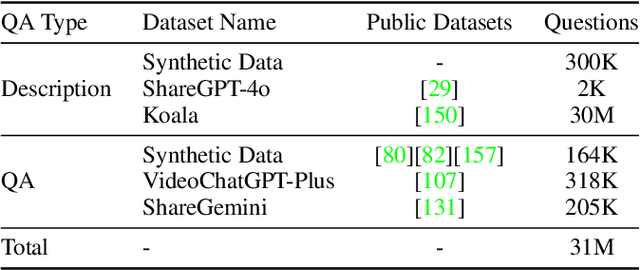

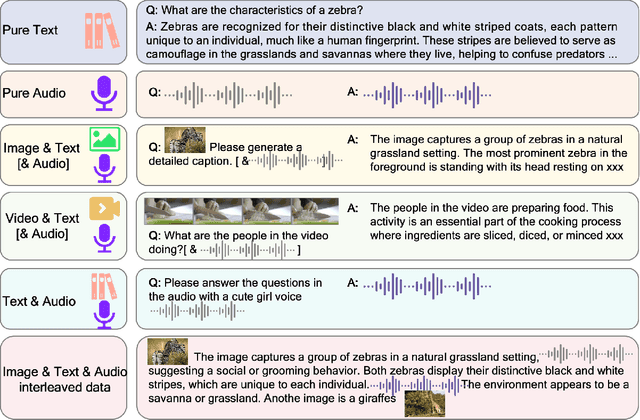

Abstract:We introduce Baichuan-Omni-1.5, an omni-modal model that not only has omni-modal understanding capabilities but also provides end-to-end audio generation capabilities. To achieve fluent and high-quality interaction across modalities without compromising the capabilities of any modality, we prioritized optimizing three key aspects. First, we establish a comprehensive data cleaning and synthesis pipeline for multimodal data, obtaining about 500B high-quality data (text, audio, and vision). Second, an audio-tokenizer (Baichuan-Audio-Tokenizer) has been designed to capture both semantic and acoustic information from audio, enabling seamless integration and enhanced compatibility with MLLM. Lastly, we designed a multi-stage training strategy that progressively integrates multimodal alignment and multitask fine-tuning, ensuring effective synergy across all modalities. Baichuan-Omni-1.5 leads contemporary models (including GPT4o-mini and MiniCPM-o 2.6) in terms of comprehensive omni-modal capabilities. Notably, it achieves results comparable to leading models such as Qwen2-VL-72B across various multimodal medical benchmarks.

Two-way Node Popularity Model for Directed and Bipartite Networks

Dec 11, 2024

Abstract:There has been extensive research on community detection in directed and bipartite networks. However, these studies often fail to consider the popularity of nodes in different communities, which is a common phenomenon in real-world networks. To address this issue, we propose a new probabilistic framework called the Two-Way Node Popularity Model (TNPM). The TNPM also accommodates edges from different distributions within a general sub-Gaussian family. We introduce the Delete-One-Method (DOM) for model fitting and community structure identification, and provide a comprehensive theoretical analysis, with novel techniques for handling the sub-Gaussian generalization. Additionally, we propose the Two-Stage Divided Cosine Algorithm (TSDC) to handle large-scale networks more efficiently. Our proposed methods offer multifold advantages in terms of estimation accuracy and computational efficiency, as demonstrated through extensive numerical studies. We apply our methods to two real-world applications, uncovering interesting findings.

ActiveSplat: High-Fidelity Scene Reconstruction through Active Gaussian Splatting

Oct 29, 2024

Abstract:We propose ActiveSplat, an autonomous high-fidelity reconstruction system leveraging Gaussian splatting. Taking advantage of efficient and realistic rendering, the system establishes a unified framework for online mapping, viewpoint selection, and path planning. The key to ActiveSplat is a hybrid map representation that integrates both dense information about the environment and a sparse abstraction of the workspace. Therefore, the system leverages sparse topology for efficient viewpoint sampling and path planning, while exploiting view-dependent dense prediction for viewpoint selection, facilitating efficient decision-making with promising accuracy and completeness. A hierarchical planning strategy based on the topological map is adopted to mitigate repetitive trajectories and improve local granularity given limited budgets, ensuring high-fidelity reconstruction with photorealistic view synthesis. Extensive experiments and ablation studies validate the efficacy of the proposed method in terms of reconstruction accuracy, data coverage, and exploration efficiency. Project page: https://li-yuetao.github.io/ActiveSplat/.

Bayesian Power Steering: An Effective Approach for Domain Adaptation of Diffusion Models

Jun 06, 2024

Abstract:We propose a Bayesian framework for fine-tuning large diffusion models with a novel network structure called Bayesian Power Steering (BPS). We clarify the meaning behind adaptation from a *large probability space* to a *small probability space* and explore the task of fine-tuning pre-trained models using learnable modules from a Bayesian perspective. BPS extracts task-specific knowledge from a pre-trained model's learned prior distribution. It efficiently leverages large diffusion models, intervening differentially on hidden features with a head-heavy and foot-light configuration. Experiments highlight the superiority of BPS over contemporary methods across a range of tasks, even with a limited amount of data. Notably, BPS attains an FID score of 10.49 under the sketch condition on the COCO17 dataset.

Combining Experimental and Historical Data for Policy Evaluation

Jun 01, 2024

Abstract:This paper studies policy evaluation with multiple data sources, especially in scenarios that involve one experimental dataset with two arms, complemented by a historical dataset generated under a single control arm. We propose novel data integration methods that linearly integrate base policy value estimators constructed based on the experimental and historical data, with weights optimized to minimize the mean square error (MSE) of the resulting combined estimator. We further apply the pessimistic principle to obtain more robust estimators, and extend these developments to sequential decision making. Theoretically, we establish non-asymptotic error bounds for the MSEs of our proposed estimators, and derive their oracle, efficiency and robustness properties across a broad spectrum of reward shift scenarios. Numerical experiments and real-data-based analyses from a ridesharing company demonstrate the superior performance of the proposed estimators.
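
As a rough illustration of MSE-minimizing weights, the sketch below combines two estimators linearly in the simplest textbook setting. It assumes the two estimators are independent, the experimental one is unbiased, and the historical one has a known bias (for example, from a reward shift) and known variance. These assumptions, the function names, and the numbers are all hypothetical, and this is not the paper's estimator, which must work without knowing these quantities.

```python
def optimal_weight(var_exp, var_hist, bias_hist):
    """Weight w on the experimental estimator minimizing the MSE of
    w * exp_est + (1 - w) * hist_est.

    Assumes independent estimators, an unbiased experimental estimator,
    and a historical estimator with known bias and variance.
    """
    mse_hist = var_hist + bias_hist ** 2
    return mse_hist / (var_exp + mse_hist)


def combined_mse(w, var_exp, var_hist, bias_hist):
    """MSE (variance plus squared bias) of the combined estimator for weight w."""
    variance = w ** 2 * var_exp + (1 - w) ** 2 * var_hist
    bias = (1 - w) * bias_hist
    return variance + bias ** 2


# Hypothetical inputs: a noisy experimental estimator and a
# less noisy but biased historical estimator.
w_star = optimal_weight(var_exp=4.0, var_hist=1.0, bias_hist=1.0)
print(w_star, combined_mse(w_star, 4.0, 1.0, 1.0))
```

With these inputs the combined estimator has a lower MSE than either estimator used alone (4.0 for the experimental one, 2.0 for the historical one). As the historical bias grows, the weight shifts toward the experimental estimator.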

MonoPCC: Photometric-invariant Cycle Constraint for Monocular Depth Estimation of Endoscopic Images

Apr 25, 2024

Abstract:Photometric constraint is indispensable for self-supervised monocular depth estimation. It involves warping a source image onto a target view using estimated depth and pose, and then minimizing the difference between the warped and target images. However, the endoscopic built-in light causes significant brightness fluctuations, and thus makes the photometric constraint unreliable. Previous efforts only mitigate this by relying on extra models to calibrate image brightness. In this paper, we propose MonoPCC to address the brightness inconsistency radically by reshaping the photometric constraint into a cycle form. Instead of only warping the source image, MonoPCC constructs a closed loop consisting of two opposite forward-backward warping paths: from target to source and then back to target. Thus, the target image finally receives an image cycle-warped from itself, which naturally makes the constraint invariant to brightness changes. Moreover, MonoPCC transplants the source image's phase-frequency into the intermediate warped image to avoid structure loss, and also stabilizes the training via an exponential moving average (EMA) strategy to avoid frequent changes in the forward warping. Comprehensive experimental results on three datasets demonstrate that our proposed MonoPCC shows great robustness to the brightness inconsistency, and exceeds other state-of-the-art methods by reducing the absolute relative error by at least 7.27%.
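
The brightness-invariance argument can be seen in a one-dimensional toy example. In the sketch below, a circular shift stands in for depth-and-pose warping and a constant gain stands in for the brightness change between frames. Both are simplifying assumptions, and the names and values are hypothetical; this is not the MonoPCC implementation.

```python
def shift(signal, offset):
    """Circularly shift a 1-D 'image'; a stand-in for depth-and-pose warping."""
    n = len(signal)
    return [signal[(i - offset) % n] for i in range(n)]


def l1(a, b):
    """Mean absolute difference between two equally sized signals."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


target = [0.1, 0.4, 0.9, 0.3, 0.2, 0.6]
offset = 2   # hypothetical camera motion
gain = 1.5   # hypothetical brightness change between frames

# Source frame: the same scene, shifted and brighter.
source = [gain * v for v in shift(target, offset)]

# Standard constraint: warp the source into the target view and compare.
# Even with a perfect warp, the brightness gain leaves a residual.
standard_loss = l1(shift(source, -offset), target)

# Cycle constraint: warp the target to the source view and back.
# Every pixel value originates from the target, so the gain never enters.
cycle_loss = l1(shift(shift(target, offset), -offset), target)

print(standard_loss, cycle_loss)
```

This toy example shows only the invariance property. With exactly inverse warps the cycle is trivially the identity, so it says nothing about how depth is supervised; the abstract describes transplanting the source image's phase-frequency into the intermediate warped image, which this sketch omits.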
