Ling Shao

Terminus Group, Beijing, China

Generative Multi-Label Zero-Shot Learning

Jan 28, 2021

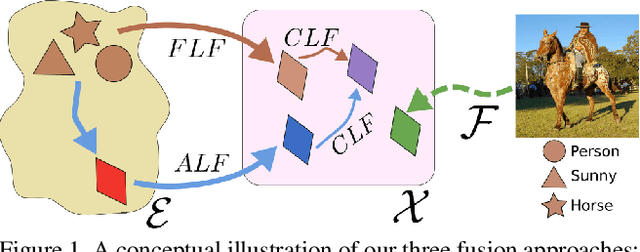

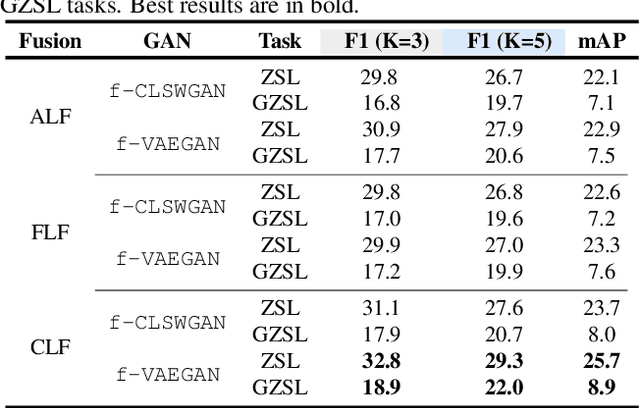

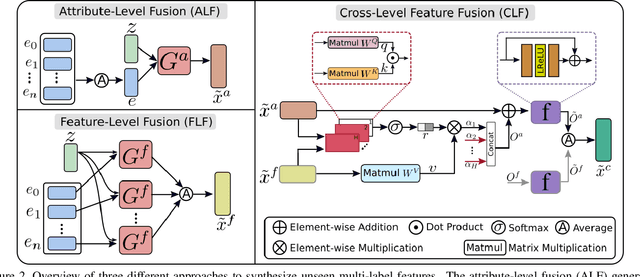

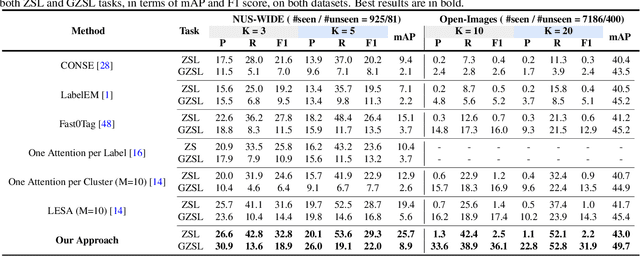

Abstract: Multi-label zero-shot learning strives to classify images into multiple unseen categories for which no data is available during training. In the generalized variant, the test samples can additionally contain seen categories. Existing approaches rely on learning either shared or label-specific attention from the seen classes. Nevertheless, computing reliable attention maps for unseen classes during inference in a multi-label setting is still a challenge. In contrast, state-of-the-art single-label generative adversarial network (GAN) based approaches learn to directly synthesize the class-specific visual features from the corresponding class attribute embeddings. However, synthesizing multi-label features from GANs is still unexplored in the context of the zero-shot setting. In this work, we introduce different fusion approaches at the attribute level, feature level and cross level (across attribute and feature levels) for synthesizing multi-label features from their corresponding multi-label class embedding. To the best of our knowledge, our work is the first to tackle the problem of multi-label feature synthesis in the (generalized) zero-shot setting. Comprehensive experiments are performed on three zero-shot image classification benchmarks: NUS-WIDE, Open Images and MS COCO. Our cross-level fusion-based generative approach outperforms the state-of-the-art on all three datasets. Furthermore, we show the generalization capabilities of our fusion approach in the zero-shot detection task on MS COCO, achieving favorable performance against existing methods. The source code is available at https://github.com/akshitac8/Generative_MLZSL.

Boundary-Aware Segmentation Network for Mobile and Web Applications

Jan 12, 2021

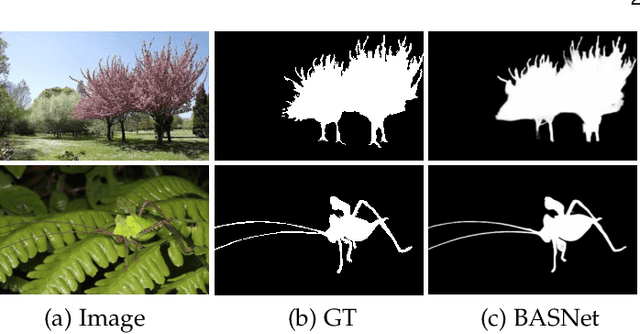

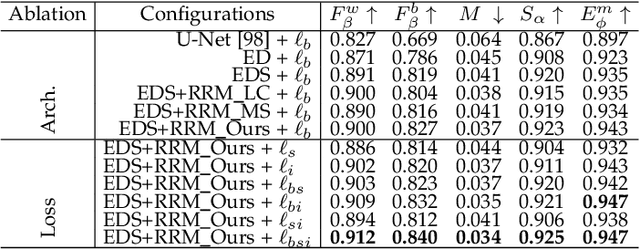

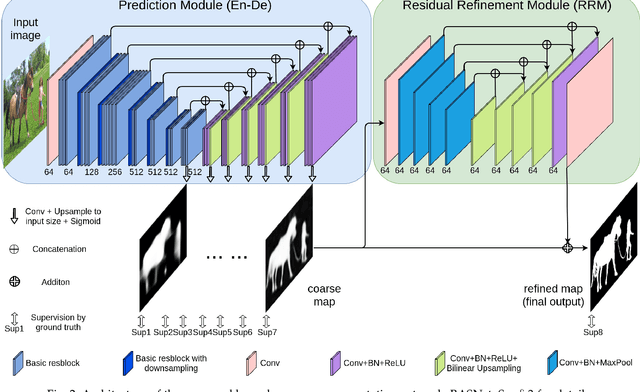

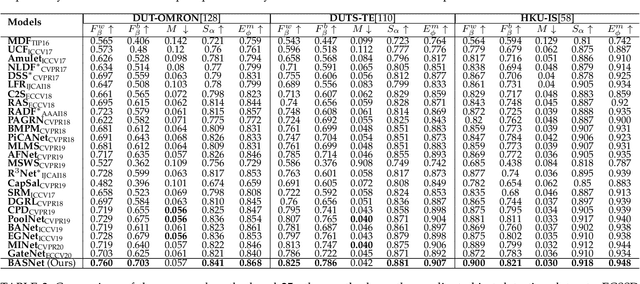

Abstract: Although deep models have greatly improved the accuracy and robustness of image segmentation, obtaining segmentation results with highly accurate boundaries and fine structures is still a challenging problem. In this paper, we propose a simple yet powerful Boundary-Aware Segmentation Network (BASNet), which comprises a predict-refine architecture and a hybrid loss, for highly accurate image segmentation. The predict-refine architecture consists of a densely supervised encoder-decoder network and a residual refinement module, which are respectively used to predict and refine a segmentation probability map. The hybrid loss is a combination of the binary cross entropy, structural similarity and intersection-over-union losses, which guide the network to learn three-level (i.e., pixel-, patch- and map-level) hierarchy representations. We evaluate our BASNet on two reverse tasks, salient object segmentation and camouflaged object segmentation, showing that it achieves very competitive performance with sharp segmentation boundaries. Importantly, BASNet runs at over 70 fps on a single GPU, which benefits many potential real applications. Based on BASNet, we further developed two (close to) commercial applications: AR COPY & PASTE, in which BASNet is integrated with augmented reality for "COPYING" and "PASTING" real-world objects, and OBJECT CUT, which is a web-based tool for automatic object background removal. Both applications have already drawn a huge amount of attention and have important real-world impacts. The code and two applications will be publicly available at https://github.com/NathanUA/BASNet.
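To make the hybrid loss described in this abstract more concrete, here is a minimal sketch in plain Python. It is not the authors' implementation. It assumes the three terms are summed with equal weight, that predictions are flattened lists of probabilities, and that the structural similarity term is computed over one global window instead of local patches. The function names are hypothetical.

```python
import math

EPS = 1e-7  # numerical guard; the value is an assumption of this sketch


def bce_loss(pred, target):
    """Pixel-level term: mean binary cross entropy."""
    total = 0.0
    for p, g in zip(pred, target):
        p = min(max(p, EPS), 1.0 - EPS)  # keep log() finite
        total -= g * math.log(p) + (1 - g) * math.log(1 - p)
    return total / len(pred)


def ssim_loss(pred, target, c1=0.01 ** 2, c2=0.03 ** 2):
    """Structural term: 1 - SSIM over a single global window (simplified)."""
    n = len(pred)
    mu_p, mu_g = sum(pred) / n, sum(target) / n
    var_p = sum((p - mu_p) ** 2 for p in pred) / n
    var_g = sum((g - mu_g) ** 2 for g in target) / n
    cov = sum((p - mu_p) * (g - mu_g) for p, g in zip(pred, target)) / n
    ssim = ((2 * mu_p * mu_g + c1) * (2 * cov + c2)) / (
        (mu_p ** 2 + mu_g ** 2 + c1) * (var_p + var_g + c2)
    )
    return 1.0 - ssim


def iou_loss(pred, target):
    """Map-level term: 1 - soft intersection over union."""
    inter = sum(p * g for p, g in zip(pred, target))
    union = sum(p + g - p * g for p, g in zip(pred, target))
    return 1.0 - inter / (union + EPS)


def hybrid_loss(pred, target):
    """Equal-weight sum of the three terms (weighting is an assumption)."""
    return bce_loss(pred, target) + ssim_loss(pred, target) + iou_loss(pred, target)
```

With a ground-truth mask `[1.0, 0.0, 1.0, 0.0]`, a prediction equal to the mask gives a loss near zero, and the loss grows as the prediction moves away from the mask.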

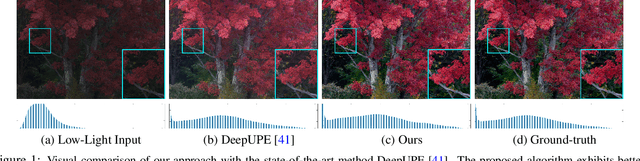

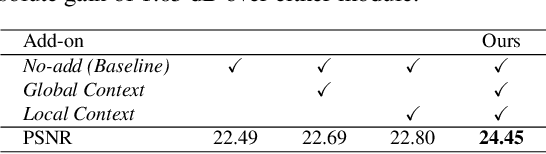

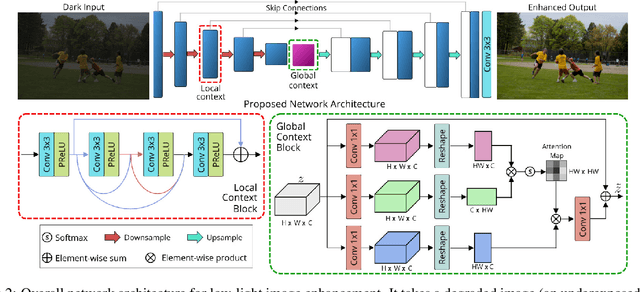

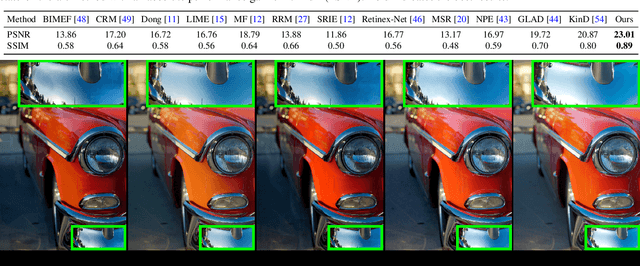

Low Light Image Enhancement via Global and Local Context Modeling

Jan 04, 2021

Abstract: Images captured under low-light conditions manifest poor visibility and lack contrast and color vividness. Compared to conventional approaches, deep convolutional neural networks (CNNs) perform well in enhancing images. However, being solely reliant on confined fixed primitives to model dependencies, existing data-driven deep models do not exploit the contexts at various spatial scales to address low-light image enhancement. These contexts can be crucial for several image enhancement tasks, e.g., local and global contrast, brightness and color corrections, which require cues from both local and global spatial extents. To this end, we introduce a context-aware deep network for low-light image enhancement. First, it features a global context module that models spatial correlations to find complementary cues over the full spatial domain. Second, it introduces a dense residual block that captures local context with a relatively large receptive field. We evaluate the proposed approach using three challenging datasets: MIT-Adobe FiveK, LoL, and SID. On all these datasets, our method performs favorably against the state of the art in terms of standard image fidelity metrics. In particular, compared to the best performing method on the MIT-Adobe FiveK dataset, our algorithm improves PSNR from 23.04 dB to 24.45 dB.
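For readers unfamiliar with the PSNR figures quoted above, the following sketch shows the standard definition of the metric and what a gain in dB means in terms of mean squared error. It is an illustration only and is not taken from the paper. The helper names and the 8-bit peak value of 255 are assumptions.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB for two equally sized pixel lists."""
    if not reference or len(reference) != len(estimate):
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def mse_ratio_for_gain(gain_db):
    """Factor by which the MSE shrinks for a given PSNR gain in dB."""
    return 10.0 ** (-gain_db / 10.0)


# The reported improvement, 24.45 dB - 23.04 dB = 1.41 dB
ratio = mse_ratio_for_gain(24.45 - 23.04)
```

Because PSNR is logarithmic in the error, the 1.41 dB gain corresponds to a mean squared error that is roughly 28% lower, assuming both figures use the same peak value.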

D2-Net: Weakly-Supervised Action Localization via Discriminative Embeddings and Denoised Activations

Dec 11, 2020

Abstract: This work proposes a weakly-supervised temporal action localization framework, called D2-Net, which strives to temporally localize actions using video-level supervision. Our main contribution is the introduction of a novel loss formulation, which jointly enhances the discriminability of latent embeddings and robustness of the output temporal class activations with respect to foreground-background noise caused by weak supervision. The proposed formulation comprises a discriminative and a denoising loss term for enhancing temporal action localization. The discriminative term incorporates a classification loss and utilizes a top-down attention mechanism to enhance the separability of latent foreground-background embeddings. The denoising loss term explicitly addresses the foreground-background noise in class activations by simultaneously maximizing intra-video and inter-video mutual information using a bottom-up attention mechanism. As a result, activations in the foreground regions are emphasized whereas those in the background regions are suppressed, thereby leading to more robust predictions. Comprehensive experiments are performed on two benchmarks: THUMOS14 and ActivityNet1.2. Our D2-Net performs favorably in comparison to the existing methods on both datasets, achieving gains as high as 3.6% in terms of mean average precision on THUMOS14.

Learning to Fuse Asymmetric Feature Maps in Siamese Trackers

Dec 04, 2020

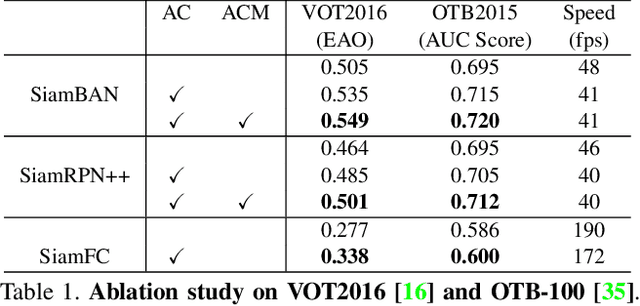

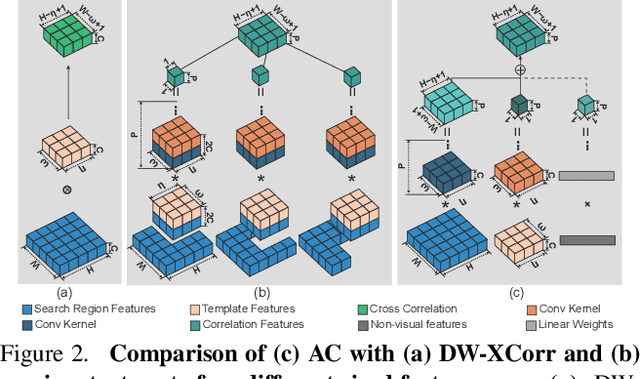

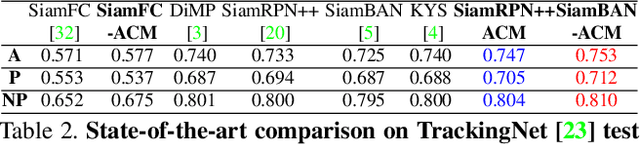

Abstract: In recent years, Siamese-based trackers have achieved promising performance in visual tracking. Most recent Siamese-based trackers typically employ a depth-wise cross-correlation (DW-XCorr) to obtain multi-channel correlation information from the two feature maps (target and search region). However, DW-XCorr has several limitations within Siamese-based tracking: it can easily be fooled by distractors, has fewer activated channels, and provides weak discrimination of object boundaries. Further, DW-XCorr is a handcrafted parameter-free module and cannot fully benefit from offline learning on large-scale data. We propose a learnable module, called the asymmetric convolution module (ACM), which learns to better capture the semantic correlation information in offline training on large-scale data. Different from DW-XCorr and its predecessor (XCorr), which regard a single feature map as the convolution kernel, our ACM decomposes the convolution operation on a concatenated feature map into two mathematically equivalent operations, thereby avoiding the need for the feature maps to be of the same size (width and height) during concatenation. Our ACM can incorporate useful prior information, such as bounding-box size, with standard visual features. Furthermore, ACM can easily be integrated into existing Siamese trackers based on DW-XCorr or XCorr. To demonstrate its generalization ability, we integrate ACM into three representative trackers: SiamFC, SiamRPN++, and SiamBAN. Our experiments reveal the benefits of the proposed ACM, which outperforms existing methods on six tracking benchmarks. On the LaSOT test set, our ACM-based tracker obtains a significant improvement of 5.8% in terms of success (AUC), over the baseline.
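The decomposition mentioned in this abstract rests on the linearity of convolution over channels. The sketch below illustrates that idea in a deliberately reduced setting and is not the authors' module. It assumes a 1x1 kernel, a search map stored as a list of per-location channel vectors, and a target feature already reduced to a single vector. All names are hypothetical.

```python
def matvec(weights, vector):
    """Multiply a weight matrix (list of rows) by a channel vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def conv1x1_on_concat(weights, search_map, target_vec):
    """Tile the target vector at every location, concatenate, then convolve."""
    return [matvec(weights, x + target_vec) for x in search_map]


def conv1x1_decomposed(weights, search_map, target_vec):
    """Same result from two separate branches plus a broadcast sum."""
    c_x = len(search_map[0])                   # channels of the search branch
    w_x = [row[:c_x] for row in weights]       # kernel slice for search features
    w_z = [row[c_x:] for row in weights]       # kernel slice for target features
    z_term = matvec(w_z, target_vec)           # computed once, no tiling needed
    return [
        [a + b for a, b in zip(matvec(w_x, x), z_term)]
        for x in search_map
    ]


weights = [[1, 2, 3], [0, -1, 4]]        # 2 output channels, 2 + 1 input channels
search_map = [[1, 2], [3, 4], [5, 6]]    # 3 spatial locations, 2 channels each
target_vec = [7]                         # 1 target channel

assert conv1x1_on_concat(weights, search_map, target_vec) == \
    conv1x1_decomposed(weights, search_map, target_vec)
```

In the decomposed form the target branch is evaluated once and added at every location, so the two inputs never need to share a spatial size.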

DomainMix: Learning Generalizable Person Re-Identification Without Human Annotations

Nov 24, 2020

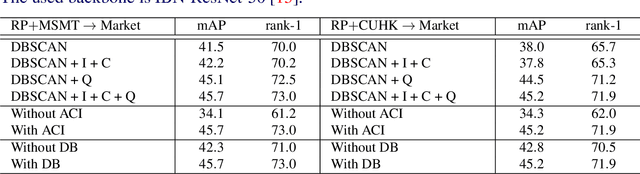

Abstract: Existing person re-identification methods often have low generalization capability, which is mostly due to the limited availability of large-scale labeled training data. However, labeling large-scale training data is very expensive and time-consuming. To address this, this paper presents a solution, called DomainMix, which can learn a person re-identification model from both synthetic and real-world data, for the first time, completely without human annotations. This way, the proposed method enjoys the cheap availability of large-scale training data and, benefiting from its scalability and diversity, the learned model is able to generalize well to unseen domains. Specifically, inspired by a recent work generating large-scale synthetic data for effective person re-identification training, the proposed method first applies unsupervised domain adaptation from labeled synthetic data to unlabeled real-world data to generate pseudo labels. Then, the two sources of data are directly mixed together for supervised training. However, a large domain gap still exists between them. To address this, a domain-invariant feature learning method is proposed, which sets up adversarial learning between domain-invariant feature learning and domain discrimination, and meanwhile learns a discriminative feature for person re-identification. This way, the domain gap between synthetic and real-world data is much reduced, and the learned feature is generalizable thanks to the large-scale and diverse training data. Experimental results show that the proposed annotation-free method is more or less comparable to the counterpart trained with full human annotations, which is quite promising. In addition, it achieves the current state of the art on several popular person re-identification datasets under direct cross-dataset evaluation.

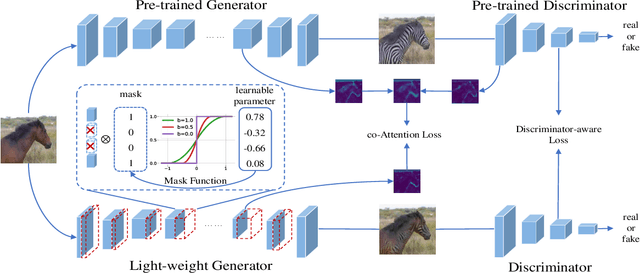

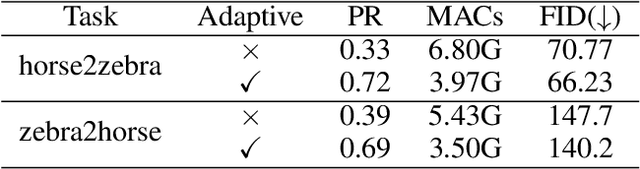

Learning Efficient GANs using Differentiable Masks and co-Attention Distillation

Nov 21, 2020

Abstract: Generative Adversarial Networks (GANs) have been widely used in image translation, but their high computational and storage costs impede their deployment on mobile devices. Prevalent methods for CNN compression cannot be directly applied to GANs due to the complicated generator architecture and the unstable adversarial training. To solve these problems, we introduce a novel GAN compression method, termed DMAD, by proposing a Differentiable Mask and a co-Attention Distillation. The former searches for a light-weight generator architecture in a training-adaptive manner. To overcome channel inconsistency when pruning the residual connections, an adaptive cross-block group sparsity is further incorporated. The latter simultaneously distills informative attention maps from both the generator and discriminator of a pre-trained model to the searched generator, effectively stabilizing the adversarial training of our light-weight model. Experiments show that DMAD can reduce the Multiply Accumulate Operations (MACs) of CycleGAN by 13x and that of Pix2Pix by 4x while retaining a comparable performance against the full model. Code is available at https://github.com/SJLeo/DMAD.

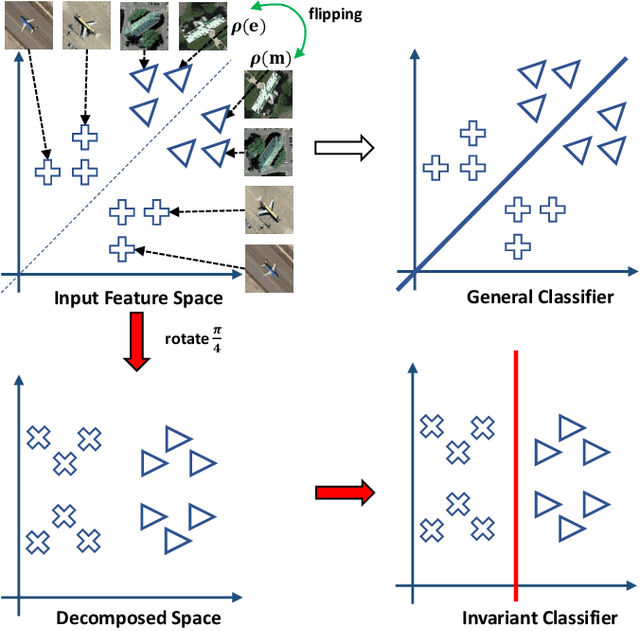

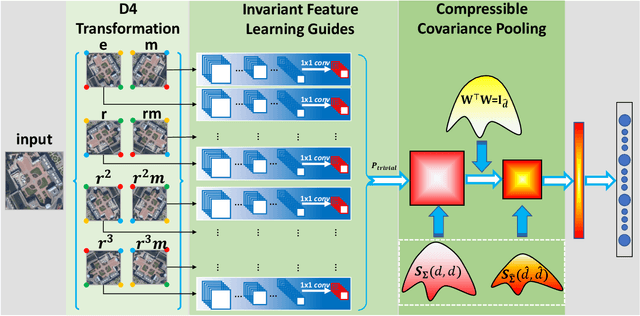

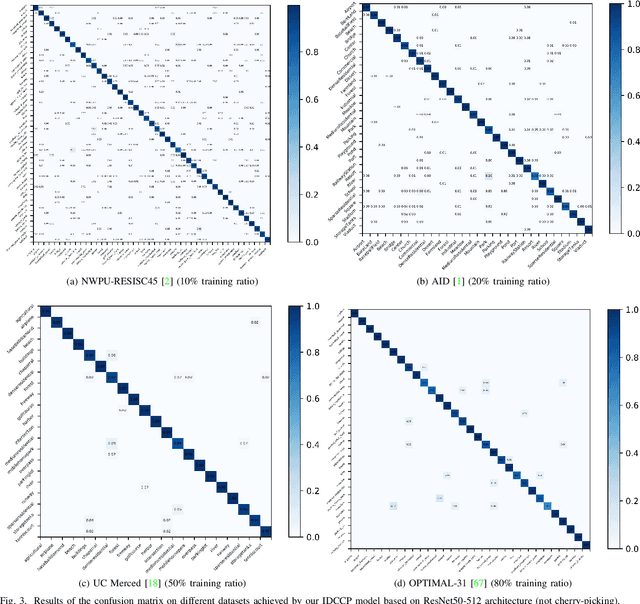

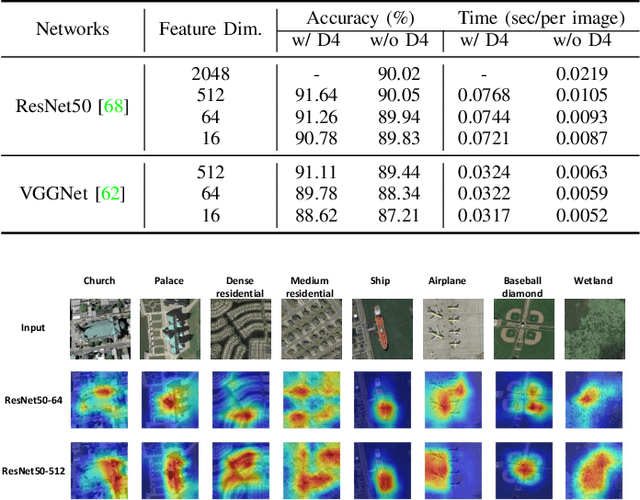

Invariant Deep Compressible Covariance Pooling for Aerial Scene Categorization

Nov 11, 2020

Abstract: Learning discriminative and invariant feature representation is the key to visual image categorization. In this article, we propose a novel invariant deep compressible covariance pooling (IDCCP) to address nuisance variations in aerial scene categorization. We consider transforming the input image according to a finite transformation group that consists of multiple confounding orthogonal matrices, such as the D4 group. Then, we adopt a Siamese-style network to transfer the group structure to the representation space, where we can derive a trivial representation that is invariant under the group action. A linear classifier trained with the trivial representation will also be invariant. To further improve the discriminative power of the representation, we extend the representation to the tensor space while imposing orthogonal constraints on the transformation matrix to effectively reduce feature dimensions. We conduct extensive experiments on the publicly released aerial scene image data sets and demonstrate the superiority of this method compared with state-of-the-art methods. In particular, when using the ResNet architecture, our IDCCP model can reduce the dimension of the tensor representation by about 98% without sacrificing accuracy (i.e., a drop of less than 0.5%).
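One standard way to obtain a representation that is invariant under a finite group such as D4 is to average a feature over all group-transformed copies of the input. The sketch below illustrates that general idea on a small square grid. It is an assumption-laden toy, not the IDCCP construction, whose Siamese network and covariance pooling are not reproduced here. The feature function and all names are hypothetical.

```python
def rot90(img):
    """Rotate a square grid 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def flip(img):
    """Mirror a grid left to right."""
    return [row[::-1] for row in img]


def d4_orbit(img):
    """All 8 images obtained from the 4 rotations and their mirrored versions."""
    orbit, current = [], img
    for _ in range(4):
        orbit.append(current)
        orbit.append(flip(current))
        current = rot90(current)
    return orbit


def toy_feature(img):
    """A deliberately non-invariant feature: position-weighted pixel sums."""
    rows = sum(v * (i + 1) for i, row in enumerate(img) for v in row)
    cols = sum(v * (j + 1) ** 2 for row in img for j, v in enumerate(row))
    return [rows, cols]


def invariant_feature(img):
    """Average the toy feature over the D4 orbit of the input."""
    feats = [toy_feature(g) for g in d4_orbit(img)]
    return [sum(col) / len(feats) for col in zip(*feats)]


img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
assert toy_feature(img) != toy_feature(rot90(img))
assert invariant_feature(img) == invariant_feature(rot90(img))
assert invariant_feature(img) == invariant_feature(flip(img))
```

The averaged feature is unchanged because transforming the input only permutes the eight images in its orbit, which leaves the sum the same.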

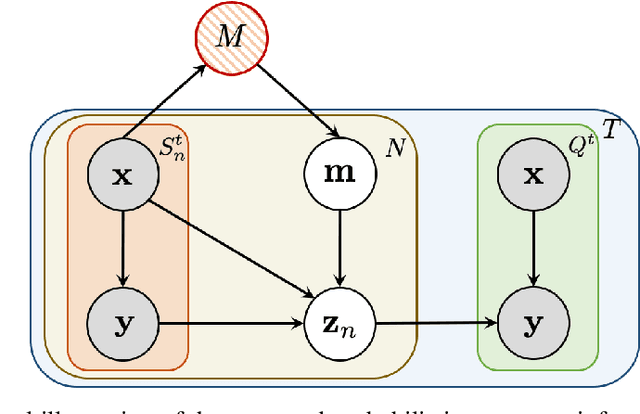

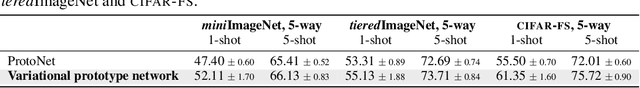

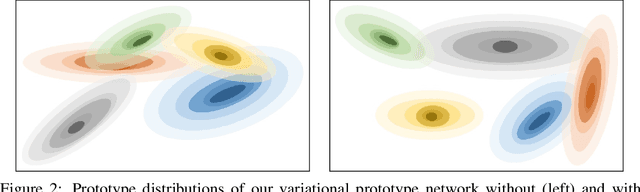

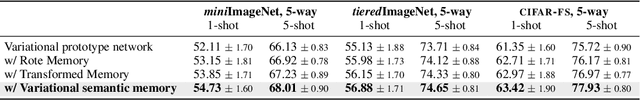

Learning to Learn Variational Semantic Memory

Oct 20, 2020

Abstract: In this paper, we introduce variational semantic memory into meta-learning to acquire long-term knowledge for few-shot learning. The variational semantic memory accrues and stores semantic information for the probabilistic inference of class prototypes in a hierarchical Bayesian framework. The semantic memory is grown from scratch and gradually consolidated by absorbing information from tasks it experiences. By doing so, it is able to accumulate long-term, general knowledge that enables it to learn new concepts of objects. We formulate memory recall as the variational inference of a latent memory variable from addressed contents, which offers a principled way to adapt the knowledge to individual tasks. Our variational semantic memory, as a new long-term memory module, confers principled recall and update mechanisms that enable semantic information to be efficiently accrued and adapted for few-shot learning. Experiments demonstrate that the probabilistic modelling of prototypes achieves a more informative representation of object classes compared to deterministic vectors. The consistent new state-of-the-art performance on four benchmarks shows the benefit of variational semantic memory in boosting few-shot recognition.

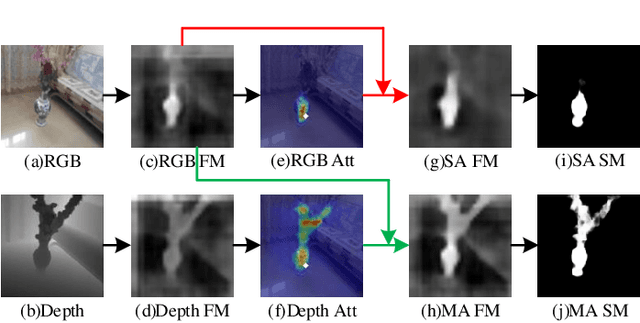

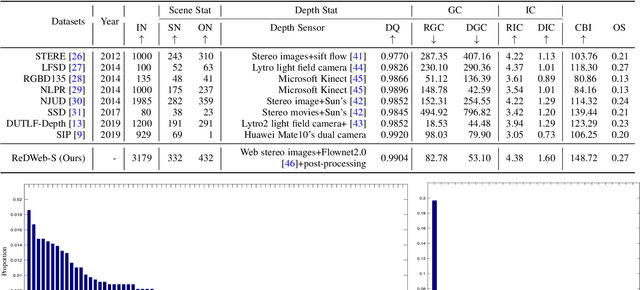

Learning Selective Mutual Attention and Contrast for RGB-D Saliency Detection

Oct 12, 2020

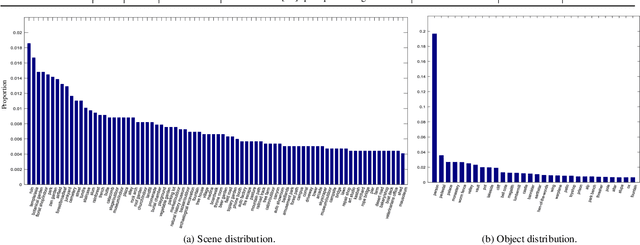

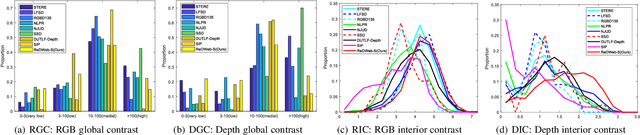

Abstract: How to effectively fuse cross-modal information is the key problem for RGB-D salient object detection (SOD). Early fusion and result fusion schemes fuse RGB and depth information at the input and output stages, respectively, and hence incur the problem of a distribution gap or information loss. Many models use the feature fusion strategy but are limited by low-order point-to-point fusion methods. In this paper, we propose a novel mutual attention model by fusing attention and contexts from different modalities. We use the non-local attention of one modality to propagate long-range contextual dependencies for the other modality, thus leveraging complementary attention cues to perform high-order and trilinear cross-modal interaction. We also propose to induce contrast inference from the mutual attention and obtain a unified model. Considering that low-quality depth data may be detrimental to model performance, we further propose selective attention to reweight the added depth cues. We embed the proposed modules in a two-stream CNN for RGB-D SOD. Experimental results have demonstrated the effectiveness of our proposed model. Moreover, we construct a new, challenging, large-scale and high-quality RGB-D SOD dataset, which can promote both the training and evaluation of deep models.
