A Survey of Knowledge-Intensive NLP with Pre-Trained Language Models

Feb 17, 2022 · Da Yin, Li Dong, Hao Cheng, Xiaodong Liu, Kai-Wei Chang, Furu Wei, Jianfeng Gao

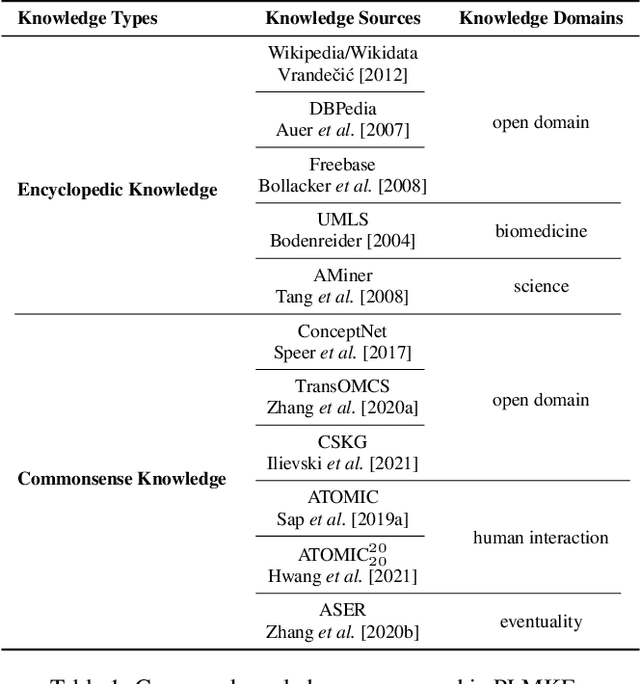

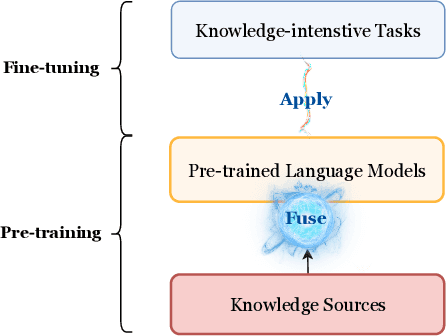

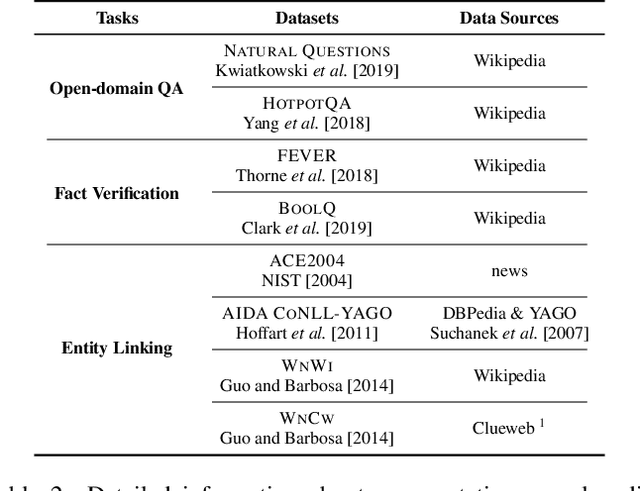

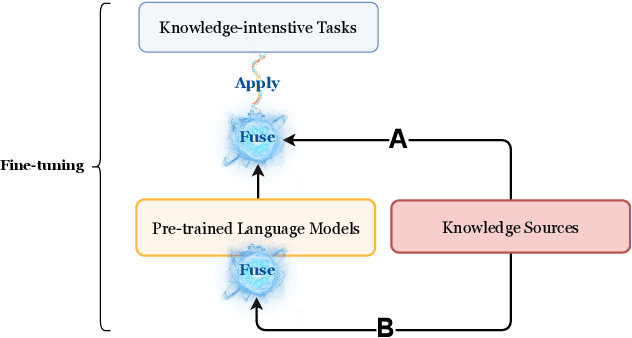

As pre-trained language models have increased model capacity, demand has grown for more knowledgeable natural language processing (NLP) models with advanced capabilities, including providing and flexibly using encyclopedic and commonsense knowledge. Pre-trained language models alone, however, lack the capacity to handle such knowledge-intensive NLP tasks. To address this challenge, a large number of pre-trained language models augmented with external knowledge sources have been proposed and are developing rapidly. In this paper, we summarize the current progress of pre-trained language model-based knowledge-enhanced models (PLMKEs) by dissecting their three vital elements: knowledge sources, knowledge-intensive NLP tasks, and knowledge fusion methods. Finally, we present the challenges of PLMKEs based on the discussion of these three elements and attempt to provide NLP practitioners with potential directions for further research.

AdaPrompt: Adaptive Model Training for Prompt-based NLP

Feb 10, 2022 · Yulong Chen, Yang Liu, Li Dong, Shuohang Wang, Chenguang Zhu, Michael Zeng, Yue Zhang

Prompt-based learning, with its capability to tackle zero-shot and few-shot NLP tasks, has gained much attention in the community. The main idea is to bridge the gap between NLP downstream tasks and language modeling (LM) by mapping these tasks into natural language prompts, which are then filled by pre-trained language models (PLMs). However, for prompt learning, there are still two salient gaps between NLP tasks and pretraining. First, prompt information is not necessarily sufficiently present during LM pretraining. Second, task-specific data are not necessarily well represented during pretraining. We address these two issues by proposing AdaPrompt, which adaptively retrieves external data for continual pretraining of PLMs by making use of both task and prompt characteristics. In addition, we make use of knowledge in Natural Language Inference models to derive adaptive verbalizers. Experimental results on five NLP benchmarks show that AdaPrompt can improve over standard PLMs in few-shot settings. In zero-shot settings, our method outperforms standard prompt-based methods by up to 26.35% relative error reduction.
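
The abstract reports its zero-shot gain as a relative error reduction but does not spell out the formula. The sketch below assumes the usual definition, the fraction of the baseline's errors that the new method removes. The function name and the accuracy values are hypothetical and are not figures from the paper.

```python
def relative_error_reduction(baseline_accuracy: float, new_accuracy: float) -> float:
    """Fraction of the baseline's errors that the new method removes."""
    baseline_error = 1.0 - baseline_accuracy
    new_error = 1.0 - new_accuracy
    if baseline_error <= 0.0:
        raise ValueError("baseline makes no errors, so the reduction is undefined")
    return (baseline_error - new_error) / baseline_error


# Hypothetical accuracies, chosen only to illustrate the arithmetic.
reduction = relative_error_reduction(baseline_accuracy=0.60, new_accuracy=0.70)
print(f"relative error reduction: {reduction:.2%}")
```

Under this definition, a 10-point accuracy gain from a 60% baseline removes a quarter of the errors, which is why relative error reduction can look much larger than the absolute accuracy difference.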

Corrupted Image Modeling for Self-Supervised Visual Pre-Training

Feb 07, 2022 · Yuxin Fang, Li Dong, Hangbo Bao, Xinggang Wang, Furu Wei

We introduce Corrupted Image Modeling (CIM) for self-supervised visual pre-training. CIM uses an auxiliary generator with a small trainable BEiT to corrupt the input image instead of using artificial mask tokens, where some patches are randomly selected and replaced with plausible alternatives sampled from the BEiT output distribution. Given this corrupted image, an enhancer network learns to either recover all the original image pixels, or predict whether each visual token is replaced by a generator sample or not. The generator and the enhancer are simultaneously trained and synergistically updated. After pre-training, the enhancer can be used as a high-capacity visual encoder for downstream tasks. CIM is a general and flexible visual pre-training framework that is suitable for various network architectures. For the first time, CIM demonstrates that both ViT and CNN can learn rich visual representations using a unified, non-Siamese framework. Experimental results show that our approach achieves compelling results in vision benchmarks, such as ImageNet classification and ADE20K semantic segmentation. For example, 300-epoch CIM pre-trained vanilla ViT-Base/16 and ResNet-50 obtain 83.3 and 80.6 Top-1 fine-tuning accuracy on ImageNet-1K image classification respectively.
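
The corruption step and the replaced-or-not objective described above can be sketched on a toy sequence of visual token ids. This is a minimal illustration under stated assumptions, not the authors' implementation: the generator is replaced by a uniform random sampler, the function name `corrupt_visual_tokens` is hypothetical, and a sampled token that happens to equal the original is labeled as not replaced, a convention the abstract does not confirm.

```python
import random


def corrupt_visual_tokens(tokens, vocab_size, replace_ratio, rng):
    """Replace a random subset of tokens; return (corrupted tokens, 0/1 labels)."""
    num_replace = max(1, int(len(tokens) * replace_ratio))
    positions = rng.sample(range(len(tokens)), num_replace)
    corrupted = list(tokens)
    for pos in positions:
        # Stand-in for sampling from the generator's output distribution
        # (uniform over the vocabulary here, which is an assumption).
        corrupted[pos] = rng.randrange(vocab_size)
    # Label 1 where the token now differs from the original, 0 elsewhere.
    labels = [int(new != old) for new, old in zip(corrupted, tokens)]
    return corrupted, labels


rng = random.Random(0)
original = [5, 17, 42, 8, 23, 4, 16, 15]
corrupted, labels = corrupt_visual_tokens(original, vocab_size=64, replace_ratio=0.5, rng=rng)
print(corrupted)
print(labels)
```

The enhancer would then be trained either to predict `labels` from `corrupted` or to reconstruct the original pixels, matching the two objectives the abstract names.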

Kformer: Knowledge Injection in Transformer Feed-Forward Layers

Jan 15, 2022 · Yunzhi Yao, Shaohan Huang, Ningyu Zhang, Li Dong, Furu Wei, Huajun Chen

Knowledge-enhanced models have developed a diverse set of techniques for integrating knowledge from different knowledge sources. However, most previous work neglects the language model's own ability and simply concatenates external knowledge at the input. Recent work proposed that the feed-forward network (FFN) in a pre-trained language model can be seen as a memory that stores factual knowledge. In this work, we explore the FFN in Transformer and propose a novel knowledge fusion model, namely Kformer, which incorporates external knowledge through the feed-forward layer in Transformer. We empirically find that simply injecting knowledge into the FFN can enhance the pre-trained language model's ability and facilitate current knowledge fusion methods. Our results on two benchmarks in the commonsense reasoning (i.e., SocialIQA) and medical question answering (i.e., MedQA-USMLE) domains demonstrate that Kformer can utilize external knowledge deeply and achieves absolute improvements on these tasks.
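
One way to picture injection through the feed-forward layer is to take the key-value-memory view of the FFN mentioned above and append knowledge vectors as extra memory slots. The sketch below is an illustration under that assumption and is not the authors' code: the function name, the ReLU activation, the omission of bias terms, and the toy numbers are all hypothetical.

```python
def relu(x):
    return max(0.0, x)


def ffn_with_knowledge(x, keys, values, know_keys=(), know_values=()):
    """FFN viewed as a key-value memory, with optional extra knowledge slots.

    Each key scores the input; each value is added to the output in
    proportion to the activated score. Knowledge slots are simply appended
    to the FFN's own slots (an assumed reading of the injection).
    """
    all_keys = list(keys) + list(know_keys)
    all_values = list(values) + list(know_values)
    activations = [relu(sum(xi * ki for xi, ki in zip(x, key))) for key in all_keys]
    out_dim = len(all_values[0])
    return [sum(a * v[j] for a, v in zip(activations, all_values)) for j in range(out_dim)]


x = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0]]      # toy FFN "keys" (first projection)
values = [[1.0, 0.0], [0.0, 1.0]]    # toy FFN "values" (second projection)

plain = ffn_with_knowledge(x, keys, values)
injected = ffn_with_knowledge(x, keys, values,
                              know_keys=[[2.0, 0.0]],     # hypothetical knowledge key
                              know_values=[[0.0, 3.0]])   # hypothetical knowledge value
print(plain, injected)
```

In this toy setup the knowledge slot fires on the input and shifts the second output dimension, while the original FFN parameters are left untouched.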

Swin Transformer V2: Scaling Up Capacity and Resolution

Nov 18, 2021 · Ze Liu, Han Hu, Yutong Lin, Zhuliang Yao, Zhenda Xie, Yixuan Wei, Jia Ning, Yue Cao, Zheng Zhang, Li Dong, Furu Wei, Baining Guo

We present techniques for scaling Swin Transformer up to 3 billion parameters and making it capable of training with images of up to 1,536×1,536 resolution. By scaling up capacity and resolution, Swin Transformer sets new records on four representative vision benchmarks: 84.0% top-1 accuracy on ImageNet-V2 image classification, 63.1/54.4 box/mask mAP on COCO object detection, 59.9 mIoU on ADE20K semantic segmentation, and 86.8% top-1 accuracy on Kinetics-400 video action classification. Our techniques are generally applicable for scaling up vision models, which has not been explored as widely as the scaling of NLP language models, partly due to the following difficulties in training and applications: 1) vision models often face instability issues at scale and 2) many downstream vision tasks require high-resolution images or windows, and it is not clear how to effectively transfer models pre-trained at low resolutions to higher-resolution ones. GPU memory consumption is also a problem when the image resolution is high. To address these issues, we present several techniques, illustrated using Swin Transformer as a case study: 1) a post-normalization technique and a scaled cosine attention approach to improve the stability of large vision models; 2) a log-spaced continuous position bias technique to effectively transfer models pre-trained on low-resolution images and windows to their higher-resolution counterparts. In addition, we share crucial implementation details that lead to significant savings in GPU memory consumption and thus make it feasible to train large vision models with regular GPUs. Using these techniques and self-supervised pre-training, we successfully train a strong 3B Swin Transformer model and effectively transfer it to various vision tasks involving high-resolution images or windows, achieving state-of-the-art accuracy on a variety of benchmarks.
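
Two of the techniques named above have compact scalar forms, sketched here in plain Python. This is a minimal sketch under stated assumptions: scaled cosine attention is taken to be cosine similarity divided by a temperature plus a position bias, and log-spaced coordinates are taken to be `sign(d) * log(1 + |d|)`. The function names are hypothetical, and the fixed temperature stands in for what would be a learned parameter.

```python
import math


def cosine_attention_logit(q, k, tau=0.1, bias=0.0):
    """Attention logit from cosine similarity, scaled by a temperature tau."""
    dot = sum(a * b for a, b in zip(q, k))
    norm = math.sqrt(sum(a * a for a in q)) * math.sqrt(sum(b * b for b in k))
    return dot / norm / tau + bias


def log_spaced_offset(delta):
    """Map a relative offset to log space, preserving its sign."""
    return math.copysign(math.log1p(abs(delta)), delta)


# Cosine logits depend only on direction, so rescaling q or k changes nothing.
print(cosine_attention_logit([1.0, 2.0], [3.0, 1.0]))
print(cosine_attention_logit([10.0, 20.0], [0.3, 0.1]))

# Large offsets are compressed, so a bigger window needs less extrapolation.
print([round(log_spaced_offset(d), 3) for d in (-15, -7, 0, 7, 15)])
```

Because the logit is bounded by `1 / tau` in magnitude regardless of activation scale, a few large-norm features cannot dominate the attention map, which is the stability argument for the cosine form.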

VLMo: Unified Vision-Language Pre-Training with Mixture-of-Modality-Experts

Nov 03, 2021 · Wenhui Wang, Hangbo Bao, Li Dong, Furu Wei

We present a unified Vision-Language pretrained Model (VLMo) that jointly learns a dual encoder and a fusion encoder with a modular Transformer network. Specifically, we introduce Mixture-of-Modality-Experts (MoME) Transformer, where each block contains a pool of modality-specific experts and a shared self-attention layer. Because of the modeling flexibility of MoME, pretrained VLMo can be fine-tuned as a fusion encoder for vision-language classification tasks, or used as a dual encoder for efficient image-text retrieval. Moreover, we propose a stagewise pre-training strategy, which effectively leverages large-scale image-only and text-only data besides image-text pairs. Experimental results show that VLMo achieves state-of-the-art results on various vision-language tasks, including VQA and NLVR2. The code and pretrained models are available at https://aka.ms/vlmo.
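
The block structure described above, a shared self-attention layer followed by a modality-specific expert, can be sketched with toy stand-ins. Everything in this sketch is an illustrative assumption: the uniform-averaging "attention", the expert functions, and the expert names are placeholders, and residual connections and normalization are omitted.

```python
def shared_self_attention(tokens):
    """Toy stand-in for self-attention: every token becomes the mean token."""
    dim = len(tokens[0])
    mean = [sum(t[j] for t in tokens) / len(tokens) for j in range(dim)]
    return [list(mean) for _ in tokens]


# Hypothetical experts; in a real model each would be a feed-forward network.
EXPERTS = {
    "vision": lambda v: [2.0 * x for x in v],
    "language": lambda v: [x + 1.0 for x in v],
    "vision-language": lambda v: [-x for x in v],
}


def mome_block(tokens, modality):
    """Shared attention for all inputs, then the expert chosen by modality."""
    attended = shared_self_attention(tokens)
    expert = EXPERTS[modality]
    return [expert(t) for t in attended]


patches = [[1.0, 3.0], [3.0, 5.0]]
vision_out = mome_block(patches, "vision")
language_out = mome_block(patches, "language")
print(vision_out, language_out)
```

The same attention parameters serve every modality while only the expert changes, which is what lets one pretrained network act as either a dual encoder or a fusion encoder.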

Multilingual Machine Translation Systems from Microsoft for WMT21 Shared Task

Nov 03, 2021 · Jian Yang, Shuming Ma, Haoyang Huang, Dongdong Zhang, Li Dong, Shaohan Huang, Alexandre Muzio, Saksham Singhal, Hany Hassan Awadalla, Xia Song, Furu Wei

This report describes Microsoft's machine translation systems for the WMT21 shared task on large-scale multilingual machine translation. We participated in all three evaluation tracks: the Large Track, which is unconstrained, and the two Small Tracks, which are fully constrained. Our submissions were initialized with DeltaLM (https://aka.ms/deltalm), a generic pre-trained multilingual encoder-decoder model, and fine-tuned on the large collected parallel data and the data sources allowed by each track's settings. We also applied progressive learning and iterative back-translation to further improve performance. Our final submissions ranked first on the three tracks in terms of the automatic evaluation metric.

s2s-ft: Fine-Tuning Pretrained Transformer Encoders for Sequence-to-Sequence Learning

Oct 26, 2021 · Hangbo Bao, Li Dong, Wenhui Wang, Nan Yang, Furu Wei

Pretrained bidirectional Transformers, such as BERT, have achieved significant improvements in a wide variety of language understanding tasks, but it is not straightforward to apply them directly to natural language generation. In this paper, we present a sequence-to-sequence fine-tuning toolkit, s2s-ft, which adopts pretrained Transformers for conditional generation tasks. Inspired by UniLM, we implement three sequence-to-sequence fine-tuning algorithms, namely, causal fine-tuning, masked fine-tuning, and pseudo-masked fine-tuning. By leveraging existing pretrained bidirectional Transformers, s2s-ft achieves strong performance on several benchmarks of abstractive summarization and question generation. Moreover, we demonstrate that the s2s-ft package supports both monolingual and multilingual NLG tasks. The s2s-ft toolkit is available at https://github.com/microsoft/unilm/tree/master/s2s-ft.

Allocating Large Vocabulary Capacity for Cross-lingual Language Model Pre-training

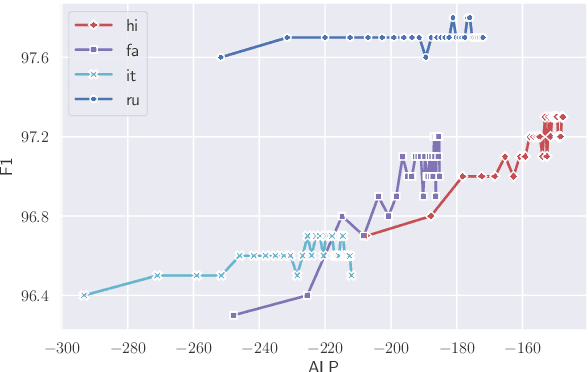

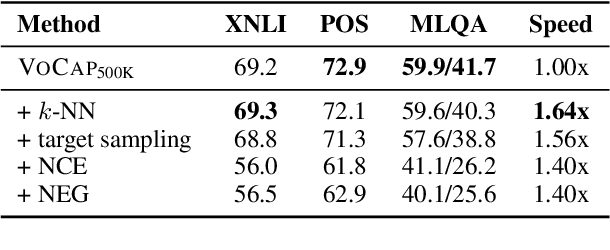

Sep 15, 2021 · Bo Zheng, Li Dong, Shaohan Huang, Saksham Singhal, Wanxiang Che, Ting Liu, Xia Song, Furu Wei

Compared to monolingual models, cross-lingual models usually require a more expressive vocabulary to represent all languages adequately. We find that many languages are under-represented in recent cross-lingual language models due to the limited vocabulary capacity. To this end, we propose an algorithm, VoCap, to determine the desired vocabulary capacity of each language. However, increasing the vocabulary size significantly slows down pre-training. To address this issue, we propose k-NN-based target sampling to accelerate the expensive softmax. Our experiments show that the multilingual vocabulary learned with VoCap benefits cross-lingual language model pre-training. Moreover, k-NN-based target sampling mitigates the side effects of increasing the vocabulary size while achieving comparable performance and faster pre-training speed. The code and the pretrained multilingual vocabularies are available at https://github.com/bozheng-hit/VoCapXLM.
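
The abstract does not detail how k-NN-based target sampling builds its reduced softmax. The sketch below assumes one plausible reading: the softmax is computed only over the target tokens in a batch plus each target's k nearest neighbors in embedding space, measured by dot product. The function names, the similarity measure, and the toy embeddings are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def knn_candidate_set(target_ids, embeddings, k):
    """Targets plus each target's k most similar vocabulary entries."""
    candidates = set(target_ids)
    for t in target_ids:
        scored = [(dot(embeddings[t], e), i) for i, e in enumerate(embeddings) if i != t]
        scored.sort(reverse=True)
        candidates.update(i for _, i in scored[:k])
    return sorted(candidates)


def sampled_softmax(hidden, embeddings, candidates):
    """Softmax over the candidate subset instead of the full vocabulary."""
    logits = [dot(hidden, embeddings[c]) for c in candidates]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - peak) for l in logits]
    total = sum(exps)
    return {c: e / total for c, e in zip(candidates, exps)}


# Toy 4-word vocabulary with 2-dimensional embeddings.
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]
candidates = knn_candidate_set(target_ids=[0], embeddings=embeddings, k=1)
probs = sampled_softmax(hidden=[1.0, 0.0], embeddings=embeddings, candidates=candidates)
print(candidates, probs)
```

The cost of the normalization then scales with the size of the candidate set rather than the full vocabulary, which is where the speedup would come from under this reading.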

XLM-E: Cross-lingual Language Model Pre-training via ELECTRA

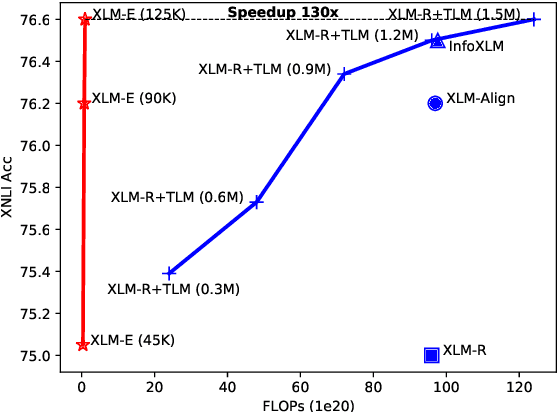

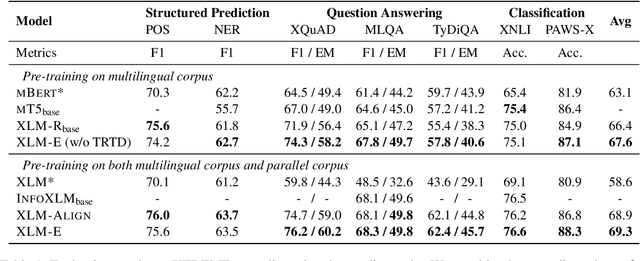

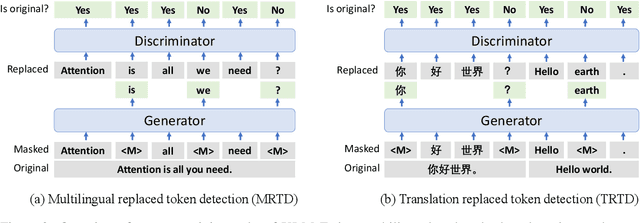

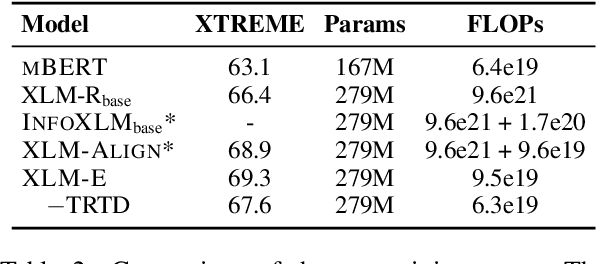

Jun 30, 2021 · Zewen Chi, Shaohan Huang, Li Dong, Shuming Ma, Saksham Singhal, Payal Bajaj, Xia Song, Furu Wei

In this paper, we introduce ELECTRA-style tasks to cross-lingual language model pre-training. Specifically, we present two pre-training tasks, namely multilingual replaced token detection and translation replaced token detection. In addition, we pretrain the model, named XLM-E, on both multilingual and parallel corpora. Our model outperforms the baseline models on various cross-lingual understanding tasks at a much lower computation cost. Moreover, analysis shows that XLM-E tends to obtain better cross-lingual transferability.
