Daxiang Dong

QianfanHuijin Technical Report: A Novel Multi-Stage Training Paradigm for Finance Industrial LLMs

Dec 30, 2025

Abstract:Domain-specific enhancement of Large Language Models (LLMs) within the financial context has long been a focal point of industrial application. While previous models such as BloombergGPT and Baichuan-Finance primarily focused on knowledge enhancement, the deepening complexity of financial services has driven a growing demand for models that possess not only domain knowledge but also robust financial reasoning and agentic capabilities. In this paper, we present QianfanHuijin, a financial domain LLM, and propose a generalizable multi-stage training paradigm for industrial model enhancement. Our approach begins with Continual Pre-training (CPT) on financial corpora to consolidate the knowledge base. This is followed by a fine-grained Post-training pipeline designed with increasing specificity: starting with Financial SFT, progressing to Finance Reasoning RL and Finance Agentic RL, and culminating in General RL aligned with real-world business scenarios. Empirical results demonstrate that QianfanHuijin achieves superior performance across various authoritative financial benchmarks. Furthermore, ablation studies confirm that the targeted Reasoning RL and Agentic RL stages yield significant gains in their respective capabilities. These findings validate our motivation and suggest that this fine-grained, progressive post-training methodology is poised to become a mainstream paradigm for various industrial-enhanced LLMs.

Tree-of-Code: A Tree-Structured Exploring Framework for End-to-End Code Generation and Execution in Complex Task Handling

Dec 19, 2024

Abstract:Solving complex reasoning tasks is a key real-world application of agents. Thanks to the pretraining of Large Language Models (LLMs) on code data, recent approaches like CodeAct successfully use code as LLM agents' action, achieving good results. However, CodeAct greedily generates the next action's code block by relying on fragmented thoughts, resulting in inconsistency and instability. Moreover, CodeAct lacks action-related ground-truth (GT), making its supervision signals and termination conditions questionable in multi-turn interactions. To address these issues, we first introduce a simple yet effective end-to-end code generation paradigm, CodeProgram, which leverages code's systematic logic to align with global reasoning and enable cohesive problem-solving. Then, we propose Tree-of-Code (ToC), which self-grows CodeProgram nodes based on the executable nature of the code and enables self-supervision in a GT-free scenario. Experimental results on two datasets using ten popular zero-shot LLMs show ToC remarkably boosts accuracy by nearly 20% over CodeAct with less than 1/4 turns. Several LLMs even perform better on one-turn CodeProgram than on multi-turn CodeAct. To further investigate the trade-off between efficacy and efficiency, we test different ToC tree sizes and exploration mechanisms. We also highlight the potential of ToC's end-to-end data generation for supervised and reinforced fine-tuning.

Tree-of-Code: A Hybrid Approach for Robust Complex Task Planning and Execution

Dec 18, 2024

Abstract:The exceptional capabilities of large language models (LLMs) have substantially accelerated the rapid rise and widespread adoption of agents. Recent studies have demonstrated that generating Python code to consolidate LLM-based agents' actions into a unified action space (CodeAct) is a promising approach for developing real-world LLM agents. However, this step-by-step code generation approach often lacks consistency and robustness, leading to instability in agent applications, particularly for complex reasoning and out-of-domain tasks. In this paper, we propose a novel approach called Tree-of-Code (ToC) to tackle the challenges of complex problem planning and execution with an end-to-end mechanism. By integrating key ideas from both Tree-of-Thought and CodeAct, ToC combines their strengths to enhance solution exploration. In our framework, each final code execution result is treated as a node in the decision tree, with a breadth-first search strategy employed to explore potential solutions. The final outcome is determined through a voting mechanism based on the outputs of the nodes.
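The breadth-first exploration and voting described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the `generate` and `execute` callables, the retry-on-failure expansion rule, and the `max_depth` parameter are all assumptions made for illustration, and the toy stand-ins below replace a real LLM and code executor.

```python
from collections import Counter, deque
from typing import Callable, Deque, Dict, List, Optional, Tuple


def tree_of_code_vote(
    root_prompt: str,
    generate: Callable[[str], List[str]],      # hypothetical: prompt -> candidate programs
    execute: Callable[[str], Optional[str]],   # hypothetical: program -> output, None on failure
    max_depth: int = 2,
) -> Optional[str]:
    """Explore candidate programs breadth-first, then majority-vote on their outputs."""
    queue: Deque[Tuple[str, int]] = deque([(root_prompt, 0)])
    outputs: List[str] = []
    while queue:
        prompt, depth = queue.popleft()
        for program in generate(prompt):
            result = execute(program)
            if result is not None:
                # A successful execution result counts as one voting node.
                outputs.append(result)
            elif depth + 1 < max_depth:
                # Assumed expansion rule: a failed program spawns a child prompt.
                queue.append((prompt + "\n# retry after failure: " + program, depth + 1))
    if not outputs:
        return None
    return Counter(outputs).most_common(1)[0][0]


# Toy stand-ins for the LLM and the executor (purely illustrative).
TOY_RESULTS: Dict[str, Optional[str]] = {
    "prog_a": "42", "prog_b": "42", "prog_c": None, "prog_d": "41",
}


def toy_generate(prompt: str) -> List[str]:
    return ["prog_d"] if "retry" in prompt else ["prog_a", "prog_b", "prog_c"]


def toy_execute(program: str) -> Optional[str]:
    return TOY_RESULTS[program]


answer = tree_of_code_vote("solve the task", toy_generate, toy_execute)
```

In this toy run, two programs return "42", one fails and is retried, and the retry returns "41", so the vote selects "42".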

Warming Up Cold-Start CTR Prediction by Learning Item-Specific Feature Interactions

Jul 14, 2024

Abstract:In recommendation systems, new items are continuously introduced, initially lacking interaction records but gradually accumulating them over time. Accurately predicting the click-through rate (CTR) for these items is crucial for enhancing both revenue and user experience. While existing methods focus on enhancing item ID embeddings for new items within general CTR models, they tend to adopt a global feature interaction approach, often overshadowing new items with sparse data by those with abundant interactions. Addressing this, our work introduces EmerG, a novel approach that warms up cold-start CTR prediction by learning item-specific feature interaction patterns. EmerG utilizes hypernetworks to generate an item-specific feature graph based on item characteristics, which is then processed by a Graph Neural Network (GNN). This GNN is specially tailored to provably capture feature interactions at any order through a customized message passing mechanism. We further design a meta learning strategy that optimizes parameters of hypernetworks and GNN across various item CTR prediction tasks, while only adjusting a minimal set of item-specific parameters within each task. This strategy effectively reduces the risk of overfitting when dealing with limited data. Extensive experiments on benchmark datasets validate that EmerG consistently performs the best given no, a few and sufficient instances of new items.

ColdNAS: Search to Modulate for User Cold-Start Recommendation

Jun 06, 2023

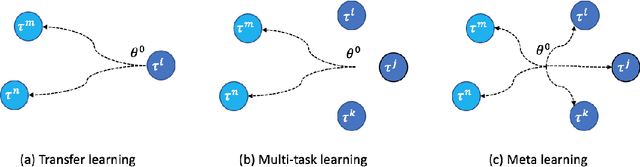

Abstract:Making personalized recommendations for cold-start users, who have only a few interaction histories, is a challenging problem in recommendation systems. Recent works leverage hypernetworks to directly map user interaction histories to user-specific parameters, which are then used to modulate the predictor through a feature-wise linear modulation function. These works obtain state-of-the-art performance. However, the physical meaning of scaling and shifting in recommendation data is unclear. Instead of using a fixed modulation function and deciding the modulation position by expertise, we propose a modulation framework called ColdNAS for the user cold-start problem, where we look for a proper modulation structure, including function and position, via neural architecture search. We design a search space which covers broad models and theoretically prove that this search space can be transformed to a much smaller space, enabling an efficient and robust one-shot search algorithm. Extensive experimental results on benchmark datasets show that ColdNAS consistently performs the best. We observe that different modulation functions lead to the best performance on different datasets, which validates the necessity of designing a searching-based method.
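For readers unfamiliar with the fixed modulation function that this abstract takes as its starting point, feature-wise linear modulation scales and shifts each hidden feature with user-specific parameters. The sketch below shows only that baseline operation, not ColdNAS's searched structure; the function name `film` and the example values are assumptions for illustration.

```python
from typing import List, Sequence


def film(hidden: Sequence[float], gamma: Sequence[float], beta: Sequence[float]) -> List[float]:
    """Feature-wise linear modulation: out[i] = gamma[i] * hidden[i] + beta[i].

    In the setting described above, gamma (scale) and beta (shift) would be
    produced by a hypernetwork from a user's interaction history; here they
    are passed in directly.
    """
    if not (len(hidden) == len(gamma) == len(beta)):
        raise ValueError("hidden, gamma and beta must have the same length")
    return [g * h + b for h, g, b in zip(hidden, gamma, beta)]


# Illustrative values only: a 2-dimensional hidden layer and made-up parameters.
modulated = film(hidden=[1.0, 2.0], gamma=[2.0, 0.5], beta=[0.0, 1.0])
```

A search-based method would treat both the operation (here, multiply then add) and the layer at which it is applied as choices to be learned rather than fixed in advance.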

Large-scale Knowledge Distillation with Elastic Heterogeneous Computing Resources

Jul 14, 2022

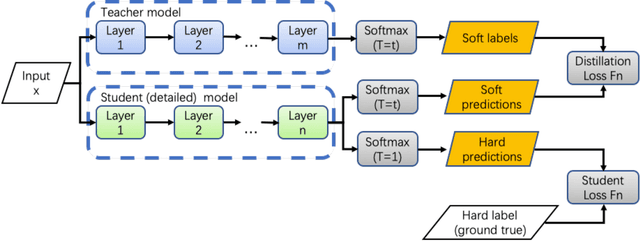

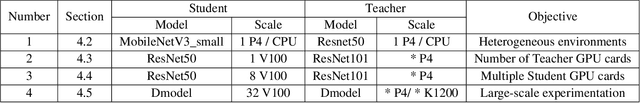

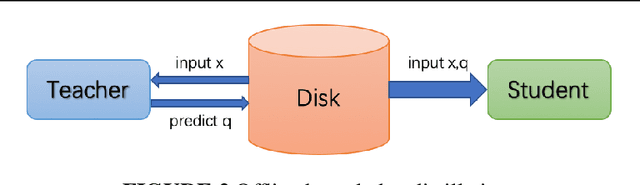

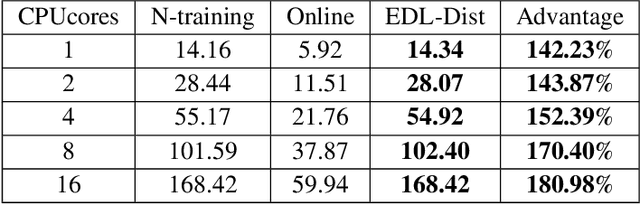

Abstract:Although more layers and more parameters generally improve model accuracy, such big models generally have high computational complexity and require large amounts of memory, which exceeds the capacity of small devices for inference and incurs long training time. In addition, the long training and inference times of big models are difficult to afford even on high-performance servers. As an efficient approach to compress a large deep model (a teacher model) into a compact model (a student model), knowledge distillation emerges as a promising way to deal with big models. Existing knowledge distillation methods cannot exploit elastically available computing resources and therefore suffer from low efficiency. In this paper, we propose an Elastic Deep Learning framework for knowledge Distillation, i.e., EDL-Dist. The advantages of EDL-Dist are three-fold. First, the inference and training processes are separated. Second, elastically available computing resources can be utilized to improve efficiency. Third, fault tolerance of the training and inference processes is supported. We conduct extensive experiments to show that the throughput of EDL-Dist is up to 3.125 times faster than the baseline method (online knowledge distillation) while the accuracy is similar or higher.

JIZHI: A Fast and Cost-Effective Model-As-A-Service System for Web-Scale Online Inference at Baidu

Jun 03, 2021

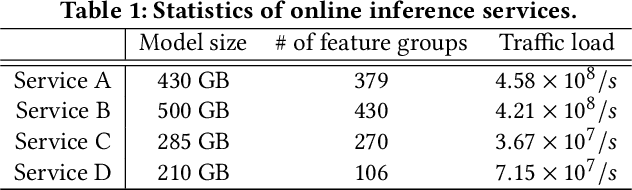

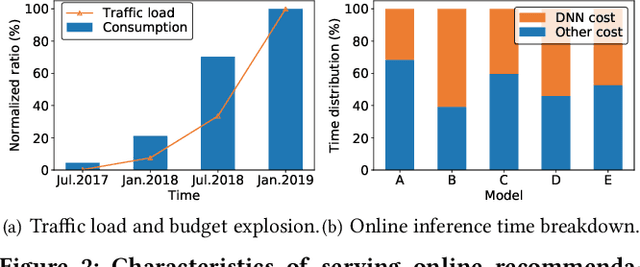

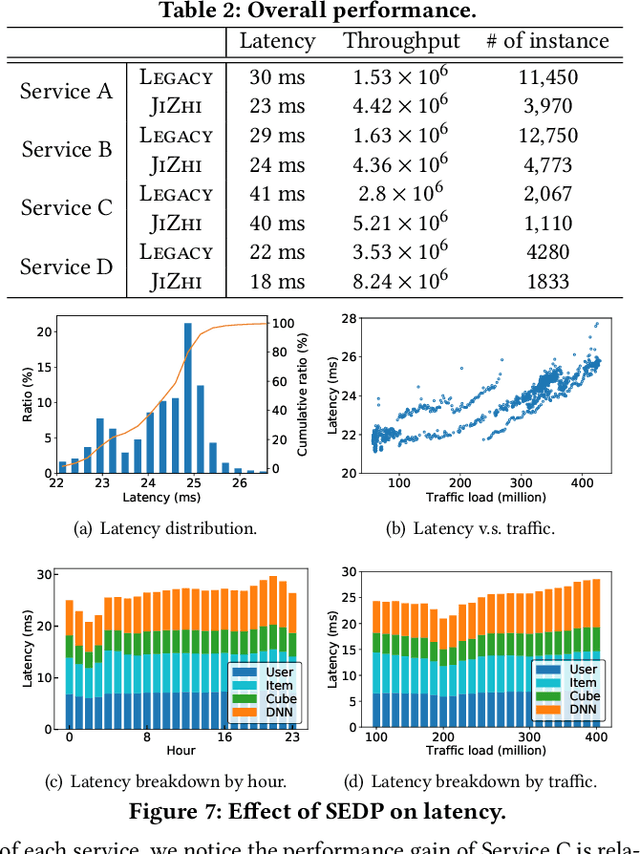

Abstract:In modern internet industries, deep learning based recommender systems have become an indispensable building block for a wide spectrum of applications, such as search engines, news feeds, and short video clips. However, it remains challenging to serve well-trained deep models for online real-time inference in a cost-effective manner, given the time-varying web-scale traffic from billions of users. In this work, we present JIZHI, a Model-as-a-Service system that handles hundreds of millions of online inference requests per second to huge deep models with more than trillions of sparse parameters, for over twenty real-time recommendation services at Baidu, Inc. In JIZHI, the inference workflow of every recommendation request is transformed to a Staged Event-Driven Pipeline (SEDP), where each node in the pipeline refers to a staged computation or I/O intensive task processor. As real-time inference requests arrive, each modularized processor can be run in a fully asynchronous way and managed separately. Besides, JIZHI introduces heterogeneous and hierarchical storage to further accelerate the online inference process by reducing unnecessary computations and potential data access latency induced by ultra-sparse model parameters. Moreover, an intelligent resource manager has been deployed to maximize the throughput of JIZHI over the shared infrastructure by searching the optimal resource allocation plan from historical logs and fine-tuning the load shedding policies over intermediate system feedback. Extensive experiments have been done to demonstrate the advantages of JIZHI from the perspectives of end-to-end service latency, system-wide throughput, and resource consumption. JIZHI has helped Baidu save more than ten million US dollars in hardware and utility costs while handling 200% more traffic without sacrificing inference efficiency.

RocketQA: An Optimized Training Approach to Dense Passage Retrieval for Open-Domain Question Answering

Oct 16, 2020

Abstract:In open-domain question answering, dense passage retrieval has become a new paradigm to retrieve relevant passages for answer finding. Typically, the dual-encoder architecture is adopted to learn dense representations of questions and passages for matching. However, it is difficult to train an effective dual-encoder due to challenges including the discrepancy between training and inference, the existence of unlabeled positives, and limited training data. To address these challenges, we propose an optimized training approach, called RocketQA, to improve dense passage retrieval. We make three major technical contributions in RocketQA, namely cross-batch negatives, denoised negative sampling and data augmentation. Extensive experiments show that RocketQA significantly outperforms previous state-of-the-art models on both MSMARCO and Natural Questions. Besides, built upon RocketQA, we achieve the first rank on the leaderboard of the MSMARCO Passage Ranking Task.
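To give a feel for the first contribution named above, the sketch below contrasts a per-device contrastive loss with one that pools passages from every device as negatives. It is a simplified illustration under stated assumptions, not RocketQA's code: the dot-product scoring, the softmax negative log-likelihood, the function names, and the toy vectors are all chosen here for illustration.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]
Pair = Tuple[Vector, Vector]  # (question embedding, positive passage embedding)


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def contrastive_nll(question: Vector, passages: List[Vector], positive_index: int) -> float:
    """Softmax negative log-likelihood of the positive passage among all candidates."""
    scores = [dot(question, p) for p in passages]
    top = max(scores)  # subtract the max for numerical stability
    log_z = top + math.log(sum(math.exp(s - top) for s in scores))
    return log_z - scores[positive_index]


def cross_batch_loss(device_batches: List[List[Pair]]) -> float:
    """Mean loss where each question sees the passages of every device as candidates."""
    all_passages = [passage for batch in device_batches for _, passage in batch]
    losses = []
    offset = 0
    for batch in device_batches:
        for i, (question, _) in enumerate(batch):
            losses.append(contrastive_nll(question, all_passages, offset + i))
        offset += len(batch)
    return sum(losses) / len(losses)


# Two hypothetical devices, one (question, positive passage) pair each.
batches = [[([1.0, 0.0], [1.0, 0.0])], [([0.0, 1.0], [0.0, 1.0])]]
pooled = cross_batch_loss(batches)
# Per-device losses: each question sees only its own positive, so there are no negatives.
local = [cross_batch_loss([batch]) for batch in batches]
```

With a single pair per device, the per-device loss has no negatives to learn from, while the pooled loss gives each question one extra negative from the other device.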

Learning to Recommend via Meta Parameter Partition

Dec 04, 2019

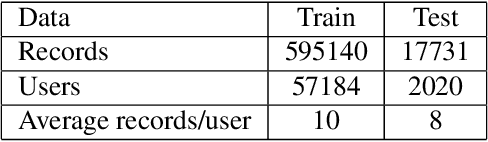

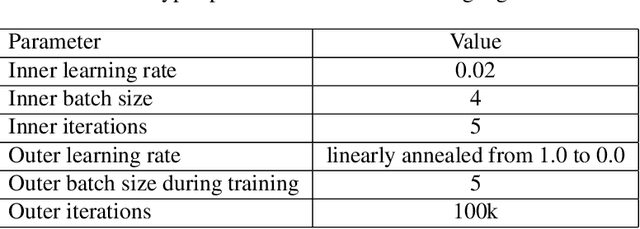

Abstract:In this paper we propose to solve an important problem in recommendation, user cold start, based on a meta learning method. Previous meta learning approaches finetune all parameters for each new user, which is expensive in both computation and storage. In contrast, we divide model parameters into fixed and adaptive parts and develop a two-stage meta learning algorithm to learn them separately. The fixed part, capturing user-invariant features, is shared by all users and is learned during the offline meta learning stage. The adaptive part, capturing user-specific features, is learned during the online meta learning stage. By decoupling user-invariant parameters from user-dependent parameters, the proposed approach is more efficient and cheaper in storage than previous methods. It also has the potential to deal with catastrophic forgetting while continually adapting to a stream of incoming users. Experiments on production data demonstrate that the proposed method converges faster and to a better performance than baseline methods. Meta-training without online meta model finetuning increases the AUC from 72.24% to 74.72% (2.48% absolute improvement). Online meta training achieves a further gain of 2.46% absolute improvement compared with offline meta training.
