Ning Yang

CompilerKV: Risk-Adaptive KV Compression via Offline Experience Compilation

Feb 09, 2026

Abstract:Large Language Models (LLMs) in long-context scenarios are severely constrained by the linear growth of Key-Value (KV) cache memory. Existing KV compression methods rely either on static thresholds and attention-only heuristics or on coarse memory budget allocation. Under tight memory budgets, these methods overlook two key factors: prompt-dependent variation in compression risk and functional heterogeneity across attention heads, which destabilize token selection and lead to tail failures. To address these challenges, we propose CompilerKV, a risk-adaptive and head-aware compression framework that compiles offline experience into reusable decision tables for prefill-only deployment. CompilerKV integrates two key synergistic components: (i) a Head Heterogeneity Table, learned via offline contextual bandits, which assigns head-specific reliability weights to govern functional differences across attention heads explicitly; and (ii) a Risk-Adaptive Threshold Gating mechanism that jointly models attention entropy and local perplexity, transforming prompt-level risk into deployable retention thresholds. Experiments on LongBench show CompilerKV dominates SOTA methods under a 512-token budget, recovering 97.7% of FullKV performance while achieving up to +5.2 points gain over the strongest competitor.
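To make the idea of head-weighted token retention under a fixed budget concrete, here is a minimal sketch. It is not the CompilerKV algorithm: the function name `select_tokens`, the weight values, and the simple weighted-sum scoring rule are all assumptions for illustration, and the risk-adaptive gating described in the abstract is omitted.

```python
import numpy as np

def select_tokens(attn, head_weights, budget):
    """Keep the `budget` tokens with the highest head-weighted attention mass.

    attn:         (num_heads, num_tokens) attention mass each head puts on each token.
    head_weights: (num_heads,) hypothetical per-head reliability weights.
    Returns the retained token indices in ascending order.
    """
    scores = head_weights @ attn                 # (num_tokens,) combined score
    budget = min(budget, scores.shape[0])
    if budget <= 0:
        return np.array([], dtype=int)
    keep = np.argpartition(scores, -budget)[-budget:]
    return np.sort(keep)

# Synthetic example: 4 heads, 16 cached tokens, keep 8.
rng = np.random.default_rng(0)
attn = rng.random((4, 16))
attn /= attn.sum(axis=1, keepdims=True)          # normalize each head's row
weights = np.array([0.4, 0.3, 0.2, 0.1])         # assumed values, not learned
kept = select_tokens(attn, weights, budget=8)
```

In a sketch like this, a learned table would supply `weights`, and a prompt-level risk signal could plausibly adjust how tokens are retained; both are left out here.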

On the Superlinear Relationship between SGD Noise Covariance and Loss Landscape Curvature

Feb 05, 2026

Abstract:Stochastic Gradient Descent (SGD) introduces anisotropic noise that is correlated with the local curvature of the loss landscape, thereby biasing optimization toward flat minima. Prior work often assumes an equivalence between the Fisher Information Matrix and the Hessian for negative log-likelihood losses, leading to the claim that the SGD noise covariance $\mathbf{C}$ is proportional to the Hessian $\mathbf{H}$. We show that this assumption holds only under restrictive conditions that are typically violated in deep neural networks. Using the recently discovered Activity-Weight Duality, we find a more general relationship agnostic to the specific loss formulation, showing that $\mathbf{C} \propto \mathbb{E}_p[\mathbf{h}_p^2]$, where $\mathbf{h}_p$ denotes the per-sample Hessian with $\mathbf{H} = \mathbb{E}_p[\mathbf{h}_p]$. As a consequence, $\mathbf{C}$ and $\mathbf{H}$ commute approximately rather than coincide exactly, and their diagonal elements follow an approximate power-law relation $C_{ii} \propto H_{ii}^{\gamma}$ with a theoretically bounded exponent $1 \leq \gamma \leq 2$, determined by per-sample Hessian spectra. Experiments across datasets, architectures, and loss functions validate these bounds, providing a unified characterization of the noise-curvature relationship in deep learning.
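A power-law relation between diagonal elements is commonly checked by fitting a straight line in log-log space, where the slope estimates the exponent. The sketch below illustrates that generic procedure on synthetic data; the function name `fit_power_law_exponent` and the synthetic values (including the exponent 1.5) are assumptions for illustration, not the authors' measurement pipeline or results.

```python
import numpy as np

def fit_power_law_exponent(h_diag, c_diag):
    """Estimate gamma in C_ii ∝ H_ii^gamma by least squares on log-log data.

    Both inputs must be strictly positive 1-D arrays of equal length.
    """
    log_h = np.log(h_diag)
    log_c = np.log(c_diag)
    slope, _intercept = np.polyfit(log_h, log_c, 1)  # slope is the exponent
    return slope

# Synthetic diagonals that follow an exact power law with exponent 1.5.
h_diag = np.logspace(-3, 1, 50)
c_diag = 0.7 * h_diag ** 1.5
gamma = fit_power_law_exponent(h_diag, c_diag)
```

With real measurements the points scatter around the line, so the fitted slope is only an estimate; non-positive diagonal entries would need to be filtered out before taking logarithms.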

Chain-of-Thought Compression Should Not Be Blind: V-Skip for Efficient Multimodal Reasoning via Dual-Path Anchoring

Jan 21, 2026

Abstract:While Chain-of-Thought (CoT) reasoning significantly enhances the performance of Multimodal Large Language Models (MLLMs), its autoregressive nature incurs prohibitive latency. Current efforts to mitigate this via token compression often fail by blindly applying text-centric metrics to multimodal contexts. We identify a critical failure mode termed Visual Amnesia, where linguistically redundant tokens are erroneously pruned, leading to hallucinations. To address this, we introduce V-Skip, which reformulates token pruning as a Visual-Anchored Information Bottleneck (VA-IB) optimization problem. V-Skip employs a dual-path gating mechanism that weighs token importance through both linguistic surprisal and cross-modal attention flow, effectively rescuing visually salient anchors. Extensive experiments on the Qwen2-VL and Llama-3.2 families demonstrate that V-Skip achieves a $2.9\times$ speedup with negligible accuracy loss. Specifically, it preserves fine-grained visual details, outperforming other baselines by over 30% on DocVQA.

Transient learning dynamics drive escape from sharp valleys in Stochastic Gradient Descent

Jan 16, 2026

Abstract:Stochastic gradient descent (SGD) is central to deep learning, yet the dynamical origin of its preference for flatter, more generalizable solutions remains unclear. Here, by analyzing SGD learning dynamics, we identify a nonequilibrium mechanism governing solution selection. Numerical experiments reveal a transient exploratory phase in which SGD trajectories repeatedly escape sharp valleys and transition toward flatter regions of the loss landscape. By using a tractable physical model, we show that the SGD noise reshapes the landscape into an effective potential that favors flat solutions. Crucially, we uncover a transient freezing mechanism: as training proceeds, growing energy barriers suppress inter-valley transitions and ultimately trap the dynamics within a single basin. Increasing the SGD noise strength delays this freezing, which enhances convergence to flatter minima. Together, these results provide a unified physical framework linking learning dynamics, loss-landscape geometry, and generalization, and suggest principles for the design of more effective optimization algorithms.

AI-Native 6G Physical Layer with Cross-Module Optimization and Cooperative Control Agents

Jan 07, 2026

Abstract:In this article, a framework for an AI-native, cross-module optimized physical layer with cooperative control agents is proposed, which involves optimization across global AI/ML modules of the physical layer with innovative design of multiple enhancement mechanisms and control strategies. Specifically, it achieves simultaneous optimization across global modules of uplink AI/ML-based joint source-channel coding with modulation, and downlink AI/ML-based modulation with precoding and corresponding data detection, reducing traditional inter-module information barriers to facilitate end-to-end optimization toward global objectives. Moreover, multiple enhancement mechanisms are also proposed, including i) an AI/ML-based cross-layer modulation approach with theoretical analysis for downlink transmission that breaks the isolation of inter-layer features to expand the solution space for determining improved constellations, ii) a utility-oriented precoder construction method that shifts the role of the AI/ML-based CSI feedback decoder from recovering the original CSI to directly generating precoding matrices aiming to improve end-to-end performance, and iii) incorporating modulation into AI/ML-based CSI feedback to bypass bit-level bottlenecks that introduce quantization errors, non-differentiable gradients, and limitations in constellation solution spaces. Furthermore, AI/ML-based control agents for optimized transmission schemes are proposed that leverage AI/ML to perform model switching according to channel state, thereby enabling integrated control for global throughput optimization. Finally, simulation results demonstrate the superiority of the proposed solutions in terms of BLER and throughput. These extensive simulations employ more practical assumptions that are aligned with the requirements of the 3GPP, which hopefully provides valuable insights for future standardization discussions.

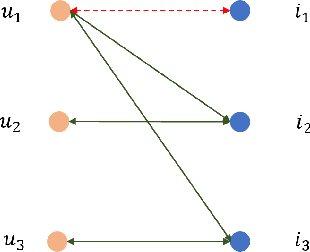

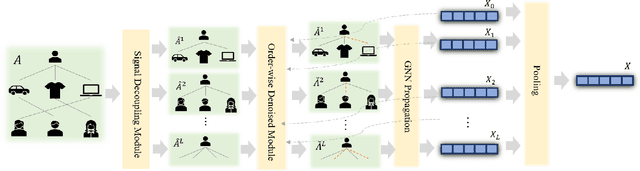

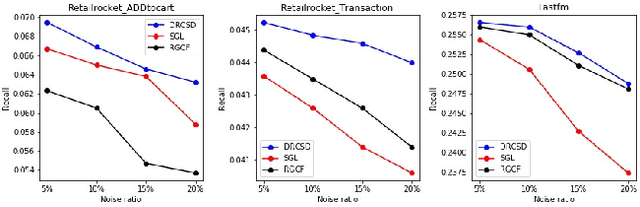

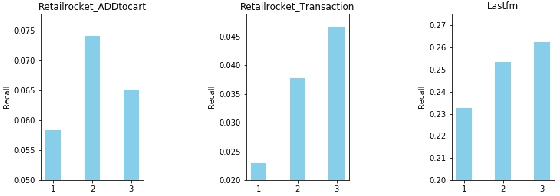

Denoised Recommendation Model with Collaborative Signal Decoupling

Nov 06, 2025

Abstract:Although the collaborative filtering (CF) algorithm has achieved remarkable performance in recommendation systems, it suffers from suboptimal recommendation performance due to noise in the user-item interaction matrix. Numerous noise-removal studies have improved recommendation models, but most existing approaches conduct denoising on a single graph. This may cause attenuation of collaborative signals: removing edges between two nodes can interrupt paths between other nodes, weakening path-dependent collaborative information. To address these limitations, this study proposes a novel GNN-based CF model called DRCSD for denoising unstable interactions. DRCSD includes two core modules: a collaborative signal decoupling module (decomposes signals into distinct orders by structural characteristics) and an order-wise denoising module (performs targeted denoising on each order). Additionally, the information aggregation mechanism of traditional GNN-based CF models is modified to avoid cross-order signal interference until the final pooling operation. Extensive experiments on three public real-world datasets show that DRCSD has superior robustness against unstable interactions and achieves statistically significant performance improvements in recommendation accuracy metrics compared to state-of-the-art baseline models.

ASCoT: An Adaptive Self-Correction Chain-of-Thought Method for Late-Stage Fragility in LLMs

Aug 07, 2025

Abstract:Chain-of-Thought (CoT) prompting has significantly advanced the reasoning capabilities of Large Language Models (LLMs), yet the reliability of these reasoning chains remains a critical challenge. A widely held "cascading failure" hypothesis suggests that errors are most detrimental when they occur early in the reasoning process. This paper challenges that assumption through systematic error-injection experiments, revealing a counter-intuitive phenomenon we term "Late-Stage Fragility": errors introduced in the later stages of a CoT chain are significantly more likely to corrupt the final answer than identical errors made at the beginning. To address this specific vulnerability, we introduce the Adaptive Self-Correction Chain-of-Thought (ASCoT) method. ASCoT employs a modular pipeline in which an Adaptive Verification Manager (AVM) operates first, followed by the Multi-Perspective Self-Correction Engine (MSCE). The AVM leverages a Positional Impact Score function I(k) that assigns different weights based on the position within the reasoning chains, addressing the Late-Stage Fragility issue by identifying and prioritizing high-risk, late-stage steps. Once these critical steps are identified, the MSCE applies robust, dual-path correction specifically to the failure parts. Extensive experiments on benchmarks such as GSM8K and MATH demonstrate that ASCoT achieves outstanding accuracy, outperforming strong baselines, including standard CoT. Our work underscores the importance of diagnosing specific failure modes in LLM reasoning and advocates for a shift from uniform verification strategies to adaptive, vulnerability-aware correction mechanisms.

XRoboToolkit: A Cross-Platform Framework for Robot Teleoperation

Jul 31, 2025

Abstract:The rapid advancement of Vision-Language-Action models has created an urgent need for large-scale, high-quality robot demonstration datasets. Although teleoperation is the predominant method for data collection, current approaches suffer from limited scalability, complex setup procedures, and suboptimal data quality. This paper presents XRoboToolkit, a cross-platform framework for extended reality based robot teleoperation built on the OpenXR standard. The system features low-latency stereoscopic visual feedback, optimization-based inverse kinematics, and support for diverse tracking modalities including head, controller, hand, and auxiliary motion trackers. XRoboToolkit's modular architecture enables seamless integration across robotic platforms and simulation environments, spanning precision manipulators, mobile robots, and dexterous hands. We demonstrate the framework's effectiveness through precision manipulation tasks and validate data quality by training VLA models that exhibit robust autonomous performance.

Enhancing Efficiency and Propulsion in Bio-mimetic Robotic Fish through End-to-End Deep Reinforcement Learning

Jun 05, 2025

Abstract:Aquatic organisms are known for their ability to generate efficient propulsion with low energy expenditure. While existing research has sought to leverage bio-inspired structures to reduce energy costs in underwater robotics, the crucial role of control policies in enhancing efficiency has often been overlooked. In this study, we optimize the motion of a bio-mimetic robotic fish using deep reinforcement learning (DRL) to maximize propulsion efficiency and minimize energy consumption. Our novel DRL approach incorporates extended pressure perception, a transformer model processing sequences of observations, and a policy transfer scheme. Notably, the significantly improved training stability and speed of our approach allow for end-to-end training of the robotic fish. This enables more agile responses to hydrodynamic environments and offers greater optimization potential than pre-defined motion pattern controls. Our experiments are conducted on a serially connected rigid robotic fish in a free stream with a Reynolds number of 6000 using computational fluid dynamics (CFD) simulations. The DRL-trained policies yield impressive results, demonstrating both high efficiency and propulsion. The policies also showcase the agent's embodiment, skillfully utilizing its body structure and engaging with surrounding fluid dynamics, as revealed through flow analysis. This study provides valuable insights into the optimization of bio-mimetic underwater robots through DRL training, capitalizing on their structural advantages, and ultimately contributing to more efficient underwater propulsion systems.

DASH: Input-Aware Dynamic Layer Skipping for Efficient LLM Inference with Markov Decision Policies

May 23, 2025

Abstract:Large language models (LLMs) have achieved remarkable performance across a wide range of NLP tasks. However, their substantial inference cost poses a major barrier to real-world deployment, especially in latency-sensitive scenarios. To address this challenge, we propose DASH, an adaptive layer-skipping framework that dynamically selects computation paths conditioned on input characteristics. We model the skipping process as a Markov Decision Process (MDP), enabling fine-grained token-level decisions based on intermediate representations. To mitigate potential performance degradation caused by skipping, we introduce a lightweight compensation mechanism that injects differential rewards into the decision process. Furthermore, we design an asynchronous execution strategy that overlaps layer computation with policy evaluation to minimize runtime overhead. Experiments on multiple LLM architectures and NLP benchmarks show that our method achieves significant inference acceleration while maintaining competitive task performance, outperforming existing methods.
