Chenliang Li

Automatic Expert Selection for Multi-Scenario and Multi-Task Search

Jun 06, 2022

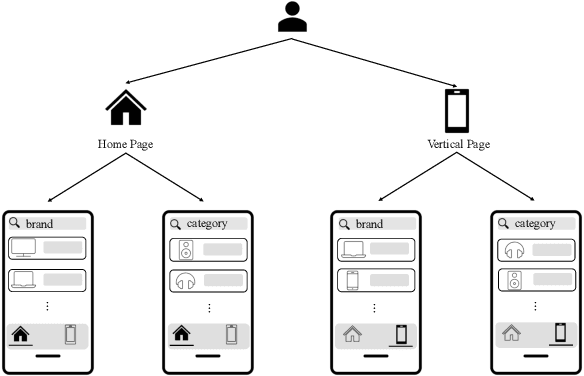

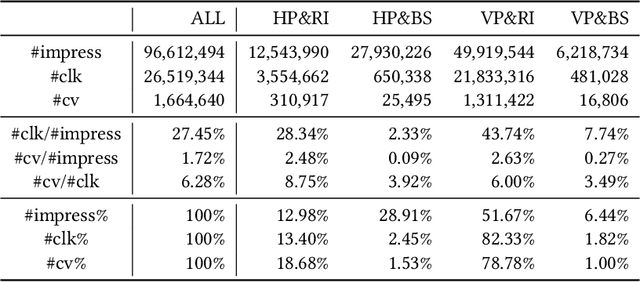

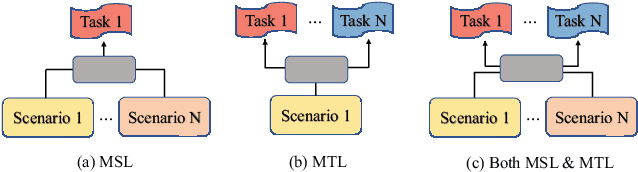

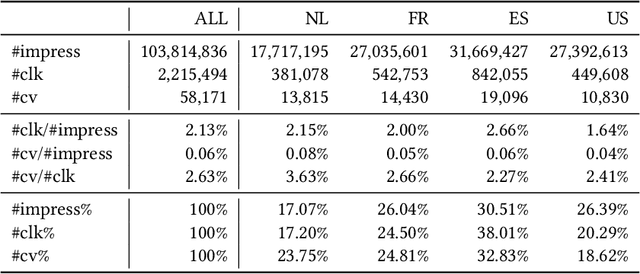

Abstract: Multi-scenario learning (MSL) enables a service provider to cater for users' fine-grained demands by separating services for different user sectors, e.g., by user's geographical region. Under each scenario there is a need to optimize multiple task-specific targets, e.g., click-through rate and conversion rate, known as multi-task learning (MTL). Recent solutions for MSL and MTL are mostly based on the multi-gate mixture-of-experts (MMoE) architecture. The MMoE structure is typically static and its design requires domain-specific knowledge, making it less effective in handling both MSL and MTL. In this paper, we propose a novel Automatic Expert Selection framework for Multi-scenario and Multi-task search, named AESM^{2}. AESM^{2} integrates both MSL and MTL into a unified framework with automatic structure learning. Specifically, AESM^{2} stacks multi-task layers over multi-scenario layers. This hierarchical design enables us to flexibly establish intrinsic connections between different scenarios, and at the same time also supports high-level feature extraction for different tasks. At each multi-scenario/multi-task layer, a novel expert selection algorithm is proposed to automatically identify scenario-/task-specific and shared experts for each input. Experiments over two real-world large-scale datasets demonstrate the effectiveness of AESM^{2} over a battery of strong baselines. An online A/B test also shows substantial performance gains on multiple metrics. Currently, AESM^{2} has been deployed online to serve major traffic.
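For readers unfamiliar with the MMoE architecture mentioned in the abstract above, the following is a minimal sketch of generic multi-gate mixing: each task has its own gate that softmax-weights a shared pool of expert outputs. It is not the AESM^{2} expert selection algorithm, whose details the abstract does not give. The function names, the task names `ctr` and `cvr`, and the toy numbers are all assumptions made for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mmoe_combine(expert_outputs, gate_logits):
    """Mix expert output vectors using softmax gate weights for one task.

    expert_outputs: list of equal-length vectors, one per expert.
    gate_logits: one raw gate score per expert (hypothetical values here;
    in a trained model they would come from a learned gate network).
    """
    weights = softmax(gate_logits)
    dim = len(expert_outputs[0])
    return [
        sum(w * out[i] for w, out in zip(weights, expert_outputs))
        for i in range(dim)
    ]


# Toy setup: three shared experts, two tasks with different gates.
expert_outputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
gate_logits_per_task = {
    "ctr": [2.0, 0.0, 0.0],  # this gate leans on expert 0
    "cvr": [0.0, 0.0, 2.0],  # this gate leans on expert 2
}
task_inputs = {
    task: mmoe_combine(expert_outputs, logits)
    for task, logits in gate_logits_per_task.items()
}
```

Each entry of `task_inputs` would then feed a task-specific tower. In this sketch the set of experts is fixed and every gate sees all of them, which is the static design the abstract contrasts with automatic selection.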

mPLUG: Effective and Efficient Vision-Language Learning by Cross-modal Skip-connections

May 25, 2022

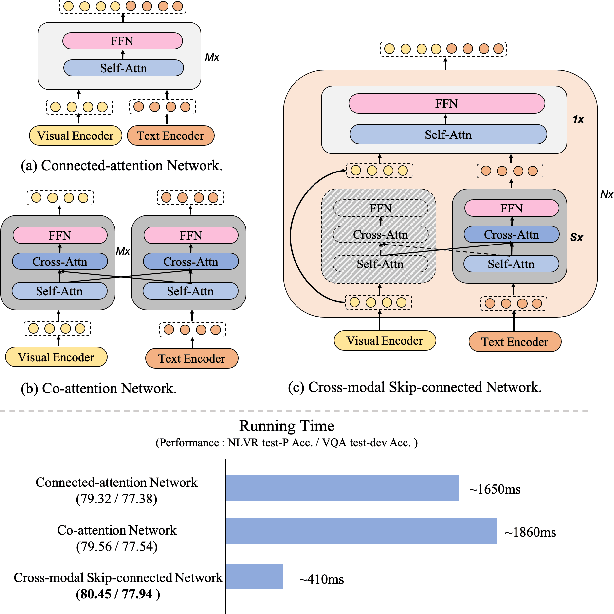

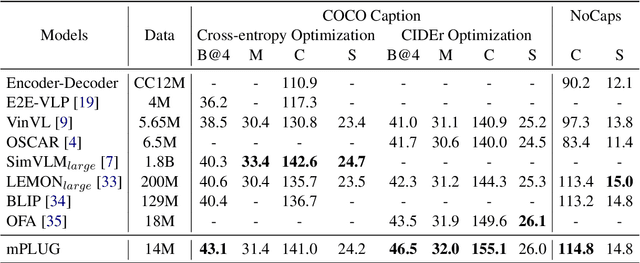

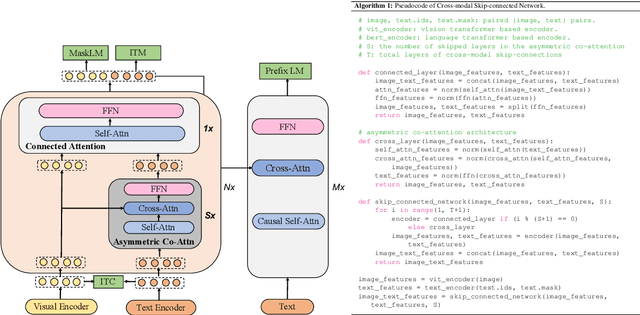

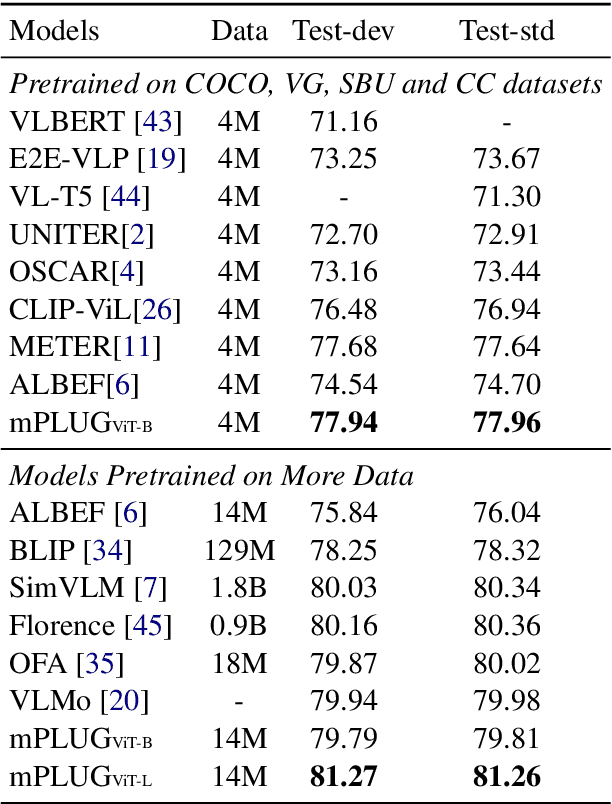

Abstract: Large-scale pretrained foundation models have become an emerging paradigm for building artificial intelligence (AI) systems, which can be quickly adapted to a wide range of downstream tasks. This paper presents mPLUG, a new vision-language foundation model for both cross-modal understanding and generation. Most existing pre-trained models suffer from low computational efficiency and information asymmetry brought by the long visual sequence in cross-modal alignment. To address these problems, mPLUG introduces an effective and efficient vision-language architecture with novel cross-modal skip-connections, which create inter-layer shortcuts that skip a certain number of layers for time-consuming full self-attention on the vision side. mPLUG is pre-trained end-to-end on large-scale image-text pairs with both discriminative and generative objectives. It achieves state-of-the-art results on a wide range of vision-language downstream tasks, such as image captioning, image-text retrieval, visual grounding and visual question answering. mPLUG also demonstrates strong zero-shot transferability when directly transferred to multiple video-language tasks.

Price DOES Matter! Modeling Price and Interest Preferences in Session-based Recommendation

May 09, 2022

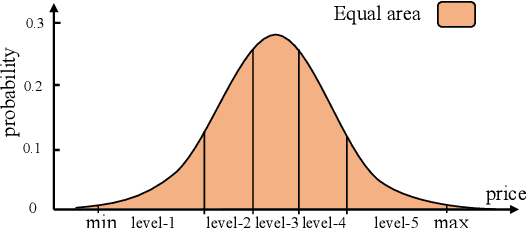

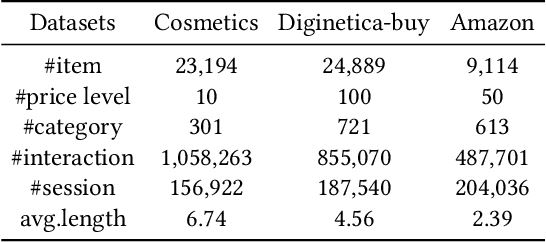

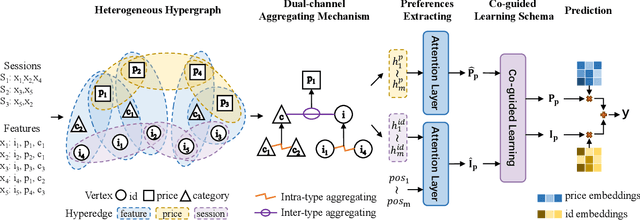

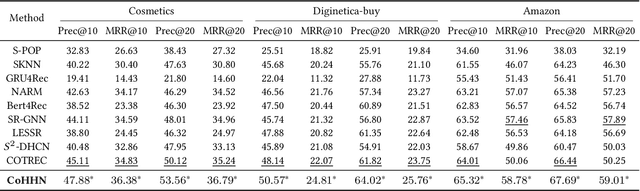

Abstract: Session-based recommendation aims to predict items that an anonymous user would like to purchase based on her short behavior sequence. Current approaches to session-based recommendation focus only on modeling users' interest preferences and ignore a key attribute of an item, i.e., the price. Many marketing studies have shown that the price factor significantly influences users' behaviors and that users' purchase decisions are determined by both price and interest preferences simultaneously. However, it is nontrivial to incorporate price preferences into session-based recommendation. Firstly, it is hard to handle heterogeneous information from various features of items to capture users' price preferences. Secondly, it is difficult to model the complex relations between price and interest preferences in determining user choices. To address the above challenges, we propose a novel method, Co-guided Heterogeneous Hypergraph Network (CoHHN), for session-based recommendation. For the first challenge, we devise a heterogeneous hypergraph to represent heterogeneous information and the rich relations among them. A dual-channel aggregating mechanism is then designed to aggregate various information in the heterogeneous hypergraph. After that, we extract users' price preferences and interest preferences via attention layers. For the second challenge, a co-guided learning scheme is designed to model the relations between price and interest preferences and enhance the learning of each other. Finally, we predict user actions based on item features and users' price and interest preferences. Extensive experiments on three real-world datasets demonstrate the effectiveness of the proposed CoHHN. Further analysis reveals the significance of price for session-based recommendation.

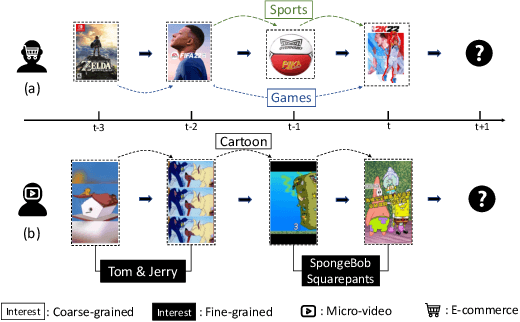

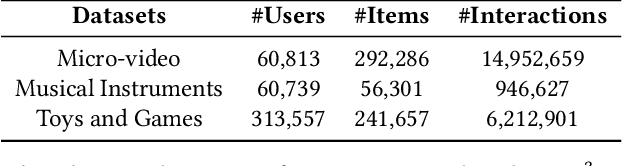

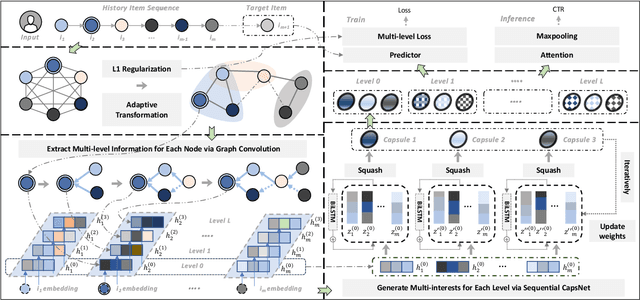

When Multi-Level Meets Multi-Interest: A Multi-Grained Neural Model for Sequential Recommendation

May 03, 2022

Abstract: Sequential recommendation aims at identifying the next item that is preferred by a user based on their behavioral history. Compared to conventional sequential models that leverage attention mechanisms and RNNs, recent efforts mainly follow two directions for improvement: multi-interest learning and graph convolutional aggregation. Specifically, multi-interest methods such as ComiRec and MIMN focus on extracting different interests for a user by performing historical item clustering, while graph convolution methods including TGSRec and SURGE elect to refine user preferences based on multi-level correlations between historical items. Unfortunately, neither of them realizes that these two types of solutions can complement each other, by aggregating multi-level user preferences to achieve more precise multi-interest extraction for better recommendation. To this end, we propose a unified multi-grained neural model (named MGNM) that combines multi-interest learning and graph convolutional aggregation. Concretely, MGNM first learns the graph structure and information aggregation paths of the historical items for a user. It then performs graph convolution to derive item representations in an iterative fashion, in which the complex preferences at different levels can be well captured. Afterwards, a novel sequential capsule network is proposed to inject the sequential patterns into the multi-interest extraction process, leading to more precise interest learning in a multi-grained manner.

Knowledge Graph Contrastive Learning for Recommendation

May 02, 2022

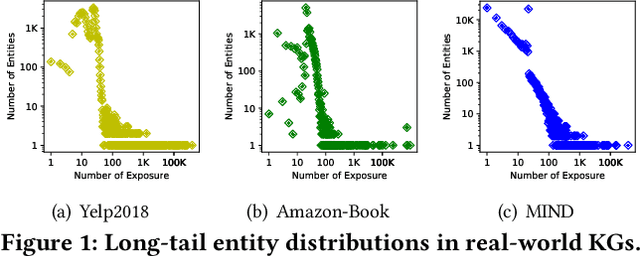

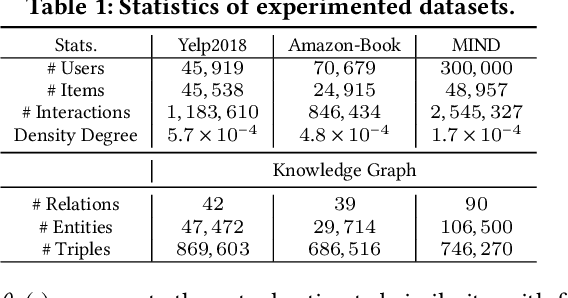

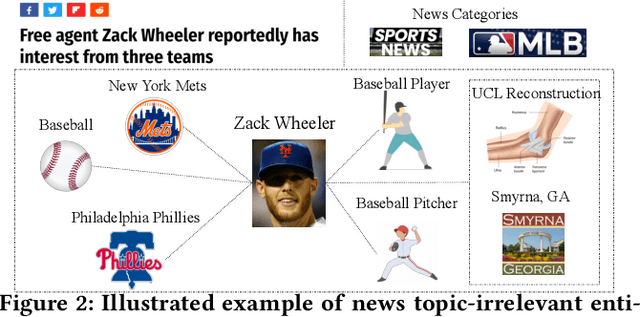

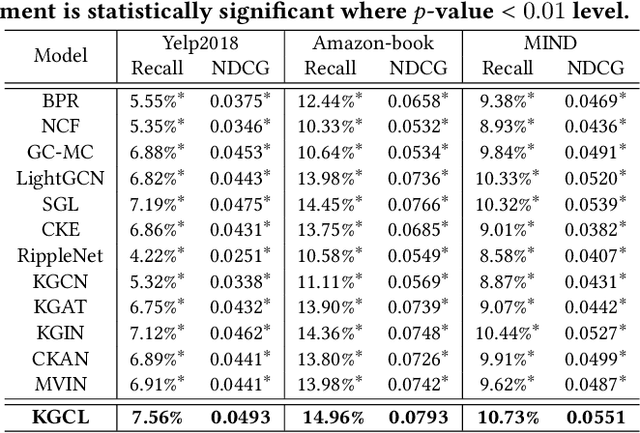

Abstract: Knowledge Graphs (KGs) have been utilized as useful side information to improve recommendation quality. In those recommender systems, knowledge graph information often contains fruitful facts and inherent semantic relatedness among items. However, the success of such methods relies on high-quality knowledge graphs, and they may not learn quality representations because of two challenges: i) the long-tail distribution of entities results in sparse supervision signals for KG-enhanced item representation; ii) real-world knowledge graphs are often noisy and contain topic-irrelevant connections between items and entities. Such KG sparsity and noise make the item-entity dependent relations deviate from reflecting their true characteristics, which significantly amplifies the noise effect and hinders the accurate representation of users' preferences. To fill this research gap, we design a general Knowledge Graph Contrastive Learning framework (KGCL) that alleviates the information noise for knowledge graph-enhanced recommender systems. Specifically, we propose a knowledge graph augmentation schema to suppress KG noise in information aggregation, and derive more robust knowledge-aware representations for items. In addition, we exploit additional supervision signals from the KG augmentation process to guide a cross-view contrastive learning paradigm, giving a greater role to unbiased user-item interactions in gradient descent and further suppressing the noise. Extensive experiments on three public datasets demonstrate the consistent superiority of our KGCL over state-of-the-art techniques. KGCL also achieves strong performance in recommendation scenarios with sparse user-item interactions and with long-tail and noisy KG entities. Our implementation code is available at https://github.com/yuh-yang/KGCL-SIGIR22
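As a rough illustration of what a cross-view contrastive objective can look like, the sketch below computes a generic InfoNCE-style loss between two views of the same items. It is an assumption-laden toy, not the KGCL loss: the abstract does not specify the objective, and the function names, the cosine similarity choice, and the temperature value are all hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(view_a, view_b, temperature=0.2):
    """Mean InfoNCE loss where row i of view_a should match row i of view_b.

    All other rows of view_b act as negatives for row i.
    The temperature default is an arbitrary illustrative value.
    """
    losses = []
    for i, anchor in enumerate(view_a):
        logits = [cosine(anchor, other) / temperature for other in view_b]
        m = max(logits)
        # log-sum-exp, shifted by the max logit for numerical stability
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        losses.append(log_denominator - logits[i])
    return sum(losses) / len(losses)


# Toy embeddings: two items seen under two augmented views.
view_a = [[1.0, 0.0], [0.0, 1.0]]
aligned_loss = info_nce(view_a, [[1.0, 0.0], [0.0, 1.0]])
shuffled_loss = info_nce(view_a, [[0.0, 1.0], [1.0, 0.0]])
```

The loss is small when each item's two views agree and large when they are mismatched, which is the signal a contrastive objective uses to pull matching views together.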

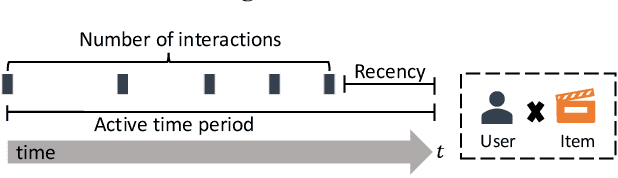

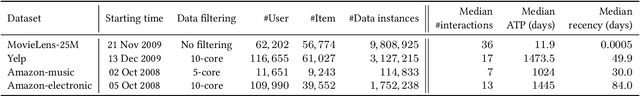

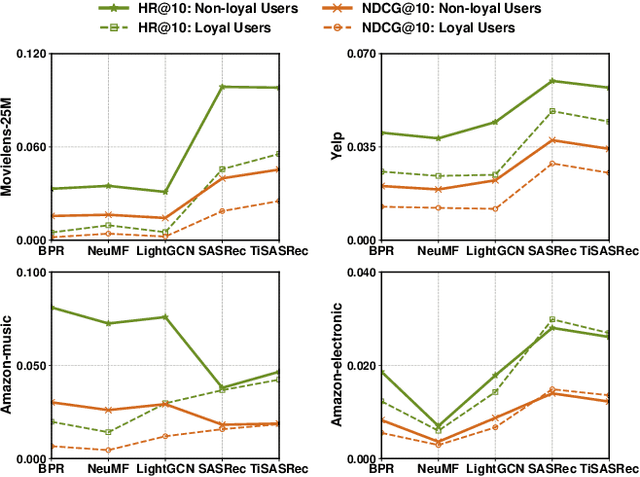

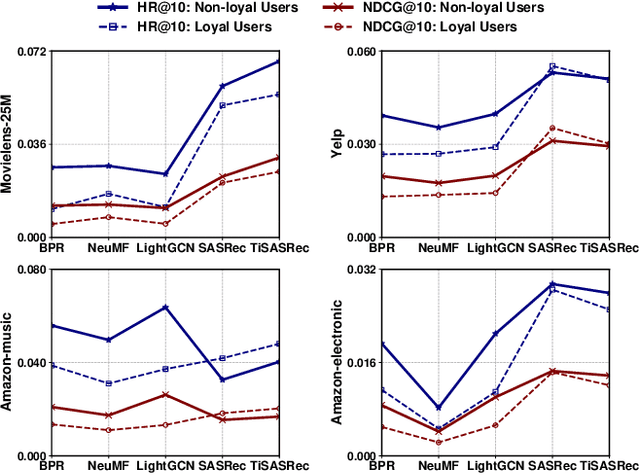

Recommender May Not Favor Loyal Users

Apr 12, 2022

Abstract: In academic research, recommender systems are often evaluated on benchmark datasets, without much consideration of the global timeline. Hence, we are unable to answer questions like: Do loyal users enjoy better recommendations than non-loyal users? Loyalty can be defined by the time period a user has been active in a recommender system, or by the number of historical interactions a user has. In this paper, we offer a comprehensive analysis of recommendation results along the global timeline. We conduct experiments with five widely used models, i.e., BPR, NeuMF, LightGCN, SASRec and TiSASRec, on four benchmark datasets, i.e., MovieLens-25M, Yelp, Amazon-music, and Amazon-electronic. Our experimental results answer "No" to the above question. Users with many historical interactions suffer from relatively poorer recommendations. Users who stay with the system for a short time period enjoy better recommendations. Both findings are counter-intuitive. Interestingly, users who have recently interacted with the system, with respect to the time point of the test instance, enjoy better recommendations. The finding on recency applies to all users, regardless of users' loyalty. Our study offers a different perspective for understanding recommender performance, and our findings could trigger a revisit of recommender model design.
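One of the two loyalty definitions in the abstract above, the number of historical interactions, lends itself to a simple grouped comparison. The sketch below buckets users by interaction count and averages a per-user hit indicator within each bucket. The thresholds, group names, and toy records are invented for illustration and are not the paper's setup or results.

```python
from collections import defaultdict


def loyalty_group(num_interactions, thresholds=(10, 100)):
    """Assign a user to a group by interaction count (thresholds are hypothetical)."""
    low, high = thresholds
    if num_interactions < low:
        return "light"
    if num_interactions < high:
        return "medium"
    return "heavy"


def mean_hit_rate_by_group(records, thresholds=(10, 100)):
    """Average a 0/1 hit indicator per loyalty group.

    records: iterable of (num_interactions, hit) pairs, one per test user.
    """
    hits = defaultdict(list)
    for num_interactions, hit in records:
        hits[loyalty_group(num_interactions, thresholds)].append(hit)
    return {group: sum(values) / len(values) for group, values in hits.items()}


# Toy data only; these numbers do not come from any dataset.
toy_records = [(3, 1), (5, 0), (40, 1), (60, 1), (250, 0), (300, 1)]
rates = mean_hit_rate_by_group(toy_records)
```

The same grouping could be keyed on active time span instead of interaction count to cover the other loyalty definition.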

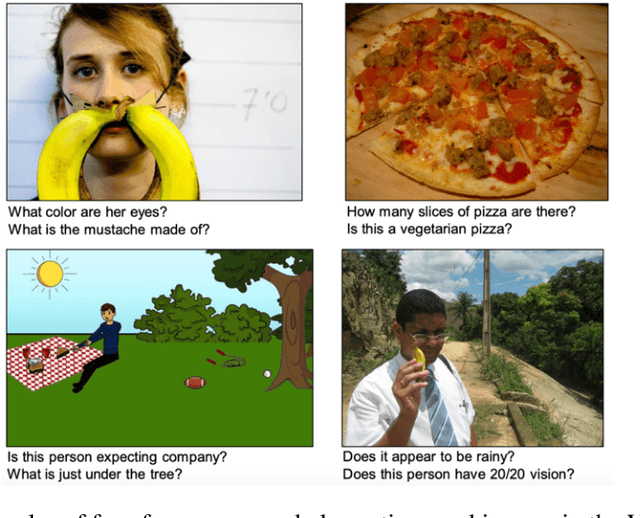

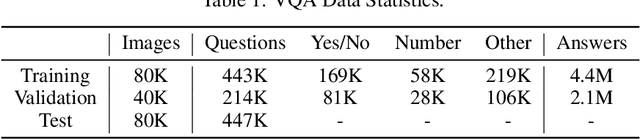

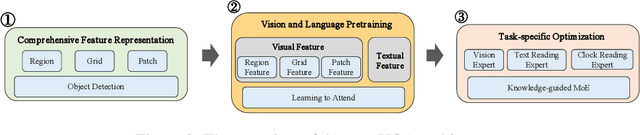

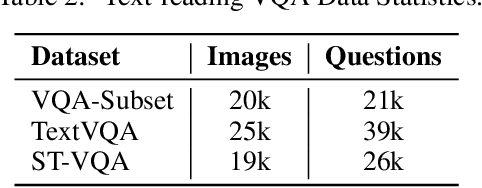

Achieving Human Parity on Visual Question Answering

Nov 19, 2021

Abstract: The Visual Question Answering (VQA) task utilizes both visual image and language analysis to answer a textual question with respect to an image. It has been a popular research topic with an increasing number of real-world applications in the last decade. This paper describes our recent research on AliceMind-MMU (ALIbaba's Collection of Encoder-decoders from Machine IntelligeNce lab of Damo academy - MultiMedia Understanding), which obtains similar or even slightly better results than humans do on VQA. This is achieved by systematically improving the VQA pipeline, including: (1) pre-training with comprehensive visual and textual feature representation; (2) effective cross-modal interaction with learning to attend; and (3) a novel knowledge mining framework with specialized expert modules for the complex VQA task. Treating different types of visual questions with the corresponding expertise plays an important role in boosting the performance of our VQA architecture up to the human level. An extensive set of experiments and analyses is conducted to demonstrate the effectiveness of the new research work.

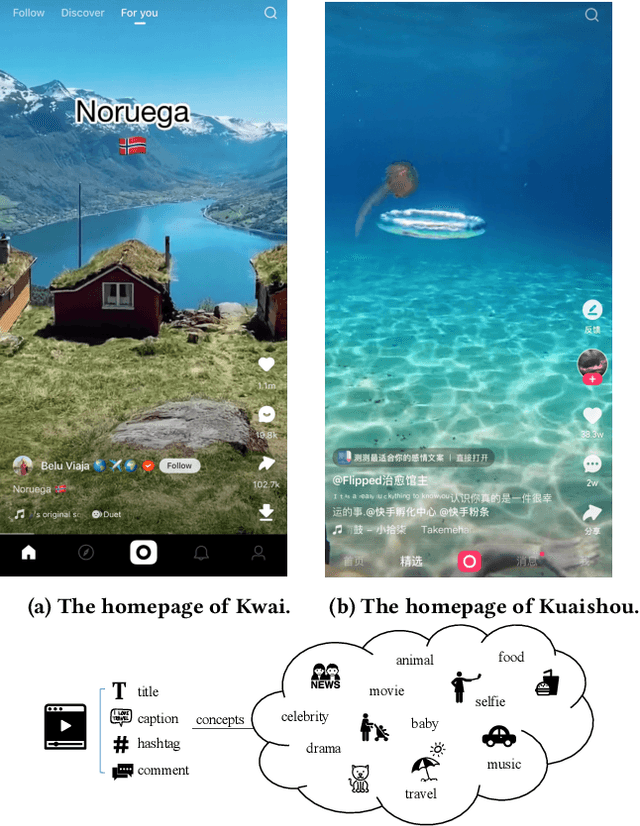

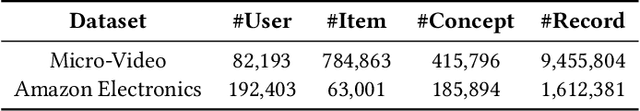

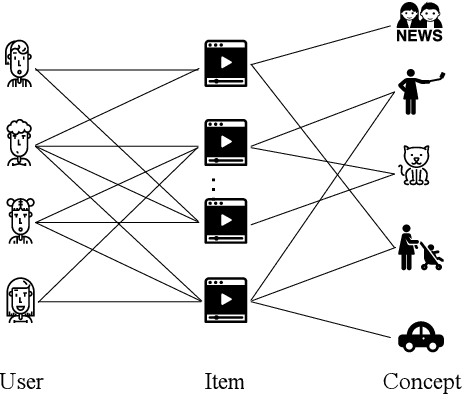

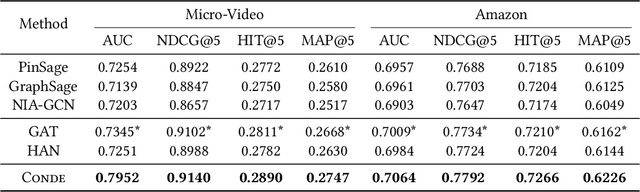

Concept-Aware Denoising Graph Neural Network for Micro-Video Recommendation

Sep 28, 2021

Abstract: Recently, micro-video sharing platforms such as Kuaishou and TikTok have become a major source of information in people's lives. Given the large traffic volume, short video lifespan and streaming fashion of these services, it has become more and more pressing to improve the existing recommender systems to accommodate these challenges in a cost-effective way. In this paper, we propose a novel concept-aware denoising graph neural network (named CONDE) for micro-video recommendation. CONDE consists of a three-phase graph convolution process to derive user and micro-video representations: warm-up propagation, graph denoising and preference refinement. A heterogeneous tripartite graph is constructed by connecting user nodes with video nodes, and video nodes with associated concept nodes extracted from captions and comments of the videos. To address the noisy information in the graph, we introduce a user-oriented graph denoising phase to extract a subgraph that better reflects the user's preference. Although this paper focuses mainly on micro-video recommendation, we also show that our method can be generalized to other types of tasks. To that end, we also conduct empirical studies on a well-known public E-commerce dataset. The experimental results suggest that the proposed CONDE achieves significantly better recommendation performance than the existing state-of-the-art solutions.

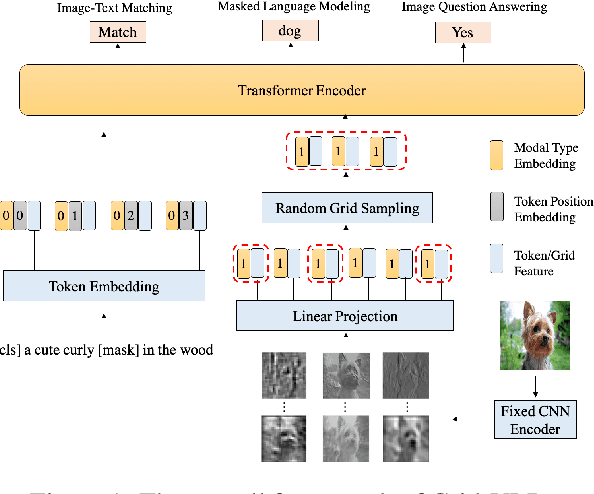

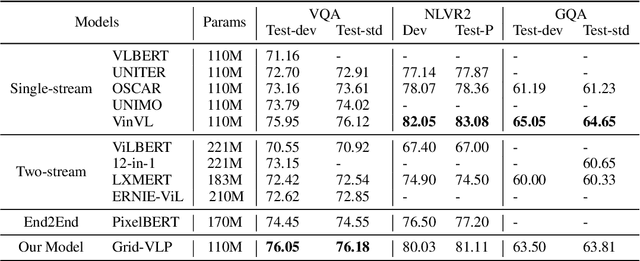

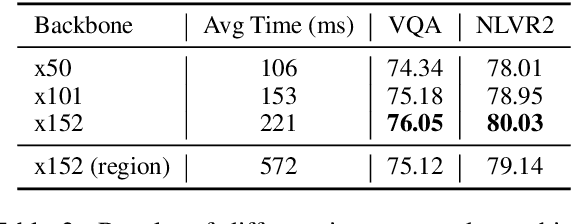

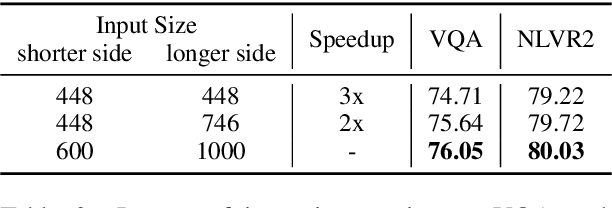

Grid-VLP: Revisiting Grid Features for Vision-Language Pre-training

Aug 21, 2021

Abstract: Existing approaches to vision-language pre-training (VLP) heavily rely on an object detector based on bounding boxes (regions), where salient objects are first detected from images and then a Transformer-based model is used for cross-modal fusion. Despite their superior performance, these approaches are bounded by the capability of the object detector in terms of both effectiveness and efficiency. Besides, the presence of object detection imposes unnecessary constraints on model designs and makes it difficult to support end-to-end training. In this paper, we revisit grid-based convolutional features for vision-language pre-training, skipping the expensive region-related steps. We propose a simple yet effective grid-based VLP method that works surprisingly well with the grid features. By pre-training only with in-domain datasets, the proposed Grid-VLP method can outperform most competitive region-based VLP methods on three examined vision-language understanding tasks. We hope that our findings help to further advance the state of the art of vision-language pre-training, and provide a new direction towards effective and efficient VLP.

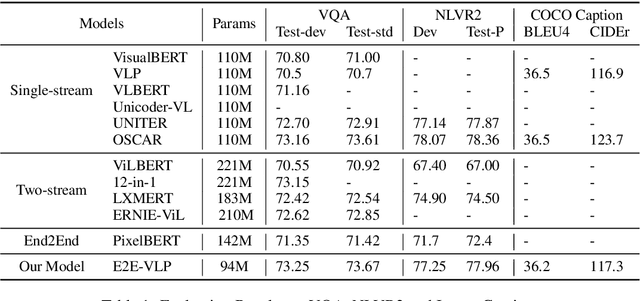

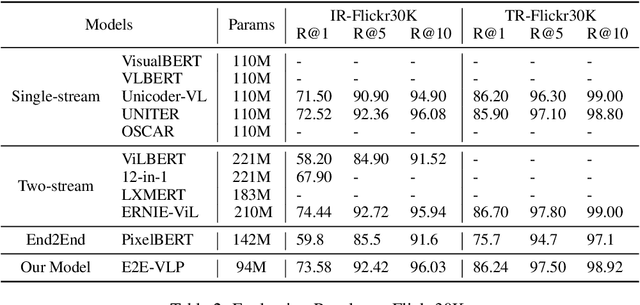

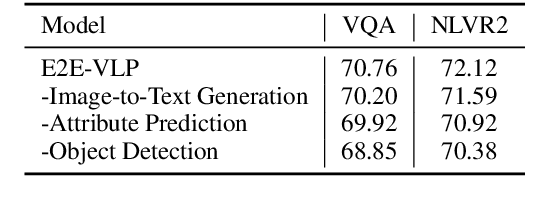

E2E-VLP: End-to-End Vision-Language Pre-training Enhanced by Visual Learning

Jun 04, 2021

Abstract: Vision-language pre-training (VLP) on large-scale image-text pairs has achieved huge success on cross-modal downstream tasks. Most existing pre-training methods adopt a two-step training procedure, which first employs a pre-trained object detector to extract region-based visual features, then concatenates the image representation and text embedding as the input of a Transformer to train. However, these methods face two problems: they use the task-specific visual representation of a specific object detector for generic cross-modal understanding, and the two-stage pipeline is computationally inefficient. In this paper, we propose the first end-to-end vision-language pre-trained model for both V+L understanding and generation, namely E2E-VLP, where we build a unified Transformer framework to jointly learn visual representation and semantic alignments between image and text. We incorporate the tasks of object detection and image captioning into pre-training with a unified Transformer encoder-decoder architecture for enhancing visual learning. An extensive set of experiments has been conducted on well-established vision-language downstream tasks to demonstrate the effectiveness of this novel VLP paradigm.
