Ali-akbar Agha-mohammadi

Jet Propulsion Lab., California Institute of Technology and

LAMP: Large-Scale Autonomous Mapping and Positioning for Exploration of Perceptually-Degraded Subterranean Environments

Mar 05, 2020

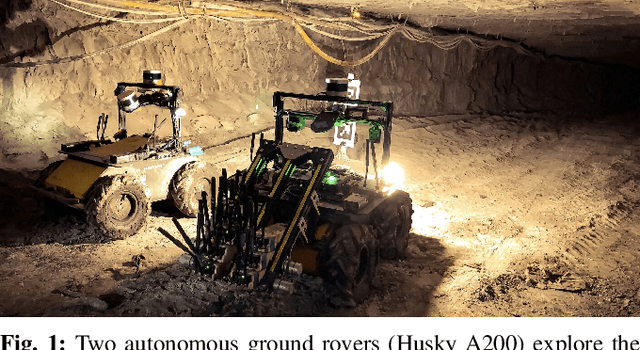

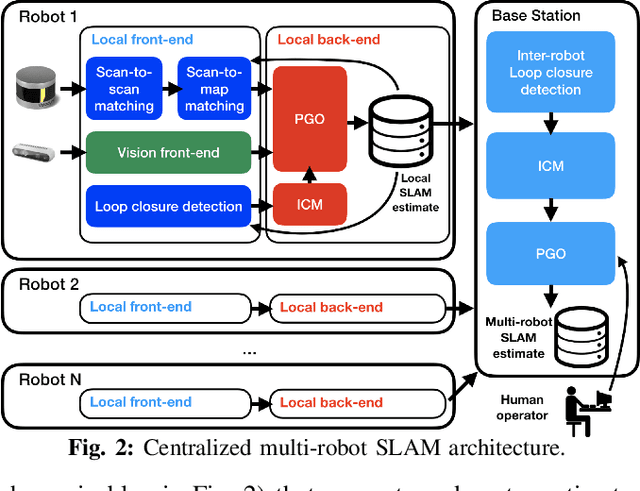

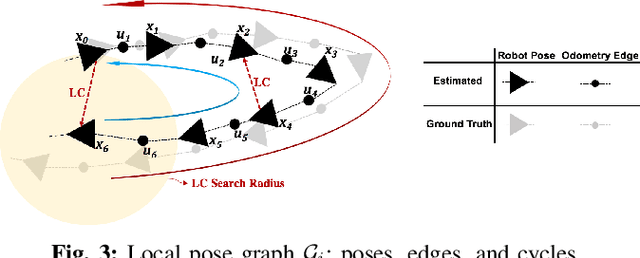

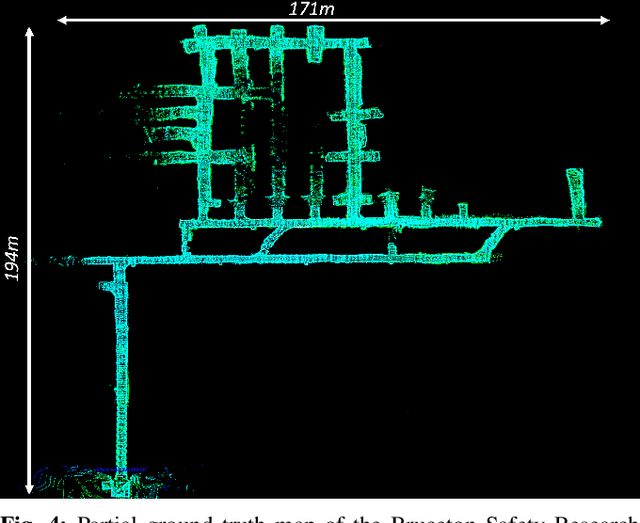

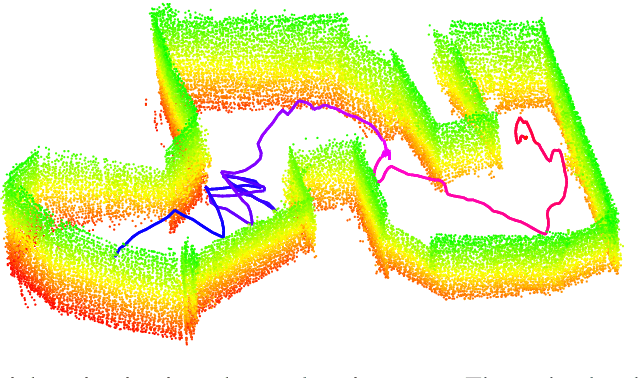

Abstract: Simultaneous Localization and Mapping (SLAM) in large-scale, unknown, and complex subterranean environments is a challenging problem. Sensors must operate in off-nominal conditions; uneven and slippery terrains make wheel odometry inaccurate, while long corridors without salient features make exteroceptive sensing ambiguous and prone to drift; finally, spurious loop closures that are frequent in environments with repetitive appearance, such as tunnels and mines, could result in a significant distortion of the entire map. These challenges are in stark contrast with the need to build highly-accurate 3D maps to support a wide variety of applications, ranging from disaster response to the exploration of underground extraterrestrial worlds. This paper reports on the implementation and testing of a lidar-based multi-robot SLAM system developed in the context of the DARPA Subterranean Challenge. We present a system architecture to enhance subterranean operation, including an accurate lidar-based front-end, and a flexible and robust back-end that automatically rejects outlying loop closures. We present an extensive evaluation in large-scale, challenging subterranean environments, including the results obtained in the Tunnel Circuit of the DARPA Subterranean Challenge. Finally, we discuss potential improvements, limitations of the state of the art, and future research directions.

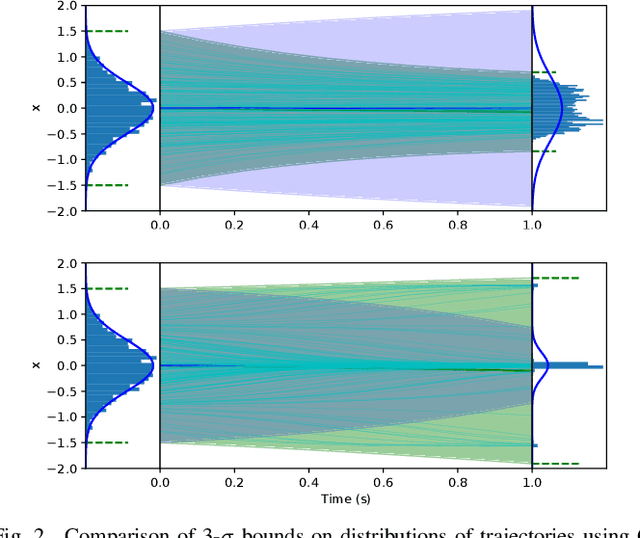

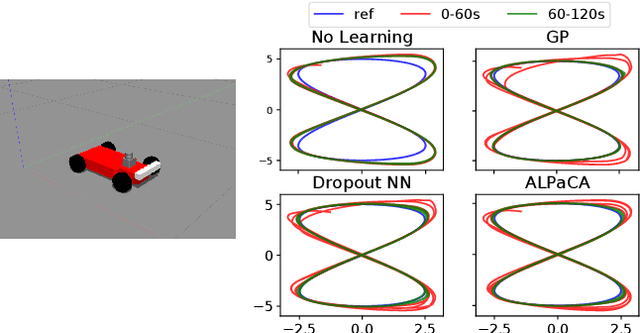

Deep Learning Tubes for Tube MPC

Feb 05, 2020

Abstract: Learning-based control aims to construct models of a system to use for planning or trajectory optimization, e.g. in model-based reinforcement learning. In order to obtain guarantees of safety in this context, uncertainty must be accurately quantified. This uncertainty may come from errors in learning (due to a lack of data, for example), or may be inherent to the system. Propagating uncertainty in learned dynamics models is a difficult problem. Common approaches rely on restrictive assumptions of how distributions are parameterized or propagated in time. In contrast, in this work we propose using deep learning to obtain expressive and flexible models of how these distributions behave, which we then use for nonlinear Model Predictive Control (MPC). First, we introduce a deep quantile regression framework for control which enforces probabilistic quantile bounds and quantifies epistemic uncertainty. Next, using our method we explore three different approaches for learning tubes which contain the possible trajectories of the system, and demonstrate how to use each of them in a Tube MPC scheme. Furthermore, we prove these schemes are recursively feasible and satisfy constraints with a desired margin of probability. Finally, we present experiments in simulation on a nonlinear quadrotor system, demonstrating the practical efficacy of these ideas.
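For readers unfamiliar with quantile regression, the following is a minimal sketch of the quantile (pinball) loss that such frameworks commonly minimize. It is not the paper's deep network or its tube construction: the function names, the brute-force search over a constant prediction, and the synthetic error samples are all assumptions made for illustration.

```python
def pinball_loss(y_true, y_pred, tau):
    """Quantile (pinball) loss for one sample at quantile level tau in (0, 1)."""
    diff = y_true - y_pred
    # Under-prediction is weighted by tau, over-prediction by (1 - tau).
    return max(tau * diff, (tau - 1.0) * diff)


def mean_pinball_loss(samples, q, tau):
    """Average pinball loss of a constant prediction q over all samples."""
    return sum(pinball_loss(y, q, tau) for y in samples) / len(samples)


def best_constant_quantile(samples, tau):
    """Pick the sample value that minimizes the mean pinball loss.

    A brute-force stand-in (assumption) for gradient-based training of a
    network; the minimizer approximates the empirical tau-quantile.
    """
    return min(samples, key=lambda q: mean_pinball_loss(samples, q, tau))


# Hypothetical tracking-error samples; an upper bound on the error at the
# 90% level could serve as a tube radius in a tube-based controller.
errors = [float(i) for i in range(1, 101)]
upper = best_constant_quantile(errors, 0.9)
```

Because the loss penalizes under-prediction more heavily when `tau` is close to 1, the minimizer settles near the empirical 90th percentile of the samples rather than their mean.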

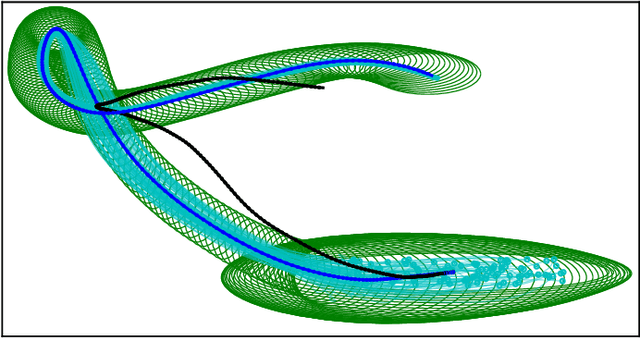

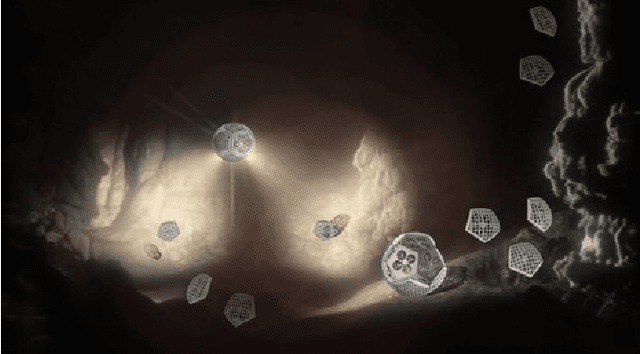

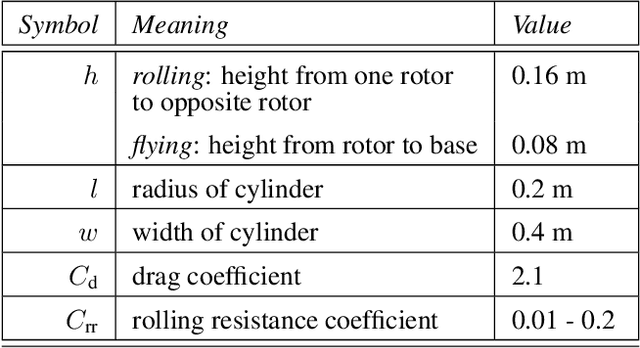

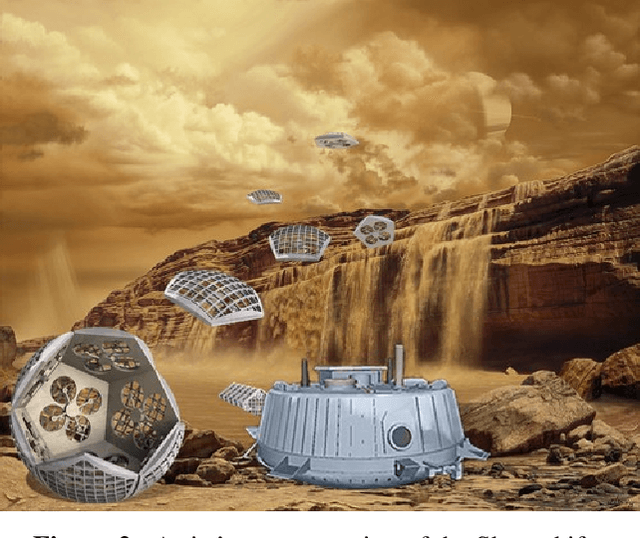

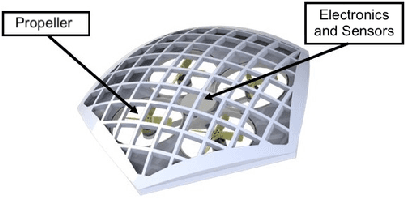

Shapeshifter: A Multi-Agent, Multi-Modal Robotic Platform for Exploration of Titan

Feb 03, 2020

Abstract: In this paper we present a mission architecture and a robotic platform, the Shapeshifter, that allow multi-domain and redundant mobility on Saturn's moon Titan, and potentially other bodies with atmospheres. The Shapeshifter is a collection of simple and affordable robotic units, called Cobots, comparable to personal palm-size quadcopters. By attaching to and detaching from each other, multiple Cobots can shape-shift into novel structures, capable of (a) rolling on the surface, to increase the traverse range, (b) flying in a flight array formation, and (c) swimming on or under liquid. A ground station complements the robotic platform, hosting science instrumentation and providing power to recharge the batteries of the Cobots. In the first part of this paper we experimentally show the flying, docking and rolling capabilities of a Shapeshifter constituted by two Cobots, presenting ad-hoc control algorithms. We additionally evaluate the energy-efficiency of the rolling-based mobility strategy by deriving an analytic model of the power consumption and by integrating it in a high-fidelity simulation environment. In the second part we tailor our mission architecture to the exploration of Titan. We show that the properties of the Shapeshifter allow the exploration of the possible cryovolcano Sotra Patera, Titan's Mare and canyons.

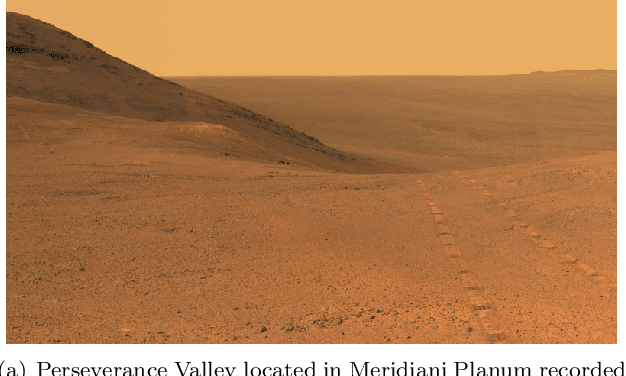

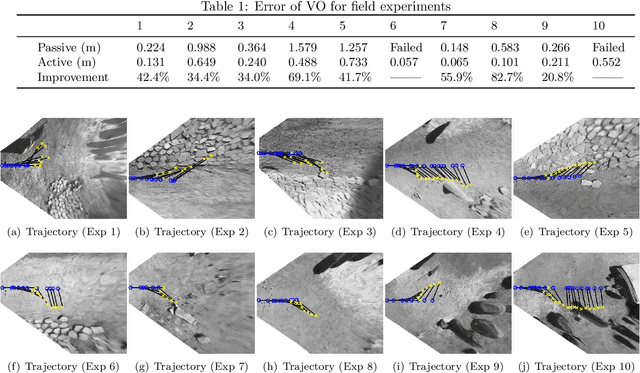

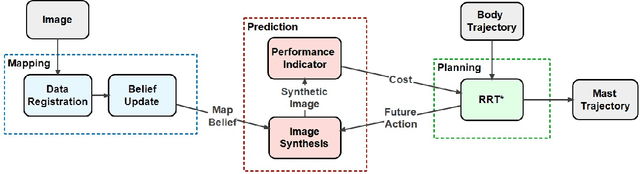

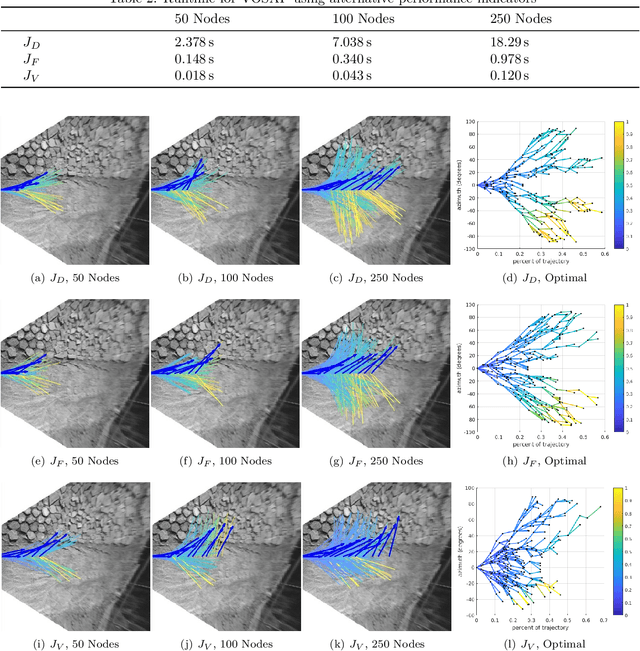

Perception-aware Autonomous Mast Motion Planning for Planetary Exploration Rovers

Dec 14, 2019

Abstract: Highly accurate real-time localization is of fundamental importance for the safety and efficiency of planetary rovers exploring the surface of Mars. Mars rover operations rely on vision-based systems to avoid hazards as well as plan safe routes. However, vision-based systems operate on the assumption that sufficient visual texture is visible in the scene. This poses a challenge for vision-based navigation on Mars where regions lacking visual texture are prevalent. To overcome this, we make use of the ability of the rover to actively steer the visual sensor to improve fault tolerance and maximize the perception performance. This paper answers the question of where and when to look by presenting a method for predicting the sensor trajectory that maximizes the localization performance of the rover. This is accomplished by an online assessment of possible trajectories using synthetic, future camera views created from previous observations of the scene. The proposed trajectories are quantified and chosen based on the expected localization performance. In this work, we validate the proposed method in field experiments at the Jet Propulsion Laboratory (JPL) Mars Yard. Furthermore, multiple performance metrics are identified and evaluated for reducing the overall runtime of the algorithm. We show how actively steering the perception system increases the localization accuracy compared to traditional fixed-sensor configurations.

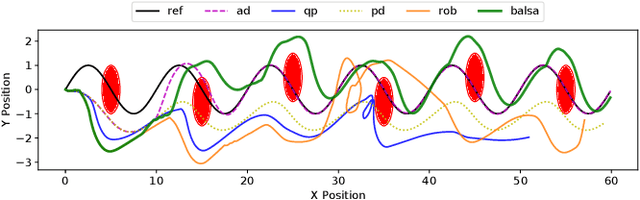

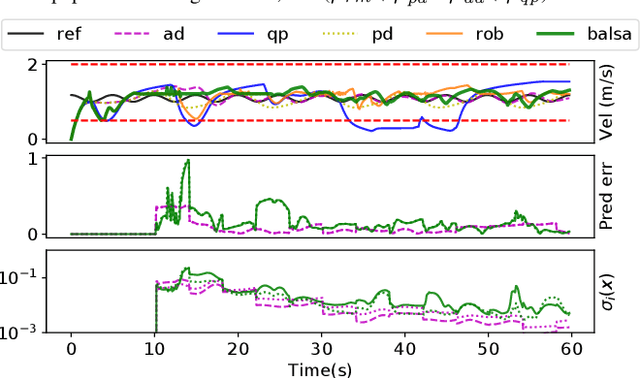

Bayesian Learning-Based Adaptive Control for Safety Critical Systems

Oct 05, 2019

Abstract: Deep learning has enjoyed much recent success, and applying state-of-the-art model learning methods to controls is an exciting prospect. However, there is a strong reluctance to use these methods on safety-critical systems, which have constraints on safety, stability, and real-time performance. We propose a framework which satisfies these constraints while allowing the use of deep neural networks for learning model uncertainties. Central to our method is the use of Bayesian model learning, which provides an avenue for maintaining appropriate degrees of caution in the face of the unknown. In the proposed approach, we develop an adaptive control framework leveraging the theory of stochastic CLFs (Control Lyapunov Functions) and stochastic CBFs (Control Barrier Functions) along with tractable Bayesian model learning via Gaussian Processes or Bayesian neural networks. Under reasonable assumptions, we guarantee stability and safety while adapting to unknown dynamics with probability 1. We demonstrate this architecture for high-speed terrestrial mobility targeting potential applications in safety-critical high-speed Mars rover missions.

Contact Inertial Odometry: Collisions are your Friend

Aug 30, 2019

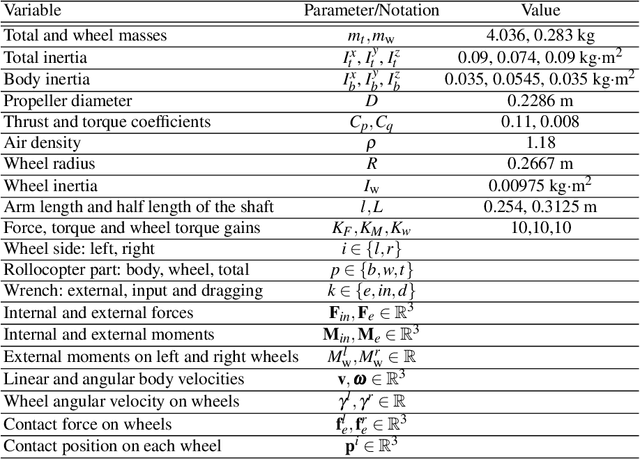

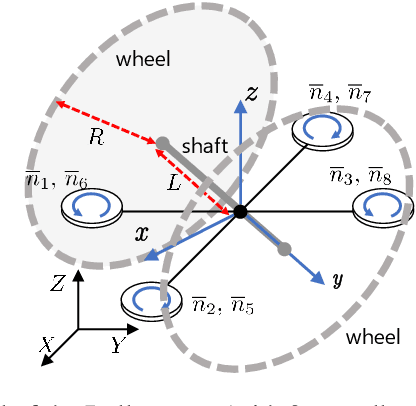

Abstract: Autonomous exploration of unknown environments with aerial vehicles remains a challenging problem, especially in perceptually degraded conditions. Dust, smoke, fog, and a lack of visual or LiDAR-based features result in severe difficulties for state estimation and planning. The absence of measurement updates from visual or LiDAR odometry can cause large drifts in velocity estimates while propagating measurements from an IMU. Furthermore, it is not possible to construct a map for collision checking in the absence of pose updates. In this work, we show that it is indeed possible to navigate without any exteroceptive sensing by exploiting collisions instead of treating them as constraints. To this end, we first perform modeling and system identification for a hybrid ground and aerial vehicle which can withstand collisions. Next, we develop a novel external wrench estimation algorithm for this class of vehicles. We then present a novel contact-based inertial odometry (CIO) algorithm: it uses estimated external forces to detect collisions and to generate pseudo-measurements of the robot velocity, fused in an Extended Kalman Filter. Finally, we implement a reactive planner and control law which encourage exploration by bouncing off obstacles. We validate our framework in hardware experiments and show that a quadrotor can traverse a cluttered environment using an IMU only. This work can be used on drones to recover from visual inertial odometry failure or on micro-drones that do not have the payload capacity to carry cameras, LiDARs or powerful computers.
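The idea of turning a detected collision into a velocity pseudo-measurement can be illustrated with a scalar, linear Kalman update. This is only a sketch under strong assumptions: the abstract does not specify the pseudo-measurement model, the force threshold, or the filter state, so the zero-velocity measurement, the threshold value, and all names below are hypothetical, and a one-dimensional linear update stands in for the paper's Extended Kalman Filter.

```python
import math


def detect_collision(force_xyz, threshold):
    """Flag a collision when the estimated external force norm exceeds a threshold."""
    return math.sqrt(sum(f * f for f in force_xyz)) > threshold


def kalman_update(v_est, var_est, z, var_meas):
    """Scalar Kalman measurement update for a velocity estimate.

    v_est, var_est: prior velocity estimate and its variance.
    z, var_meas:    pseudo-measurement of velocity and its variance.
    """
    gain = var_est / (var_est + var_meas)
    v_new = v_est + gain * (z - v_est)
    var_new = (1.0 - gain) * var_est
    return v_new, var_new


# Hypothetical values: a drifting IMU-only estimate of the velocity along
# the contact normal, corrected when a collision is detected.
v, var = 2.0, 4.0
force = (0.0, 6.0, 0.0)  # estimated external force in newtons (assumed)
if detect_collision(force, threshold=5.0):
    # Assumed pseudo-measurement: velocity along the contact normal is zero.
    v, var = kalman_update(v, var, z=0.0, var_meas=1.0)
```

The update pulls the drifting estimate toward the pseudo-measurement and shrinks its variance, which is the mechanism that lets collisions bound velocity drift when no camera or LiDAR update is available.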

Bi-directional Value Learning for Risk-aware Planning Under Uncertainty: Extended Version

Apr 06, 2019

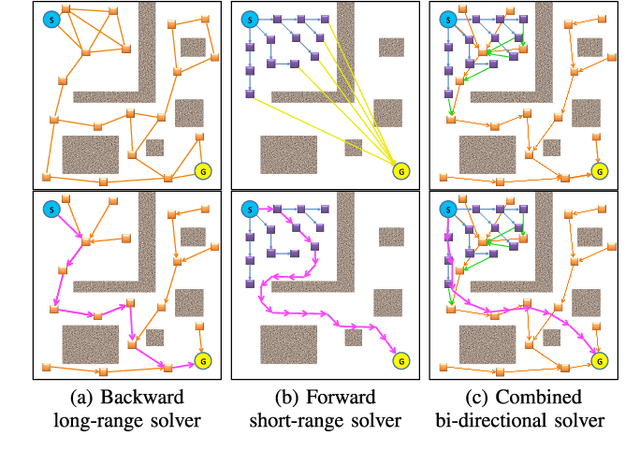

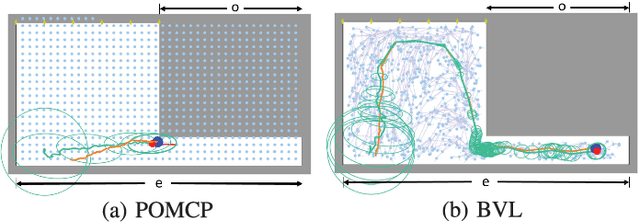

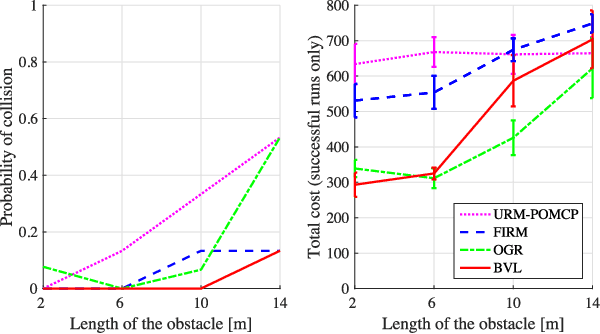

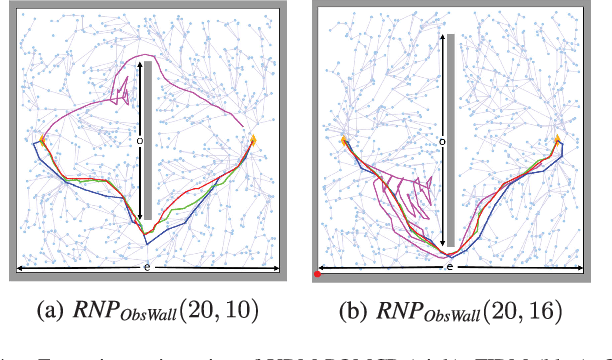

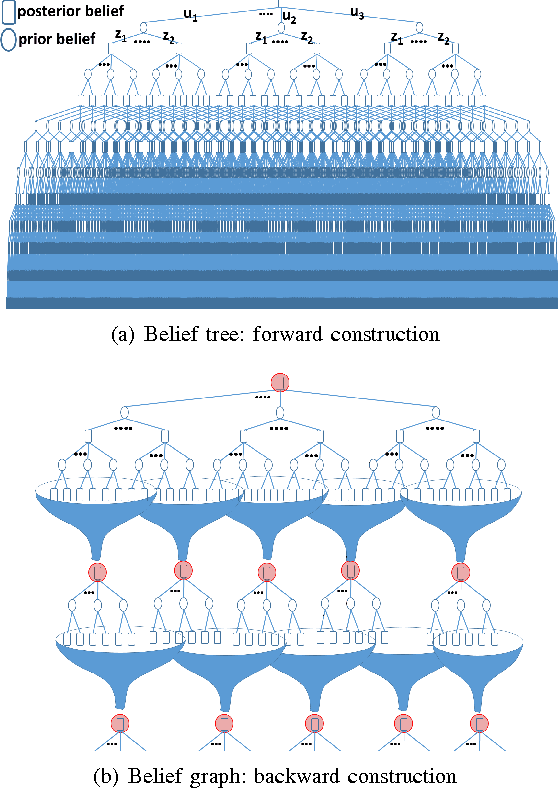

Abstract: Decision-making under uncertainty is a crucial ability for autonomous systems. In its most general form, this problem can be formulated as a Partially Observable Markov Decision Process (POMDP). The solution policy of a POMDP can be implicitly encoded as a value function. In partially observable settings, the value function is typically learned via forward simulation of the system evolution. Focusing on accurate and long-range risk assessment, we propose a novel method, where the value function is learned in different phases via a bi-directional search in belief space. A backward value learning process provides a long-range and risk-aware base policy. A forward value learning process ensures local optimality and updates the policy via forward simulations. We consider a class of scalable and continuous-space rover navigation problems (RNP) to assess the safety, scalability, and optimality of the proposed algorithm. The results demonstrate the capabilities of the proposed algorithm in evaluating long-range risk/safety of the planner while addressing continuous problems with long planning horizons.

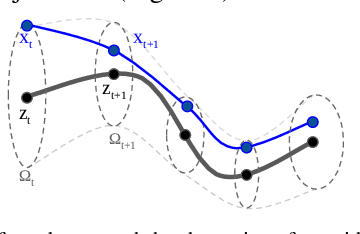

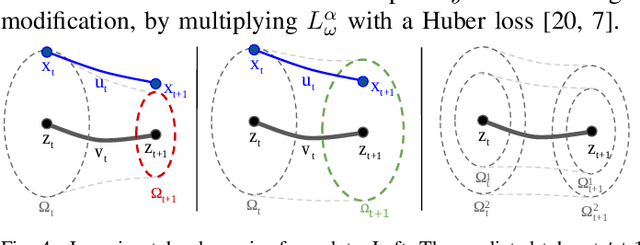

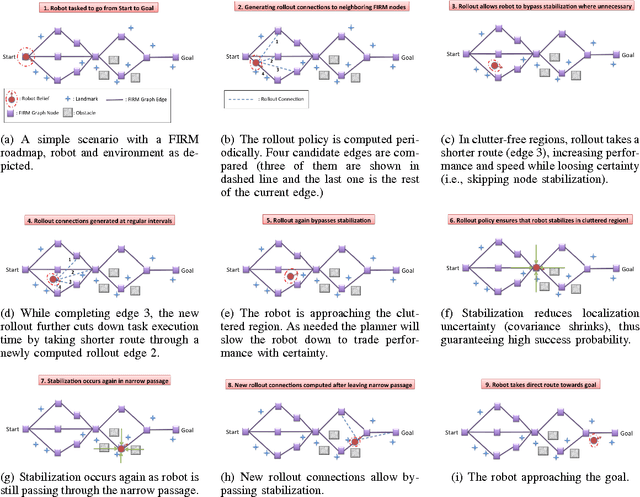

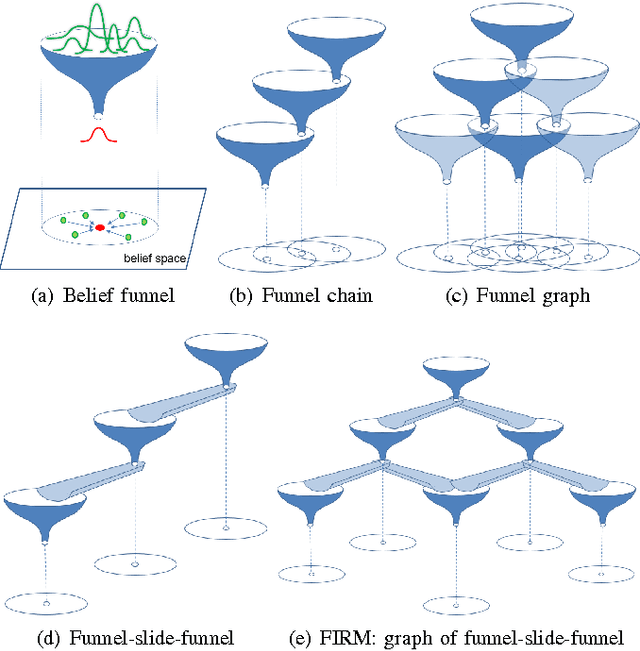

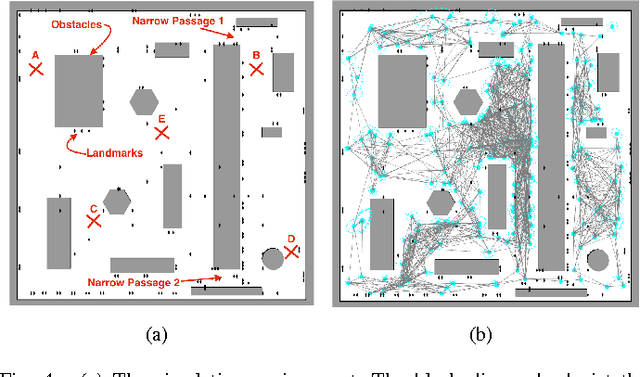

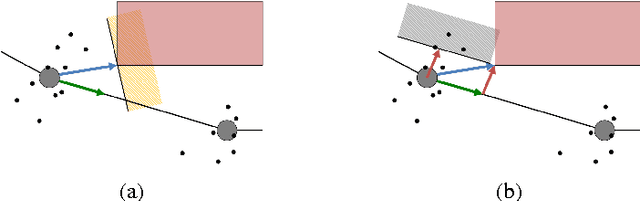

SLAP: Simultaneous Localization and Planning Under Uncertainty for Physical Mobile Robots via Dynamic Replanning in Belief Space: Extended version

May 13, 2018

Abstract: Simultaneous Localization and Planning (SLAP) is a crucial ability for an autonomous robot operating under uncertainty. In its most general form, SLAP induces a continuous POMDP (partially-observable Markov decision process), which needs to be repeatedly solved online. This paper addresses this problem and proposes a dynamic replanning scheme in belief space. The underlying POMDP, which is continuous in state, action, and observation space, is approximated offline via sampling-based methods, but operates in a replanning loop online to admit local improvements to the coarse offline policy. This construct enables the proposed method to combat changing environments and large localization errors, even when the change alters the homotopy class of the optimal trajectory. It further outperforms the state-of-the-art FIRM (Feedback-based Information RoadMap) method by eliminating unnecessary stabilization steps. Applying belief space planning to physical systems brings with it a plethora of challenges. A key focus of this paper is to implement the proposed planner on a physical robot and show the SLAP solution performance under uncertainty, in changing environments and in the presence of large disturbances, such as a kidnapped robot situation.

Real-Time Stochastic Kinodynamic Motion Planning via Multiobjective Search on GPUs

Feb 23, 2017

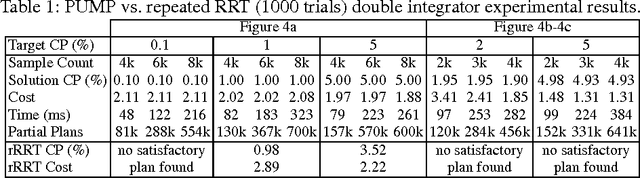

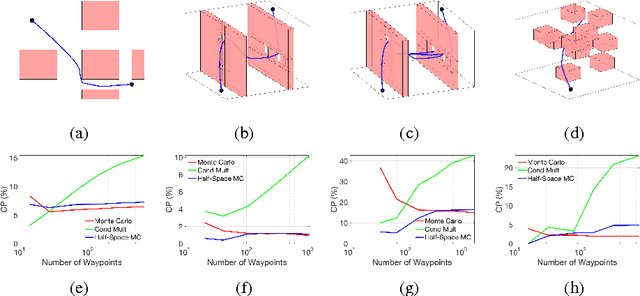

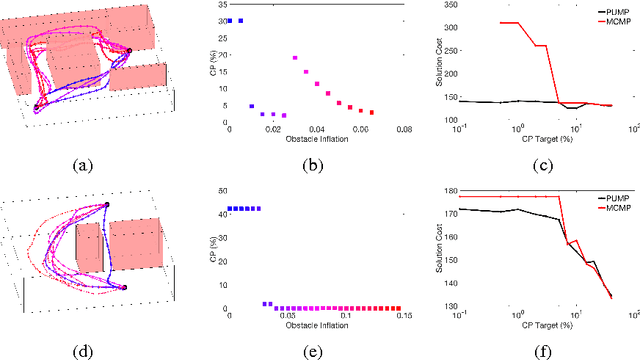

Abstract: In this paper we present the PUMP (Parallel Uncertainty-aware Multiobjective Planning) algorithm for addressing the stochastic kinodynamic motion planning problem, whereby one seeks a low-cost, dynamically-feasible motion plan subject to a constraint on collision probability (CP). To ensure exhaustive evaluation of candidate motion plans (as needed to trade off the competing objectives of performance and safety), PUMP incrementally builds the Pareto front of the problem, accounting for the optimization objective and an approximation of CP. This is performed by a massively parallel multiobjective search, here implemented with a focus on GPUs. Upon termination of the exploration phase, PUMP searches the Pareto set of motion plans to identify the lowest cost solution that is certified to satisfy the CP constraint (according to an asymptotically exact estimator). We introduce a novel particle-based CP approximation scheme, designed for efficient GPU implementation, which accounts for dependencies over the history of a trajectory execution. We present numerical experiments for quadrotor planning wherein PUMP identifies solutions in ~100 ms, evaluating over one hundred thousand partial plans through the course of its exploration phase. The results show that this multiobjective search achieves a lower motion plan cost, for the same CP constraint, compared to a safety buffer-based search heuristic and repeated RRT trials.
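The two-stage selection described above (build a Pareto front over cost and collision probability, then pick the cheapest plan that meets the CP constraint) can be sketched sequentially in a few lines. This is not PUMP itself: it omits the parallel GPU search, the particle-based CP approximation, and the certification step, and the candidate plans and function names are made up for illustration.

```python
def pareto_front(plans):
    """Return the non-dominated (cost, cp) pairs, sorted by increasing cost.

    A plan is dominated if another plan is no worse in both cost and
    collision probability (cp) and strictly better in at least one.
    """
    front = []
    best_cp = float("inf")
    # After sorting by cost (ties broken by cp), a plan is non-dominated
    # exactly when its cp is strictly lower than every cheaper plan's cp.
    for cost, cp in sorted(plans):
        if cp < best_cp:
            front.append((cost, cp))
            best_cp = cp
    return front


def cheapest_safe_plan(front, cp_limit):
    """Pick the lowest-cost plan on the front whose cp meets the limit."""
    for cost, cp in front:  # front is already sorted by cost
        if cp <= cp_limit:
            return (cost, cp)
    return None  # no candidate satisfies the constraint


# Hypothetical candidate plans as (cost, approximate collision probability).
candidates = [(10.0, 0.20), (12.0, 0.05), (11.0, 0.25), (15.0, 0.01), (16.0, 0.03)]
front = pareto_front(candidates)
choice = cheapest_safe_plan(front, cp_limit=0.10)
```

Keeping the whole front rather than a single best plan matters because the CP values used during the search are approximations: if a more exact estimate rejects one candidate, the next plan on the front is immediately available.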

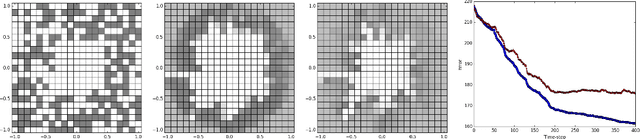

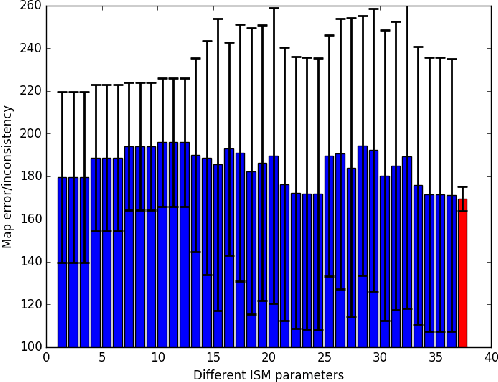

SMAP: Simultaneous Mapping and Planning on Occupancy Grids

Sep 19, 2016

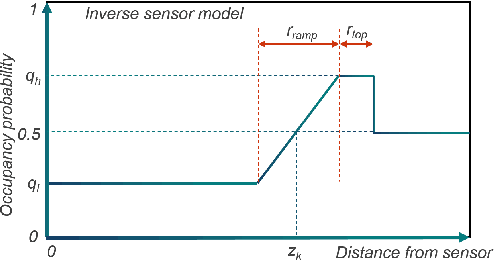

Abstract: Occupancy grids are the most common framework when it comes to creating a map of the environment using a robot. This paper studies occupancy grids from the motion planning perspective and proposes a mapping method that provides richer data (map) for the purpose of planning and collision avoidance. Typically, in occupancy grid mapping, each cell contains a single number representing the probability of the cell being occupied. This leads to conflicts in the map, and more importantly inconsistency between the map error and reported confidence values. Such inconsistencies pose challenges for the planner that relies on the generated map for planning motions. In this work, we store richer data at each voxel, including an accurate estimate of the variance of occupancy. We show that in addition to achieving maps that are often more accurate than traditional methods, the proposed filtering scheme demonstrates a much higher level of consistency between its error and its reported confidence. This allows the planner to reason about acquisition of the future sensory information. Such planning can lead to active perception maneuvers that, while guiding the robot toward the goal, aim at increasing the confidence in parts of the map that are relevant to accomplishing the task.
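To make the contrast between a single occupancy number and a cell that also carries a variance concrete, here is a minimal sketch that keeps a Beta distribution per cell. This is a deliberately simple stand-in, not the filtering scheme proposed in the paper: the Beta-Bernoulli model, the noise-free hit/miss updates, and the `BetaCell` name are all assumptions for illustration.

```python
class BetaCell:
    """One grid cell whose occupancy belief is a Beta(alpha, beta) distribution."""

    def __init__(self, alpha=1.0, beta=1.0):
        # Beta(1, 1) is the uniform prior: nothing is known about the cell yet.
        self.alpha = alpha
        self.beta = beta

    def update(self, hit):
        """Fold in one observation: hit=True means the sensor saw the cell occupied."""
        if hit:
            self.alpha += 1.0
        else:
            self.beta += 1.0

    @property
    def mean(self):
        """Expected occupancy probability."""
        return self.alpha / (self.alpha + self.beta)

    @property
    def variance(self):
        """Variance of the occupancy probability; shrinks as evidence accumulates."""
        total = self.alpha + self.beta
        return (self.alpha * self.beta) / (total * total * (total + 1.0))


cell = BetaCell()
prior_variance = cell.variance
for observation in (True, True, True, False):
    cell.update(observation)
```

A planner can use the variance to separate cells that are probably free and well observed from cells that merely look free because they have rarely been seen, which is the kind of distinction that motivates perception-aware maneuvers.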
