Tara Bartlett

Hybrid Aerial-Ground Vehicle Autonomy in GPS-denied Environments

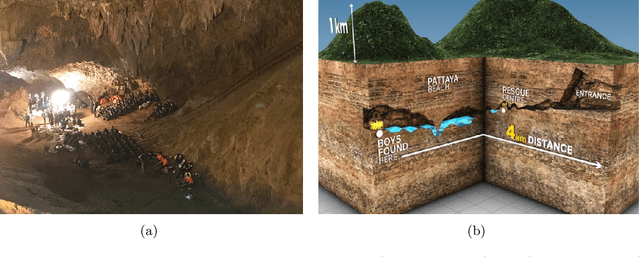

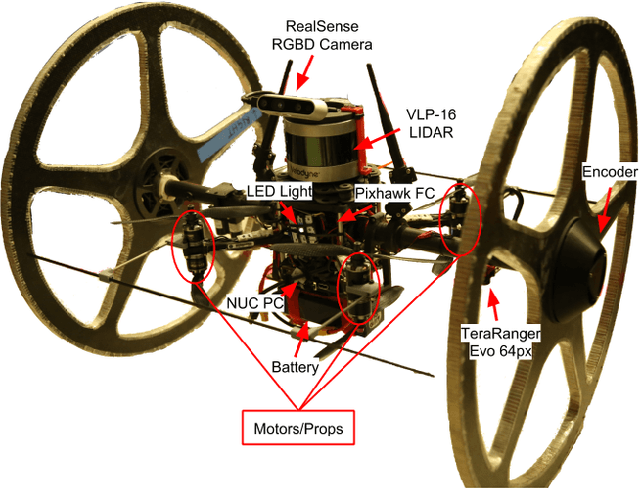

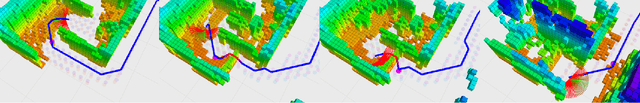

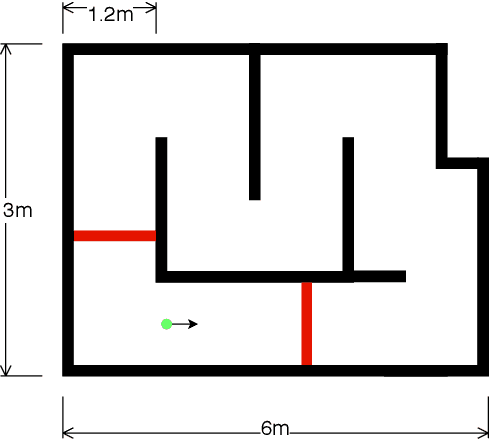

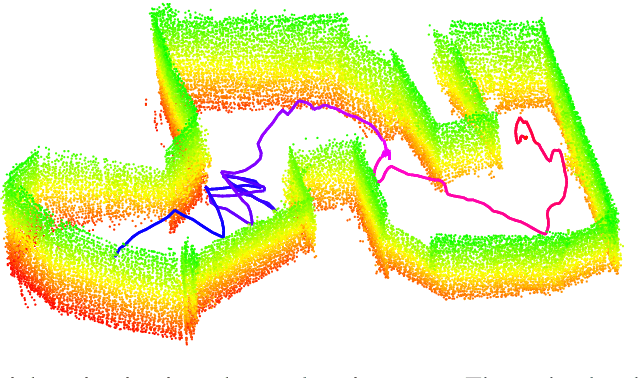

Sep 16, 2024Abstract:The DARPA Subterranean Challenge is leading the development of robots capable of mapping underground mines and tunnels up to 8km in length and identify objects and people. Developing these autonomous abilities paves the way for future planetary cave and surface exploration missions. The Co-STAR team, competing in this challenge, is developing a hybrid aerial-ground vehicle, known as the Rollocopter. The current design of this vehicle is a drone with wheels attached. This allows for the vehicle to roll, actuated by the propellers, and fly only when necessary, hence benefiting from the reduced power consumption of the ground mode and the enhanced mobility of the aerial mode. This thesis focuses on the development and increased robustness of the local planning architecture for the Rollocopter. The first development of thesis is a local planner capable of collision avoidance. The local planning node provides the basic functionality required for the vehicle to navigate autonomously. The next stage was augmenting this with the ability to plan more reliably without localisation. This was then integrated with a hybrid mobility mode capable of rolling and flying to exploit power and mobility benefits of the respective configurations. A traversability analysis algorithm as well as determining the terrain that the vehicle is able to traverse is in the late stages of development for informing the decisions of the hybrid planner. A simulator was developed to test the planning algorithms and improve the robustness of the vehicle to different environments. The results presented in this thesis are related to the mobility of the rollocopter and the range of environments that the vehicle is capable of traversing. Videos are included in which the vehicle successfully navigates through dust-ridden tunnels, horizontal mazes, and areas with rough terrain.

Real-time Coupled Centroidal Motion and Footstep Planning for Biped Robots

Sep 16, 2024Abstract:This paper presents an algorithm that finds a centroidal motion and footstep plan for a Spring-Loaded Inverted Pendulum (SLIP)-like bipedal robot model substantially faster than real-time. This is achieved with a novel representation of the dynamic footstep planning problem, where each point in the environment is considered a potential foothold that can apply a force to the center of mass to keep it on a desired trajectory. For a biped, up to two such footholds per time step must be selected, and we approximate this cardinality constraint with an iteratively reweighted $l_1$-norm minimization. Along with a linearizing approximation of an angular momentum constraint, this results in a quadratic program can be solved for a contact schedule and center of mass trajectory with automatic gait discovery. A 2 s planning horizon with 13 time steps and 20 surfaces available at each time is solved in 142 ms, roughly ten times faster than comparable existing methods in the literature. We demonstrate the versatility of this program in a variety of simulated environments.

NeBula: Quest for Robotic Autonomy in Challenging Environments; TEAM CoSTAR at the DARPA Subterranean Challenge

Mar 28, 2021

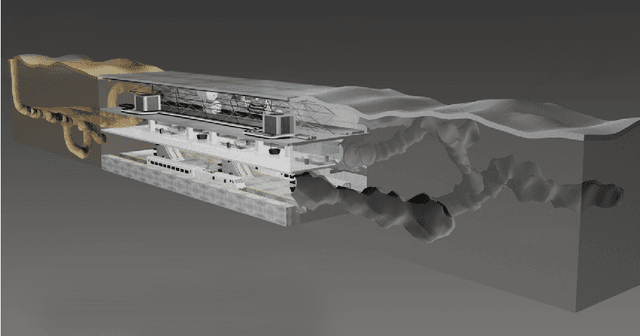

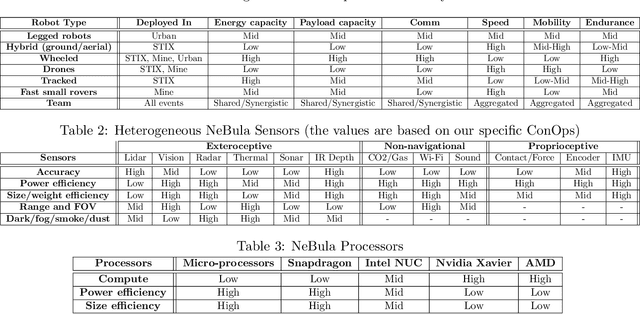

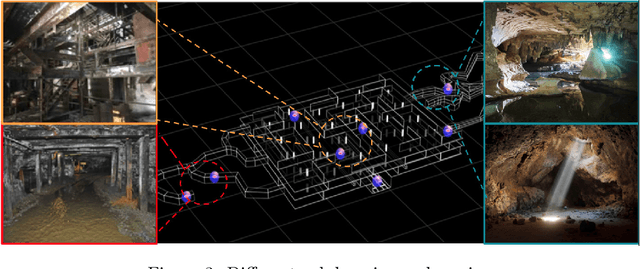

Abstract:This paper presents and discusses algorithms, hardware, and software architecture developed by the TEAM CoSTAR (Collaborative SubTerranean Autonomous Robots), competing in the DARPA Subterranean Challenge. Specifically, it presents the techniques utilized within the Tunnel (2019) and Urban (2020) competitions, where CoSTAR achieved 2nd and 1st place, respectively. We also discuss CoSTAR's demonstrations in Martian-analog surface and subsurface (lava tubes) exploration. The paper introduces our autonomy solution, referred to as NeBula (Networked Belief-aware Perceptual Autonomy). NeBula is an uncertainty-aware framework that aims at enabling resilient and modular autonomy solutions by performing reasoning and decision making in the belief space (space of probability distributions over the robot and world states). We discuss various components of the NeBula framework, including: (i) geometric and semantic environment mapping; (ii) a multi-modal positioning system; (iii) traversability analysis and local planning; (iv) global motion planning and exploration behavior; (i) risk-aware mission planning; (vi) networking and decentralized reasoning; and (vii) learning-enabled adaptation. We discuss the performance of NeBula on several robot types (e.g. wheeled, legged, flying), in various environments. We discuss the specific results and lessons learned from fielding this solution in the challenging courses of the DARPA Subterranean Challenge competition.

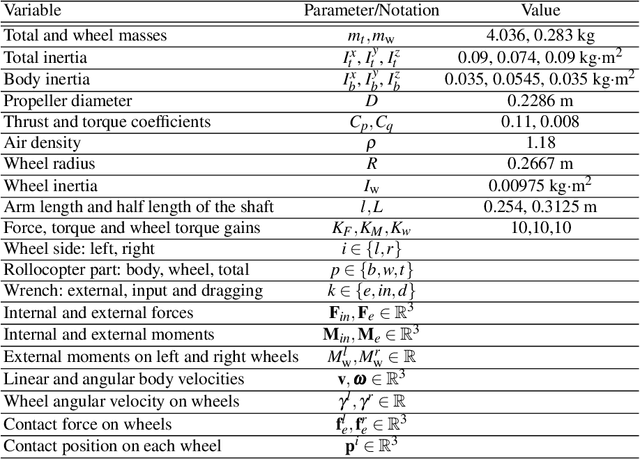

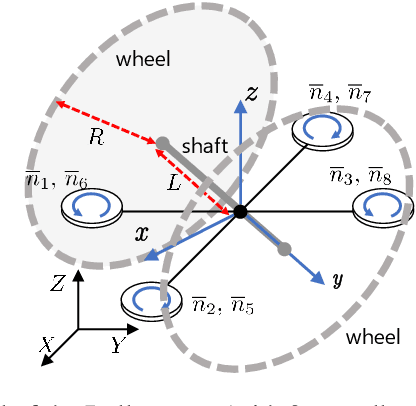

Autonomous Hybrid Ground/Aerial Mobility in Unknown Environments

Sep 11, 2020

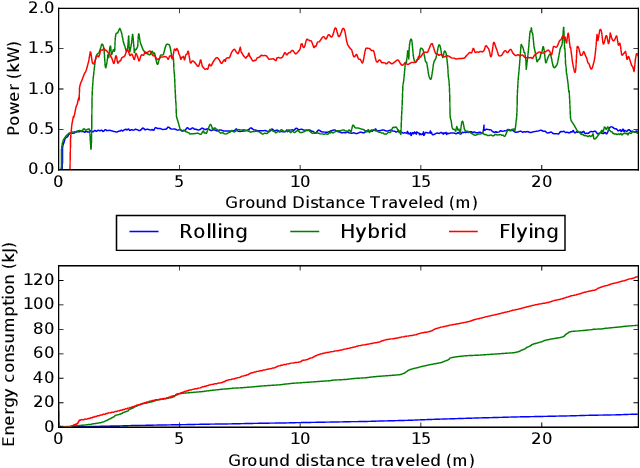

Abstract:Hybrid ground and aerial vehicles can possess distinct advantages over ground-only or flight-only designs in terms of energy savings and increased mobility. In this work we outline our unified framework for controls, planning, and autonomy of hybrid ground/air vehicles. Our contribution is three-fold: 1) We develop a control scheme for the control of passive two-wheeled hybrid ground/aerial vehicles. 2) We present a unified planner for both rolling and flying by leveraging differential flatness mappings. 3) We conduct experiments leveraging mapping and global planning for hybrid mobility in unknown environments, showing that hybrid mobility uses up to five times less energy than flying only.

Contact Inertial Odometry: Collisions are your Friend

Aug 30, 2019

Abstract:Autonomous exploration of unknown environments with aerial vehicles remains a challenging problem, especially in perceptually degraded conditions. Dust, smoke, fog, and a lack of visual or LiDAR-based features result in severe difficulties for state estimation and planning. The absence of measurement updates from visual or LiDAR odometry can cause large drifts in velocity estimates while propagating measurements from an IMU. Furthermore, it is not possible to construct a map for collision checking in absence of pose updates. In this work, we show that it is indeed possible to navigate without any exteroceptive sensing by exploiting collisions instead of treating them as constraints. To this end, we first perform modeling and system identification for a hybrid ground and aerial vehicle which can withstand collisions. Next, we develop a novel external wrench estimation algorithm for this class of vehicles. We then present a novel contact-based inertial odometry (CIO) algorithm: it uses estimated external forces to detect collisions and to generate pseudo-measurements of the robot velocity, fused in an Extended Kalman Filter. Finally, we implement a reactive planner and control law which encourage exploration by bouncing off obstacles. We validate our framework in hardware experiments and show that a quadrotor can traverse a cluttered environment using an IMU only. This work can be used on drones to recover from visual inertial odometry failure or on micro-drones that do not have the payload capacity to carry cameras, LiDARs or powerful computers.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge