Time Series Analysis

Time series analysis comprises statistical methods for analyzing a sequence of data points collected over an interval of time to identify interesting patterns and trends.

Papers and Code

Structured Sparse Transition Matrices to Enable State Tracking in State-Space Models

Sep 26, 2025

Modern state-space models (SSMs) often utilize transition matrices which enable efficient computation but pose restrictions on the model's expressivity, as measured in terms of the ability to emulate finite-state automata (FSA). While unstructured transition matrices are optimal in terms of expressivity, they come at a prohibitively high compute and memory cost even for moderate state sizes. We propose a structured sparse parametrization of transition matrices in SSMs that enables FSA state tracking with optimal state size and depth, while keeping the computational cost of the recurrence comparable to that of diagonal SSMs. Our method, PD-SSM, parametrizes the transition matrix as the product of a column one-hot matrix ($P$) and a complex-valued diagonal matrix ($D$). Consequently, the computational cost of parallel scans scales linearly with the state size. Theoretically, the model is BIBO-stable and can emulate any $N$-state FSA with one layer of dimension $N$ and a linear readout of size $N \times N$, significantly improving on all current structured SSM guarantees. Experimentally, the model significantly outperforms a wide collection of modern SSM variants on various FSA state tracking tasks. On multiclass time-series classification, the performance is comparable to that of neural controlled differential equations, a paradigm explicitly built for time-series analysis. Finally, we integrate PD-SSM into a hybrid Transformer-SSM architecture and demonstrate that the model can effectively track the states of a complex FSA in which transitions are encoded as a set of variable-length English sentences. The code is available at https://github.com/IBM/expressive-sparse-state-space-model
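The cost claim above can be illustrated with a small sketch. It is not the authors' implementation (see the linked repository for that); it only assumes the structure stated in the abstract, a column one-hot matrix $P$ times a diagonal matrix $D$. The names `pd_apply` and `pd_compose` are hypothetical. The point is that a PD matrix can be stored as two length-$N$ arrays, and that the product of two PD matrices is again a PD matrix computable in $O(N)$, which is what keeps a parallel scan linear in the state size.

```python
# Illustrative sketch only; not the authors' implementation.
# A PD matrix A = P @ D is stored as the pair (rows, diag):
#   rows[j] = row index of the single 1 in column j of P (column one-hot)
#   diag[j] = j-th diagonal entry of D (complex-valued)

def pd_apply(rows, diag, x):
    """Return (P @ D) @ x in O(N) time."""
    y = [0j] * len(x)
    for j, (r, d) in enumerate(zip(rows, diag)):
        y[r] += d * x[j]
    return y


def pd_compose(a, b):
    """Return the (rows, diag) pair representing (P_a D_a) @ (P_b D_b)."""
    rows_a, diag_a = a
    rows_b, diag_b = b
    # Column j of B points at row rows_b[j]; A then maps that row onward.
    rows = [rows_a[r] for r in rows_b]
    diag = [diag_a[r] * d for r, d in zip(rows_b, diag_b)]
    return rows, diag


# One step of a 3-state cyclic automaton: 0 -> 1 -> 2 -> 0.
cycle = ([1, 2, 0], [1 + 0j, 1 + 0j, 1 + 0j])
state = [1 + 0j, 0j, 0j]                  # one-hot encoding of state 0
next_state = pd_apply(*cycle, state)      # one-hot encoding of state 1
two_steps = pd_compose(cycle, cycle)      # still a PD matrix
```

Because `pd_compose` is associative and returns the same compact representation, it could serve as the combine operator of a scan over a sequence of transitions.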

StructuralDecompose: A Modular Framework for Robust Time Series Decomposition in R

Oct 06, 2025

We present StructuralDecompose, an R package for modular and interpretable time series decomposition. Unlike existing approaches that treat decomposition as a monolithic process, StructuralDecompose separates the analysis into distinct components: changepoint detection, anomaly detection, smoothing, and decomposition. This design provides flexibility and robustness, allowing users to tailor methods to specific time series characteristics. We demonstrate the package on simulated and real-world datasets, benchmark its performance against state-of-the-art tools such as Rbeast and autostsm, and discuss its role in interpretable machine learning workflows.

DyWPE: Signal-Aware Dynamic Wavelet Positional Encoding for Time Series Transformers

Sep 18, 2025

Existing positional encoding methods in transformers are fundamentally signal-agnostic, deriving positional information solely from sequence indices while ignoring the underlying signal characteristics. This limitation is particularly problematic for time series analysis, where signals exhibit complex, non-stationary dynamics across multiple temporal scales. We introduce Dynamic Wavelet Positional Encoding (DyWPE), a novel signal-aware framework that generates positional embeddings directly from input time series using the Discrete Wavelet Transform (DWT). Comprehensive experiments on ten diverse time series datasets demonstrate that DyWPE consistently outperforms eight existing state-of-the-art positional encoding methods, achieving average relative improvements of 9.1% compared to baseline sinusoidal absolute position encoding in biomedical signals, while maintaining competitive computational efficiency.
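As background for the DWT mentioned above, here is a minimal multi-level Haar decomposition in plain Python. It only illustrates how a DWT splits a signal into coarse and fine scales. The abstract does not say which wavelet family DyWPE uses or how coefficients become positional embeddings, so the Haar choice and the function names `haar_step` and `haar_wavedec` are assumptions made for this sketch.

```python
import math

def haar_step(x):
    """One level of the orthonormal Haar DWT: (approximation, detail)."""
    if len(x) % 2:
        raise ValueError("signal length must be even at every level")
    s = math.sqrt(2.0)
    approx = [(x[i] + x[i + 1]) / s for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / s for i in range(0, len(x), 2)]
    return approx, detail


def haar_wavedec(x, levels):
    """Return [approx_L, detail_L, ..., detail_1], coarsest scale first."""
    details = []
    approx = list(x)
    for _ in range(levels):
        approx, detail = haar_step(approx)
        details.append(detail)
    return [approx] + details[::-1]


signal = [4.0, 2.0, 6.0, 8.0]
coeffs = haar_wavedec(signal, levels=2)
```

Each detail band describes variation at one temporal scale, which is the kind of signal-dependent information that index-based positional encodings do not see.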

Learning with Preserving for Continual Multitask Learning

Nov 11, 2025

Artificial intelligence systems in critical fields like autonomous driving and medical imaging analysis often continually learn new tasks using a shared stream of input data. For instance, after learning to detect traffic signs, a model may later need to learn to classify traffic lights or different types of vehicles using the same camera feed. This scenario introduces a challenging setting we term Continual Multitask Learning (CMTL), where a model sequentially learns new tasks on an underlying data distribution without forgetting previously learned abilities. Existing continual learning methods often fail in this setting because they learn fragmented, task-specific features that interfere with one another. To address this, we introduce Learning with Preserving (LwP), a novel framework that shifts the focus from preserving task outputs to maintaining the geometric structure of the shared representation space. The core of LwP is a Dynamically Weighted Distance Preservation (DWDP) loss that prevents representation drift by regularizing the pairwise distances between latent data representations. This mechanism of preserving the underlying geometric structure allows the model to retain implicit knowledge and support diverse tasks without requiring a replay buffer, making it suitable for privacy-conscious applications. Extensive evaluations on time-series and image benchmarks show that LwP not only mitigates catastrophic forgetting but also consistently outperforms state-of-the-art baselines in CMTL tasks. Notably, our method shows superior robustness to distribution shifts and is the only approach to surpass the strong single-task learning baseline, underscoring its effectiveness for real-world dynamic environments.
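The idea of regularizing pairwise distances between latent representations can be sketched as follows. This is not the DWDP loss itself: the abstract does not specify the dynamic weighting, so the sketch uses uniform weights by default and accepts an optional, hypothetical `weights` mapping. The function name and the squared-difference penalty are likewise assumptions.

```python
import math
from itertools import combinations

def distance_preservation_loss(old_reps, new_reps, weights=None):
    """Weighted mean squared change in pairwise Euclidean distances.

    old_reps: representations from the frozen, previously trained model
    new_reps: representations of the same inputs from the current model
    weights:  optional dict {(i, j): w}; uniform if omitted (assumption)
    """
    pairs = list(combinations(range(len(old_reps)), 2))
    total, norm = 0.0, 0.0
    for i, j in pairs:
        w = 1.0 if weights is None else weights[(i, j)]
        d_old = math.dist(old_reps[i], old_reps[j])
        d_new = math.dist(new_reps[i], new_reps[j])
        total += w * (d_old - d_new) ** 2
        norm += w
    return total / norm if norm else 0.0


old = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
shifted = [(a + 1.0, b + 1.0) for a, b in old]   # same geometry, moved
scaled = [(2.0 * a, 2.0 * b) for a, b in old]    # geometry distorted

loss_shifted = distance_preservation_loss(old, shifted)
loss_scaled = distance_preservation_loss(old, scaled)
```

A rigid shift of the whole representation space leaves the loss at zero, while a change in relative geometry is penalized. That matches the stated goal of preserving structure and not task outputs.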

TENDE: Transfer Entropy Neural Diffusion Estimation

Oct 15, 2025

Transfer entropy measures directed information flow in time series, and it has become a fundamental quantity in applications spanning neuroscience, finance, and complex systems analysis. However, existing estimation methods suffer from the curse of dimensionality, require restrictive distributional assumptions, or need exponentially large datasets for reliable convergence. We address these limitations in the literature by proposing TENDE (Transfer Entropy Neural Diffusion Estimation), a novel approach that leverages score-based diffusion models to estimate transfer entropy through conditional mutual information. By learning score functions of the relevant conditional distributions, TENDE provides flexible, scalable estimation while making minimal assumptions about the underlying data-generating process. We demonstrate superior accuracy and robustness compared to existing neural estimators and other state-of-the-art approaches across synthetic benchmarks and real data.
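For reference, the standard definition of transfer entropy from a source series $X$ to a target series $Y$ as a conditional mutual information is given below. The history lengths $k$ and $l$ and the notation $Y_t^{(k)} = (Y_t, \dots, Y_{t-k+1})$ are introduced here for illustration and are not taken from the abstract.

$$
T_{X \to Y} = I\big(Y_{t+1};\, X_t^{(l)} \,\big|\, Y_t^{(k)}\big) = H\big(Y_{t+1} \mid Y_t^{(k)}\big) - H\big(Y_{t+1} \mid Y_t^{(k)}, X_t^{(l)}\big)
$$

The quantity is zero when the past of $X$ adds no information about the next value of $Y$ beyond what the past of $Y$ already provides.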

SERVIMON: AI-Driven Predictive Maintenance and Real-Time Monitoring for Astronomical Observatories

Oct 31, 2025

Objective: ServiMon is designed to offer a scalable and intelligent pipeline for data collection and auditing to monitor distributed astronomical systems such as the ASTRI Mini-Array. The system enhances quality control, predictive maintenance, and real-time anomaly detection for telescope operations. Methods: ServiMon integrates cloud-native technologies, including Prometheus, Grafana, Cassandra, Kafka, and InfluxDB, for telemetry collection and processing. It employs machine learning algorithms, notably Isolation Forest, to detect anomalies in Cassandra performance metrics. Key indicators such as read/write latency, throughput, and memory usage are continuously monitored, stored as time-series data, and preprocessed for feature engineering. Anomalies detected by the model are logged in InfluxDB v2 and accessed via Flux for real-time monitoring and visualization. Results: AI-based anomaly detection increases system resilience by identifying performance degradation at an early stage, minimizing downtime, and optimizing telescope operations. Additionally, ServiMon supports astrostatistical analysis by correlating telemetry with observational data, thus enhancing scientific data quality. AI-generated alerts also improve real-time monitoring, enabling proactive system management. Conclusion: ServiMon's scalable framework proves effective for predictive maintenance and real-time monitoring of astronomical infrastructures. By leveraging cloud and edge computing, it is adaptable to future large-scale experiments, optimizing both performance and cost. The combination of machine learning and big data analytics makes ServiMon a robust and flexible solution for modern and next-generation observational astronomy.

Realistic CDSS Drug Dosing with End-to-end Recurrent Q-learning for Dual Vasopressor Control

Oct 01, 2025

Reinforcement learning (RL) applications in Clinical Decision Support Systems (CDSS) frequently encounter skepticism from practitioners regarding inoperable dosing decisions. We address this challenge with an end-to-end approach for learning optimal drug dosing and control policies for dual vasopressor administration in intensive care unit (ICU) patients with septic shock. For realistic drug dosing, we apply action space design that accommodates discrete, continuous, and directional dosing strategies in a system that combines offline conservative Q-learning with novel recurrent modeling in a replay buffer to capture temporal dependencies in ICU time-series data. Our comparative analysis of norepinephrine dosing strategies across different action space formulations reveals that the designed action spaces improve interpretability and facilitate clinical adoption while preserving efficacy. Empirical results on eICU and MIMIC demonstrate that action space design profoundly influences learned behavioral policies. The proposed methods achieve improved patient outcomes of over 15% in survival improvement probability, while aligning with established clinical protocols.

Lightweight AC Arc Fault Diagnosis via Fourier Transform Inspired Multi-frequency Neural Network

Oct 30, 2025

Lightweight online detection of series arc faults is critically needed in residential and industrial power systems to prevent electrical fires. Existing diagnostic methods struggle to achieve both rapid response and robust accuracy under resource-constrained conditions. To overcome this challenge, this work proposes a multi-frequency neural network named MFNN, which embeds prior physical knowledge into the network. Inspired by the arcing current curve and Fourier decomposition analysis, we create an adaptive activation function with super-expressiveness, termed EAS, and a novel network architecture with branch networks to help MFNN extract features at multiple frequencies. In our experiments, MFNN is compared with eight advanced arc fault diagnosis models on an experimental dataset with multiple sampling times and multi-level noise. The experiments show: 1) MFNN outperforms the other models in arc fault location, benefiting from the signal decomposition performed by the branch networks. 2) The noise immunity of MFNN is much better than that of the other models, achieving gains of 14.51% over LCNN and 16.3% over BLS in test accuracy when SNR=-9. 3) EAS and the network architecture both contribute to the excellent performance of MFNN.

RMD: Robust Modal Decomposition with Constrained Bandwidth

Oct 27, 2025

Modal decomposition techniques, such as Empirical Mode Decomposition (EMD), Variational Mode Decomposition (VMD), and Singular Spectrum Analysis (SSA), have advanced time-frequency signal analysis since the early 21st century. These methods are generally classified into two categories: numerical optimization-based methods (EMD, VMD) and spectral decomposition methods (SSA) that consider the physical meaning of signals. The former can produce spurious modes due to the lack of physical constraints, while the latter is more sensitive to noise and struggles with nonlinear signals. Despite continuous improvements in these methods, a modal decomposition approach that effectively combines the strengths of both categories remains elusive. This paper thus proposes a Robust Modal Decomposition (RMD) method with constrained bandwidth, which preserves the intrinsic structure of the signal by mapping the time series into its trajectory-GRAM matrix in phase space. Moreover, the method incorporates bandwidth constraints during the decomposition process, enhancing noise resistance. Extensive experiments on synthetic and real-world datasets, including millimeter-wave radar echoes, electrocardiogram (ECG), phonocardiogram (PCG), and bearing fault detection data, demonstrate the method's effectiveness and versatility. All code and dataset samples are available on GitHub: https://github.com/Einstein-sworder/RMD.
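One plausible reading of "trajectory-GRAM matrix in phase space" is sketched below: the series is embedded into delay vectors (the trajectory matrix used in SSA), and the Gram matrix collects their pairwise inner products. This is an assumption based on standard SSA terminology, not the construction from the paper or its repository. The names `trajectory_matrix` and `gram_matrix` and the `window` parameter are hypothetical.

```python
def trajectory_matrix(x, window):
    """Delay embedding: row i is the window x[i], ..., x[i + window - 1]."""
    if not 1 <= window <= len(x):
        raise ValueError("window must be between 1 and len(x)")
    return [list(x[i:i + window]) for i in range(len(x) - window + 1)]


def gram_matrix(rows):
    """Pairwise inner products between the delay vectors."""
    return [[sum(a * b for a, b in zip(u, v)) for v in rows] for u in rows]


series = [1, 2, 3, 4]
traj = trajectory_matrix(series, window=2)   # [[1, 2], [2, 3], [3, 4]]
gram = gram_matrix(traj)
```

The Gram matrix is symmetric and positive semidefinite, so it admits an eigendecomposition from which modes could be extracted. The bandwidth constraint described in the abstract is not modeled here.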

On Evaluating Loss Functions for Stock Ranking: An Empirical Analysis With Transformer Model

Oct 15, 2025

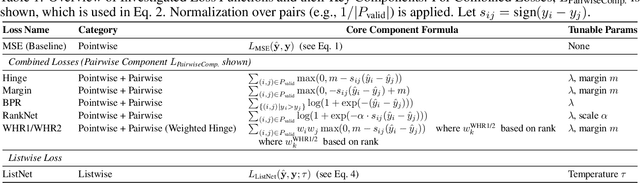

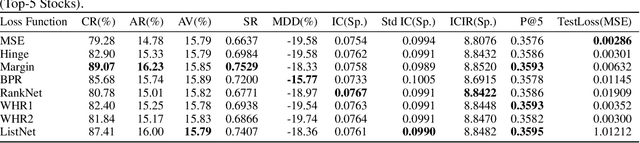

Quantitative trading strategies rely on accurately ranking stocks to identify profitable investments. Effective portfolio management requires models that can reliably order future stock returns. Transformer models are promising for understanding financial time series, but how different training loss functions affect their ability to rank stocks well is not yet fully understood. Financial markets are challenging due to their changing nature and complex relationships between stocks. Standard loss functions, which aim for simple prediction accuracy, are often not enough because they do not directly teach models to learn the correct order of stock returns. While many advanced ranking losses exist in fields such as information retrieval, there has not been a thorough comparison of how well they work for ranking financial returns, especially when used with modern Transformer models for stock selection. This paper addresses this gap by systematically evaluating a diverse set of advanced loss functions, including pointwise, pairwise, and listwise losses, for daily stock return forecasting to facilitate rank-based portfolio selection on S&P 500 data. We focus on assessing how each loss function influences the model's ability to discern profitable relative orderings among assets. Our research contributes a comprehensive benchmark revealing how different loss functions impact a model's ability to learn cross-sectional and temporal patterns crucial for portfolio selection, thereby offering practical guidance for optimizing ranking-based trading strategies.
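To make the three loss families concrete, here is one common representative of each in plain Python: mean squared error (pointwise), a logistic loss over ordered pairs (pairwise), and a ListNet-style top-one cross-entropy (listwise). The abstract does not name the specific losses evaluated, so these choices and the function names are assumptions for illustration only.

```python
import math

def pointwise_mse(scores, returns):
    """Pointwise: penalize each prediction's error independently."""
    return sum((s - r) ** 2 for s, r in zip(scores, returns)) / len(scores)


def pairwise_logistic(scores, returns):
    """Pairwise: penalize pairs whose score order contradicts return order."""
    losses = [
        math.log1p(math.exp(-(scores[i] - scores[j])))
        for i in range(len(scores))
        for j in range(len(scores))
        if returns[i] > returns[j]
    ]
    return sum(losses) / len(losses) if losses else 0.0


def _log_softmax(values):
    m = max(values)
    log_z = m + math.log(sum(math.exp(v - m) for v in values))
    return [v - log_z for v in values]


def listwise_listnet(scores, returns):
    """Listwise: cross-entropy between softmax(returns) and softmax(scores)."""
    target = [math.exp(v) for v in _log_softmax(returns)]
    log_pred = _log_softmax(scores)
    return -sum(p * lq for p, lq in zip(target, log_pred))


returns = [0.02, -0.01, 0.00]       # realized next-day returns (made up)
well_ordered = [3.0, 1.0, 2.0]      # scores that rank the stocks correctly
mis_ordered = [1.0, 3.0, 2.0]       # scores that swap best and worst
```

The pointwise loss looks at each stock in isolation, whereas the pairwise and listwise losses depend only on how scores compare across the cross-section, which is what a rank-based portfolio uses.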
