Ziyuan Huang

Rethinking Efficient Tuning Methods from a Unified Perspective

Mar 01, 2023

Abstract:Parameter-efficient transfer learning (PETL) based on large-scale pre-trained foundation models has achieved great success in various downstream applications. Existing tuning methods, such as prompt, prefix, and adapter, perform task-specific lightweight adjustments to different parts of the original architecture. However, they take effect on only some parts of the pre-trained models, i.e., only the feed-forward layers or the self-attention layers, which leaves the remaining frozen structures unable to adapt to the data distributions of downstream tasks. Further, the existing structures are strongly coupled with Transformers, hindering parameter-efficient deployment as well as the design flexibility for new approaches. In this paper, we revisit the design paradigm of PETL and derive a unified framework, U-Tuning, for parameter-efficient transfer learning, which is composed of an operation with frozen parameters and a unified tuner that adapts the operation for downstream applications. The U-Tuning framework can simultaneously encompass existing methods and derive new approaches for parameter-efficient transfer learning, which achieve on-par or better performance on CIFAR-100 and FGVC datasets when compared with existing PETL methods.
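
As a rough illustration of the "frozen operation plus tuner" structure described in this abstract, the sketch below wraps a fixed function with a small trainable branch and adds the two outputs. The additive combination rule, the names `frozen_op`, `Tuner`, and `u_tuned`, and the scale-and-shift form of the tuner are all assumptions made for illustration; the abstract does not specify any of them.

```python
from typing import Callable, List

Vector = List[float]


def frozen_op(x: Vector) -> Vector:
    """Stand-in for a pre-trained operation whose parameters stay fixed."""
    return [2.0 * v for v in x]


class Tuner:
    """Hypothetical lightweight branch: a per-element scale and shift."""

    def __init__(self, dim: int) -> None:
        # Zero initialisation: the wrapped model starts out identical
        # to the frozen operation.
        self.scale = [0.0] * dim
        self.shift = [0.0] * dim

    def __call__(self, x: Vector) -> Vector:
        return [s * v + b for s, v, b in zip(self.scale, x, self.shift)]


def u_tuned(op: Callable[[Vector], Vector], tuner: Tuner, x: Vector) -> Vector:
    """Assumed combination rule: frozen output plus tuner output."""
    return [a + b for a, b in zip(op(x), tuner(x))]
```

Only the values held by `Tuner` would be updated on a downstream task in this sketch, while `frozen_op` is left untouched.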

Physically Plausible Animation of Human Upper Body from a Single Image

Dec 09, 2022

Abstract:We present a new method for generating controllable, dynamically responsive, and photorealistic human animations. Given an image of a person, our system allows the user to generate Physically plausible Upper Body Animation (PUBA) using interaction in the image space, such as dragging their hand to various locations. We formulate a reinforcement learning problem to train a dynamic model that predicts the person's next 2D state (i.e., keypoints on the image) conditioned on a 3D action (i.e., joint torque), and a policy that outputs optimal actions to control the person to achieve desired goals. The dynamic model leverages the expressiveness of 3D simulation and the visual realism of 2D videos. PUBA generates 2D keypoint sequences that achieve task goals while being responsive to forceful perturbation. The sequences of keypoints are then translated by a pose-to-image generator to produce the final photorealistic video.

Progressive Learning without Forgetting

Nov 28, 2022

Abstract:Learning from changing tasks and sequential experience without forgetting the obtained knowledge is a challenging problem for artificial neural networks. In this work, we focus on two challenging problems in the paradigm of Continual Learning (CL) without involving any old data: (i) the accumulation of catastrophic forgetting caused by the gradually fading knowledge space from which the model learns the previous knowledge; (ii) the uncontrolled tug-of-war dynamics to balance the stability and plasticity during the learning of new tasks. In order to tackle these problems, we present Progressive Learning without Forgetting (PLwF) and a credit assignment regime in the optimizer. PLwF densely introduces model functions from previous tasks to construct a knowledge space such that it contains the most reliable knowledge on each task and the distribution information of different tasks, while credit assignment controls the tug-of-war dynamics by removing gradient conflict through projection. Extensive ablation experiments demonstrate the effectiveness of PLwF and credit assignment. In comparison with other CL methods, we report notably better results even without relying on any raw data.
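
The phrase "removing gradient conflict through projection" can be made concrete with a small sketch. The abstract does not give the exact projection rule, so the code below assumes a common choice: when two gradients have a negative inner product, the component of one that points against the other is subtracted. The function names are hypothetical.

```python
from typing import List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product of two equally sized vectors."""
    return sum(x * y for x, y in zip(a, b))


def project_out_conflict(grad: Sequence[float], ref: Sequence[float]) -> List[float]:
    """Return `grad` with its conflicting component along `ref` removed.

    Assumed rule: if grad . ref < 0, subtract the projection of `grad`
    onto `ref`; otherwise return `grad` unchanged.
    """
    inner = dot(grad, ref)
    ref_sq = dot(ref, ref)
    if inner >= 0.0 or ref_sq == 0.0:
        return list(grad)
    coeff = inner / ref_sq
    return [g - coeff * r for g, r in zip(grad, ref)]
```

After projection the returned vector is orthogonal to `ref`, so a step along it no longer opposes the reference direction to first order.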

PVT++: A Simple End-to-End Latency-Aware Visual Tracking Framework

Nov 21, 2022

Abstract:Visual object tracking is an essential capability of intelligent robots. Most existing approaches have ignored the online latency that can cause severe performance degradation during real-world processing. Especially for unmanned aerial vehicles, where robust tracking is more challenging and onboard computation is limited, the latency issue could be fatal. In this work, we present a simple framework for end-to-end latency-aware tracking, i.e., end-to-end predictive visual tracking (PVT++). PVT++ is capable of turning most leading-edge trackers into predictive trackers by appending an online predictor. Unlike existing solutions that use model-based approaches, our framework is learnable, such that it can not only take motion information as input but also take advantage of visual cues or a combination of both. Moreover, since PVT++ is end-to-end optimizable, it can further boost the latency-aware tracking performance by joint training. Additionally, this work presents an extended latency-aware evaluation benchmark for assessing an any-speed tracker in the online setting. Empirical results on a robotic platform from an aerial perspective show that PVT++ can achieve up to 60% performance gain on various trackers and exhibit better robustness than prior model-based solutions, largely mitigating the degradation brought by latency. Code and models will be made public.

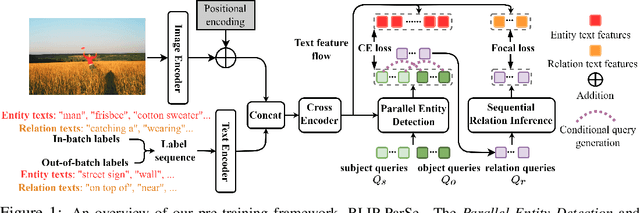

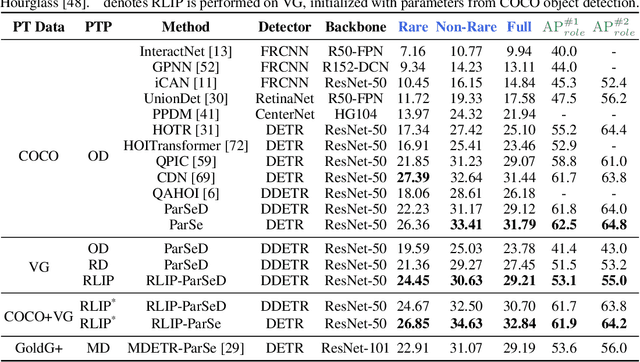

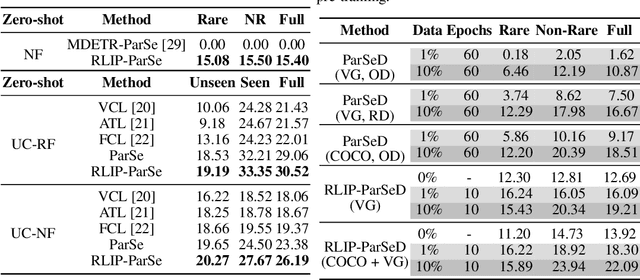

RLIP: Relational Language-Image Pre-training for Human-Object Interaction Detection

Sep 05, 2022

Abstract:The task of Human-Object Interaction (HOI) detection targets fine-grained visual parsing of humans interacting with their environment, enabling a broad range of applications. Prior work has demonstrated the benefits of effective architecture design and integration of relevant cues for more accurate HOI detection. However, the design of an appropriate pre-training strategy for this task remains underexplored by existing approaches. To address this gap, we propose Relational Language-Image Pre-training (RLIP), a strategy for contrastive pre-training that leverages both entity and relation descriptions. To make effective use of such pre-training, we make three technical contributions: (1) a new Parallel entity detection and Sequential relation inference (ParSe) architecture that enables the use of both entity and relation descriptions during holistically optimized pre-training; (2) a synthetic data generation framework, Label Sequence Extension, that expands the scale of language data available within each minibatch; (3) mechanisms to account for ambiguity, Relation Quality Labels and Relation Pseudo-Labels, to mitigate the influence of ambiguous/noisy samples in the pre-training data. Through extensive experiments, we demonstrate the benefits of these contributions, collectively termed RLIP-ParSe, for improved zero-shot, few-shot and fine-tuning HOI detection performance as well as increased robustness to learning from noisy annotations. Code will be available at https://github.com/JacobYuan7/RLIP.

MAR: Masked Autoencoders for Efficient Action Recognition

Jul 24, 2022

Abstract:Standard approaches for video recognition usually operate on the full input videos, which is inefficient due to the widely present spatio-temporal redundancy in videos. Recent progress in masked video modelling, i.e., VideoMAE, has shown the ability of vanilla Vision Transformers (ViT) to complement spatio-temporal contexts given only limited visual contents. Inspired by this, we propose Masked Action Recognition (MAR), which reduces the redundant computation by discarding a proportion of patches and operating only on a part of the videos. MAR contains the following two indispensable components: cell running masking and bridging classifier. Specifically, to enable the ViT to perceive the details beyond the visible patches easily, cell running masking is presented to preserve the spatio-temporal correlations in videos, which ensures the patches at the same spatial location can be observed in turn for easy reconstructions. Additionally, we notice that, although the partially observed features can reconstruct semantically explicit invisible patches, they fail to achieve accurate classification. To address this, a bridging classifier is proposed to bridge the semantic gap between the ViT encoded features for reconstruction and the features specialized for classification. Our proposed MAR reduces the computational cost of ViT by 53% and extensive experiments show that MAR consistently outperforms existing ViT models by a notable margin. In particular, we find that a ViT-Large trained by MAR outperforms the ViT-Huge trained by a standard training scheme by convincing margins on both Kinetics-400 and Something-Something v2 datasets, while our computation overhead of ViT-Large is only 14.5% of ViT-Huge.
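
To illustrate the idea that patches at the same spatial location are "observed in turn", here is a minimal sketch of a running mask over small cells. The 2x2 cell size, the raster visiting order, and keeping exactly one visible patch per cell per frame are assumptions for illustration; the abstract does not state the actual pattern or masking ratio used by MAR.

```python
from typing import List


def cell_running_mask(num_frames: int, height: int, width: int,
                      cell: int = 2) -> List[List[List[bool]]]:
    """Return masks[t][i][j], True where patch (i, j) is visible at frame t.

    Hypothetical scheme: the patch grid is tiled with cell x cell blocks,
    and the visible position inside every block advances by one step per
    frame, so each location becomes visible once every cell * cell frames.
    """
    # Raster order of positions inside one cell (an assumed order).
    order = [(r, c) for r in range(cell) for c in range(cell)]
    masks = []
    for t in range(num_frames):
        vis_r, vis_c = order[t % len(order)]
        frame = [
            [(i % cell == vis_r) and (j % cell == vis_c) for j in range(width)]
            for i in range(height)
        ]
        masks.append(frame)
    return masks
```

With these assumed settings, a quarter of the patches are visible in each frame, and only those visible patches would be passed to the encoder.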

Learning from Untrimmed Videos: Self-Supervised Video Representation Learning with Hierarchical Consistency

Apr 06, 2022

Abstract:Natural videos provide rich visual contents for self-supervised learning. Yet most existing approaches for learning spatio-temporal representations rely on manually trimmed videos, leading to limited diversity in visual patterns and limited performance gain. In this work, we aim to learn representations by leveraging more abundant information in untrimmed videos. To this end, we propose to learn a hierarchy of consistencies in videos, i.e., visual consistency and topical consistency, corresponding respectively to clip pairs that tend to be visually similar when separated by a short time span and share similar topics when separated by a long time span. Specifically, a hierarchical consistency learning framework HiCo is presented, where the visually consistent pairs are encouraged to have the same representation through contrastive learning, while the topically consistent pairs are coupled through a topical classifier that distinguishes whether they are topic related. Further, we impose a gradual sampling algorithm for the proposed hierarchical consistency learning, and demonstrate its theoretical superiority. Empirically, we show that HiCo not only generates stronger representations on untrimmed videos but also improves the representation quality when applied to trimmed videos. This is in contrast to standard contrastive learning that fails to learn appropriate representations from untrimmed videos.

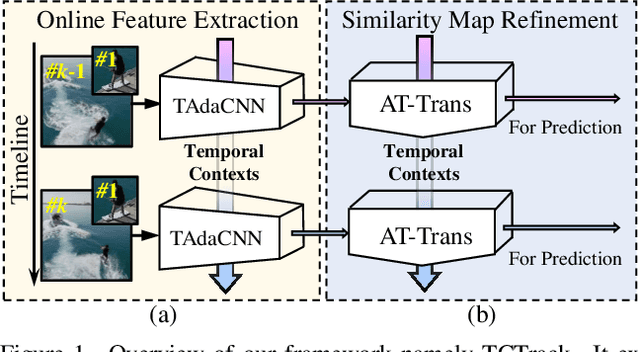

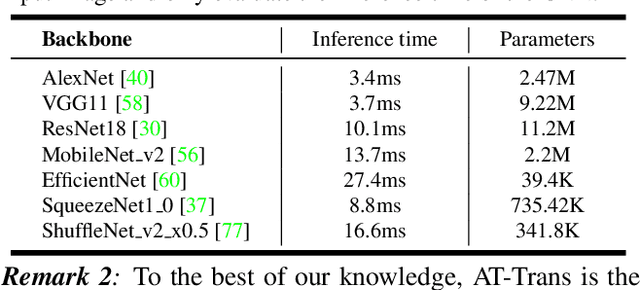

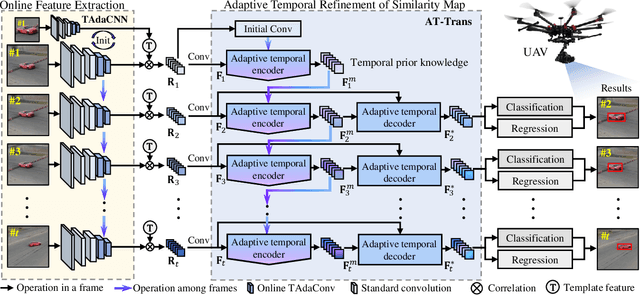

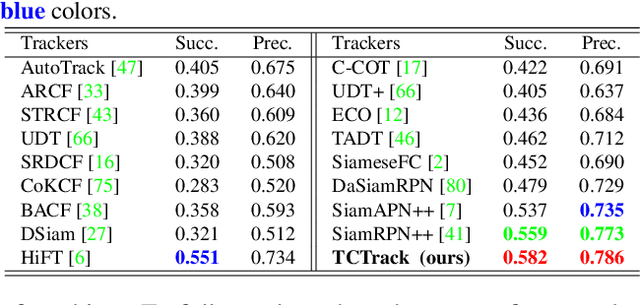

TCTrack: Temporal Contexts for Aerial Tracking

Mar 28, 2022

Abstract:Temporal contexts among consecutive frames are far from being fully utilized in existing visual trackers. In this work, we present TCTrack, a comprehensive framework to fully exploit temporal contexts for aerial tracking. The temporal contexts are incorporated at two levels: the extraction of features and the refinement of similarity maps. Specifically, for feature extraction, an online temporally adaptive convolution is proposed to enhance the spatial features using temporal information, which is achieved by dynamically calibrating the convolution weights according to the previous frames. For similarity map refinement, we propose an adaptive temporal transformer, which first effectively encodes temporal knowledge in a memory-efficient way, before the temporal knowledge is decoded for accurate adjustment of the similarity map. TCTrack is effective and efficient: evaluation on four aerial tracking benchmarks shows its impressive performance; real-world UAV tests show its high speed of over 27 FPS on NVIDIA Jetson AGX Xavier.

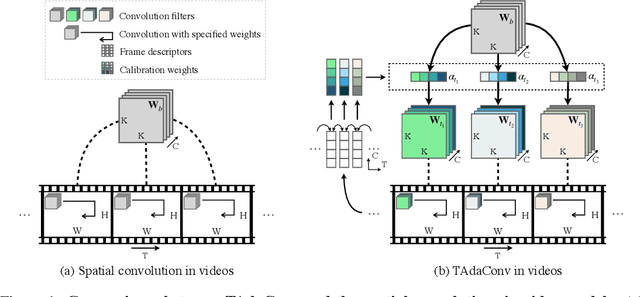

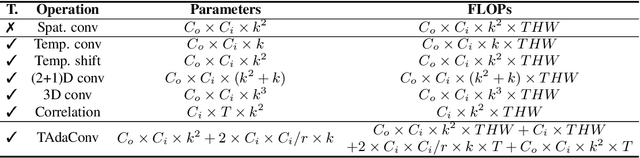

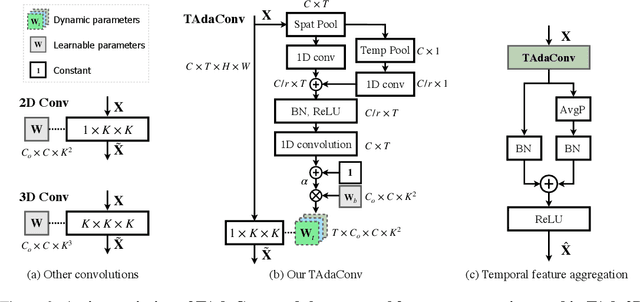

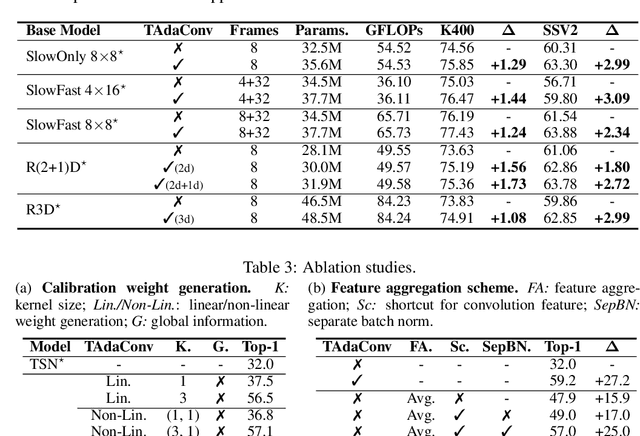

TAda! Temporally-Adaptive Convolutions for Video Understanding

Oct 12, 2021

Abstract:Spatial convolutions are widely used in numerous deep video models. They fundamentally assume spatio-temporal invariance, i.e., using shared weights for every location in different frames. This work presents Temporally-Adaptive Convolutions (TAdaConv) for video understanding, which shows that adaptive weight calibration along the temporal dimension is an efficient way to facilitate modelling complex temporal dynamics in videos. Specifically, TAdaConv empowers the spatial convolutions with temporal modelling abilities by calibrating the convolution weights for each frame according to its local and global temporal context. Compared to previous temporal modelling operations, TAdaConv is more efficient as it operates over the convolution kernels instead of the features, whose dimension is an order of magnitude smaller than the spatial resolutions. Further, the kernel calibration also brings an increased model capacity. We construct TAda2D networks by replacing the spatial convolutions in ResNet with TAdaConv, which leads to on-par or better performance compared to state-of-the-art approaches on multiple video action recognition and localization benchmarks. We also demonstrate that as a plug-in operation with negligible computation overhead, TAdaConv can effectively improve many existing video models by a convincing margin. Code and models will be made available at https://github.com/alibaba-mmai-research/pytorch-video-understanding.
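
The per-frame weight calibration described in this abstract can be sketched on a toy case. The code below treats each frame as a single feature vector and the convolution as a pointwise (1x1) one, then scales the shared base weights by a per-frame, per-channel factor before applying them. The calibration function used here (one plus the difference between a three-frame local mean and the global mean) is a made-up stand-in, not the calibration from the paper, and all function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def calibration_factors(frames: Matrix) -> Matrix:
    """Toy stand-in: factor = 1 + (local context - global context) per channel."""
    num_frames, channels = len(frames), len(frames[0])
    global_ctx = [sum(f[c] for f in frames) / num_frames for c in range(channels)]
    factors = []
    for t in range(num_frames):
        # Local context: mean over the frame and its immediate neighbours.
        neigh = frames[max(0, t - 1): t + 2]
        local_ctx = [sum(f[c] for f in neigh) / len(neigh) for c in range(channels)]
        factors.append([1.0 + l - g for l, g in zip(local_ctx, global_ctx)])
    return factors


def tada_pointwise(frames: Matrix, base_weight: Matrix) -> Matrix:
    """Apply a shared pointwise weight, calibrated separately for each frame."""
    outputs = []
    for x, alpha in zip(frames, calibration_factors(frames)):
        # Calibrate the kernel (not the features): scale each input channel.
        weight_t = [[w * a for w, a in zip(row, alpha)] for row in base_weight]
        outputs.append([sum(w * v for w, v in zip(row, x)) for row in weight_t])
    return outputs
```

When all frames are identical the factors equal one and the result matches an ordinary shared-weight convolution, which is the temporally invariant baseline the abstract contrasts against.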

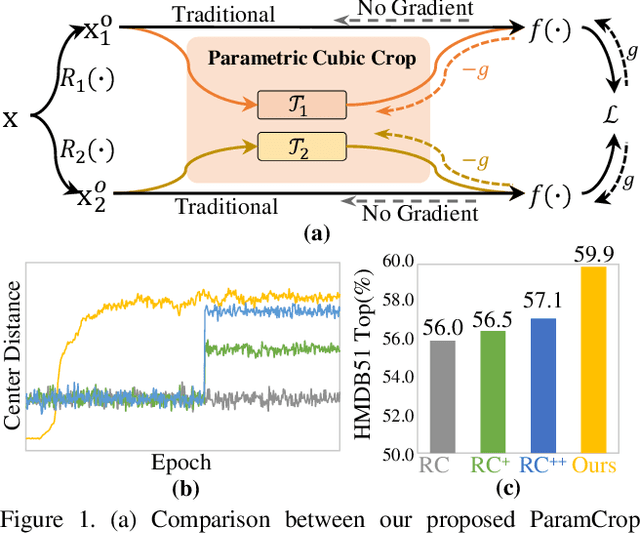

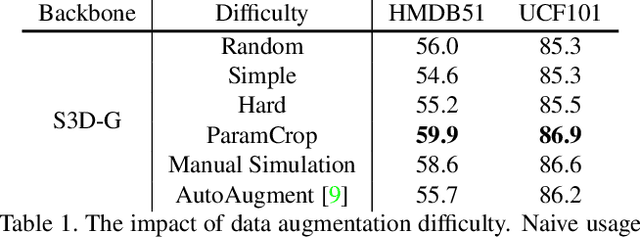

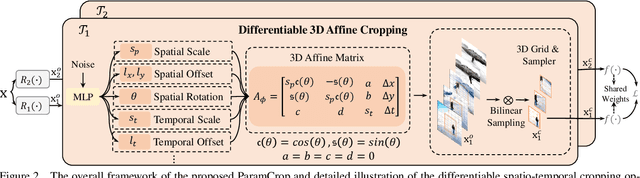

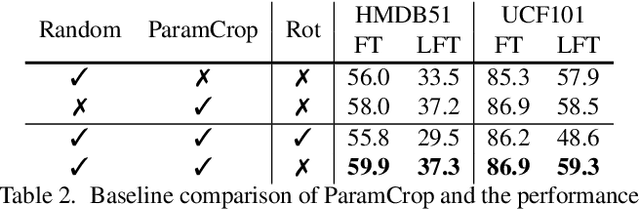

ParamCrop: Parametric Cubic Cropping for Video Contrastive Learning

Sep 05, 2021

Abstract:The central idea of contrastive learning is to discriminate between different instances and force different views of the same instance to share the same representation. To avoid trivial solutions, augmentation plays an important role in generating different views, among which random cropping is shown to be effective for the model to learn a strong and generalized representation. The commonly used random crop operation keeps the difference between two views statistically consistent along the training process. In this work, we challenge this convention by showing that adaptively controlling the disparity between two augmented views along the training process enhances the quality of the learnt representation. Specifically, we present a parametric cubic cropping operation, ParamCrop, for video contrastive learning, which automatically crops a 3D cubic region from the video by differentiable 3D affine transformations. ParamCrop is trained simultaneously with the video backbone using an adversarial objective and learns an optimal cropping strategy from the data. The visualizations show that the center distance and the IoU between two augmented views are adaptively controlled by ParamCrop and the learned change in the disparity along the training process is beneficial to learning a strong representation. Extensive ablation studies demonstrate the effectiveness of the proposed ParamCrop on multiple contrastive learning frameworks and video backbones. With ParamCrop, we improve the state-of-the-art performance on both HMDB51 and UCF101 datasets.
