Ziyi Chen

Provably Efficient Algorithms for S- and Non-Rectangular Robust MDPs with General Parameterization

Feb 11, 2026

Abstract: We study robust Markov decision processes (RMDPs) with general policy parameterization under s-rectangular and non-rectangular uncertainty sets. Prior work is largely limited to tabular policies, and hence either lacks sample complexity guarantees or incurs high computational cost. Our method reduces average-reward RMDPs to entropy-regularized discounted robust MDPs, restoring strong duality and enabling tractable equilibrium computation. We prove novel Lipschitz and Lipschitz-smoothness properties for general policy parameterizations that extend to infinite state spaces. To address infinite-horizon gradient estimation, we introduce a multilevel Monte Carlo gradient estimator with $\tilde{\mathcal{O}}(\epsilon^{-2})$ sample complexity, a factor of $\mathcal{O}(\epsilon^{-2})$ improvement over prior work. Building on this, we design a projected gradient descent algorithm for s-rectangular uncertainty ($\mathcal{O}(\epsilon^{-5})$) and a Frank-Wolfe algorithm for non-rectangular uncertainty ($\mathcal{O}(\epsilon^{-4})$ discounted, $\mathcal{O}(\epsilon^{-10.5})$ average reward), significantly improving prior results in both the discounted and average-reward settings. Our work is the first to provide sample complexity guarantees for RMDPs with general policy parameterization beyond $(s, a)$-rectangularity. It also provides the first such guarantees in the average-reward setting and improves existing bounds for discounted robust MDPs.

AdaFuse: Adaptive Ensemble Decoding with Test-Time Scaling for LLMs

Jan 09, 2026

Abstract: Large language models (LLMs) exhibit complementary strengths arising from differences in pretraining data, model architectures, and decoding behaviors. Inference-time ensembling provides a practical way to combine these capabilities without retraining. However, existing ensemble approaches suffer from fundamental limitations. Most rely on fixed fusion granularity, which lacks the flexibility required for mid-generation adaptation and fails to adapt to different generation characteristics across tasks. To address these challenges, we propose AdaFuse, an adaptive ensemble decoding framework that dynamically selects semantically appropriate fusion units during generation. Rather than committing to a fixed granularity, AdaFuse adjusts fusion behavior on the fly based on the decoding context, with words serving as basic building blocks for alignment. To be specific, we introduce an uncertainty-based criterion to decide whether to apply ensembling at each decoding step. Under confident decoding states, the model continues generation directly. In less certain states, AdaFuse invokes a diversity-aware scaling strategy to explore alternative candidate continuations and inform ensemble decisions. This design establishes a synergistic interaction between adaptive ensembling and test-time scaling, where ensemble decisions guide targeted exploration, and the resulting diversity in turn strengthens ensemble quality. Experiments on open-domain question answering, arithmetic reasoning, and machine translation demonstrate that AdaFuse consistently outperforms strong ensemble baselines, achieving an average relative improvement of 6.88%. The code is available at https://github.com/CCM0111/AdaFuse.
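To make the idea of an uncertainty-based gate concrete, here is a minimal sketch. The abstract does not say which uncertainty measure AdaFuse uses, so the normalized-entropy score, the function names `normalized_entropy` and `should_ensemble`, and the default threshold of 0.5 are all illustrative assumptions rather than the authors' method.

```python
import math


def normalized_entropy(probs):
    """Shannon entropy of a next-token distribution, scaled to [0, 1].

    Hypothetical uncertainty score; not taken from the AdaFuse paper.
    """
    if len(probs) < 2:
        return 0.0
    entropy = -sum(p * math.log(p) for p in probs if p > 0.0)
    return entropy / math.log(len(probs))


def should_ensemble(probs, threshold=0.5):
    """Return True when the decoding state looks uncertain enough to ensemble.

    The threshold value is an assumption chosen only for illustration.
    """
    return normalized_entropy(probs) > threshold


# A confident state skips ensembling; a flat distribution triggers it.
confident = [0.97, 0.01, 0.01, 0.01]
uncertain = [0.25, 0.25, 0.25, 0.25]
print(should_ensemble(confident), should_ensemble(uncertain))
```

Under this sketch, confident steps continue plain decoding, and only uncertain steps pay the cost of exploring alternative continuations.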

Learnable Query Aggregation with KV Routing for Cross-view Geo-localisation

Dec 30, 2025

Abstract: Cross-view geo-localisation (CVGL) aims to estimate the geographic location of a query image by matching it with images from a large-scale database. However, significant viewpoint discrepancies present considerable challenges for effective feature aggregation and alignment. To address these challenges, we propose a novel CVGL system that incorporates three key improvements. Firstly, we leverage the DINOv2 backbone with convolution-adapter fine-tuning to enhance model adaptability to cross-view variations. Secondly, we propose a multi-scale channel reallocation module to strengthen the diversity and stability of spatial representations. Finally, we propose an improved aggregation module that integrates Mixture-of-Experts (MoE) routing into the feature aggregation process. Specifically, the module dynamically selects expert subspaces for the keys and values in a cross-attention framework, enabling adaptive processing of heterogeneous input domains. Extensive experiments on the University-1652 and SUES-200 datasets demonstrate that our method achieves competitive performance with fewer trained parameters.

AstraNav-World: World Model for Foresight Control and Consistency

Dec 25, 2025

Abstract: Embodied navigation in open, dynamic environments demands accurate foresight of how the world will evolve and how actions will unfold over time. We propose AstraNav-World, an end-to-end world model that jointly reasons about future visual states and action sequences within a unified probabilistic framework. Our framework integrates a diffusion-based video generator with a vision-language policy, enabling synchronized rollouts where predicted scenes and planned actions are updated simultaneously. Training optimizes two complementary objectives: generating action-conditioned multi-step visual predictions and deriving trajectories conditioned on those predicted visuals. This bidirectional constraint makes visual predictions executable and keeps decisions grounded in physically consistent, task-relevant futures, mitigating the cumulative errors common in decoupled "envision-then-plan" pipelines. Experiments across diverse embodied navigation benchmarks show improved trajectory accuracy and higher success rates. Ablations confirm the necessity of tight vision-action coupling and unified training: removing either branch degrades both prediction quality and policy reliability. In real-world testing, AstraNav-World demonstrated exceptional zero-shot capabilities, adapting to previously unseen scenarios without any real-world fine-tuning. These results suggest that AstraNav-World captures transferable spatial understanding and planning-relevant navigation dynamics, rather than merely overfitting to a simulation-specific data distribution. Overall, by unifying foresight vision and control within a single generative model, we move closer to reliable, interpretable, and general-purpose embodied agents that operate robustly in open-ended real-world settings.

Query-aware Hub Prototype Learning for Few-Shot 3D Point Cloud Semantic Segmentation

Dec 09, 2025

Abstract: Few-shot 3D point cloud semantic segmentation (FS-3DSeg) aims to segment novel classes with only a few labeled samples. However, existing metric-based prototype learning methods generate prototypes solely from the support set, without considering their relevance to query data. This often results in prototype bias, where prototypes overfit support-specific characteristics and fail to generalize to the query distribution, especially in the presence of distribution shifts, which leads to degraded segmentation performance. To address this issue, we propose a novel Query-aware Hub Prototype (QHP) learning method that explicitly models semantic correlations between support and query sets. Specifically, we propose a Hub Prototype Generation (HPG) module that constructs a bipartite graph connecting query and support points, identifies frequently linked support hubs, and generates query-relevant prototypes that better capture cross-set semantics. To further mitigate the influence of bad hubs and ambiguous prototypes near class boundaries, we introduce a Prototype Distribution Optimization (PDO) module, which employs a purity-reweighted contrastive loss to refine prototype representations by pulling bad hubs and outlier prototypes closer to their corresponding class centers. Extensive experiments on S3DIS and ScanNet demonstrate that QHP achieves substantial performance gains over state-of-the-art methods, effectively narrowing the semantic gap between prototypes and query sets in FS-3DSeg.

MiroThinker: Pushing the Performance Boundaries of Open-Source Research Agents via Model, Context, and Interactive Scaling

Nov 18, 2025

Abstract: We present MiroThinker v1.0, an open-source research agent designed to advance tool-augmented reasoning and information-seeking capabilities. Unlike previous agents that only scale up model size or context length, MiroThinker explores interaction scaling at the model level, systematically training the model to handle deeper and more frequent agent-environment interactions as a third dimension of performance improvement. Unlike LLM test-time scaling, which operates in isolation and risks degradation with longer reasoning chains, interactive scaling leverages environment feedback and external information acquisition to correct errors and refine trajectories. Through reinforcement learning, the model achieves efficient interaction scaling: with a 256K context window, it can perform up to 600 tool calls per task, enabling sustained multi-turn reasoning and complex real-world research workflows. Across four representative benchmarks (GAIA, HLE, BrowseComp, and BrowseComp-ZH), the 72B variant achieves up to 81.9%, 37.7%, 47.1%, and 55.6% accuracy respectively, surpassing previous open-source agents and approaching commercial counterparts such as GPT-5-high. Our analysis reveals that MiroThinker benefits from interactive scaling consistently: research performance improves predictably as the model engages in deeper and more frequent agent-environment interactions, demonstrating that interaction depth exhibits scaling behaviors analogous to model size and context length. These findings establish interaction scaling as a third critical dimension for building next-generation open research agents, complementing model capacity and context windows.

Achieve Performatively Optimal Policy for Performative Reinforcement Learning

Oct 06, 2025

Abstract: Performative reinforcement learning is an emerging dynamical decision-making framework that extends reinforcement learning to common applications where the agent's policy can change the environmental dynamics. Existing works on performative reinforcement learning only aim at a performatively stable (PS) policy that maximizes an approximate value function. However, there is a provably positive constant gap between the PS policy and the desired performatively optimal (PO) policy that maximizes the original value function. In contrast, this work proposes a zeroth-order Frank-Wolfe (0-FW) algorithm, which uses a zeroth-order approximation of the performative policy gradient in the Frank-Wolfe framework, and obtains the first polynomial-time convergence to the desired PO policy under the standard regularizer dominance condition. For the convergence analysis, we prove two important properties of the nonconvex value function. First, when the policy regularizer dominates the environmental shift, the value function satisfies a certain gradient dominance property, so that any stationary point (not PS) of the value function is a desired PO. Second, though the value function has unbounded gradient, we prove that all the sufficiently stationary points lie in a convex and compact policy subspace $\Pi_{\Delta}$, where the policy value has a constant lower bound $\Delta>0$ and thus the gradient becomes bounded and Lipschitz continuous. Experimental results also demonstrate that our 0-FW algorithm is more effective than the existing algorithms in finding the desired PO policy.
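For readers unfamiliar with the Frank-Wolfe framework mentioned above, the following is a minimal sketch of the generic method on the probability simplex. It is not the paper's 0-FW algorithm: it minimizes a toy quadratic, uses an exact gradient where 0-FW would use a zeroth-order estimate, and the names `frank_wolfe_simplex`, `quadratic_grad`, and `TARGET`, along with the step size $2/(t+2)$, are illustrative assumptions.

```python
def frank_wolfe_simplex(grad, x0, num_iters=2000):
    """Generic Frank-Wolfe on the probability simplex (illustrative only).

    grad: callable returning the gradient of the objective at x.
    x0:   starting point on the simplex (nonnegative entries summing to 1).
    """
    x = list(x0)
    for t in range(num_iters):
        g = grad(x)
        # Linear minimization oracle: over the simplex, the minimizer of a
        # linear function is the vertex with the smallest gradient entry.
        i_star = min(range(len(x)), key=lambda i: g[i])
        step = 2.0 / (t + 2.0)  # classical diminishing step size
        # Convex combination of x and the vertex, so x stays on the simplex
        # without any projection.
        x = [(1.0 - step) * xi for xi in x]
        x[i_star] += step
    return x


# Toy objective (an assumption): f(x) = ||x - TARGET||^2 with TARGET on the simplex.
TARGET = [0.2, 0.3, 0.5]


def quadratic_grad(x):
    return [2.0 * (xi - ci) for xi, ci in zip(x, TARGET)]


x_final = frank_wolfe_simplex(quadratic_grad, [1.0, 0.0, 0.0])
print([round(v, 3) for v in x_final])
```

The appeal of this scheme in constrained policy spaces is that each iterate remains feasible by construction, since it is always a convex combination of feasible points.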

Zeroth-Order Methods for Stochastic Nonconvex Nonsmooth Composite Optimization

Oct 06, 2025

Abstract: This work aims to solve a stochastic nonconvex nonsmooth composite optimization problem. Previous works on composite optimization problems require the major part to satisfy Lipschitz smoothness or some relaxed smoothness conditions, which excludes some machine learning examples such as regularized ReLU networks and sparse support matrix machines. In this work, we focus on stochastic nonconvex composite optimization problems without any smoothness assumptions. In particular, we propose two new notions of approximate stationary points for such optimization problems and obtain finite-time convergence results of two zeroth-order algorithms to these two approximate stationary points respectively. Finally, we demonstrate that these algorithms are effective using numerical experiments.
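As background on what "zeroth-order" means here, the sketch below shows a standard two-point gradient estimator that only queries function values. The abstract does not specify the estimators used in the paper, so this is a generic textbook construction; the names `random_unit_vector`, `zo_gradient`, `averaged_zo_gradient`, and `l1_norm`, and the smoothing radius `mu`, are assumptions made for illustration.

```python
import math
import random


def random_unit_vector(dim, rng):
    """Draw a direction uniformly from the unit sphere via normalized Gaussians."""
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        norm = math.sqrt(sum(c * c for c in v))
        if norm > 0.0:
            return [c / norm for c in v]


def zo_gradient(f, x, mu, rng):
    """Two-point zeroth-order gradient estimate using only function values."""
    dim = len(x)
    u = random_unit_vector(dim, rng)
    x_plus = [xi + mu * ui for xi, ui in zip(x, u)]
    x_minus = [xi - mu * ui for xi, ui in zip(x, u)]
    # Finite difference along u, rescaled by dim so the estimate is unbiased
    # for the gradient of the sphere-smoothed surrogate of f.
    scale = dim * (f(x_plus) - f(x_minus)) / (2.0 * mu)
    return [scale * ui for ui in u]


def averaged_zo_gradient(f, x, mu, num_samples, rng):
    """Average several independent estimates to reduce variance."""
    total = [0.0] * len(x)
    for _ in range(num_samples):
        g = zo_gradient(f, x, mu, rng)
        total = [t + gi for t, gi in zip(total, g)]
    return [t / num_samples for t in total]


def l1_norm(x):
    """A simple nonsmooth test function (an illustrative choice)."""
    return sum(abs(xi) for xi in x)


rng = random.Random(0)
estimate = averaged_zo_gradient(l1_norm, [1.0, -2.0, 0.5], 1e-3, 20000, rng)
print([round(g, 2) for g in estimate])
```

Away from the kinks of the $\ell_1$ norm, the averaged estimate should land near the sign vector of the query point, which is the ordinary gradient there.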

Trade-off in Estimating the Number of Byzantine Clients in Federated Learning

Oct 06, 2025

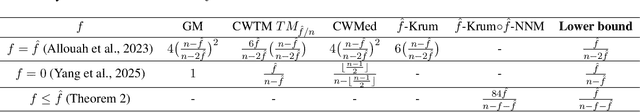

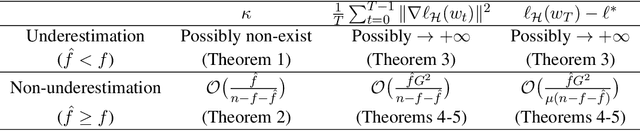

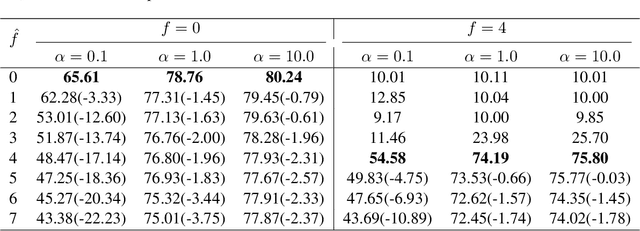

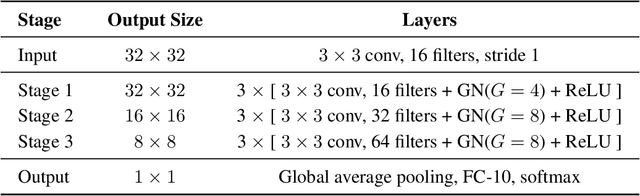

Abstract: Federated learning has attracted increasing attention in recent large-scale optimization and machine learning research and applications, but it is also vulnerable to Byzantine clients that can send arbitrary erroneous signals. Robust aggregators are commonly used to resist Byzantine clients. This usually requires estimating the unknown number $f$ of Byzantine clients and selecting aggregators with a suitable degree of robustness accordingly (i.e., the maximum number $\hat{f}$ of Byzantine clients allowed by the aggregator). Such an estimate should have an important effect on performance, which has not been systematically studied to our knowledge. This work fills the gap by theoretically analyzing the worst-case error of aggregators and of the induced federated learning algorithms for all cases of $\hat{f}$ and $f$. Specifically, we show that underestimation ($\hat{f}<f$) can lead to arbitrarily poor performance for both aggregators and federated learning. For non-underestimation ($\hat{f}\ge f$), we prove optimal lower and upper bounds of the same order on the errors of both aggregators and federated learning. All these optimal bounds are proportional to $\hat{f}/(n-f-\hat{f})$ with $n$ clients, which monotonically increases with larger $\hat{f}$. This indicates a fundamental trade-off: while an aggregator with a larger robustness degree $\hat{f}$ can solve federated learning problems over a wider range $f\in [0,\hat{f}]$, the performance can deteriorate when there are actually fewer or even no Byzantine clients (i.e., $f\in [0,\hat{f})$).
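The trade-off can be seen by evaluating the factor $\hat{f}/(n-f-\hat{f})$ directly. The sketch below does only that arithmetic: it omits the problem-dependent constants in the paper's bounds, and the function name `robustness_error_factor` and the example values $n=20$, $f=2$ are assumptions chosen for illustration.

```python
def robustness_error_factor(n, f, f_hat):
    """Evaluate f_hat / (n - f - f_hat), the factor the abstract's bounds scale with.

    Only the non-underestimation regime (f_hat >= f) is covered; constants
    from the actual bounds are deliberately left out.
    """
    if not 0 <= f <= f_hat:
        raise ValueError("requires 0 <= f <= f_hat (non-underestimation)")
    denominator = n - f - f_hat
    if denominator <= 0:
        raise ValueError("requires n - f - f_hat > 0")
    return f_hat / denominator


# With n = 20 clients and f = 2 actual Byzantine clients, a tight estimate
# gives a small factor, while an overly cautious one inflates it.
print(robustness_error_factor(20, 2, 2))  # 0.125
print(robustness_error_factor(20, 2, 6))  # 0.5
```

In this example, raising $\hat{f}$ from 2 to 6 quadruples the factor even though the true number of Byzantine clients is unchanged, which is the cost of over-provisioning robustness.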

Rectified Robust Policy Optimization for Model-Uncertain Constrained Reinforcement Learning without Strong Duality

Aug 24, 2025

Abstract: The goal of robust constrained reinforcement learning (RL) is to optimize an agent's performance under worst-case model uncertainty while satisfying safety or resource constraints. In this paper, we demonstrate that strong duality does not generally hold in robust constrained RL, indicating that traditional primal-dual methods may fail to find optimal feasible policies. To overcome this limitation, we propose a novel primal-only algorithm called Rectified Robust Policy Optimization (RRPO), which operates directly on the primal problem without relying on dual formulations. We provide theoretical convergence guarantees under mild regularity assumptions, showing convergence to an approximately optimal feasible policy with iteration complexity matching the best-known lower bound when the uncertainty set diameter is controlled at a specific level. Empirical results in a grid-world environment validate the effectiveness of our approach, demonstrating that RRPO achieves robust and safe performance under model uncertainties while the non-robust method can violate the worst-case safety constraints.
