Yiming Liu

Extreme Video Compression with Pre-trained Diffusion Models

Feb 14, 2024

Abstract:Diffusion models have achieved remarkable success in generating high quality image and video data. More recently, they have also been used for image compression with high perceptual quality. In this paper, we present a novel approach to extreme video compression leveraging the predictive power of diffusion-based generative models at the decoder. The conditional diffusion model takes several neural compressed frames and generates subsequent frames. When the reconstruction quality drops below the desired level, new frames are encoded to restart prediction. The entire video is sequentially encoded to achieve a visually pleasing reconstruction, considering perceptual quality metrics such as the learned perceptual image patch similarity (LPIPS) and the Frechet video distance (FVD), at bit rates as low as 0.02 bits per pixel (bpp). Experimental results demonstrate the effectiveness of the proposed scheme compared to standard codecs such as H.264 and H.265 in the low bpp regime. The results showcase the potential of exploiting the temporal relations in video data using generative models. Code is available at: https://github.com/ElesionKyrie/Extreme-Video-Compression-With-Prediction-Using-Pre-trainded-Diffusion-Models-
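
The predict-or-restart loop described in this abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `compress_with_restarts`, `predict_next`, `distortion`, `max_distortion`, and `context` are hypothetical names, the predictor is a trivial copy-last-frame stand-in for the conditional diffusion model, MSE stands in for a perceptual metric such as LPIPS, and transmitted frames are treated as lossless rather than neurally compressed.

```python
import numpy as np

def compress_with_restarts(frames, predict_next, distortion, max_distortion, context=2):
    """Return (sent_indices, reconstruction) for a predict-or-restart scheme."""
    n = len(frames)
    sent = list(range(min(context, n)))          # the first frames are always transmitted
    recon = [frames[i] for i in sent]
    t = len(recon)
    while t < n:
        guess = predict_next(recon[-context:])   # decoder-side prediction from recent frames
        if distortion(guess, frames[t]) <= max_distortion:
            recon.append(guess)                  # good enough: spend no bits on frame t
            t += 1
        else:
            stop = min(t + context, n)           # quality dropped: transmit fresh frames
            sent.extend(range(t, stop))
            recon.extend(frames[t:stop])
            t = stop
    return sent, recon

# Toy video: a scene change at frame 4 forces one restart.
frames = [np.full((4, 4), v, dtype=float) for v in (0, 0, 0, 0, 5, 5, 5, 5)]
copy_last = lambda ctx: ctx[-1].copy()           # hypothetical stand-in predictor
mse = lambda a, b: float(np.mean((a - b) ** 2))  # stand-in for a perceptual metric
sent, recon = compress_with_restarts(frames, copy_last, mse, max_distortion=0.1)
print(sent)  # [0, 1, 4, 5]
```

Only the frames listed in `sent` cost bits in this sketch; the rest are regenerated at the decoder, which is where the bit-rate saving would come from.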

Passive Beamforming For Practical RIS-Assisted Communication Systems With Non-Ideal Hardware

Jan 15, 2024

Abstract:Reconfigurable intelligent surface (RIS) technology is a promising solution to improve the performance of existing wireless communications. To achieve its cost-effectiveness advantage, there inevitably exist certain hardware impairments in the system. Therefore, it is more reasonable to design passive beamforming in this scenario. Some existing research has considered such problems under transceiver impairments. However, their performance still leaves room for improvement, possibly due to their algorithms not properly handling the fractional structure of the objective function. To address this, the passive beamforming is redesigned in this correspondence paper, taking into account both transceiver impairments and the practical phase-shift model. We tackle the fractional structure of the problem by employing the quadratic transform. The remaining sub-problems are addressed utilizing the penalty-based method and the difference-of-convex programming. Since we provide closed-form solutions for all sub-problems, our algorithm is highly efficient. The simulation results demonstrate the superiority of our proposed algorithm.
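
For readers unfamiliar with the quadratic transform mentioned in this abstract, its generic single-ratio form is sketched below. The symbols $A(x)$, $B(x)$, and the auxiliary variable $y$ are illustrative assumptions; the abstract does not state the paper's actual objective.

```latex
% Single-ratio problem, assuming A(x) \ge 0 and B(x) > 0:
\max_{x} \; \frac{A(x)}{B(x)}
\quad \Longleftrightarrow \quad
\max_{x,\, y} \; 2y\sqrt{A(x)} - y^{2} B(x)

% For fixed x the inner problem is a concave quadratic in y, maximized at
y^{\star} = \frac{\sqrt{A(x)}}{B(x)},
\qquad
2y^{\star}\sqrt{A(x)} - (y^{\star})^{2} B(x) = \frac{A(x)}{B(x)}
```

Alternating between the closed-form update of $y$ and an update of $x$ removes the ratio from the objective, which is the kind of decoupling that makes closed-form sub-problem solutions possible.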

MARIS: Referring Image Segmentation via Mutual-Aware Attention Features

Nov 27, 2023

Abstract:Referring image segmentation (RIS) aims to segment a particular region based on a language expression prompt. Existing methods incorporate linguistic features into visual features and obtain multi-modal features for mask decoding. However, these methods may segment the visually salient entity instead of the correct referring region, as the multi-modal features are dominated by the abundant visual context. In this paper, we propose MARIS, a referring image segmentation method that leverages the Segment Anything Model (SAM) and introduces a mutual-aware attention mechanism to enhance the cross-modal fusion via two parallel branches. Specifically, our mutual-aware attention mechanism consists of Vision-Guided Attention and Language-Guided Attention, which bidirectionally model the relationship between visual and linguistic features. Correspondingly, we design a Mask Decoder to enable explicit linguistic guidance for more consistent segmentation with the language expression. To this end, a multi-modal query token is proposed to integrate linguistic information and interact with visual information simultaneously. Extensive experiments on three benchmark datasets show that our method outperforms the state-of-the-art RIS methods. Our code will be publicly available.

Image Reconstruction for Accelerated MR Scan with Faster Fourier Convolutional Neural Networks

Jun 05, 2023

Abstract:Partial scan is a common approach to accelerate Magnetic Resonance Imaging (MRI) data acquisition in both 2D and 3D settings. However, accurately reconstructing images from partial scan data (i.e., incomplete k-space matrices) remains challenging due to the lack of an effectively global receptive field in both the spatial and k-space domains. To address this problem, we propose the following: (1) A novel convolutional operator called Faster Fourier Convolution (FasterFC) to replace the two consecutive convolution operations typically used in convolutional neural networks (e.g., U-Net, ResNet). Based on the spectral convolution theorem in Fourier theory, FasterFC employs alternating kernels of size 1 (1×1×1 in the 3D case) in different domains to extend the dual-domain receptive field to the global scale, and it achieves faster calculation speed than the traditional Fast Fourier Convolution (FFC). (2) A 2D accelerated MRI method, FasterFC-End-to-End-VarNet, which uses FasterFC to improve the sensitivity maps and reconstruction quality. (3) A multi-stage 3D accelerated MRI method called FasterFC-based Single-to-group Network (FAS-Net) that utilizes a single-to-group algorithm to guide k-space domain reconstruction, followed by FasterFC-based cascaded convolutional neural networks to expand the effective receptive field in the dual domain. Experimental results on the fastMRI and Stanford MRI Data datasets demonstrate that FasterFC improves the quality of both 2D and 3D reconstruction. Moreover, FAS-Net, as a 3D high-resolution multi-coil (eight) accelerated MRI method, achieves superior reconstruction performance in both qualitative and quantitative results compared with state-of-the-art 2D and 3D methods.
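
The spectral convolution theorem that this abstract relies on can be checked numerically. The sketch below is not FasterFC itself; it only illustrates, with hypothetical function names, why a pointwise (size-1) multiplication in the Fourier domain acts as a global circular convolution in the image domain.

```python
import numpy as np

def circular_conv2d(x, k):
    """Direct 2D circular convolution; every output pixel sees every input pixel."""
    h, w = x.shape
    out = np.zeros((h, w), dtype=float)
    for i in range(h):
        for j in range(w):
            for u in range(h):
                for v in range(w):
                    out[i, j] += k[u, v] * x[(i - u) % h, (j - v) % w]
    return out

def spectral_pointwise(x, k):
    """Same operation computed as an element-wise product of 2D spectra."""
    return np.real(np.fft.ifft2(np.fft.fft2(x) * np.fft.fft2(k)))

rng = np.random.default_rng(0)
x = rng.random((6, 6))   # toy image
k = rng.random((6, 6))   # kernel as large as the image, i.e. a global receptive field
print(np.allclose(circular_conv2d(x, k), spectral_pointwise(x, k)))  # True
```

The direct version costs four nested loops per image, while the spectral version needs only FFTs and one element-wise product, which is the usual motivation for operating in the frequency domain.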

Flexible Alignment Super-Resolution Network for Multi-Contrast MRI

Oct 07, 2022

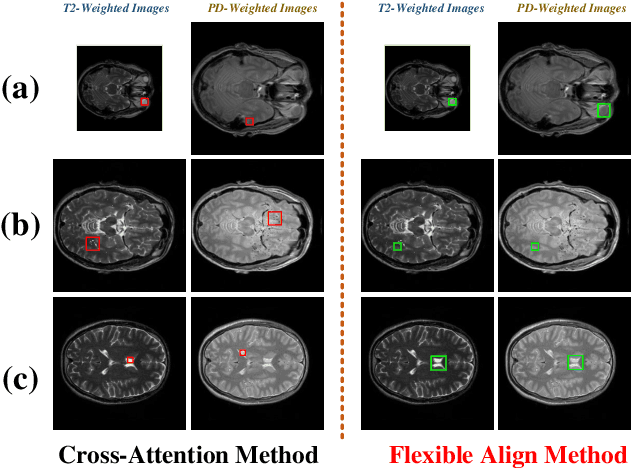

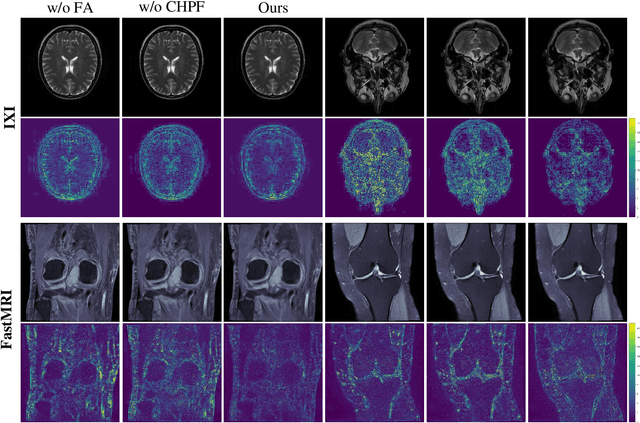

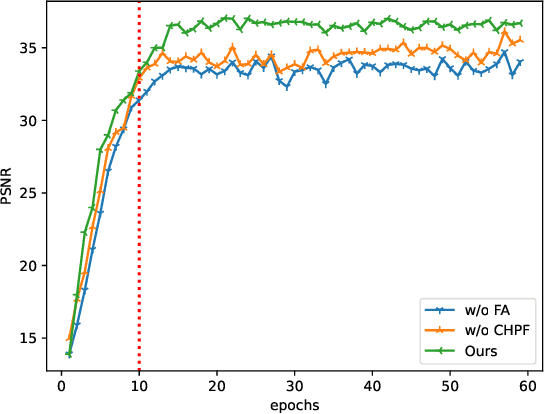

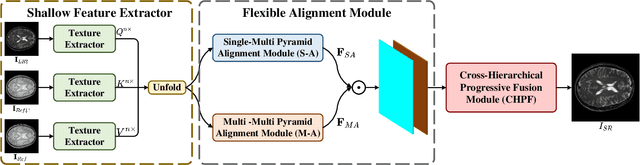

Abstract:Magnetic resonance images play an essential role in clinical diagnosis by capturing the structural information of biological tissue. However, during the acquisition of magnetic resonance images, patients have to endure physical and psychological discomfort, including irritating noise and acute anxiety. To make patients more comfortable, the time they spend in the strong magnetic field can be reduced, but at the expense of image quality. Therefore, super-resolution plays a crucial role in preprocessing the low-resolution images for more precise medical analysis. In this paper, we propose the Flexible Alignment Super-Resolution Network (FASR-Net) for multi-contrast magnetic resonance image super-resolution. The core of multi-contrast SR is to match the patches of low-resolution and reference images. However, an inappropriate foreground scale and patch size in multi-contrast MRI sometimes lead to mismatched patches. To tackle this problem, the Flexible Alignment module is proposed to endow receptive fields with flexibility. The Flexible Alignment module contains two parts: (1) The Single-Multi Pyramid Alignment module handles low-resolution and reference images with different scales. (2) The Multi-Multi Pyramid Alignment module handles low-resolution and reference images with the same scale. Extensive experiments on the IXI and fastMRI datasets demonstrate that FASR-Net outperforms the existing state-of-the-art approaches. In addition, a comparison of the reconstructed images with those obtained by existing algorithms shows that our method retains more textural details by leveraging multi-contrast images.

Robust PCA Unrolling Network for Super-resolution Vessel Extraction in X-ray Coronary Angiography

Apr 24, 2022

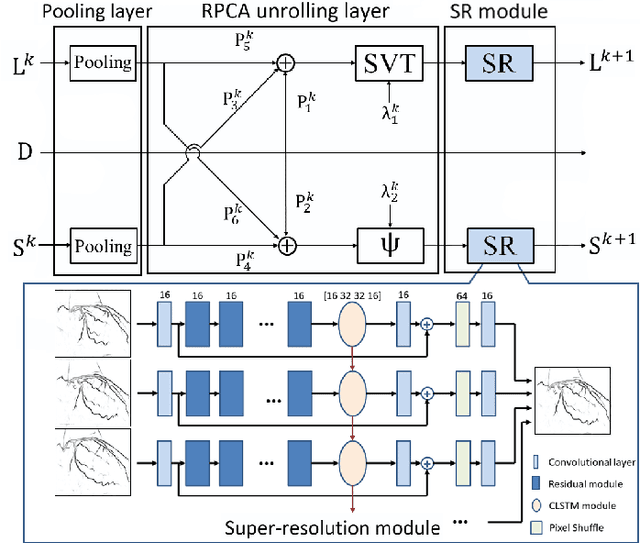

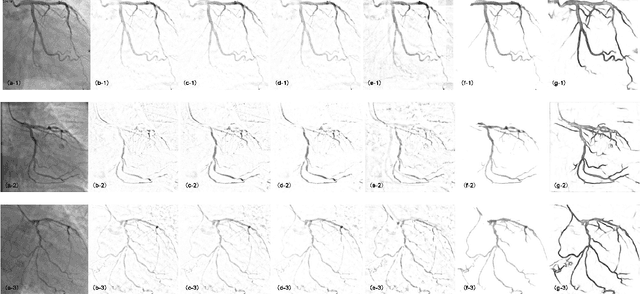

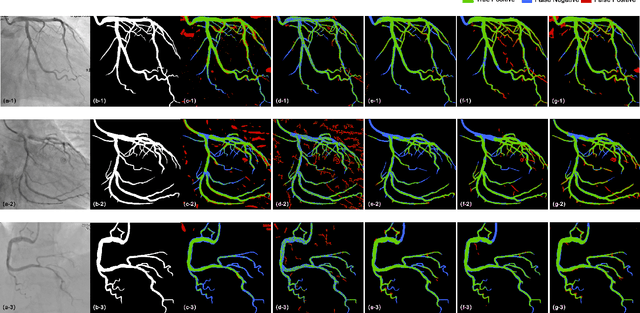

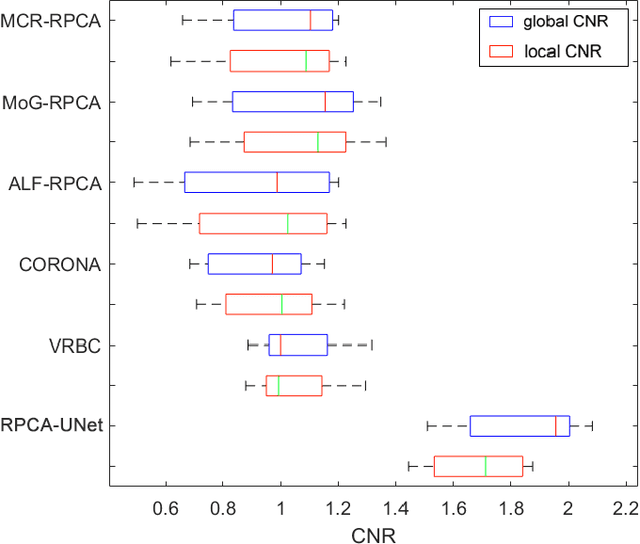

Abstract:Although robust PCA has been increasingly adopted to extract vessels from X-ray coronary angiography (XCA) images, challenging problems such as inefficient vessel-sparsity modelling, noisy and dynamic background artefacts, and high computational cost still remain unsolved. Therefore, we propose a novel robust PCA unrolling network with sparse feature selection for super-resolution XCA vessel imaging. Being embedded within a patch-wise spatiotemporal super-resolution framework that is built upon a pooling layer and a convolutional long short-term memory network, the proposed network can not only gradually prune complex vessel-like artefacts and noisy backgrounds in XCA during network training but also iteratively learn and select the high-level spatiotemporal semantic information of moving contrast agents flowing in the XCA-imaged vessels. The experimental results show that the proposed method significantly outperforms state-of-the-art methods, especially in the imaging of the vessel network and its distal vessels, by restoring the intensity and geometry profiles of heterogeneous vessels against complex and dynamic backgrounds.
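
As background for this abstract, the classical robust PCA decomposition that unrolling networks typically build on can be written as below. This is the textbook convex formulation, stated here as an assumption about the starting point; the abstract does not give the network's exact objective. $D$ denotes the stacked image sequence, $L$ the low-rank background, $S$ the sparse foreground (here, the contrast-filled vessels), and $\lambda$ a trade-off weight.

```latex
\min_{L,\, S} \; \|L\|_{*} + \lambda \|S\|_{1}
\quad \text{subject to} \quad D = L + S

% The sparse update in iterative solvers is element-wise soft-thresholding,
% the step an unrolled network would replace with learned layers:
\mathcal{S}_{\tau}(x) = \operatorname{sign}(x)\, \max\bigl(|x| - \tau,\, 0\bigr)
```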

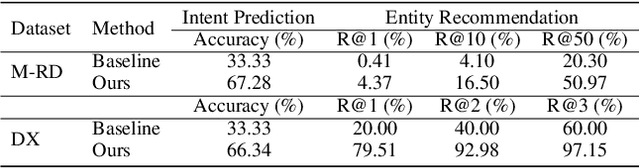

FORCE: A Framework of Rule-Based Conversational Recommender System

Mar 18, 2022

Abstract:Conversational recommender systems (CRSs) have received extensive attention in recent years. However, most existing works focus on various deep learning models, which are largely limited by the requirement of large-scale human-annotated datasets. Such methods cannot handle the cold-start scenarios found in industrial products. To alleviate this problem, we propose FORCE, a Framework Of Rule-based Conversational Recommender system that helps developers quickly build CRS bots through simple configuration. We conduct experiments on two datasets in different languages and domains to verify its effectiveness and usability.

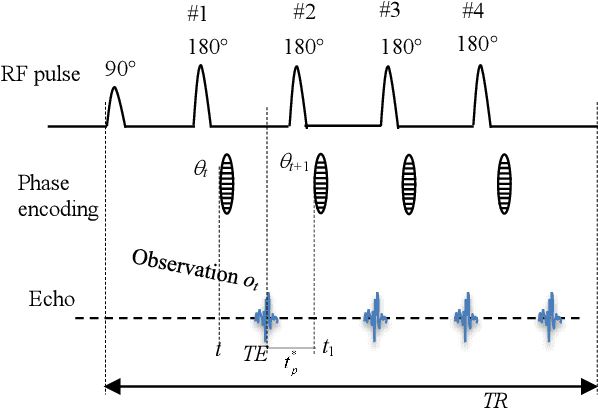

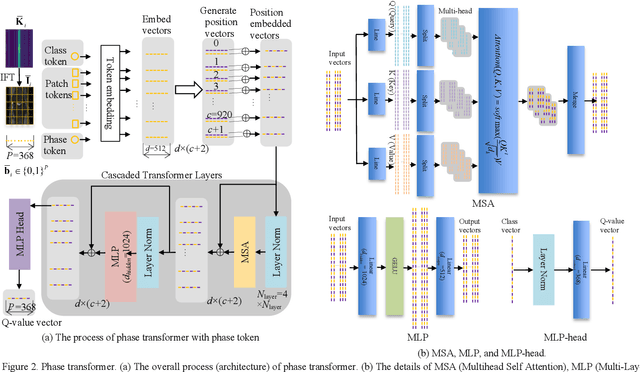

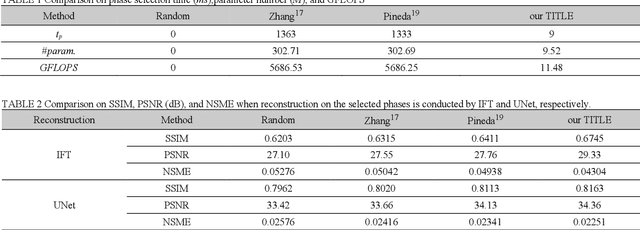

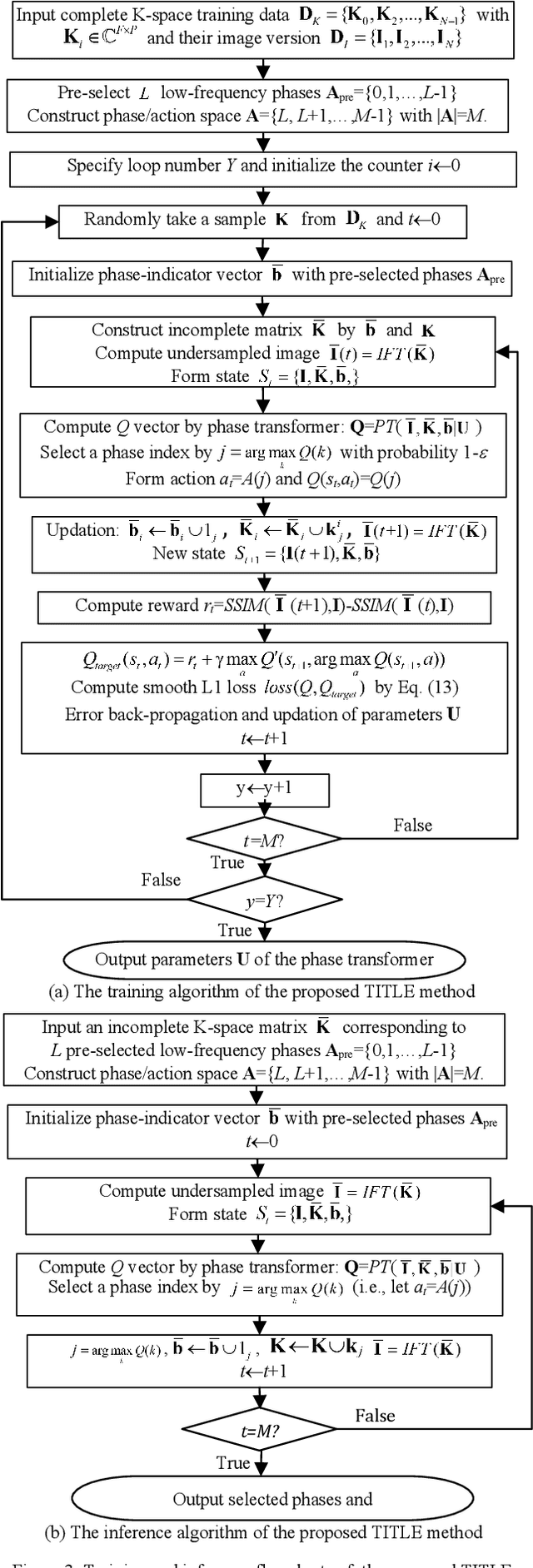

Active Phase-Encode Selection for Slice-Specific Fast MR Scanning Using a Transformer-Based Deep Reinforcement Learning Framework

Mar 11, 2022

Abstract:Purpose: Long scan time in phase encoding for forming complete K-space matrices is a critical drawback of MRI, making patients uncomfortable and wasting important time for diagnosing emergent diseases. This paper aims to reduce the scan time by actively and sequentially selecting partial phases in a short time so that a slice can be accurately reconstructed from the resultant slice-specific incomplete K-space matrix. Methods: A transformer-based deep reinforcement learning framework is proposed for actively determining a sequence of partial phases according to a reconstruction-quality-based Q-value (a function of reward), where the reward is the degree of improvement in reconstructed image quality. The Q-value is efficiently predicted from binary phase-indicator vectors, incomplete K-space matrices and their corresponding undersampled images with a light-weight transformer so that the sequential information of phases and the global relationships in images can be used. The inverse Fourier transform is employed for efficiently computing the undersampled images and hence obtaining the rewards of selecting phases. Results: Experimental results on the fastMRI dataset, for which the original K-space data are accessible, demonstrate the superior efficiency and accuracy of the proposed method. Compared with the state-of-the-art reinforcement learning based method proposed by Pineda et al., the proposed method is roughly 150 times faster and achieves a significant improvement in reconstruction accuracy. Conclusions: We have proposed a light-weight transformer-based deep reinforcement learning framework for generating a high-quality slice-specific trajectory consisting of a small number of phases. The proposed method, called TITLE (Transformer Involved Trajectory LEarning), shows remarkable superiority in phase-encode selection efficiency and image reconstruction accuracy.
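
The reward computation described in this abstract (undersampled image via the inverse Fourier transform, reward as the quality improvement from one more phase) can be sketched as below. Everything here is an illustrative assumption rather than the TITLE implementation: the function names are hypothetical, phase-encode lines are taken to be rows of an unshifted `np.fft.fft2` K-space, and quality is measured by mean squared error instead of the paper's metric.

```python
import numpy as np

def undersampled_image(kspace, phase_mask):
    """Zero out unacquired phase-encode rows, then apply the inverse 2D FFT."""
    return np.fft.ifft2(kspace * phase_mask[:, None])

def reconstruction_error(reference, kspace, phase_mask):
    """Mean squared error between the zero-filled reconstruction and the reference."""
    diff = undersampled_image(kspace, phase_mask) - reference
    return float(np.mean(np.abs(diff) ** 2))

def phase_reward(reference, kspace, phase_mask, new_phase):
    """Reward = reduction in reconstruction error after acquiring one more phase."""
    updated = phase_mask.copy()
    updated[new_phase] = 1.0
    before = reconstruction_error(reference, kspace, phase_mask)
    after = reconstruction_error(reference, kspace, updated)
    return before - after

rng = np.random.default_rng(0)
image = rng.random((8, 8))          # toy slice
kspace = np.fft.fft2(image)         # fully sampled K-space
mask = np.zeros(8)
mask[0] = 1.0                       # binary phase-indicator vector: only row 0 acquired
rewards = [phase_reward(image, kspace, mask, p) for p in range(1, 8)]
best_phase = 1 + int(np.argmax(rewards))  # greedy choice; the paper learns Q-values instead
```

A greedy pick like `best_phase` needs the fully sampled reference, which is only available during training; at scan time a learned Q-value predictor has to stand in for it.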

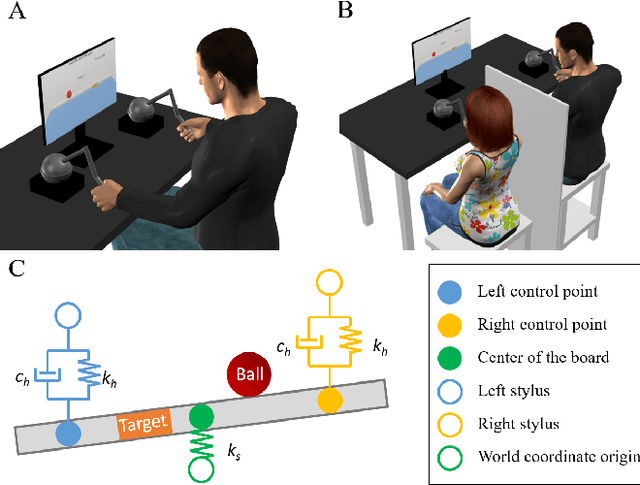

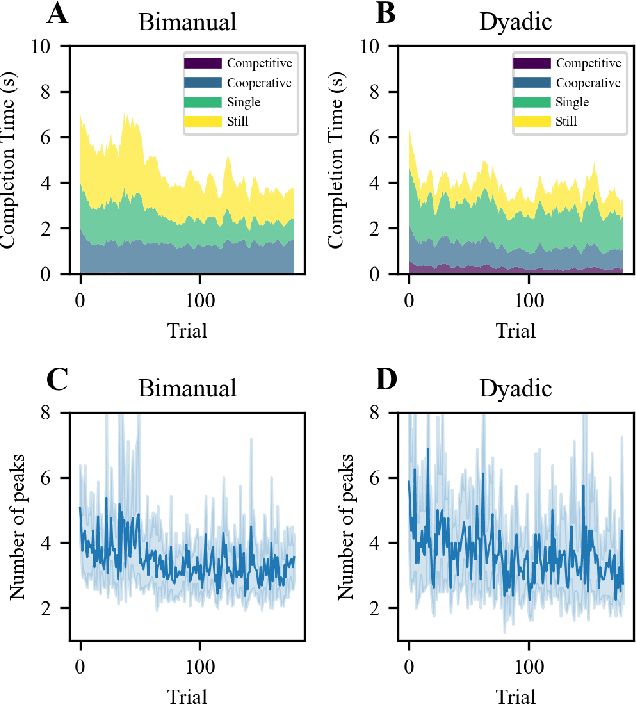

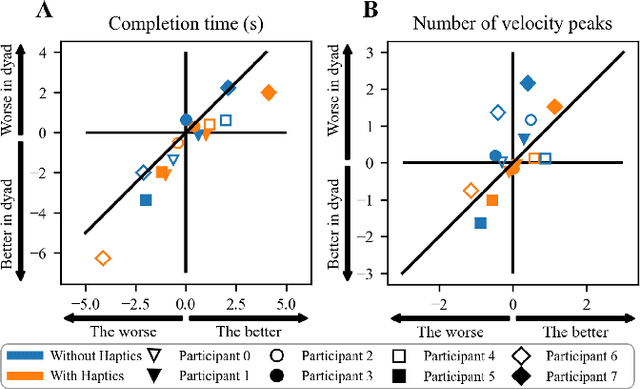

The role of haptic communication in dyadic collaborative object manipulation tasks

Mar 02, 2022

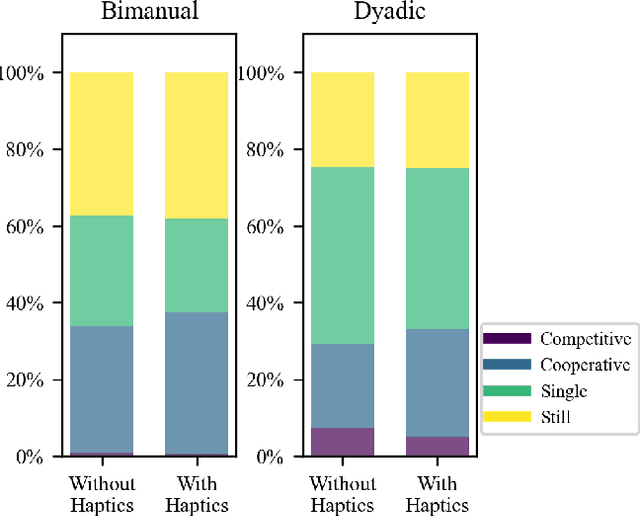

Abstract:Intuitive and efficient physical human-robot collaboration relies on the mutual observability of the human and the robot, i.e., the two entities being able to interpret each other's intentions and actions. This is typically addressed by a myriad of methods involving human sensing or intention decoding, as well as human-robot turn-taking and sequential task planning. However, the physical interaction establishes a rich channel of communication through forces, torques and haptics in general, which is often overlooked in industrial implementations of human-robot interaction. In this work, we investigate the role of haptics in human collaborative physical tasks, to identify how to integrate physical communication in human-robot teams. We present a task to balance a ball at a target position on a board either bimanually by one participant, or dyadically by two participants, with and without haptic information. The task requires that the two sides coordinate with each other, in real time, to balance the ball at the target. We found that with training the completion time and the number of velocity peaks of the ball decreased, and that participants gradually became consistent in their braking strategy. Moreover, we found that the presence of haptic information improved the performance (decreased completion time) and led to an increase in overall cooperative movements. Overall, our results show that humans can better coordinate with one another when haptic feedback is available. These results also highlight the likely importance of haptic communication in human-robot physical interaction, both as a tool to infer human intentions and to make the robot behaviour interpretable to humans.

Deep Residual Fourier Transformation for Single Image Deblurring

Nov 23, 2021

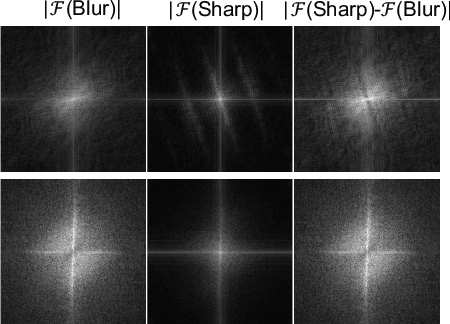

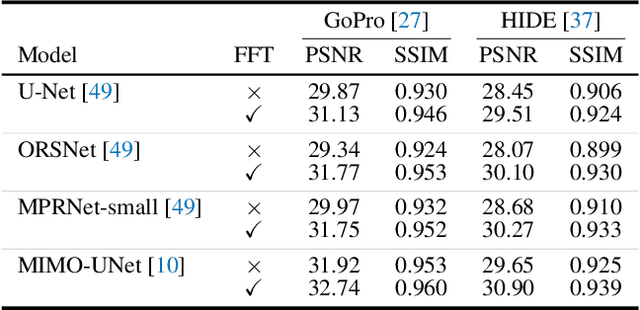

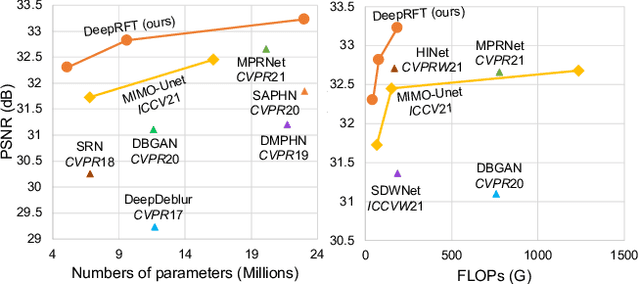

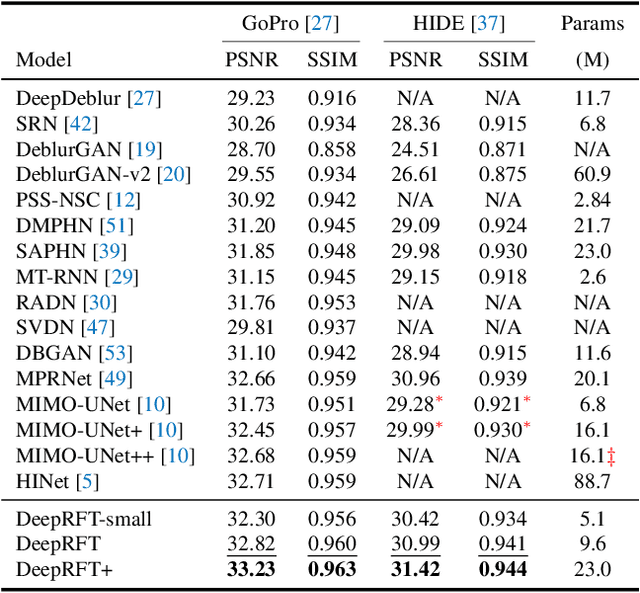

Abstract:It has been a common practice to adopt the ResBlock, which learns the difference between blurry and sharp image pairs, in end-to-end image deblurring architectures. Reconstructing a sharp image from its blurry counterpart requires changes regarding both low- and high-frequency information. Although the conventional ResBlock may be good at capturing the high-frequency components of images, it tends to overlook the low-frequency information. Moreover, ResBlock usually fails to adequately model the long-distance information, which is non-trivial in reconstructing a sharp image from its blurry counterpart. In this paper, we present a Residual Fast Fourier Transform with Convolution Block (Res FFT-Conv Block), capable of capturing both long-term and short-term interactions, while integrating both low- and high-frequency residual information. The Res FFT-Conv Block is a conceptually simple, computationally efficient, plug-and-play block, leading to remarkable performance gains in different architectures. With the Res FFT-Conv Block, we further propose a Deep Residual Fourier Transformation (DeepRFT) framework, based upon MIMO-UNet, achieving state-of-the-art image deblurring performance on the GoPro, HIDE, RealBlur and DPDD datasets. Experiments show that our DeepRFT can boost image deblurring performance significantly (e.g., with a 1.09 dB improvement in PSNR on the GoPro dataset compared with MIMO-UNet), and DeepRFT+ even reaches 33.23 dB in PSNR on the GoPro dataset.
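
To put the reported PSNR gain in perspective, the standard definition of PSNR can be used to convert a dB difference into an error ratio. This is a back-of-the-envelope reading, assuming the same peak value $\mathrm{MAX}_I$ for both methods and treating the gain as if it applied to a single aggregate MSE; benchmark PSNR is usually averaged per image, so the ratio is only indicative.

```latex
\mathrm{PSNR} = 10 \log_{10} \frac{\mathrm{MAX}_I^{2}}{\mathrm{MSE}}

\Delta\mathrm{PSNR} = 10 \log_{10} \frac{\mathrm{MSE}_{\text{base}}}{\mathrm{MSE}_{\text{new}}}
\;\Longrightarrow\;
\frac{\mathrm{MSE}_{\text{new}}}{\mathrm{MSE}_{\text{base}}}
= 10^{-\Delta\mathrm{PSNR}/10}
= 10^{-0.109} \approx 0.778
```

Under these assumptions, a 1.09 dB gain corresponds to roughly 22% lower mean squared error.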
