Chasing Consistency in Text-to-3D Generation from a Single Image

Sep 07, 2023 · Yichen Ouyang, Wenhao Chai, Jiayi Ye, Dapeng Tao, Yibing Zhan, Gaoang Wang

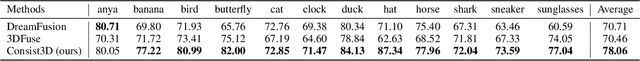

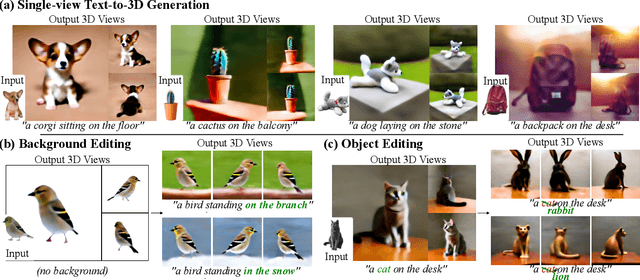

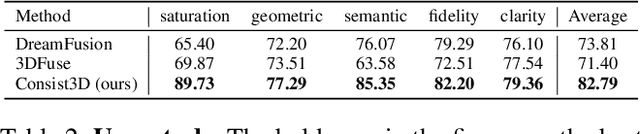

Text-to-3D generation from a single-view image is a popular but challenging task in 3D vision. Although numerous methods have been proposed, existing works still suffer from inconsistency issues, including 1) semantic inconsistency, 2) geometric inconsistency, and 3) saturation inconsistency, resulting in distorted, overfitted, and over-saturated generations. In light of these issues, we present Consist3D, a three-stage framework Chasing for semantic-, geometric-, and saturation-Consistent Text-to-3D generation from a single image, in which the first two stages aim to learn parameterized consistency tokens, and the last stage is for optimization. Specifically, the semantic encoding stage learns a token independent of views and estimations, promoting semantic consistency and robustness. Meanwhile, the geometric encoding stage learns another token with comprehensive geometry and reconstruction constraints under novel-view estimations, reducing overfitting and encouraging geometric consistency. Finally, the optimization stage benefits from the semantic and geometric tokens, allowing a low classifier-free guidance scale and therefore preventing over-saturation. Experimental results demonstrate that Consist3D produces more consistent, faithful, and photo-realistic 3D assets compared to previous state-of-the-art methods. Furthermore, Consist3D also allows background and object editing through text prompts.
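For readers unfamiliar with the guidance scale mentioned above, the following is a minimal sketch of the commonly used classifier-free guidance combination, in which the conditional noise prediction is extrapolated away from the unconditional one. It is not taken from the Consist3D code; the function name and the plain-list representation of the predictions are assumptions made for illustration.

```python
from typing import List


def classifier_free_guidance(
    eps_uncond: List[float], eps_cond: List[float], scale: float
) -> List[float]:
    """Combine noise predictions as eps_uncond + scale * (eps_cond - eps_uncond).

    scale = 0 returns the unconditional prediction, scale = 1 returns the
    conditional one, and larger scales push further toward the condition.
    """
    if len(eps_uncond) != len(eps_cond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Hypothetical toy predictions (illustrative values only).
uncond = [0.0, 1.0]
cond = [1.0, 3.0]
low = classifier_free_guidance(uncond, cond, 1.0)
high = classifier_free_guidance(uncond, cond, 7.5)
```

Large scales amplify the gap between the two predictions, which is one reason high guidance is often associated with over-saturated outputs; the abstract's claim is that the learned tokens make a low scale sufficient.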

Free-Form Composition Networks for Egocentric Action Recognition

Jul 13, 2023 · Haoran Wang, Qinghua Cheng, Baosheng Yu, Yibing Zhan, Dapeng Tao, Liang Ding, Haibin Ling

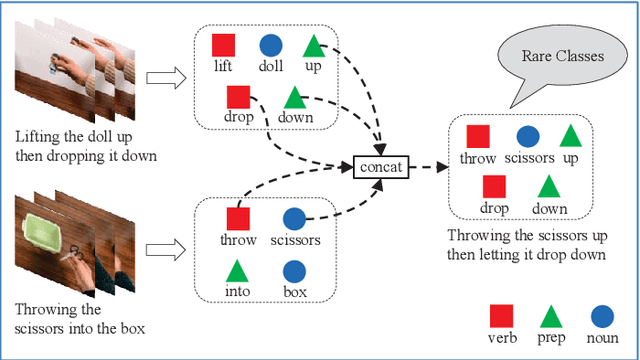

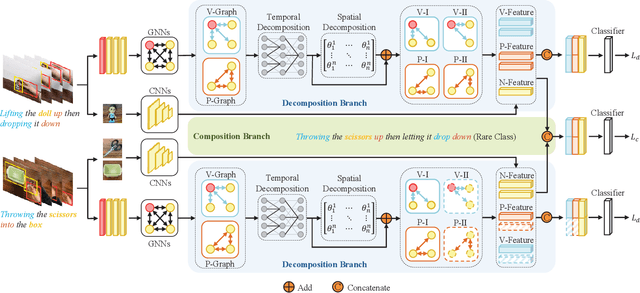

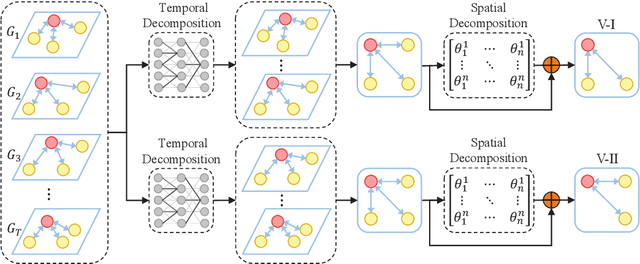

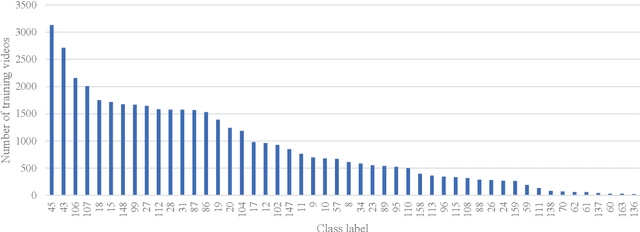

Egocentric action recognition is gaining significant attention in the field of human action recognition. In this paper, we address the data scarcity issue in egocentric action recognition from a compositional generalization perspective. To tackle this problem, we propose a free-form composition network (FFCN) that can simultaneously learn disentangled verb, preposition, and noun representations, and then use them to compose new samples in the feature space for rare classes of action videos. First, we use a graph to capture the spatial-temporal relations among different hand/object instances in each action video. We thus decompose each action into a set of verb and preposition spatial-temporal representations using the edge features in the graph. The temporal decomposition extracts verb and preposition representations from different video frames, while the spatial decomposition adaptively learns verb and preposition representations from action-related instances in each frame. With these spatial-temporal representations of verbs and prepositions, we can compose new samples for those rare classes in a free-form manner, which is not restricted to a rigid form of a verb and a noun. The proposed FFCN can directly generate new training data samples for rare classes and hence significantly improves action recognition performance. We evaluated our method on three popular egocentric action recognition datasets, Something-Something V2, H2O, and EPIC-KITCHENS-100, and the experimental results demonstrate the effectiveness of the proposed method for handling data scarcity problems, including long-tailed and few-shot egocentric action recognition.

On Exploring Node-feature and Graph-structure Diversities for Node Drop Graph Pooling

Jun 22, 2023 · Chuang Liu, Yibing Zhan, Baosheng Yu, Liu Liu, Bo Du, Wenbin Hu, Tongliang Liu

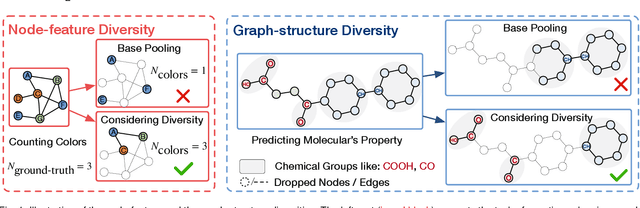

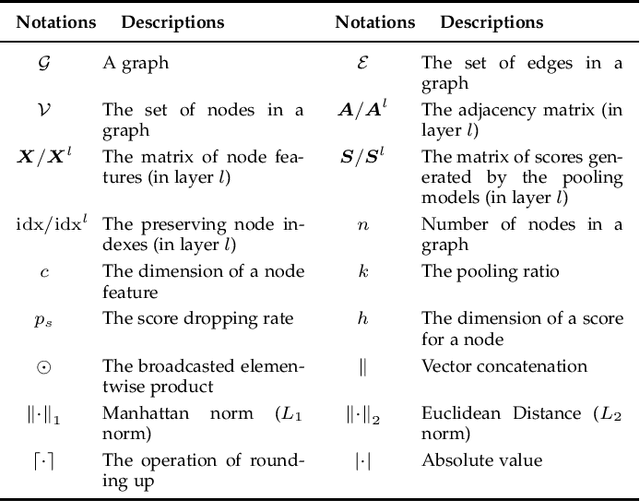

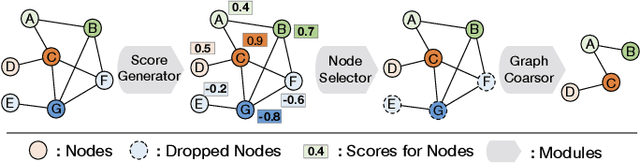

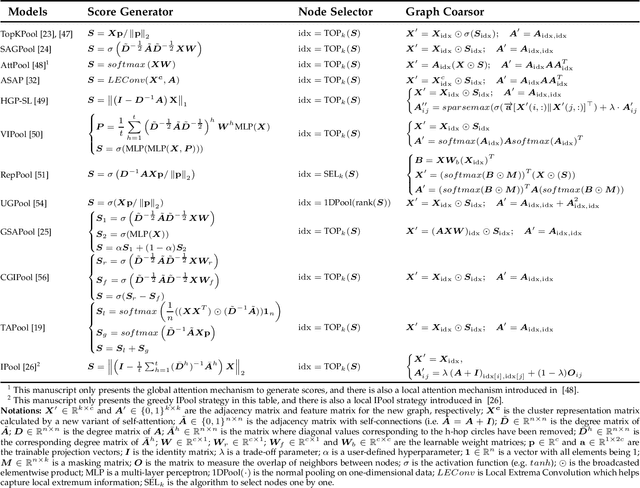

A pooling operation is essential for effective graph-level representation learning, and node drop pooling has become a mainstream graph pooling technique. However, current node drop pooling methods usually keep the top-k nodes according to their significance scores, which ignores graph diversity in terms of node features and graph structures and thus results in suboptimal graph-level representations. To address this issue, we propose a novel plug-and-play score scheme and refer to it as MID, which consists of a Multidimensional score space with two operations, i.e., flIpscore and Dropscore. Specifically, the multidimensional score space depicts the significance of nodes through multiple criteria; the flipscore encourages the maintenance of dissimilar node features; and the dropscore forces the model to notice diverse graph structures instead of being stuck in significant local structures. To evaluate the effectiveness of our proposed MID, we perform extensive experiments by applying it to a wide variety of recent node drop pooling methods, including TopKPool, SAGPool, GSAPool, and ASAP. Specifically, the proposed MID can efficiently and consistently achieve about 2.8% average improvements over the above four methods on seventeen real-world graph classification datasets, including four social datasets (IMDB-BINARY, IMDB-MULTI, REDDIT-BINARY, and COLLAB), and thirteen biochemical datasets (D&D, PROTEINS, NCI1, MUTAG, PTC-MR, NCI109, ENZYMES, MUTAGENICITY, FRANKENSTEIN, HIV, BBBP, TOXCAST, and TOX21). Code is available at https://github.com/whuchuang/mid.
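The baseline that the abstract criticizes, keeping the top-k nodes by a single significance score, can be sketched as follows. This is a simplified illustration of generic node drop pooling, not the MID scheme and not code from the linked repository; the function name, the `ratio` parameter, and the edge-list graph representation are assumptions.

```python
import math
from typing import List, Tuple


def topk_node_drop(
    scores: List[float], edges: List[Tuple[int, int]], ratio: float
) -> Tuple[List[int], List[Tuple[int, int]]]:
    """Keep the ceil(ratio * n) highest-scoring nodes and the edges among them.

    Returns the kept node indices (in ascending order) and the surviving
    edges, re-indexed to positions in the kept list.
    """
    n = len(scores)
    k = max(1, math.ceil(ratio * n))
    # Rank nodes by score, highest first, and keep the first k.
    ranked = sorted(range(n), key=lambda i: scores[i], reverse=True)
    kept = sorted(ranked[:k])
    remap = {old: new for new, old in enumerate(kept)}
    # An edge survives only if both of its endpoints were kept.
    new_edges = [(remap[u], remap[v]) for u, v in edges if u in remap and v in remap]
    return kept, new_edges


# Hypothetical 4-node graph (illustrative values only).
kept_nodes, kept_edges = topk_node_drop(
    scores=[0.9, 0.1, 0.8, 0.3],
    edges=[(0, 1), (0, 2), (2, 3)],
    ratio=0.5,
)
```

Because selection depends on one scalar per node, nodes with similar features or from the same dense neighborhood can crowd out the rest, which is the diversity problem the abstract describes.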

Divide, Conquer, and Combine: Mixture of Semantic-Independent Experts for Zero-Shot Dialogue State Tracking

Jun 01, 2023 · Qingyue Wang, Liang Ding, Yanan Cao, Yibing Zhan, Zheng Lin, Shi Wang, Dacheng Tao, Li Guo

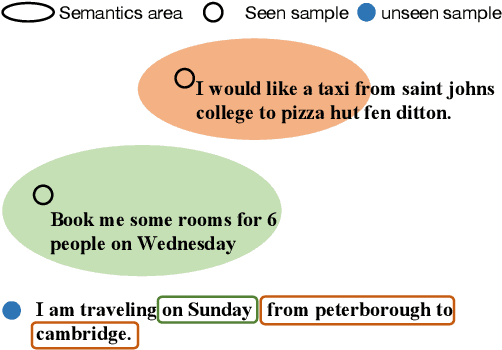

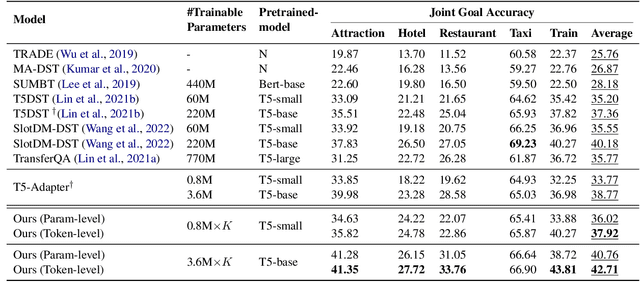

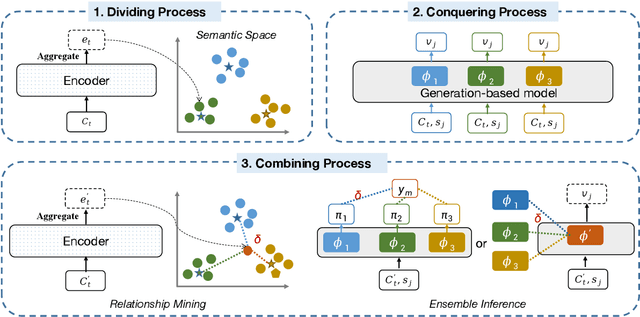

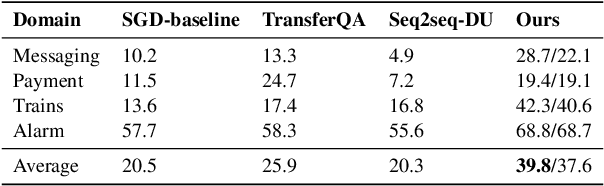

Zero-shot transfer learning for Dialogue State Tracking (DST) helps to handle a variety of task-oriented dialogue domains without the cost of collecting in-domain data. Existing works mainly study common data- or model-level augmentation methods to enhance generalization but fail to effectively decouple the semantics of samples, limiting the zero-shot performance of DST. In this paper, we present a simple and effective "divide, conquer and combine" solution, which explicitly disentangles the semantics of seen data and improves performance and robustness with the mixture-of-experts mechanism. Specifically, we divide the seen data into semantically independent subsets and train corresponding experts; newly unseen samples are then mapped and inferred with the mixture of experts using our designed ensemble inference. Extensive experiments on MultiWOZ2.1 upon the T5-Adapter show our schema significantly and consistently improves the zero-shot performance, achieving the SOTA on settings without external knowledge, with only 10M trainable parameters.
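One plausible reading of "mapped and inferred with mixture-of-experts" is a similarity-weighted average of expert predictions. The sketch below illustrates that general idea only; the softmax weighting, the `temperature` parameter, and all names are assumptions, and the paper's actual ensemble inference may differ.

```python
import math
from typing import List


def softmax(values: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(values)
    exps = [math.exp((v - peak) / temperature) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def ensemble_predict(
    expert_probs: List[List[float]],
    similarities: List[float],
    temperature: float = 1.0,
) -> List[float]:
    """Average per-expert class distributions, weighted by how similar the
    unseen sample is to each expert's training subset (assumed given)."""
    weights = softmax(similarities, temperature)
    n_classes = len(expert_probs[0])
    return [
        sum(w * probs[c] for w, probs in zip(weights, expert_probs))
        for c in range(n_classes)
    ]


# Two hypothetical experts that disagree; equal similarity gives equal weight.
combined = ensemble_predict([[1.0, 0.0], [0.0, 1.0]], similarities=[0.0, 0.0])
```

A lower temperature makes the combination behave more like routing to the single closest expert, while a higher one approaches a uniform average.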

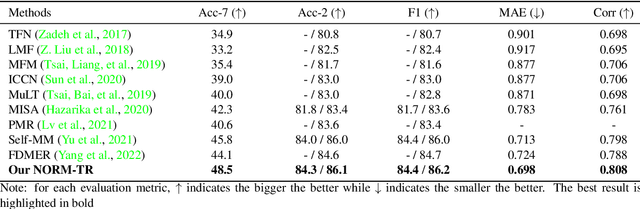

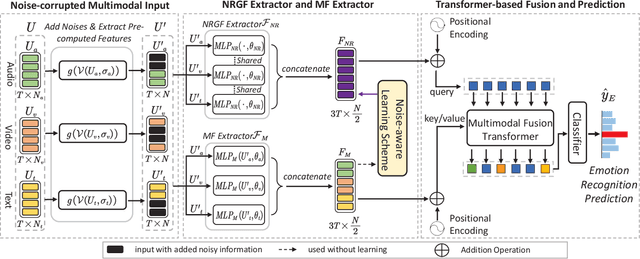

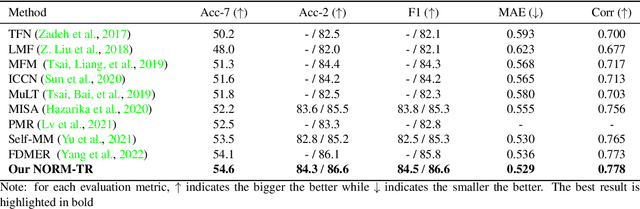

Noise-Resistant Multimodal Transformer for Emotion Recognition

May 04, 2023 · Yuanyuan Liu, Haoyu Zhang, Yibing Zhan, Zijing Chen, Guanghao Yin, Lin Wei, Zhe Chen

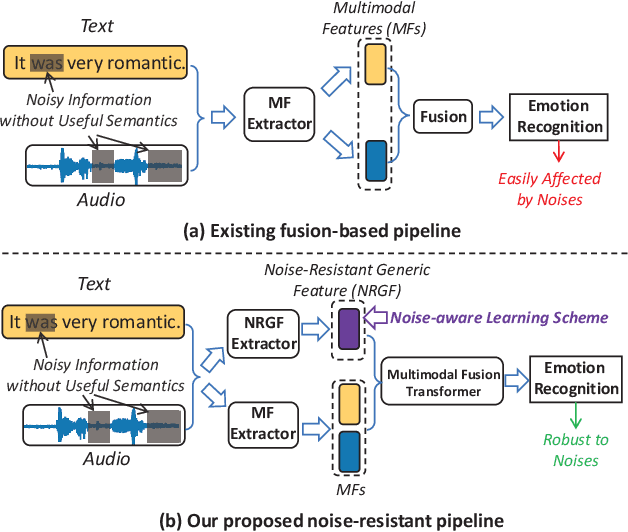

Multimodal emotion recognition identifies human emotions from various data modalities like video, text, and audio. However, we found that this task can be easily affected by noisy information that does not contain useful semantics. To this end, we present a novel paradigm that attempts to extract noise-resistant features in its pipeline and introduces a noise-aware learning scheme to effectively improve the robustness of multimodal emotion understanding. Our new pipeline, namely Noise-Resistant Multimodal Transformer (NORM-TR), mainly introduces a Noise-Resistant Generic Feature (NRGF) extractor and a Transformer for the multimodal emotion recognition task. In particular, we make the NRGF extractor learn a generic and disturbance-insensitive representation so that consistent and meaningful semantics can be obtained. Furthermore, we apply a Transformer to incorporate Multimodal Features (MFs) of multimodal inputs based on their relations to the NRGF. Therefore, the possible insensitive but useful information of NRGF could be complemented by MFs that contain more details. To train the NORM-TR properly, our proposed noise-aware learning scheme complements normal emotion recognition losses by enhancing the learning against noises. Our learning scheme explicitly adds noises to either all the modalities or a specific modality at random locations of a multimodal input sequence. We correspondingly introduce two adversarial losses to encourage the NRGF extractor to learn to extract the NRGFs invariant to the added noises, thus facilitating the NORM-TR to achieve more favorable multimodal emotion recognition performance. In practice, on several popular multimodal datasets, our NORM-TR achieves state-of-the-art performance and outperforms existing methods by a large margin, which demonstrates that the ability to resist noisy information is important for effective emotion recognition.
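The noise-injection step described above (perturbing either every modality or one chosen modality at random positions in the sequence) might look roughly like the following. This is a hedged sketch rather than NORM-TR's implementation: the Gaussian noise model, the one-scalar-per-time-step features, and the names `add_noise`, `num_positions`, and `sigma` are all assumptions.

```python
import random
from typing import Dict, List, Optional


def add_noise(
    sample: Dict[str, List[float]],
    modality: Optional[str],
    num_positions: int,
    sigma: float,
    rng: random.Random,
) -> Dict[str, List[float]]:
    """Return a copy of `sample` with Gaussian noise at random time steps.

    modality=None perturbs every modality; otherwise only the named one.
    The input dictionary and its lists are left unmodified.
    """
    targets = list(sample) if modality is None else [modality]
    noisy = {name: list(seq) for name, seq in sample.items()}
    for name in targets:
        seq = noisy[name]
        # Choose distinct positions, never more than the sequence length.
        positions = rng.sample(range(len(seq)), min(num_positions, len(seq)))
        for pos in positions:
            seq[pos] += rng.gauss(0.0, sigma)
    return noisy


# Hypothetical two-modality sample (illustrative values only).
clean = {"audio": [0.0] * 5, "text": [1.0] * 5}
noisy_audio = add_noise(clean, "audio", num_positions=2, sigma=0.1, rng=random.Random(0))
noisy_all = add_noise(clean, None, num_positions=2, sigma=0.1, rng=random.Random(0))
```

In training, the clean and perturbed versions would both be passed through the feature extractor so that a loss can encourage similar representations; that part is omitted here.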

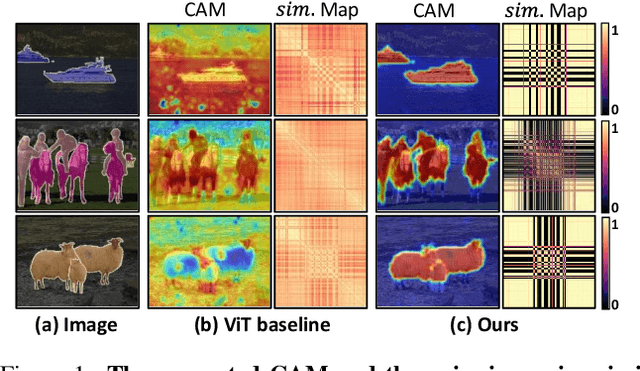

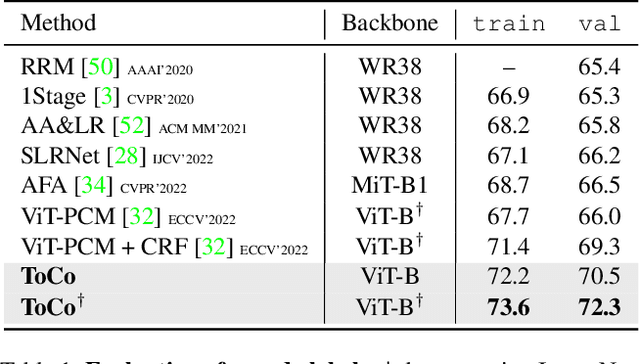

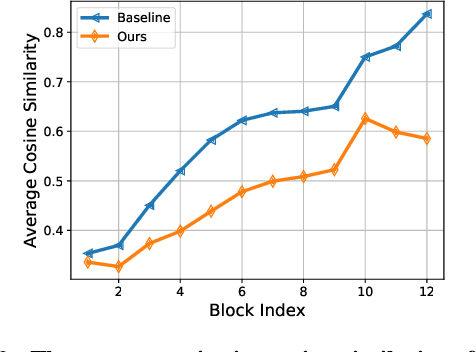

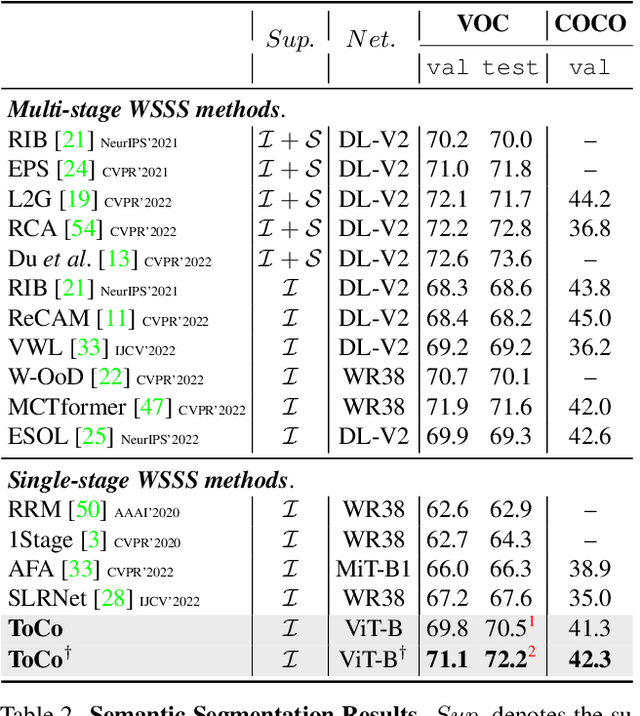

Token Contrast for Weakly-Supervised Semantic Segmentation

Mar 02, 2023 · Lixiang Ru, Heliang Zheng, Yibing Zhan, Bo Du

Weakly-Supervised Semantic Segmentation (WSSS) using image-level labels typically utilizes Class Activation Map (CAM) to generate the pseudo labels. Limited by the local structure perception of CNN, CAM usually cannot identify the integral object regions. Though the recent Vision Transformer (ViT) can remedy this flaw, we observe it also brings the over-smoothing issue, i.e., the final patch tokens tend to be uniform. In this work, we propose Token Contrast (ToCo) to address this issue and further explore the virtue of ViT for WSSS. Firstly, motivated by the observation that intermediate layers in ViT can still retain semantic diversity, we design a Patch Token Contrast module (PTC). PTC supervises the final patch tokens with the pseudo token relations derived from intermediate layers, allowing them to align the semantic regions and thus yield more accurate CAM. Secondly, to further differentiate the low-confidence regions in CAM, we devise a Class Token Contrast module (CTC) inspired by the fact that class tokens in ViT can capture high-level semantics. CTC facilitates the representation consistency between uncertain local regions and global objects by contrasting their class tokens. Experiments on the PASCAL VOC and MS COCO datasets show the proposed ToCo can remarkably surpass other single-stage competitors and achieve comparable performance with state-of-the-art multi-stage methods. Code is available at https://github.com/rulixiang/ToCo.
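One simple way to quantify the over-smoothing described above is the mean pairwise cosine similarity among patch tokens, which approaches 1 as tokens become uniform. The sketch below is a diagnostic illustration only, not part of the ToCo codebase; the function names and the list-of-lists token format are assumptions.

```python
import math
from itertools import combinations
from typing import List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def mean_pairwise_cosine(tokens: List[List[float]]) -> float:
    """Average cosine similarity over all unordered token pairs."""
    if len(tokens) < 2:
        raise ValueError("need at least two tokens")
    pairs = list(combinations(tokens, 2))
    return sum(cosine(a, b) for a, b in pairs) / len(pairs)


# Hypothetical tokens: an over-smoothed set versus a diverse set.
uniform_score = mean_pairwise_cosine([[1.0, 0.0], [1.0, 0.0]])
diverse_score = mean_pairwise_cosine([[1.0, 0.0], [0.0, 1.0]])
```

Comparing this score between an intermediate layer and the final layer is one way to check the abstract's observation that intermediate layers retain more semantic diversity.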

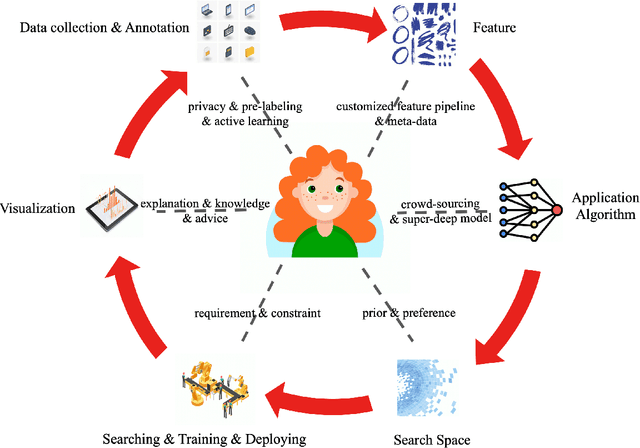

OmniForce: On Human-Centered, Large Model Empowered and Cloud-Edge Collaborative AutoML System

Mar 01, 2023 · Chao Xue, Wei Liu, Shuai Xie, Zhenfang Wang, Jiaxing Li, Xuyang Peng, Liang Ding, Shanshan Zhao, Qiong Cao, Yibo Yang, Fengxiang He, Bohua Cai, Rongcheng Bian, Yiyan Zhao, Heliang Zheng, Xiangyang Liu, Dongkai Liu, Daqing Liu, Li Shen, Chang Li, Shijin Zhang, Yukang Zhang, Guanpu Chen, Shixiang Chen, Yibing Zhan, Jing Zhang, Chaoyue Wang, Dacheng Tao

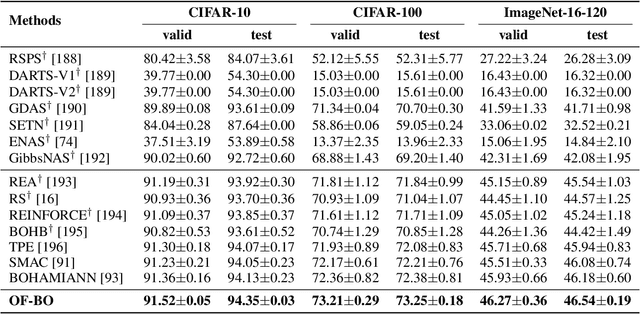

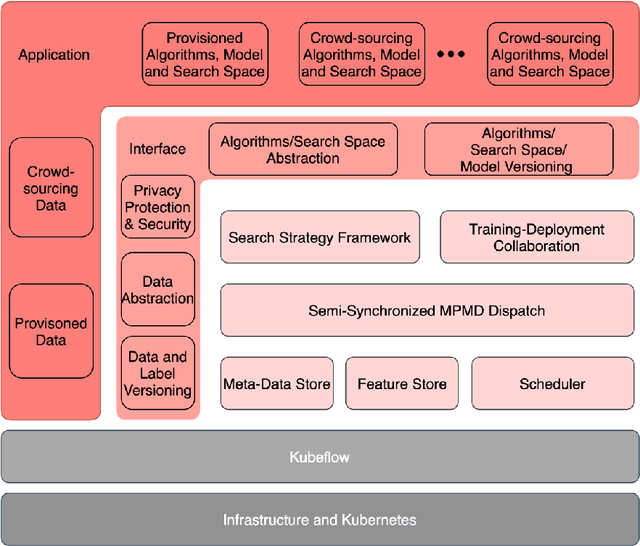

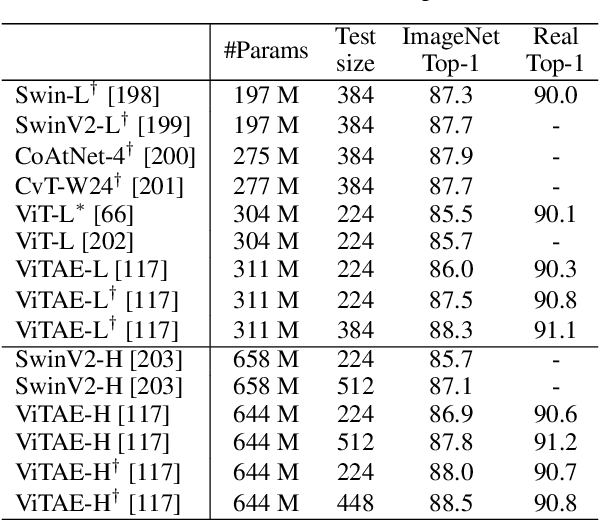

Automated machine learning (AutoML) seeks to build ML models with minimal human effort. While considerable research has been conducted in the area of AutoML in general, aiming to take humans out of the loop when building artificial intelligence (AI) applications, scant literature has focused on how AutoML works well in open-environment scenarios such as the process of training and updating large models, industrial supply chains or the industrial metaverse, where people often face open-loop problems during the search process: they must continuously collect data, update data and models, satisfy the requirements of the development and deployment environment, support massive devices, modify evaluation metrics, etc. Addressing the open-environment issue with pure data-driven approaches requires considerable data, computing resources, and effort from dedicated data engineers, making current AutoML systems and platforms inefficient and computationally intractable. Human-computer interaction is a practical and feasible way to tackle the problem of open-environment AI. In this paper, we introduce OmniForce, a human-centered AutoML (HAML) system that yields both human-assisted ML and ML-assisted human techniques, to put an AutoML system into practice and build adaptive AI in open-environment scenarios. Specifically, we present OmniForce in terms of ML version management; pipeline-driven development and deployment collaborations; a flexible search strategy framework; and widely provisioned and crowdsourced application algorithms, including large models. Furthermore, the (large) models constructed by OmniForce can be automatically turned into remote services in a few minutes; this process is dubbed model as a service (MaaS). Experimental results obtained in multiple search spaces and real-world use cases demonstrate the efficacy and efficiency of OmniForce.

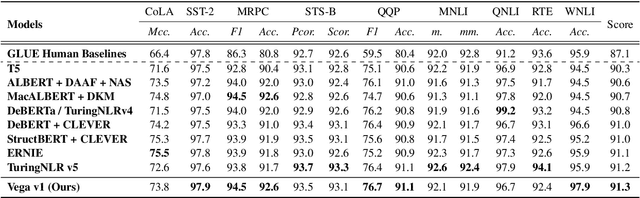

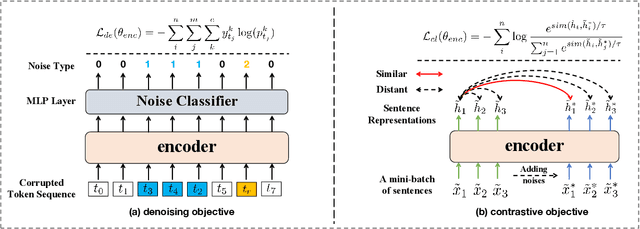

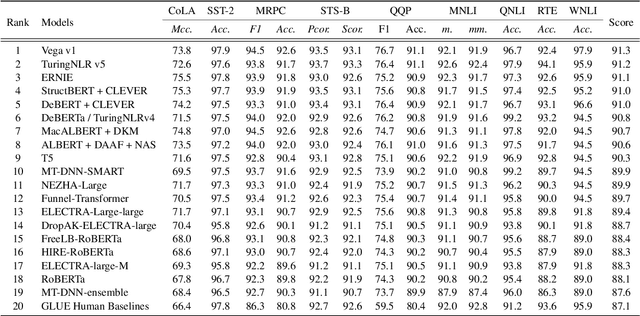

Bag of Tricks for Effective Language Model Pretraining and Downstream Adaptation: A Case Study on GLUE

Feb 18, 2023 · Qihuang Zhong, Liang Ding, Keqin Peng, Juhua Liu, Bo Du, Li Shen, Yibing Zhan, Dacheng Tao

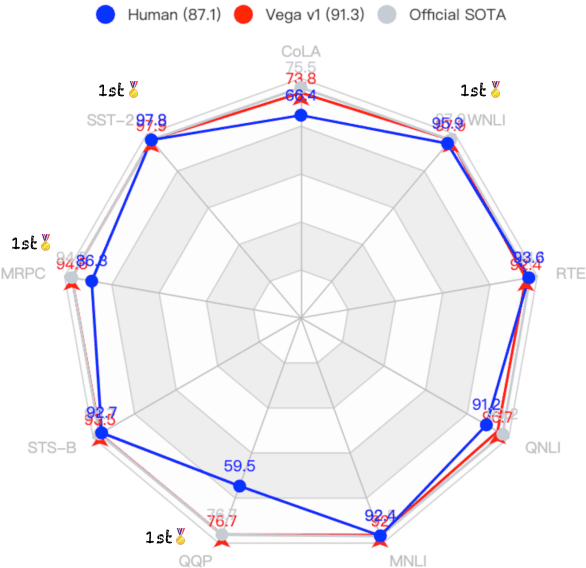

This technical report briefly describes our JDExplore d-team's submission Vega v1 on the General Language Understanding Evaluation (GLUE) leaderboard, where GLUE is a collection of nine natural language understanding tasks, including question answering, linguistic acceptability, sentiment analysis, text similarity, paraphrase detection, and natural language inference. [Method] We investigate several effective strategies and choose their best combination setting as the training recipes. As for model structure, we employ the vanilla Transformer with disentangled attention as the basic block encoder. For self-supervised training, we employ the representative denoising objective (i.e., replaced token detection) in phase 1 and combine the contrastive objective (i.e., sentence embedding contrastive learning) with it in phase 2. During fine-tuning, several advanced techniques such as transductive fine-tuning, self-calibrated fine-tuning, and adversarial fine-tuning are adopted. [Results] According to our submission record (Jan. 2022), with our optimized pretraining and fine-tuning strategies, our 1.3-billion-parameter model sets a new state of the art on 4/9 tasks, achieving the best average score of 91.3. Encouragingly, our Vega v1 is the first to exceed powerful human performance on the two challenging tasks, i.e., SST-2 and WNLI. We believe our empirically successful recipe with a bag of tricks could shed new light on developing efficient discriminative large language models.

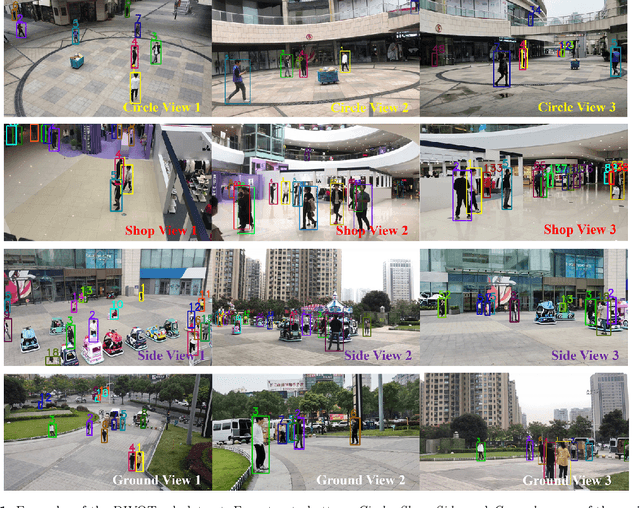

DIVOTrack: A Novel Dataset and Baseline Method for Cross-View Multi-Object Tracking in DIVerse Open Scenes

Feb 15, 2023 · Shenghao Hao, Peiyuan Liu, Yibing Zhan, Kaixun Jin, Zuozhu Liu, Mingli Song, Jenq-Neng Hwang, Gaoang Wang

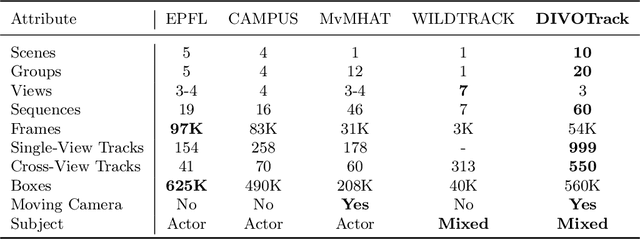

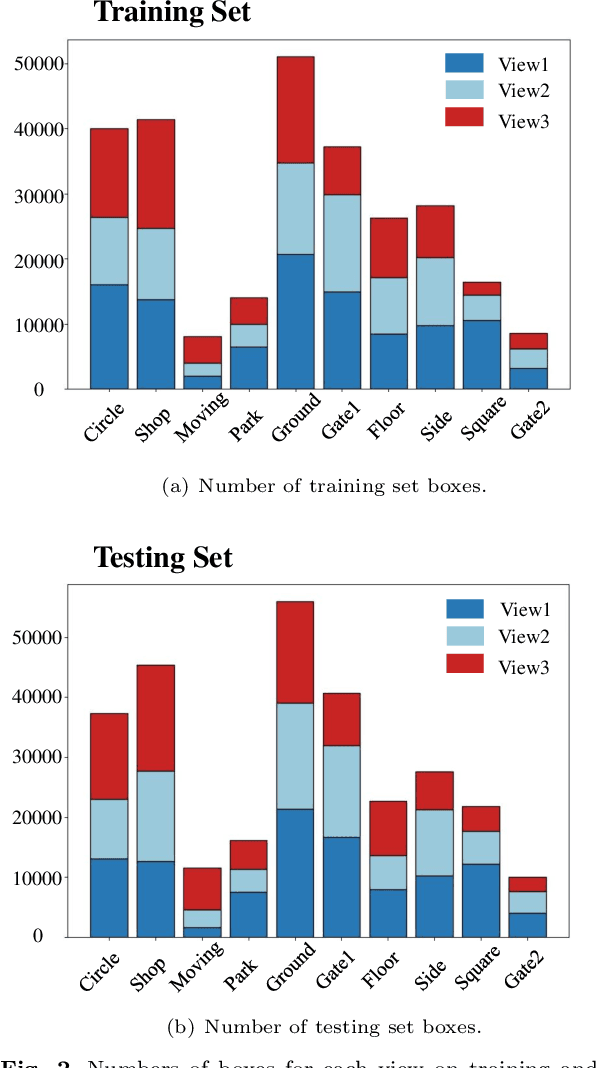

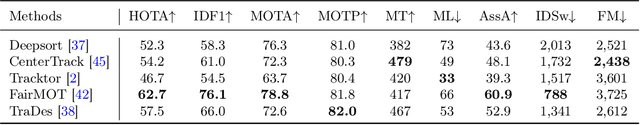

Cross-view multi-object tracking aims to link objects between frames and camera views with substantial overlaps. Although cross-view multi-object tracking has received increased attention in recent years, existing datasets still have several issues, including 1) missing real-world scenarios, 2) lacking diverse scenes, 3) having a limited number of tracks, 4) comprising only static cameras, and 5) lacking standard benchmarks, which hinder the investigation and comparison of cross-view tracking methods. To solve the aforementioned issues, we introduce DIVOTrack: a new cross-view multi-object tracking dataset for DIVerse Open scenes with densely tracked pedestrians in realistic and non-experimental environments. Our DIVOTrack has ten distinct scenarios and 550 cross-view tracks, surpassing all cross-view multi-object tracking datasets currently available. Furthermore, we provide a novel baseline cross-view tracking method with a unified joint detection and cross-view tracking framework named CrossMOT, which learns object detection, single-view association, and cross-view matching with an all-in-one embedding model. Finally, we present a summary of current methodologies and a set of standard benchmarks with our DIVOTrack to provide a fair comparison and conduct a comprehensive analysis of current approaches and our proposed CrossMOT. The dataset and code are available at https://github.com/shengyuhao/DIVOTrack.

Original or Translated? On the Use of Parallel Data for Translation Quality Estimation

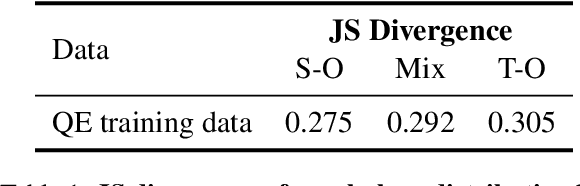

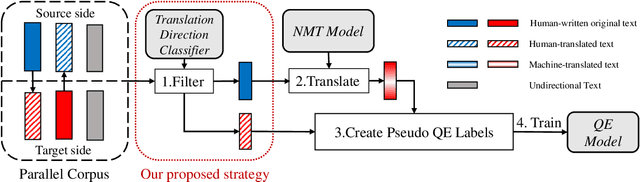

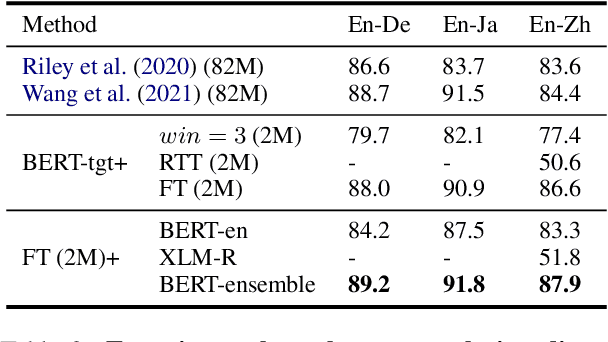

Dec 20, 2022 · Baopu Qiu, Liang Ding, Di Wu, Lin Shang, Yibing Zhan, Dacheng Tao

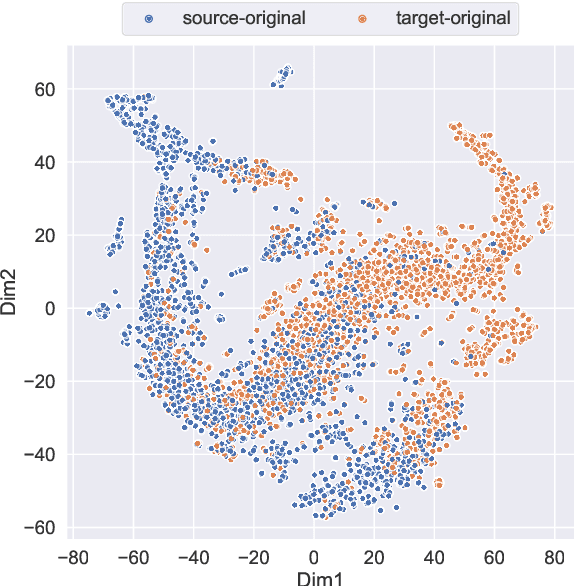

Machine Translation Quality Estimation (QE) is the task of evaluating translation output in the absence of human-written references. Due to the scarcity of human-labeled QE data, previous works attempted to utilize the abundant unlabeled parallel corpora to produce additional training data with pseudo labels. In this paper, we demonstrate a significant gap between parallel data and real QE data: for QE data, it is strictly guaranteed that the source side is original text and the target side is translated (namely translationese). For parallel data, however, no such distinction is made, and the translationese may occur on either the source or the target side. We compare the impact of parallel data with different translation directions in QE data augmentation, and find that using the source-original part of a parallel corpus consistently outperforms its target-original counterpart. Moreover, since the WMT corpus lacks direction information for each parallel sentence, we train a classifier to distinguish source- and target-original bitext, and carry out an analysis of their difference in both style and domain. Together, these findings suggest using source-original parallel data for QE data augmentation, which brings a relative improvement of up to 4.0% and 6.4% compared to undifferentiated data on sentence- and word-level QE tasks respectively.
