End-to-End Multi-speaker ASR with Independent Vector Analysis

Apr 01, 2022 · Robin Scheibler, Wangyou Zhang, Xuankai Chang, Shinji Watanabe, Yanmin Qian

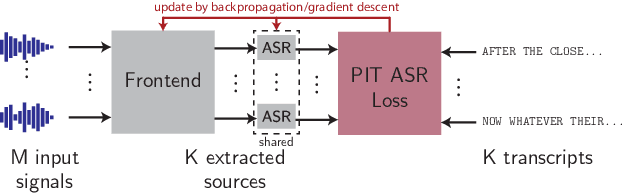

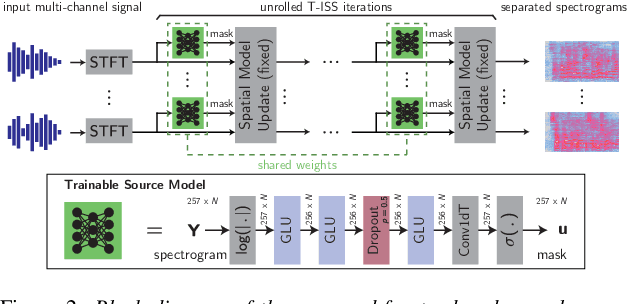

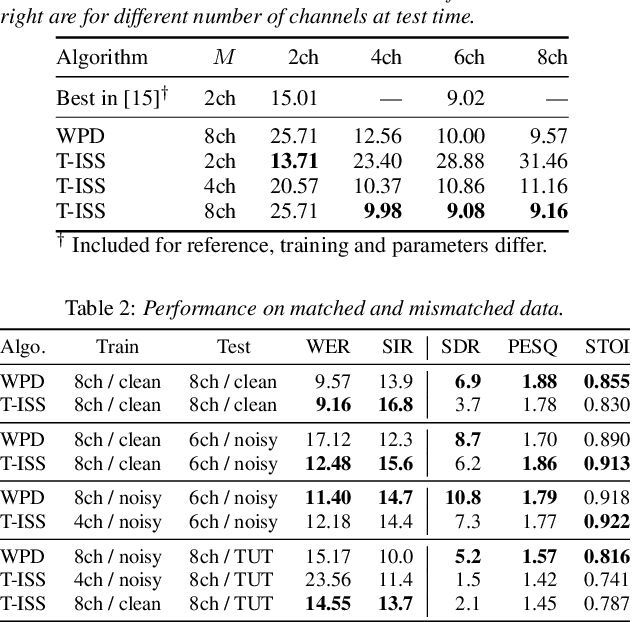

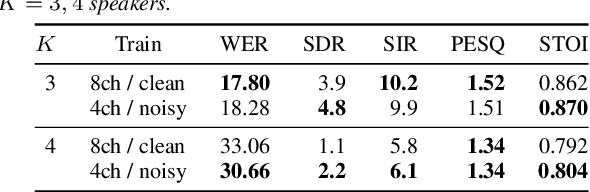

We develop an end-to-end system for multi-channel, multi-speaker automatic speech recognition. We propose a frontend for joint source separation and dereverberation based on the independent vector analysis (IVA) paradigm. It uses the fast and stable iterative source steering algorithm together with a neural source model. The parameters of the ASR module and the neural source model are optimized jointly from the ASR loss itself. We demonstrate performance competitive with previous systems that use neural beamforming frontends. First, we explore the trade-offs when using various numbers of channels for training and testing. Second, we demonstrate that the proposed IVA frontend performs well on noisy data, even when trained on clean mixtures only. Furthermore, it extends without retraining to the separation of more speakers, which is demonstrated on mixtures of three and four speakers.

Towards Low-distortion Multi-channel Speech Enhancement: The ESPNet-SE Submission to The L3DAS22 Challenge

Feb 24, 2022 · Yen-Ju Lu, Samuele Cornell, Xuankai Chang, Wangyou Zhang, Chenda Li, Zhaoheng Ni, Zhong-Qiu Wang, Shinji Watanabe

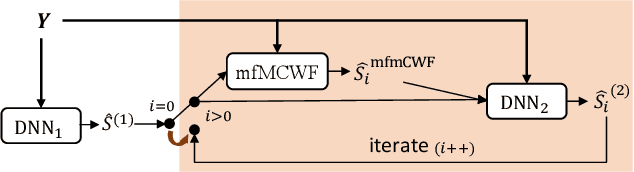

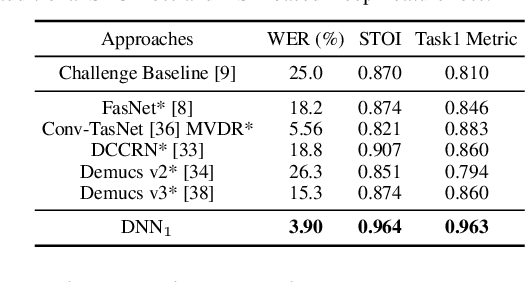

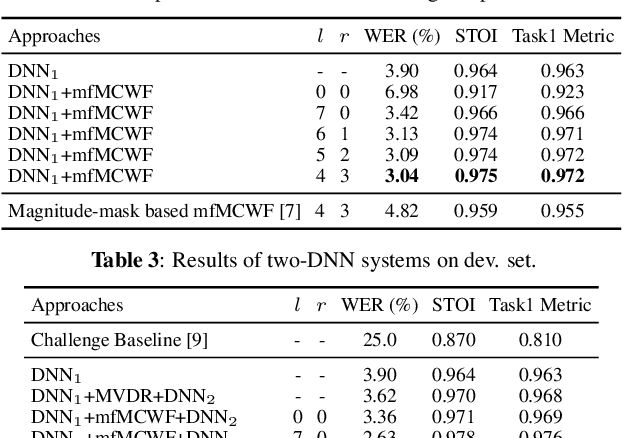

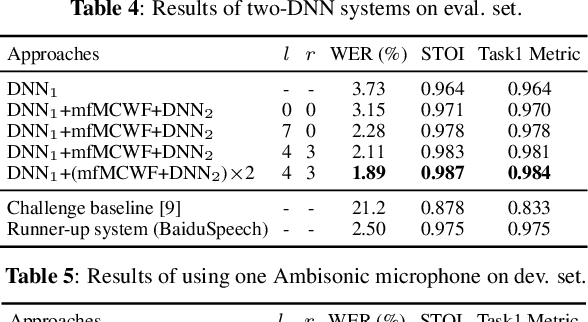

This paper describes our submission to the L3DAS22 Challenge Task 1, which consists of speech enhancement with 3D Ambisonic microphones. The core of our approach combines Deep Neural Network (DNN) driven complex spectral mapping with linear beamformers such as the multi-frame multi-channel Wiener filter. Our proposed system has two DNNs and a linear beamformer in between. Both DNNs are trained to perform complex spectral mapping, using a combination of waveform and magnitude spectrum losses. The estimated signal from the first DNN is used to drive a linear beamformer, and the beamforming result, together with this enhanced signal, is used as an extra input for the second DNN, which refines the estimation. Then, from this new estimated signal, the linear beamformer and second DNN are run iteratively. The proposed method was ranked first in the challenge, achieving, on the evaluation set, a ranking metric of 0.984, versus 0.833 for the challenge baseline.
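The control flow described in this abstract (first DNN, then alternating beamformer and second DNN) can be sketched as follows. This is only an illustrative outline: the function name `iterative_enhance`, the callables `dnn1`, `beamformer`, `dnn2`, their argument order, and the `num_iters` parameter are all hypothetical and are not taken from the ESPNet-SE submission.

```python
def iterative_enhance(mixture, dnn1, beamformer, dnn2, num_iters=2):
    """Sketch of a DNN -> beamformer -> DNN loop (all names are assumptions).

    mixture    : multi-channel input signal (any array-like object)
    dnn1       : callable(mixture) -> initial enhanced estimate
    beamformer : callable(mixture, estimate) -> beamformed signal
    dnn2       : callable(mixture, beamformed, estimate) -> refined estimate
    num_iters  : number of beamformer + dnn2 passes (hypothetical parameter)
    """
    # First DNN produces the initial estimate that drives the beamformer.
    estimate = dnn1(mixture)
    for _ in range(num_iters):
        # The current estimate is used to compute the linear beamformer output.
        beamformed = beamformer(mixture, estimate)
        # The second DNN sees the mixture plus both extra inputs and refines.
        estimate = dnn2(mixture, beamformed, estimate)
    return estimate


# Toy usage with stand-in callables that just record the call order.
calls = []
toy_out = iterative_enhance(
    mixture=1.0,
    dnn1=lambda x: calls.append("dnn1") or x,
    beamformer=lambda x, e: calls.append("bf") or e,
    dnn2=lambda x, b, e: calls.append("dnn2") or (b + e) / 2,
    num_iters=2,
)
```

In this sketch the number of refinement passes is a free parameter; the abstract does not state how many iterations the actual system runs.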

Separating Long-Form Speech with Group-Wise Permutation Invariant Training

Nov 17, 2021 · Wangyou Zhang, Zhuo Chen, Naoyuki Kanda, Shujie Liu, Jinyu Li, Sefik Emre Eskimez, Takuya Yoshioka, Xiong Xiao, Zhong Meng, Yanmin Qian, Furu Wei

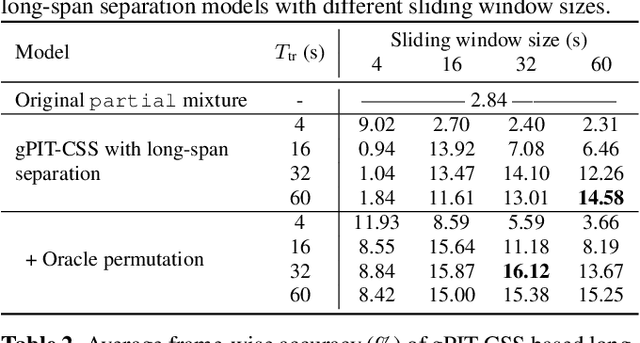

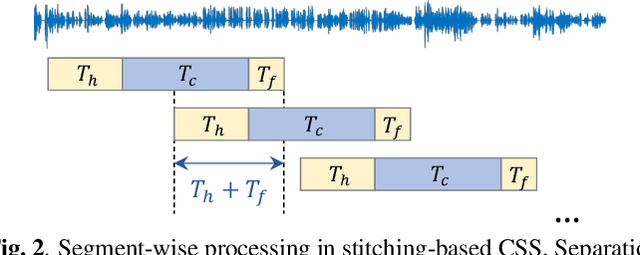

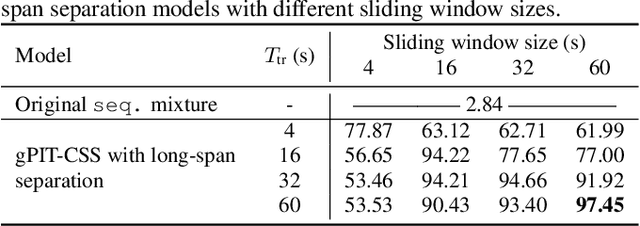

Multi-talker conversational speech processing has drawn much interest for various applications such as meeting transcription. Speech separation is often required to handle the overlapped speech that is commonly observed in conversation. Although the original utterance-level permutation invariant training-based continuous speech separation approach has proven to be effective in various conditions, it lacks the ability to leverage the long-span relationship of utterances and is computationally inefficient due to the highly overlapped sliding windows. To overcome these drawbacks, we propose a novel training scheme named Group-PIT, which allows direct training of the speech separation models on long-form speech with a low computational cost for label assignment. Two different speech separation approaches with Group-PIT are explored, including direct long-span speech separation and short-span speech separation with long-span tracking. The experiments on simulated meeting-style data demonstrate the effectiveness of our proposed approaches, especially in dealing with very long speech inputs.
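For readers unfamiliar with the label-assignment problem mentioned above, the following is a minimal sketch of generic utterance-level permutation invariant training (the baseline idea, not Group-PIT itself). The function name `pit_mse` and the choice of mean squared error as the per-permutation loss are assumptions made for illustration.

```python
from itertools import permutations

import numpy as np


def pit_mse(estimates, references):
    """Utterance-level PIT with an MSE criterion (illustrative sketch).

    estimates, references : arrays of shape (num_speakers, num_samples)
    Returns (best_loss, best_perm), where best_perm[i] is the index of the
    estimate assigned to reference i.
    """
    num_speakers = references.shape[0]
    best_loss, best_perm = float("inf"), None
    # Exhaustively try every output-to-reference assignment: O(N!) permutations.
    for perm in permutations(range(num_speakers)):
        loss = float(np.mean((estimates[list(perm)] - references) ** 2))
        if loss < best_loss:
            best_loss, best_perm = loss, perm
    return best_loss, best_perm


# Toy usage: the estimates are the references in swapped order.
refs = np.array([[1.0, 1.0, 1.0], [0.0, 0.0, 0.0]])
ests = refs[::-1].copy()
loss, perm = pit_mse(ests, refs)
```

The exhaustive search over permutations is what becomes costly when it is repeated for every window of a long recording, which is the inefficiency the abstract refers to.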

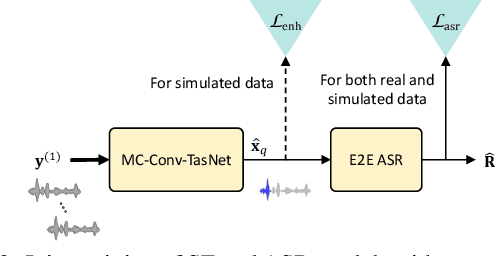

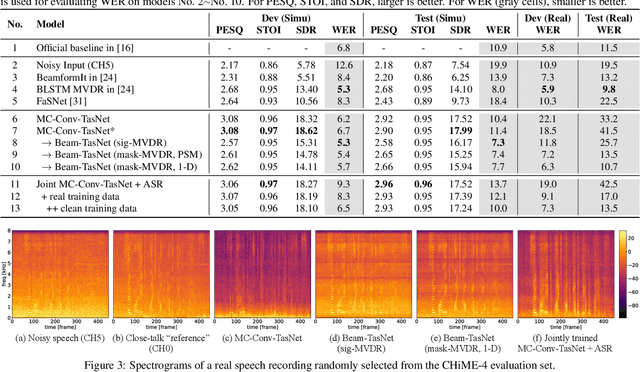

Closing the Gap Between Time-Domain Multi-Channel Speech Enhancement on Real and Simulation Conditions

Oct 27, 2021 · Wangyou Zhang, Jing Shi, Chenda Li, Shinji Watanabe, Yanmin Qian

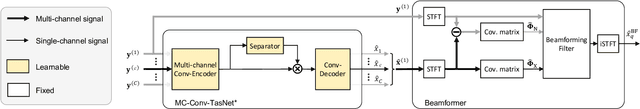

The deep learning based time-domain models, e.g. Conv-TasNet, have shown great potential in both single-channel and multi-channel speech enhancement. However, many experiments on the time-domain speech enhancement model are done in simulated conditions, and it is not well studied whether the good performance can generalize to real-world scenarios. In this paper, we aim to provide an insightful investigation of applying multi-channel Conv-TasNet based speech enhancement to both simulation and real data. Our preliminary experiments show a large performance gap between the two conditions in terms of the ASR performance. Several approaches are applied to close this gap, including the integration of multi-channel Conv-TasNet into the beamforming model with various strategies, and the joint training of speech enhancement and speech recognition models. Our experiments on the CHiME-4 corpus show that our proposed approaches can greatly reduce the speech recognition performance discrepancy between simulation and real data, while preserving the strong speech enhancement capability in the frontend.

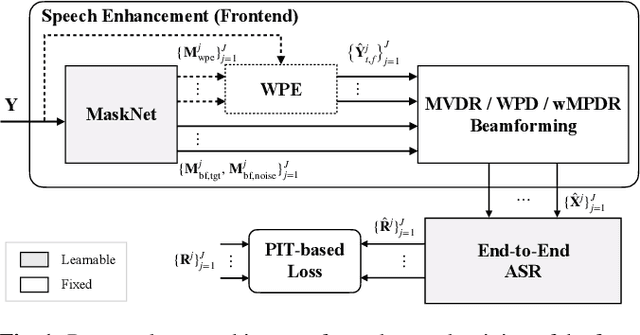

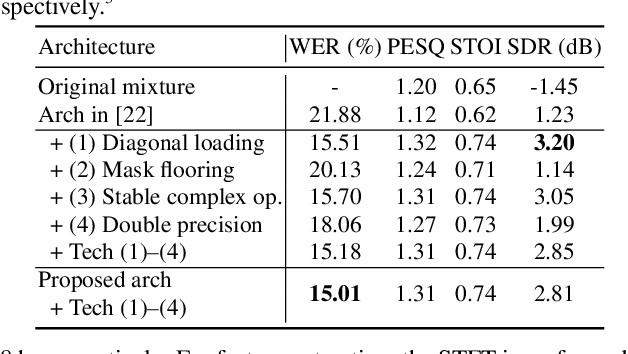

End-to-End Dereverberation, Beamforming, and Speech Recognition with Improved Numerical Stability and Advanced Frontend

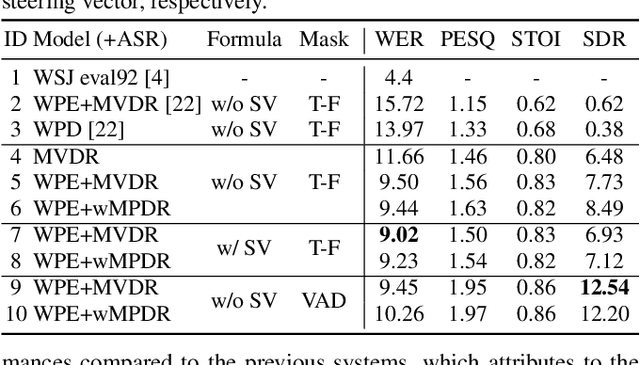

Feb 23, 2021 · Wangyou Zhang, Christoph Boeddeker, Shinji Watanabe, Tomohiro Nakatani, Marc Delcroix, Keisuke Kinoshita, Tsubasa Ochiai, Naoyuki Kamo, Reinhold Haeb-Umbach, Yanmin Qian

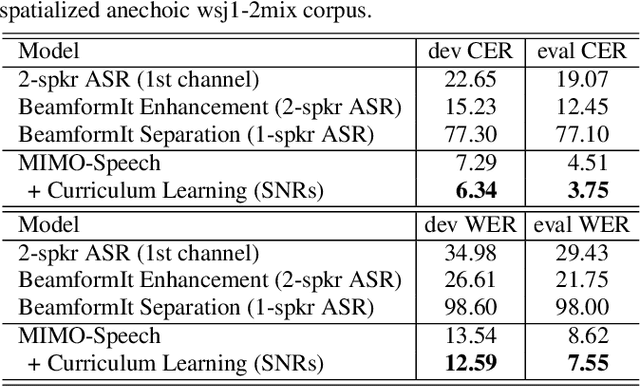

Recently, the end-to-end approach has been successfully applied to multi-speaker speech separation and recognition in both single-channel and multi-channel conditions. However, severe performance degradation is still observed in reverberant and noisy scenarios, and there is still a large performance gap between anechoic and reverberant conditions. In this work, we focus on the multi-channel multi-speaker reverberant condition, and propose to extend our previous framework for end-to-end dereverberation, beamforming, and speech recognition with improved numerical stability and advanced frontend subnetworks, including voice-activity-detection-like masks. The techniques significantly stabilize the end-to-end training process. The experiments on the spatialized wsj1-2mix corpus show that the proposed system achieves about 35% relative WER reduction compared to our conventional multi-channel E2E ASR system, and also obtains decent speech dereverberation and separation performance (SDR = 12.5 dB) in the reverberant multi-speaker condition while trained only with the ASR criterion.

The 2020 ESPnet update: new features, broadened applications, performance improvements, and future plans

Dec 23, 2020 · Shinji Watanabe, Florian Boyer, Xuankai Chang, Pengcheng Guo, Tomoki Hayashi, Yosuke Higuchi, Takaaki Hori, Wen-Chin Huang, Hirofumi Inaguma, Naoyuki Kamo, Shigeki Karita, Chenda Li, Jing Shi, Aswin Shanmugam Subramanian, Wangyou Zhang

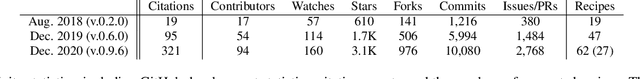

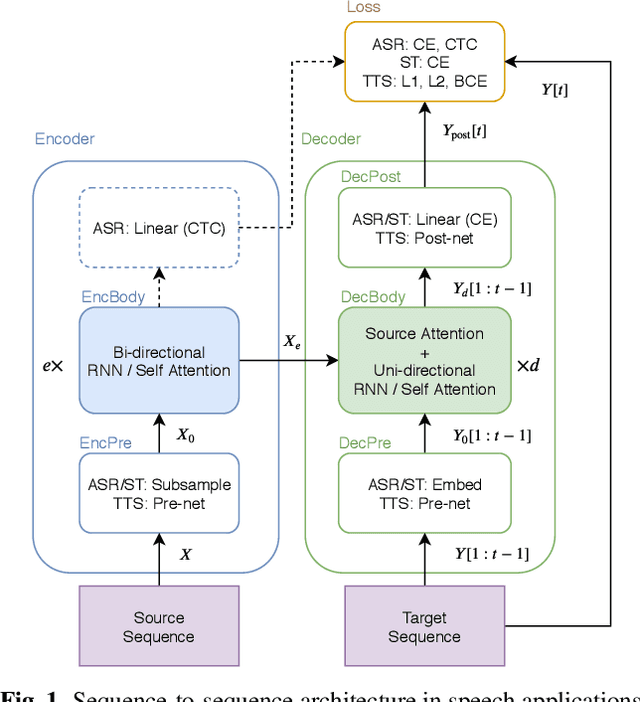

This paper describes the recent development of ESPnet (https://github.com/espnet/espnet), an end-to-end speech processing toolkit. This project was initiated in December 2017, mainly to deal with end-to-end speech recognition experiments based on sequence-to-sequence modeling. The project has grown rapidly and now covers a wide range of speech processing applications. ESPnet now also includes text-to-speech (TTS), voice conversion (VC), speech translation (ST), and speech enhancement (SE) with support for beamforming, speech separation, denoising, and dereverberation. All applications are trained in an end-to-end manner, thanks to the generic sequence-to-sequence modeling properties, and they can be further integrated and jointly optimized. ESPnet also provides reproducible all-in-one recipes for these applications with state-of-the-art performance on various benchmarks by incorporating Transformer, advanced data augmentation, and Conformer. This project aims to provide an up-to-date speech processing experience to the community so that researchers in academia and in industry at various scales can develop their technologies collaboratively.

End-to-End Multi-speaker Speech Recognition with Transformer

Feb 13, 2020 · Xuankai Chang, Wangyou Zhang, Yanmin Qian, Jonathan Le Roux, Shinji Watanabe

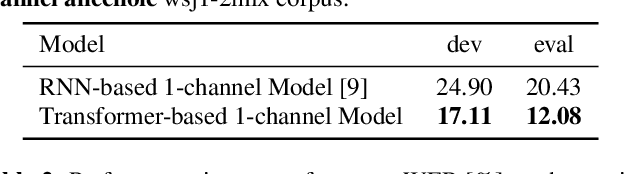

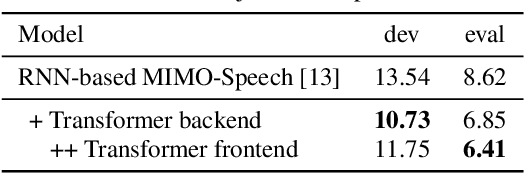

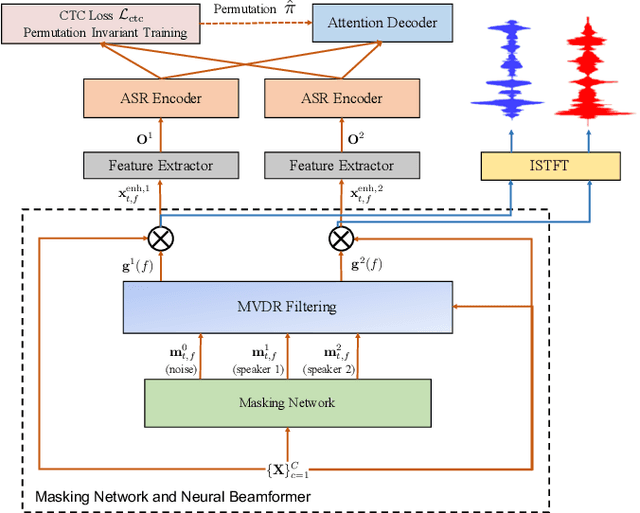

Recently, fully recurrent neural network (RNN) based end-to-end models have been proven to be effective for multi-speaker speech recognition in both the single-channel and multi-channel scenarios. In this work, we explore the use of Transformer models for these tasks by focusing on two aspects. First, we replace the RNN-based encoder-decoder in the speech recognition model with a Transformer architecture. Second, in order to use the Transformer in the masking network of the neural beamformer in the multi-channel case, we modify the self-attention component to be restricted to a segment rather than the whole sequence in order to reduce computation. Besides the model architecture improvements, we also incorporate an external dereverberation preprocessing, the weighted prediction error (WPE), enabling our model to handle reverberated signals. Experiments on the spatialized wsj1-2mix corpus show that the Transformer-based models achieve 40.9% and 25.6% relative WER reduction, down to 12.1% and 6.4% WER, under the anechoic condition in single-channel and multi-channel tasks, respectively, while in the reverberant case, our methods achieve 41.5% and 13.8% relative WER reduction, down to 16.5% and 15.2% WER.
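The relationship between the relative WER reductions and the final WERs quoted above can be checked with simple arithmetic. The sketch below assumes the usual definition, relative reduction = (baseline - new) / baseline; the helper names are hypothetical, and the baseline values it produces are back-computed from the abstract's numbers rather than figures reported in it.

```python
def relative_reduction(baseline_wer, new_wer):
    """Relative WER reduction, as a fraction of the baseline WER."""
    return (baseline_wer - new_wer) / baseline_wer


def implied_baseline_wer(new_wer, rel_reduction):
    """Invert the definition above to recover the baseline WER."""
    return new_wer / (1.0 - rel_reduction)


# (final WER in %, relative reduction) pairs quoted in the abstract.
quoted = {
    "anechoic, single-channel": (12.1, 0.409),
    "anechoic, multi-channel": (6.4, 0.256),
    "reverberant, single-channel": (16.5, 0.415),
    "reverberant, multi-channel": (15.2, 0.138),
}

for condition, (wer, rel) in quoted.items():
    # Implied baselines are derived values, not numbers from the paper.
    print(f"{condition}: implied baseline WER = {implied_baseline_wer(wer, rel):.1f}%")
```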

MIMO-SPEECH: End-to-End Multi-Channel Multi-Speaker Speech Recognition

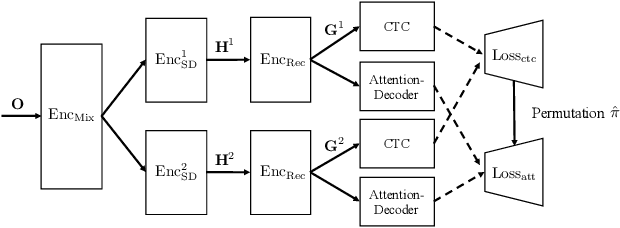

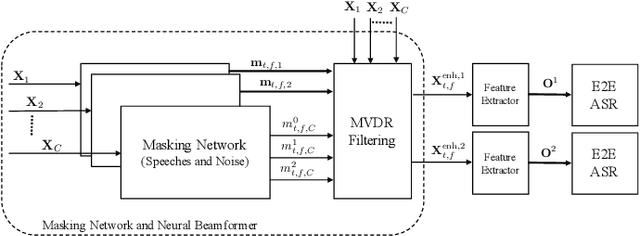

Oct 16, 2019 · Xuankai Chang, Wangyou Zhang, Yanmin Qian, Jonathan Le Roux, Shinji Watanabe

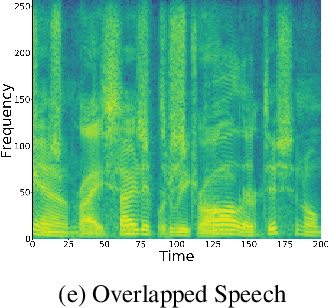

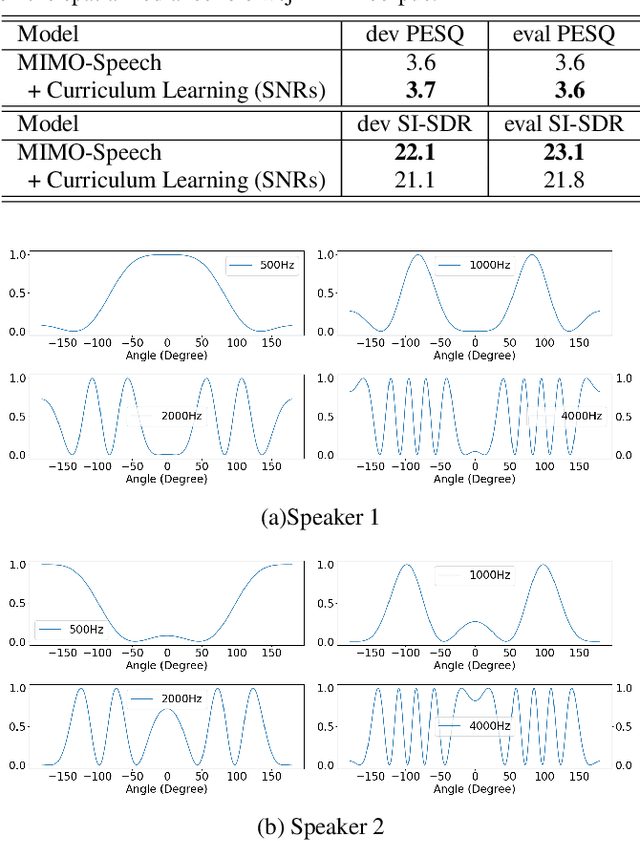

Recently, the end-to-end approach has proven its efficacy in monaural multi-speaker speech recognition. However, high word error rates (WERs) still prevent these systems from being used in practical applications. On the other hand, the spatial information in multi-channel signals has proven helpful in far-field speech recognition tasks. In this work, we propose a novel neural sequence-to-sequence (seq2seq) architecture, MIMO-Speech, which extends the original seq2seq to deal with multi-channel input and multi-channel output so that it can fully model multi-channel multi-speaker speech separation and recognition. MIMO-Speech is a fully neural end-to-end framework, which is optimized only via an ASR criterion. It is comprised of: 1) a monaural masking network, 2) a multi-source neural beamformer, and 3) a multi-output speech recognition model. With this processing, the input overlapped speech is directly mapped to text sequences. We further adopted a curriculum learning strategy, making the best use of the training set to improve the performance. The experiments on the spatialized wsj1-2mix corpus show that our model can achieve more than 60% WER reduction compared to the single-channel system with high quality enhanced signals (SI-SDR = 23.1 dB) obtained by the above separation function.
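The SI-SDR figure quoted above refers to the scale-invariant signal-to-distortion ratio. A minimal NumPy sketch of the commonly used definition is given below; the function name `si_sdr`, the mean removal step, and the small `eps` stabilizer are choices made for this illustration and may differ from the evaluation code used in the paper.

```python
import numpy as np


def si_sdr(estimate, reference, eps=1e-8):
    """Scale-invariant SDR in dB for two 1-D signals (illustrative sketch)."""
    # Remove the mean so that a DC offset does not affect the score.
    estimate = estimate - np.mean(estimate)
    reference = reference - np.mean(reference)
    # Project the estimate onto the reference to get the scaled target part.
    scale = np.dot(estimate, reference) / (np.dot(reference, reference) + eps)
    target = scale * reference
    # Everything not explained by the scaled reference counts as distortion.
    distortion = estimate - target
    ratio = (np.dot(target, target) + eps) / (np.dot(distortion, distortion) + eps)
    return 10.0 * np.log10(ratio)


# Toy usage: reference plus a small orthogonal error component.
ref = np.array([1.0, -1.0, 1.0, -1.0])
err = 0.1 * np.array([1.0, 1.0, -1.0, -1.0])
score = si_sdr(ref + err, ref)
score_rescaled = si_sdr(3.0 * (ref + err), ref)
```

Because the estimate is projected onto the reference before the ratio is taken, rescaling the estimate leaves the score essentially unchanged, which is the property the name refers to.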

A Comparative Study on Transformer vs RNN in Speech Applications

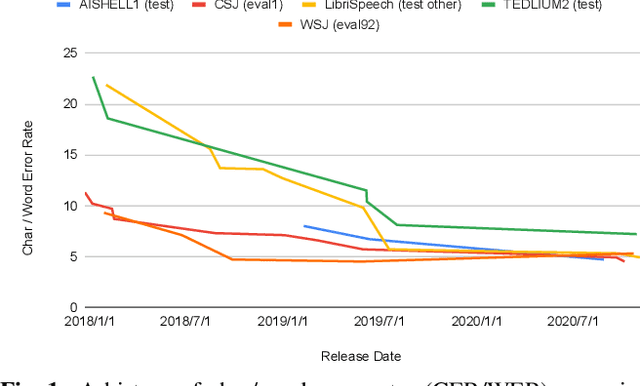

Sep 28, 2019 · Shigeki Karita, Nanxin Chen, Tomoki Hayashi, Takaaki Hori, Hirofumi Inaguma, Ziyan Jiang, Masao Someki, Nelson Enrique Yalta Soplin, Ryuichi Yamamoto, Xiaofei Wang, Shinji Watanabe, Takenori Yoshimura, Wangyou Zhang

Sequence-to-sequence models have been widely used in end-to-end speech processing, for example, automatic speech recognition (ASR), speech translation (ST), and text-to-speech (TTS). This paper focuses on an emergent sequence-to-sequence model called Transformer, which achieves state-of-the-art performance in neural machine translation and other natural language processing applications. We undertook intensive studies in which we experimentally compared and analyzed Transformer and conventional recurrent neural networks (RNN) in a total of 15 ASR, one multilingual ASR, one ST, and two TTS benchmarks. Our experiments revealed various training tips and significant performance benefits obtained with Transformer for each task, including the surprising superiority of Transformer over RNN in 13 of the 15 ASR benchmarks. We are preparing to release Kaldi-style reproducible recipes using open source and publicly available datasets for all the ASR, ST, and TTS tasks so that the community can build on these results.

* Accepted at ASRU 2019
