Wai Lam

TRIP: Triangular Document-level Pre-training for Multilingual Language Models

Dec 15, 2022

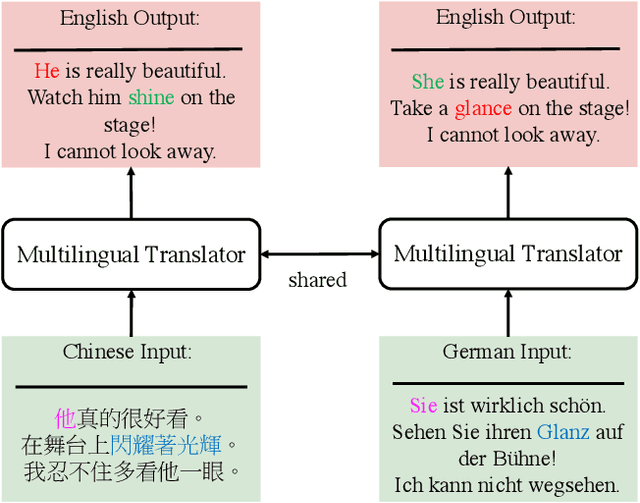

Abstract: Despite the current success of multilingual pre-training, most prior works focus on leveraging monolingual data or bilingual parallel data and overlook the value of trilingual parallel data. This paper presents Triangular Document-level Pre-training (TRIP), which is the first in the field to extend the conventional monolingual and bilingual pre-training to a trilingual setting by (i) Grafting the same documents in two languages into one mixed document, and (ii) predicting the remaining language as the reference translation. Our experiments on document-level MT and cross-lingual abstractive summarization show that TRIP brings gains of up to 3.65 d-BLEU points and 6.2 ROUGE-L points on three multilingual document-level machine translation benchmarks and one cross-lingual abstractive summarization benchmark, including multiple strong state-of-the-art (SOTA) scores. In-depth analysis indicates that TRIP improves document-level machine translation and captures better document contexts in at least three characteristics: (i) tense consistency, (ii) noun consistency and (iii) conjunction presence.
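The Grafting step described above can be illustrated with a minimal sketch. The abstract does not specify how the two language versions are mixed, so the single split point, the sentence-level alignment, and the names `graft` and `make_example` are all assumptions made for illustration, not the authors' implementation.

```python
from typing import List, Tuple


def graft(doc_a: List[str], doc_b: List[str], split: int) -> List[str]:
    """Mix two sentence-aligned versions of one document (hypothetical rule).

    Sentences before `split` come from language A, the rest from language B.
    """
    if len(doc_a) != len(doc_b):
        raise ValueError("documents must be sentence-aligned")
    if not 0 <= split <= len(doc_a):
        raise ValueError("split out of range")
    return doc_a[:split] + doc_b[split:]


def make_example(
    doc_a: List[str], doc_b: List[str], doc_c: List[str], split: int
) -> Tuple[List[str], List[str]]:
    """Pair the mixed A/B document with the third language as the target."""
    return graft(doc_a, doc_b, split), doc_c


# Toy trilingual document with three aligned sentences per language.
source, target = make_example(
    ["a1", "a2", "a3"], ["b1", "b2", "b3"], ["c1", "c2", "c3"], split=1
)
```

Here `source` holds one sentence from the first language followed by two from the second, and `target` is the untouched third-language document.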

From Clozing to Comprehending: Retrofitting Pre-trained Language Model to Pre-trained Machine Reader

Dec 09, 2022

Abstract: We present Pre-trained Machine Reader (PMR), a novel method to retrofit Pre-trained Language Models (PLMs) into Machine Reading Comprehension (MRC) models without acquiring labeled data. PMR is capable of resolving the discrepancy between model pre-training and downstream fine-tuning of existing PLMs, and provides a unified solver for tackling various extraction tasks. To achieve this, we construct a large volume of general-purpose and high-quality MRC-style training data with the help of Wikipedia hyperlinks and design a Wiki Anchor Extraction task to guide the MRC-style pre-training process. Although conceptually simple, PMR is particularly effective in solving extraction tasks including Extractive Question Answering and Named Entity Recognition, where it shows tremendous improvements over previous approaches, especially under low-resource settings. Moreover, by viewing sequence classification as a special case of extraction in our MRC formulation, PMR can even extract high-quality rationales to explain the classification process, making its predictions more explainable.

On the Effectiveness of Parameter-Efficient Fine-Tuning

Nov 28, 2022

Abstract: Fine-tuning pre-trained models has been ubiquitously proven to be effective in a wide range of NLP tasks. However, fine-tuning the whole model is parameter inefficient as it always yields an entirely new model for each task. Currently, many research works propose to fine-tune only a small portion of the parameters while keeping most of the parameters shared across different tasks. These methods achieve surprisingly good performance and are shown to be more stable than their corresponding fully fine-tuned counterparts. However, such methods are still not well understood. Some natural questions arise: How does the parameter sparsity lead to promising performance? Why is the model more stable than the fully fine-tuned models? How should the tunable parameters be chosen? In this paper, we first categorize the existing methods into random approaches, rule-based approaches, and projection-based approaches based on how they choose which parameters to tune. Then, we show that all of the methods are actually sparse fine-tuned models and conduct a novel theoretical analysis of them. We show that the sparsity is actually imposing a regularization on the original model by controlling the upper bound of the stability. Such stability leads to better generalization capability, which has been empirically observed in many recent research works. Although our theory grounds the effectiveness of sparsity, how to choose the tunable parameters remains an open problem. To better choose the tunable parameters, we propose a novel Second-order Approximation Method (SAM) which approximates the original problem with an analytically solvable optimization function. The tunable parameters are determined by directly optimizing the approximation function. The experimental results show that our proposed SAM model outperforms many strong baseline models and also verify our theoretical analysis.
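The idea of a sparse fine-tuned model can be made concrete with a small sketch: a binary mask marks which parameters are tunable, and a gradient step changes only those. The selection rule used here (largest gradient magnitude) is a stand-in chosen for illustration and is not the paper's SAM; the function names `top_k_mask` and `sparse_sgd_step` are hypothetical.

```python
from typing import List


def top_k_mask(scores: List[float], k: int) -> List[bool]:
    """Mark the k entries with the largest absolute score as tunable.

    Illustrative selection rule only; it is not the SAM criterion.
    """
    order = sorted(range(len(scores)), key=lambda i: abs(scores[i]), reverse=True)
    chosen = set(order[:k])
    return [i in chosen for i in range(len(scores))]


def sparse_sgd_step(
    params: List[float], grads: List[float], mask: List[bool], lr: float
) -> List[float]:
    """Apply one SGD step to masked parameters; leave the rest frozen."""
    return [p - lr * g if m else p for p, g, m in zip(params, grads, mask)]


params = [1.0, 2.0, 3.0]
grads = [0.5, -2.0, 0.1]
mask = top_k_mask(grads, k=1)  # only the second parameter is tunable
updated = sparse_sgd_step(params, grads, mask, lr=0.1)
```

Because the frozen parameters stay identical to the pre-trained values, they can be shared across tasks, and only the masked entries need to be stored per task.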

Towards Generalizable and Robust Text-to-SQL Parsing

Oct 23, 2022

Abstract: Text-to-SQL parsing tackles the problem of mapping natural language questions to executable SQL queries. In practice, text-to-SQL parsers often encounter various challenging scenarios, requiring them to be generalizable and robust. While most existing work addresses a particular generalization or robustness challenge, we aim to study it in a more comprehensive manner. Specifically, we believe that text-to-SQL parsers should be (1) generalizable at three levels of generalization, namely i.i.d., zero-shot, and compositional, and (2) robust against input perturbations. To enhance these capabilities of the parser, we propose a novel TKK framework consisting of Task decomposition, Knowledge acquisition, and Knowledge composition to learn text-to-SQL parsing in stages. By dividing the learning process into multiple stages, our framework improves the parser's ability to acquire general SQL knowledge instead of capturing spurious patterns, making it more generalizable and robust. Experimental results under various generalization and robustness settings show that our framework is effective in all scenarios and achieves state-of-the-art performance on the Spider, SParC, and CoSQL datasets. Code can be found at https://github.com/AlibabaResearch/DAMO-ConvAI/tree/main/tkk.

McQueen: a Benchmark for Multimodal Conversational Query Rewrite

Oct 23, 2022

Abstract: The task of query rewrite aims to convert an in-context query to its fully-specified version, where ellipsis and coreference are resolved according to the history context. Although much progress has been made, less effort has been devoted to real-scenario conversations that involve drawing information from more than one modality. In this paper, we propose the task of multimodal conversational query rewrite (McQR), which performs query rewrite under the multimodal visual conversation setting. We collect a large-scale dataset named McQueen based on manual annotation, which contains 15k visual conversations and over 80k queries, each associated with a fully-specified rewrite version. In addition, for entities appearing in the rewrite, we provide the corresponding image box annotation. We then use the McQueen dataset to benchmark a state-of-the-art method for effectively tackling the McQR task, which is based on a multimodal pre-trained model with pointer generator. Extensive experiments are performed to demonstrate the effectiveness of our model on this task. The dataset and code of this paper are both available at https://github.com/yfyuan01/MQR.

Retrofitting Multilingual Sentence Embeddings with Abstract Meaning Representation

Oct 18, 2022

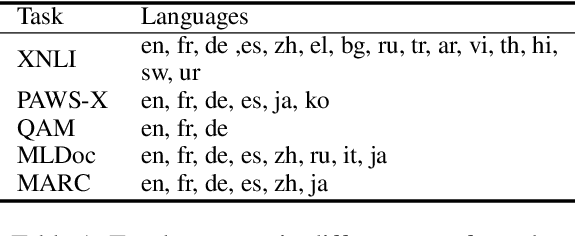

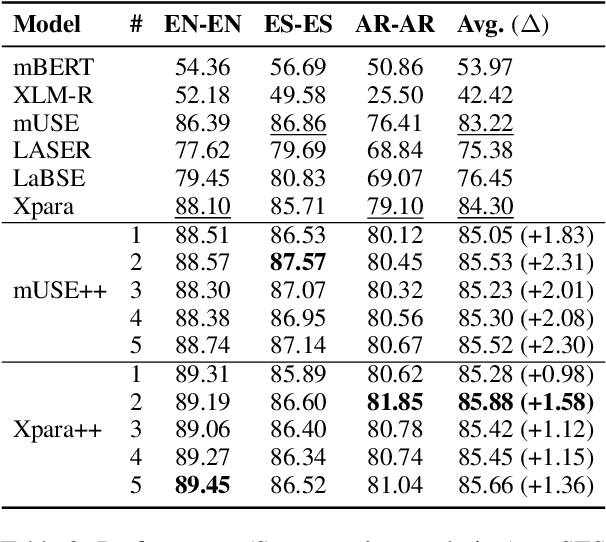

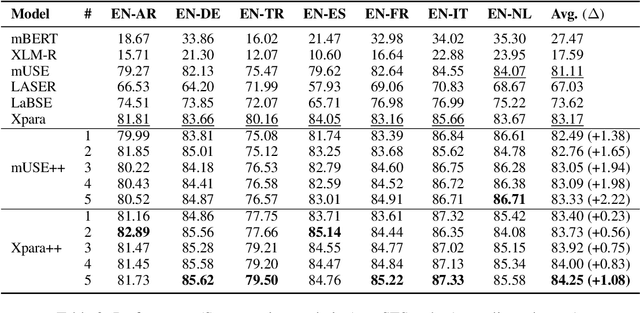

Abstract: We introduce a new method to improve existing multilingual sentence embeddings with Abstract Meaning Representation (AMR). Compared with the original textual input, AMR is a structured semantic representation that presents the core concepts and relations in a sentence explicitly and unambiguously. It also helps reduce surface variations across different expressions and languages. Unlike most prior work that only evaluates the ability to measure semantic similarity, we present a thorough evaluation of existing multilingual sentence embeddings and our improved versions, which include a collection of five transfer tasks in different downstream applications. Experimental results show that retrofitting multilingual sentence embeddings with AMR leads to better state-of-the-art performance on both semantic textual similarity and transfer tasks. Our codebase and evaluation scripts can be found at https://github.com/jcyk/MSE-AMR.

PACIFIC: Towards Proactive Conversational Question Answering over Tabular and Textual Data in Finance

Oct 17, 2022

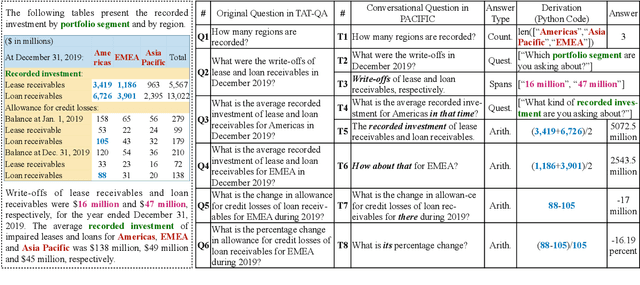

Abstract: To facilitate conversational question answering (CQA) over hybrid contexts in finance, we present a new dataset, named PACIFIC. Compared with existing CQA datasets, PACIFIC exhibits three key features: (i) proactivity, (ii) numerical reasoning, and (iii) hybrid context of tables and text. A new task is defined accordingly to study Proactive Conversational Question Answering (PCQA), which combines clarification question generation and CQA. In addition, we propose a novel method, namely UniPCQA, to cast the hybrid input and output formats in PCQA as a Seq2Seq problem, including the reformulation of the numerical reasoning process as code generation. UniPCQA performs multi-task learning over all sub-tasks in PCQA and incorporates a simple ensemble strategy that alleviates error propagation in multi-task learning by cross-validating top-$k$ sampled Seq2Seq outputs. We benchmark the PACIFIC dataset with extensive baselines and provide comprehensive evaluations on each sub-task of PCQA.

ConReader: Exploring Implicit Relations in Contracts for Contract Clause Extraction

Oct 17, 2022

Abstract: We study automatic Contract Clause Extraction (CCE) by modeling implicit relations in legal contracts. Existing CCE methods mostly treat contracts as plain text, creating a substantial barrier to understanding contracts of high complexity. In this work, we first comprehensively analyze the complexity issues of contracts and distill three implicit relations commonly found in contracts, namely, 1) Long-range Context Relation that captures the correlations of distant clauses; 2) Term-Definition Relation that captures the relation between important terms and their corresponding definitions; and 3) Similar Clause Relation that captures the similarities between clauses of the same type. Then we propose a novel framework, ConReader, to exploit the above three relations for better contract understanding and improved CCE. Experimental results show that ConReader makes the prediction more interpretable and achieves new state-of-the-art results on two CCE tasks in both conventional and zero-shot settings.

PeerDA: Data Augmentation via Modeling Peer Relation for Span Identification Tasks

Oct 17, 2022

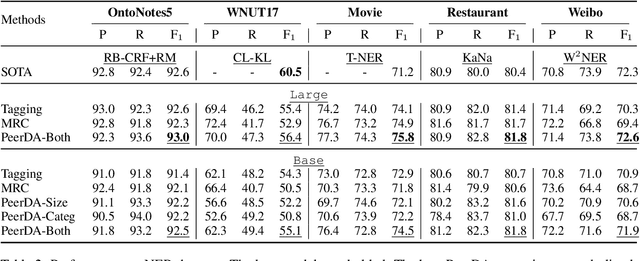

Abstract: Span Identification (SpanID) is a family of NLP tasks that aims to detect and classify text spans. Unlike previous works that merely leverage the Subordinate (Sub) relation, which indicates whether a span is an instance of a certain category, to train SpanID models, we explore the Peer (Pr) relation, which indicates that two spans are different instances from the same category sharing similar features, and propose a novel Peer Data Augmentation (PeerDA) approach to treat span-span pairs with the Pr relation as a kind of augmented training data. PeerDA has two unique advantages: (1) There are a large number of span-span pairs with the Pr relation for augmenting the training data. (2) The augmented data can prevent over-fitting to the superficial span-category mapping by pushing SpanID models to rely more on spans' semantics. Experimental results on ten datasets over four diverse SpanID tasks across seven domains demonstrate the effectiveness of PeerDA. Notably, seven of them achieve state-of-the-art results.

Multilingual Transitivity and Bidirectional Multilingual Agreement for Multilingual Document-level Machine Translation

Oct 05, 2022

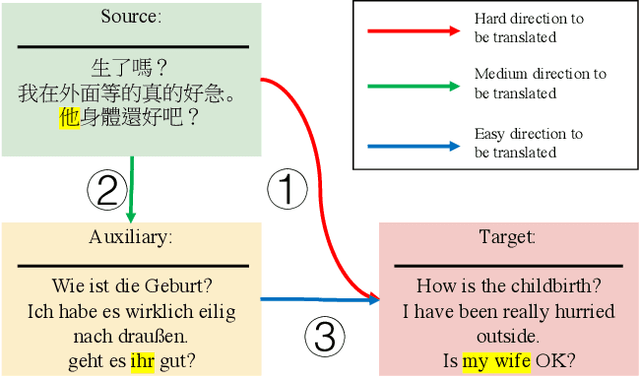

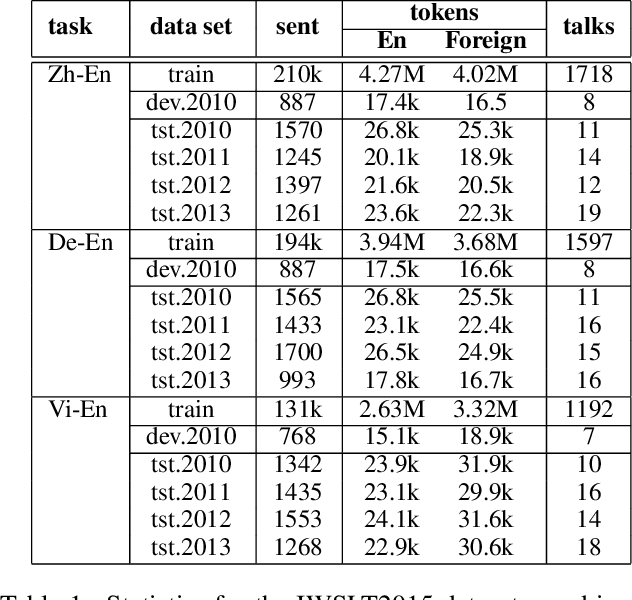

Abstract: Multilingual machine translation has been proven an effective strategy to support translation between multiple languages with a single model. However, most studies focus on multilingual sentence translation without considering generating long documents across different languages, which requires an understanding of multilingual context dependency and is typically harder. In this paper, we first observe that naively incorporating auxiliary multilingual data, either auxiliary-target or source-auxiliary, brings no improvement to the source-target language pair of interest. Motivated by this observation, we propose a novel framework called Multilingual Transitivity (MTrans) to find an implicit optimal route via source-auxiliary-target within the multilingual model. To encourage MTrans, we propose a novel method called Triplet Parallel Data (TPD), which uses parallel triplets that contain source-auxiliary, auxiliary-target, and source-target pairs for training. The auxiliary language then serves as a pivot and automatically facilitates an implicit information transition flow that is easier to translate. We further propose a novel framework called Bidirectional Multilingual Agreement (Bi-MAgree) that encourages bidirectional agreement between different languages. To encourage Bi-MAgree, we propose a novel method called Multilingual Kullback-Leibler Divergence (MKL) that forces the output distributions of inputs with the same meaning but in different languages to be consistent with each other. The experimental results indicate that our methods bring consistent improvements over strong baselines on three document translation tasks: IWSLT2015 Zh-En, De-En, and Vi-En. Our analysis validates the usefulness and existence of MTrans and Bi-MAgree, and shows that our frameworks and methods are also effective on synthetic auxiliary data.
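As a rough illustration of an agreement term between two output distributions, the sketch below computes a symmetric Kullback-Leibler divergence over a toy vocabulary. The abstract does not give the exact form of MKL, so the symmetric average, the smoothing constant `eps`, and the names `kl` and `symmetric_kl` are assumptions for illustration only.

```python
import math
from typing import Sequence


def kl(p: Sequence[float], q: Sequence[float], eps: float = 1e-12) -> float:
    """KL(p || q) for discrete distributions, smoothed by eps to avoid log(0)."""
    return sum(
        pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0
    )


def symmetric_kl(p: Sequence[float], q: Sequence[float]) -> float:
    """Average of KL(p || q) and KL(q || p); zero when the two agree."""
    return 0.5 * (kl(p, q) + kl(q, p))


# Hypothetical next-token distributions for the same content in two languages.
p_from_lang_a = [0.7, 0.2, 0.1]
p_from_lang_b = [0.5, 0.3, 0.2]
agreement_loss = symmetric_kl(p_from_lang_a, p_from_lang_b)
```

Adding such a term to the training loss penalizes the model when inputs with the same meaning in different languages produce diverging predictions.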
