Marco Pavone

Stanford University and

Heterogeneous-Agent Trajectory Forecasting Incorporating Class Uncertainty

Apr 26, 2021

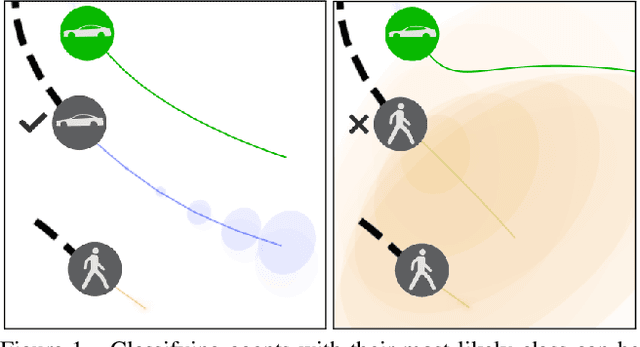

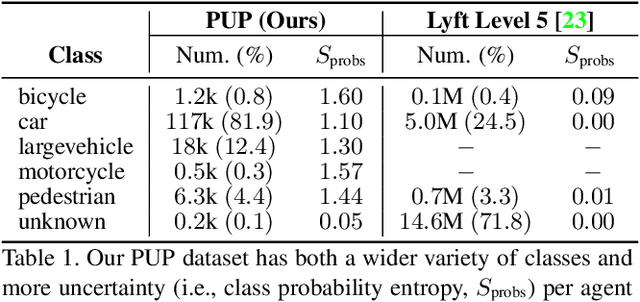

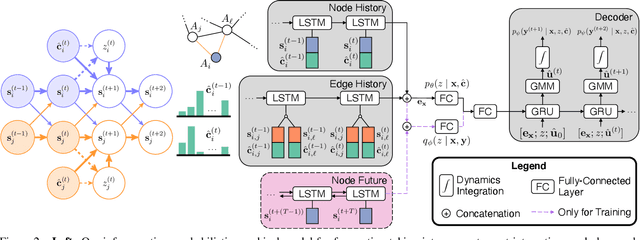

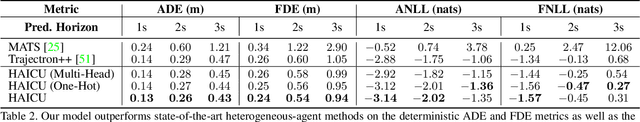

Abstract: Reasoning about the future behavior of other agents is critical to safe robot navigation. The multiplicity of plausible futures is further amplified by the uncertainty inherent to agent state estimation from data, including positions, velocities, and semantic class. Forecasting methods, however, typically neglect class uncertainty, conditioning instead only on the agent's most likely class, even though perception models often return full class distributions. To exploit this information, we present HAICU, a method for heterogeneous-agent trajectory forecasting that explicitly incorporates agents' class probabilities. We additionally present PUP, a new challenging real-world autonomous driving dataset, to investigate the impact of Perceptual Uncertainty in Prediction. It contains challenging crowded scenes with unfiltered agent class probabilities that reflect the long tail of current state-of-the-art perception systems. We demonstrate that incorporating class probabilities in trajectory forecasting significantly improves performance in the face of uncertainty, and enables new forecasting capabilities such as counterfactual predictions.

Graph Neural Network Reinforcement Learning for Autonomous Mobility-on-Demand Systems

Apr 23, 2021

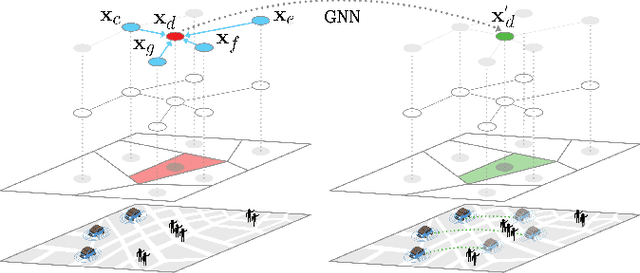

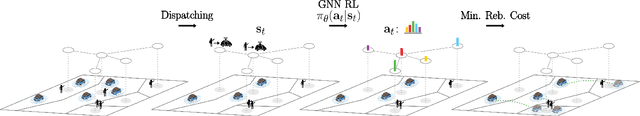

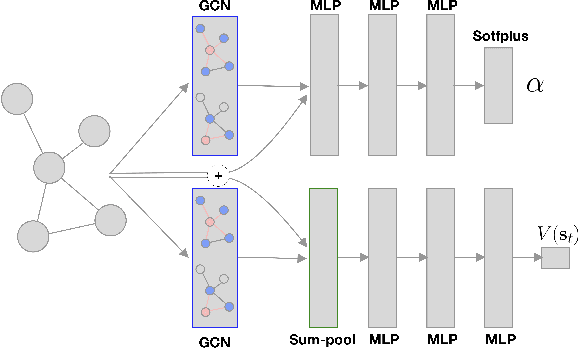

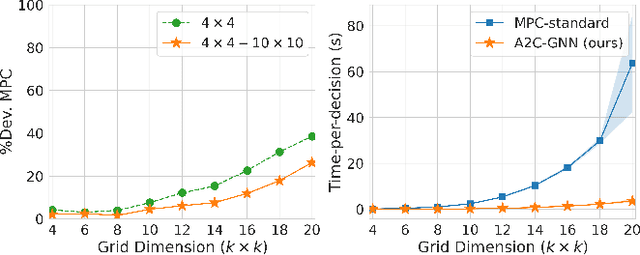

Abstract: Autonomous mobility-on-demand (AMoD) systems represent a rapidly developing mode of transportation wherein travel requests are dynamically handled by a coordinated fleet of robotic, self-driving vehicles. Given a graph representation of the transportation network - one where, for example, nodes represent areas of the city, and edges the connectivity between them - we argue that the AMoD control problem is naturally cast as a node-wise decision-making problem. In this paper, we propose a deep reinforcement learning framework to control the rebalancing of AMoD systems through graph neural networks. Crucially, we demonstrate that graph neural networks enable reinforcement learning agents to recover behavior policies that are significantly more transferable, generalizable, and scalable than policies learned through other approaches. Empirically, we show how the learned policies exhibit promising zero-shot transfer capabilities when faced with critical portability tasks such as inter-city generalization, service area expansion, and adaptation to potentially complex urban topologies.

Particle MPC for Uncertain and Learning-Based Control

Apr 13, 2021

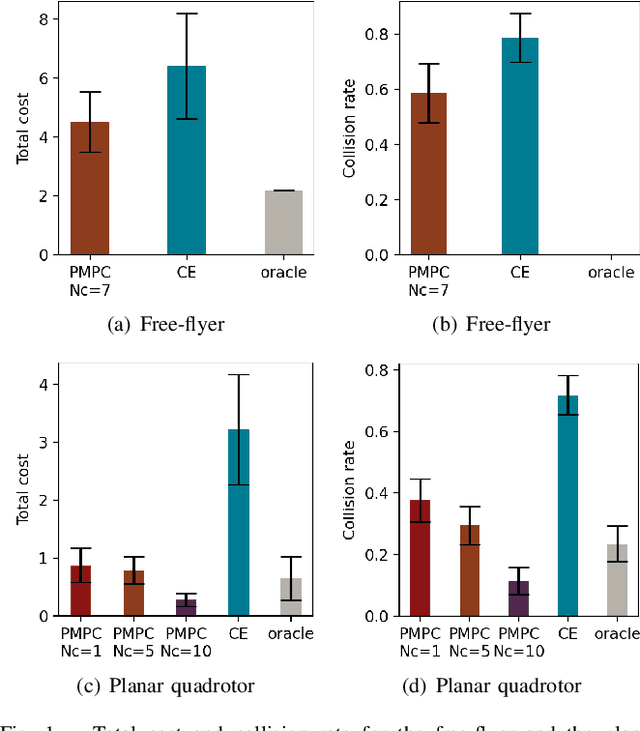

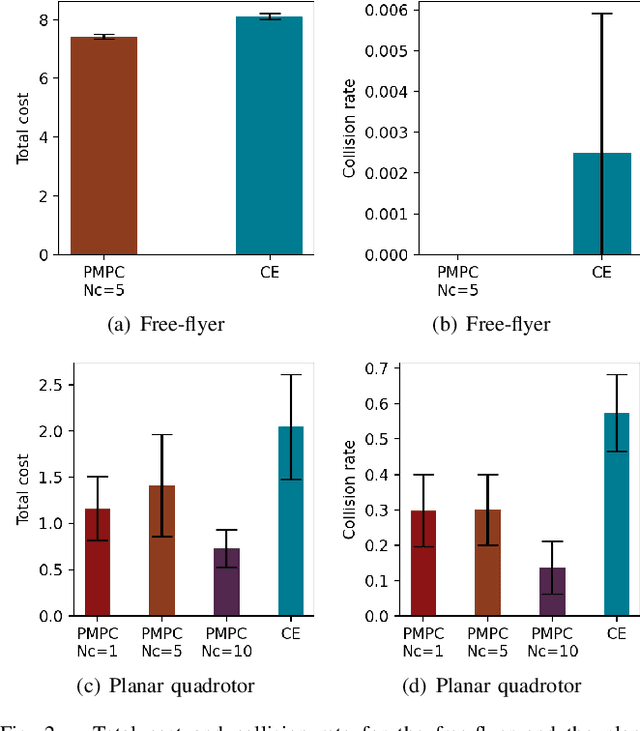

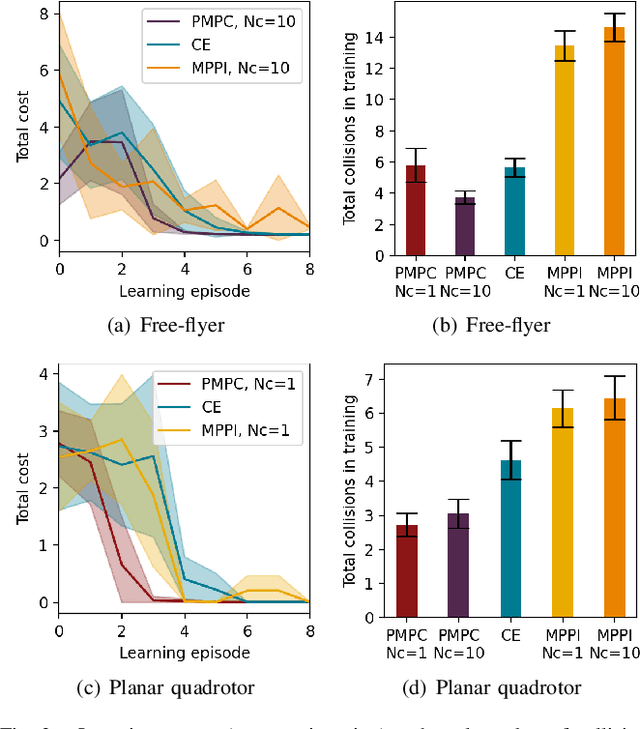

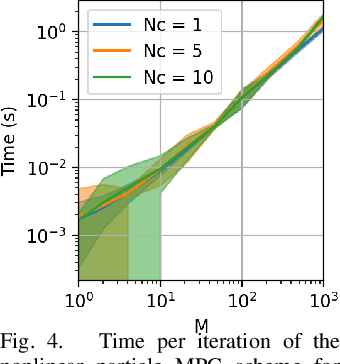

Abstract: As robotic systems move from highly structured environments to open worlds, incorporating uncertainty from dynamics learning or state estimation into the control pipeline is essential for robust performance. In this paper we present a nonlinear particle model predictive control (PMPC) approach to control under uncertainty, which directly incorporates any particle-based uncertainty representation, such as those common in robotics. Our approach builds on scenario methods for MPC, but in contrast to existing approaches, which either constrain all or only the first timestep to share actions across scenarios, we investigate the impact of a *partial consensus horizon*. Implementing this optimization for nonlinear dynamics by leveraging sequential convex optimization, our approach yields an efficient framework that can be tuned to the particular information gain dynamics of a system to mitigate both over-conservatism and over-optimism. We investigate our approach for two robotic systems across three problem settings: time-varying, partially observed dynamics; sensing uncertainty; and model-based reinforcement learning, and show that our approach improves performance over baselines in all settings.
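The partial consensus horizon can be pictured through the shape of the decision variables alone. The sketch below is illustrative and is not the paper's implementation: the function names, the scalar linear dynamics, and the quadratic cost are all assumptions. It builds one action sequence per particle (scenario), forcing the first few actions to be shared and leaving the rest scenario-specific, then averages a rollout cost over particles.

```python
from typing import List


def assemble_scenario_actions(shared: List[float],
                              tails: List[List[float]]) -> List[List[float]]:
    """Return one action sequence per scenario.

    The first len(shared) actions are identical across scenarios (the
    consensus horizon); the remaining actions are scenario-specific.
    """
    return [list(shared) + list(tail) for tail in tails]


def rollout_cost(x0: float, a: float, actions: List[float]) -> float:
    """Quadratic cost of a rollout under assumed dynamics x_next = a * x + u."""
    x, cost = x0, 0.0
    for u in actions:
        x = a * x + u
        cost += x * x + 0.1 * u * u
    return cost


# Three particles, each a hypothetical sample of the uncertain parameter `a`.
particles = [0.8, 1.0, 1.2]
shared_prefix = [-0.5, -0.2]                      # consensus horizon of 2 steps
tails = [[0.0, 0.0, 0.0], [-0.1, 0.0, 0.0], [-0.2, -0.1, 0.0]]

sequences = assemble_scenario_actions(shared_prefix, tails)
mean_cost = sum(rollout_cost(1.0, a, seq)
                for a, seq in zip(particles, sequences)) / len(particles)
```

Setting the shared prefix to the full horizon recovers the "all timesteps share actions" extreme, and a prefix of length one recovers the "only the first timestep" extreme mentioned in the abstract. An optimizer would choose `shared_prefix` and `tails` to minimize `mean_cost`; here they are fixed by hand.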

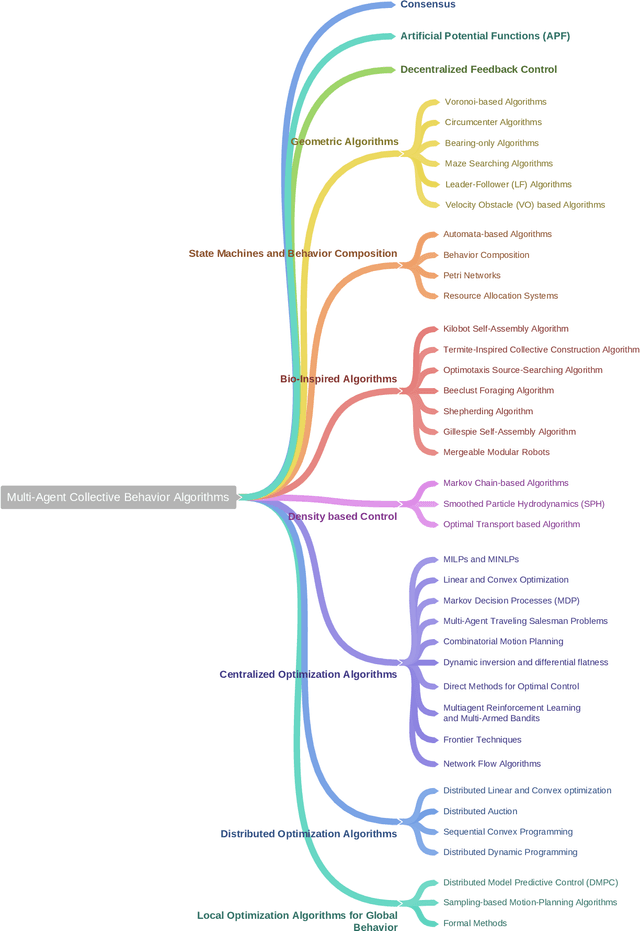

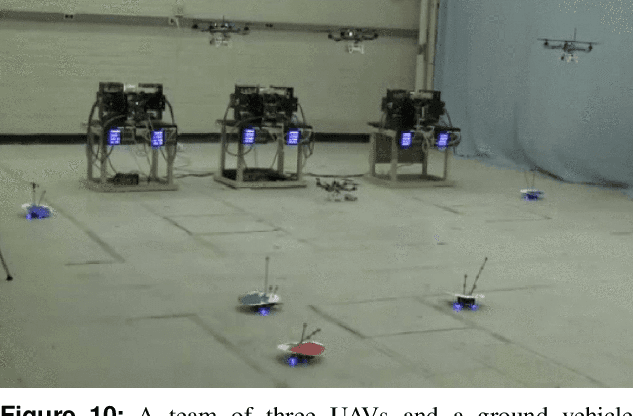

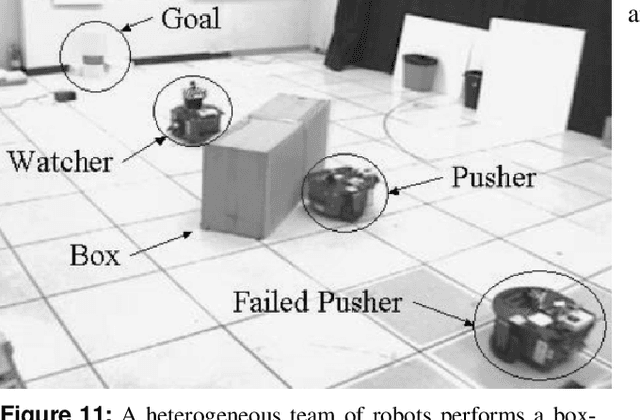

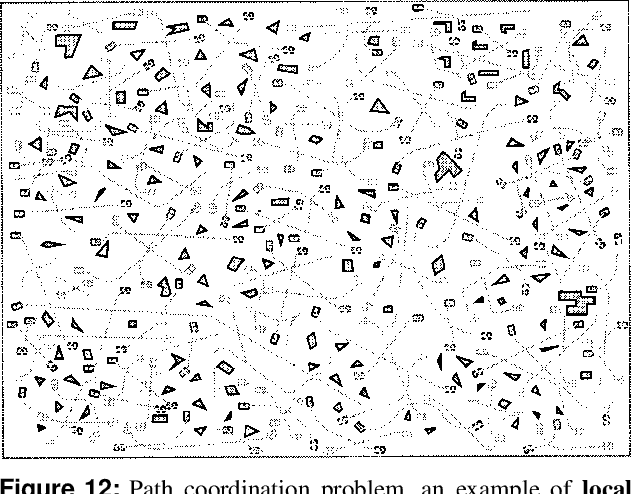

Multi-Agent Algorithms for Collective Behavior: A structural and application-focused atlas

Mar 20, 2021

Abstract: The goal of this paper is to provide a survey and application-focused atlas of collective behavior coordination algorithms for multi-agent systems. We survey the general family of collective behavior algorithms for multi-agent systems and classify them according to their underlying mathematical structure. In doing so, we aim to capture fundamental mathematical properties of algorithms (e.g., scalability with respect to the number of agents and bandwidth use) and to show how the same algorithm or family of algorithms can be used for multiple tasks and applications. Collectively, this paper provides an application-focused atlas of algorithms for collective behavior of multi-agent systems, with three objectives: 1. to act as a tutorial guide to practitioners in the selection of coordination algorithms for a given application; 2. to highlight how mathematically similar algorithms can be used for a variety of tasks, ranging from low-level control to high-level coordination; 3. to explore the state-of-the-art in the field of control of multi-agent systems and identify areas for future research.

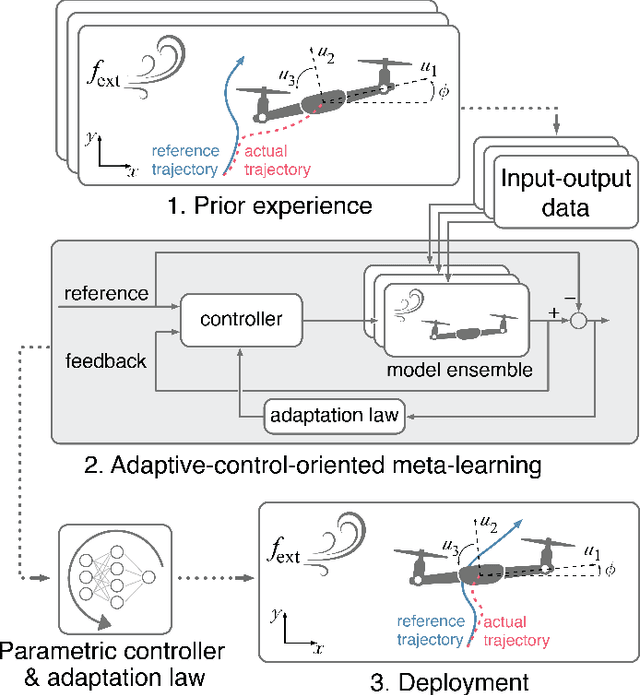

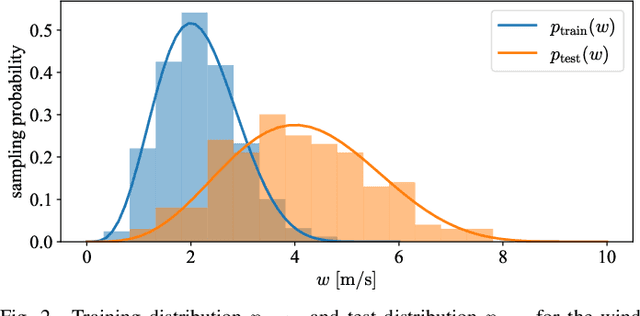

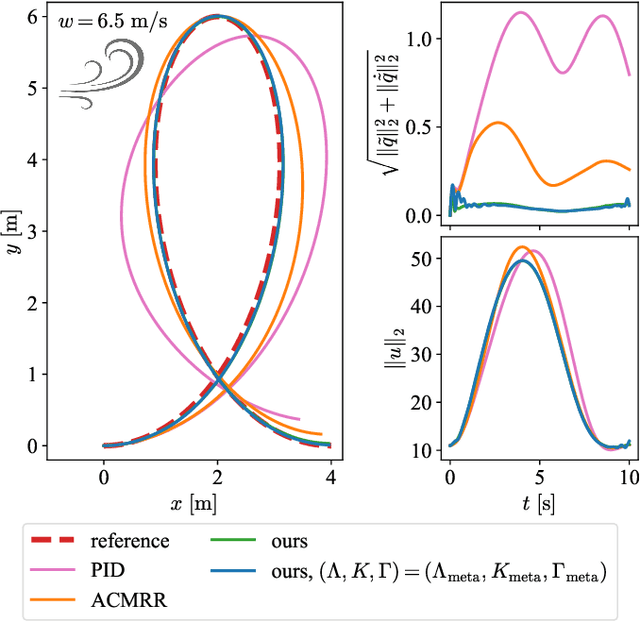

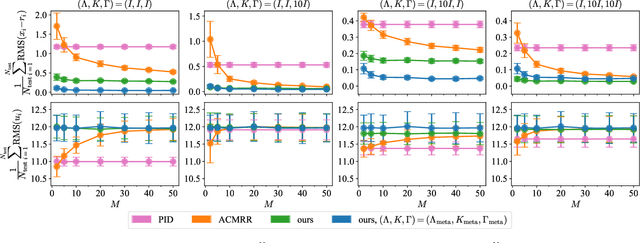

Adaptive-Control-Oriented Meta-Learning for Nonlinear Systems

Mar 07, 2021

Abstract: Real-time adaptation is imperative to the control of robots operating in complex, dynamic environments. Adaptive control laws can endow even nonlinear systems with good trajectory tracking performance, provided that any uncertain dynamics terms are linearly parameterizable with known nonlinear features. However, it is often difficult to specify such features a priori, such as for aerodynamic disturbances on rotorcraft or interaction forces between a manipulator arm and various objects. In this paper, we turn to data-driven modeling with neural networks to learn, offline from past data, an adaptive controller with an internal parametric model of these nonlinear features. Our key insight is that we can better prepare the controller for deployment with control-oriented meta-learning of features in closed-loop simulation, rather than regression-oriented meta-learning of features to fit input-output data. Specifically, we meta-learn the adaptive controller with closed-loop tracking simulation as the base-learner and the average tracking error as the meta-objective. With a nonlinear planar rotorcraft subject to wind, we demonstrate that our adaptive controller outperforms other controllers trained with regression-oriented meta-learning when deployed in closed-loop for trajectory tracking control.
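The premise that uncertain dynamics are "linearly parameterizable with known nonlinear features" can be made concrete with a textbook scalar example. The sketch below is not the paper's meta-learned controller: the plant `x_dot = theta * phi(x) + u`, the hand-picked feature `phi(x) = x`, and all gains are assumptions chosen for illustration. It shows a classical certainty-equivalence adaptive law driving the tracking error to zero without knowing `theta` in advance.

```python
def simulate_adaptive_tracking(theta: float = 1.5, k: float = 2.0,
                               gamma: float = 5.0, r: float = 1.0,
                               dt: float = 1e-3, steps: int = 10_000):
    """Euler simulation of a scalar adaptive tracking controller.

    Plant (assumed):   x_dot = theta * phi(x) + u, with phi(x) = x
    Control law:       u = -theta_hat * phi(x) - k * e, where e = x - r
    Adaptation law:    theta_hat_dot = gamma * e * phi(x)
    Returns the final tracking error and the final parameter estimate.
    """
    x, theta_hat = 0.0, 0.0
    for _ in range(steps):
        phi = x                          # known feature (hand-specified here)
        e = x - r                        # tracking error for constant reference r
        u = -theta_hat * phi - k * e     # cancel estimated term, add feedback
        x_dot = theta * phi + u          # true plant, theta unknown to controller
        theta_hat_dot = gamma * e * phi  # gradient-style adaptation law
        x += dt * x_dot
        theta_hat += dt * theta_hat_dot
    return x - r, theta_hat


final_error, final_theta_hat = simulate_adaptive_tracking()
```

With the Lyapunov function `V = e**2 / 2 + (theta_hat - theta)**2 / (2 * gamma)`, these laws give `V_dot = -k * e**2`, which is why the error decays. The abstract's point is that good features `phi` are often hard to write down by hand, which motivates learning them.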

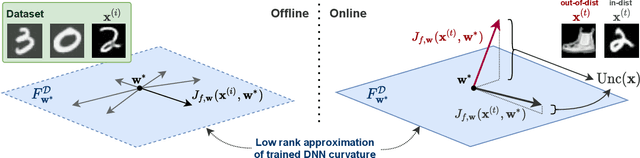

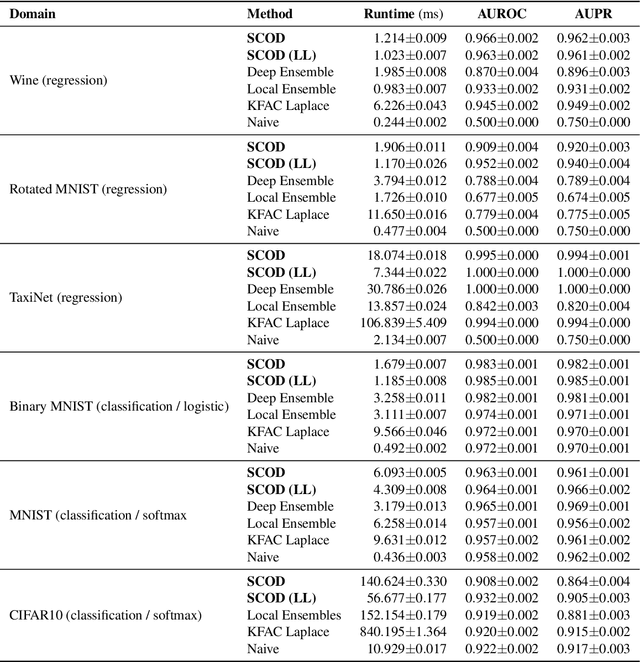

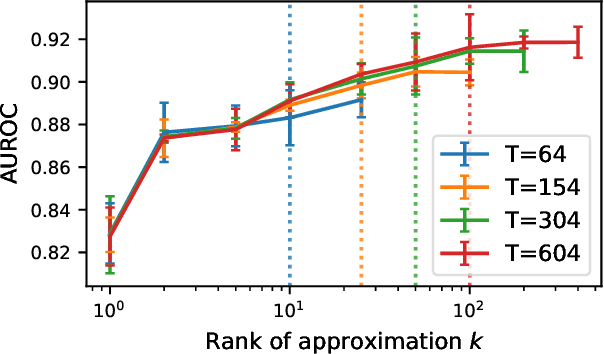

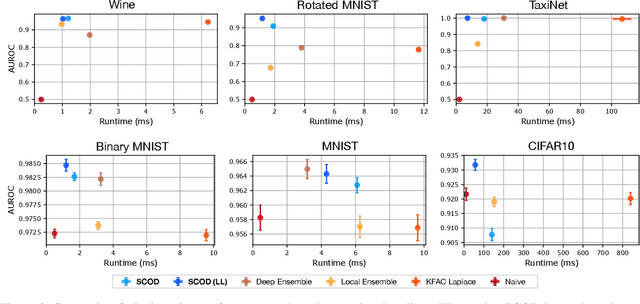

Sketching Curvature for Efficient Out-of-Distribution Detection for Deep Neural Networks

Feb 24, 2021

Abstract: In order to safely deploy Deep Neural Networks (DNNs) within the perception pipelines of real-time decision making systems, there is a need for safeguards that can detect out-of-training-distribution (OoD) inputs both efficiently and accurately. Building on recent work leveraging the local curvature of DNNs to reason about epistemic uncertainty, we propose Sketching Curvature for OoD Detection (SCOD), an architecture-agnostic framework for equipping any trained DNN with a task-relevant epistemic uncertainty estimate. Offline, given a trained model and its training data, SCOD employs tools from matrix sketching to tractably compute a low-rank approximation of the Fisher information matrix, which characterizes which directions in the weight space are most influential on the predictions over the training data. Online, we estimate uncertainty by measuring how much perturbations orthogonal to these directions can alter predictions at a new test input. We apply SCOD to pre-trained networks of varying architectures on several tasks, ranging from regression to classification. We demonstrate that SCOD achieves comparable or better OoD detection performance with lower computational burden relative to existing baselines.
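The online step described above, measuring how much weight perturbations orthogonal to the influential directions can change a prediction, can be approximated by a projection residual. The sketch below is a deliberately simplified stand-in and not SCOD itself: it assumes the influential directions are already available as an orthonormal basis, ignores the eigenvalue weighting and the matrix sketching used to obtain them, and the function name is hypothetical.

```python
from typing import List, Sequence


def orthogonal_residual_score(grad: Sequence[float],
                              basis: List[Sequence[float]]) -> float:
    """Squared norm of the part of `grad` orthogonal to span(basis).

    `grad` stands for the gradient of a prediction with respect to the
    weights at a test input. `basis` must be orthonormal; it stands for the
    weight-space directions most influential on the training predictions.
    A large score means the prediction is sensitive to weight changes that
    the training data did not constrain.
    """
    residual = list(grad)
    for u in basis:
        coeff = sum(g * ui for g, ui in zip(grad, u))
        residual = [r - coeff * ui for r, ui in zip(residual, u)]
    return sum(r * r for r in residual)


# Toy 3-dimensional weight space with two influential directions.
basis = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
score_in = orthogonal_residual_score([2.0, -1.0, 0.0], basis)   # inside the span
score_out = orthogonal_residual_score([0.5, 0.0, 3.0], basis)   # mostly outside
```

In this toy setup the first gradient lies entirely in the constrained subspace and scores zero, while the second has a large component along the unconstrained third axis and scores high.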

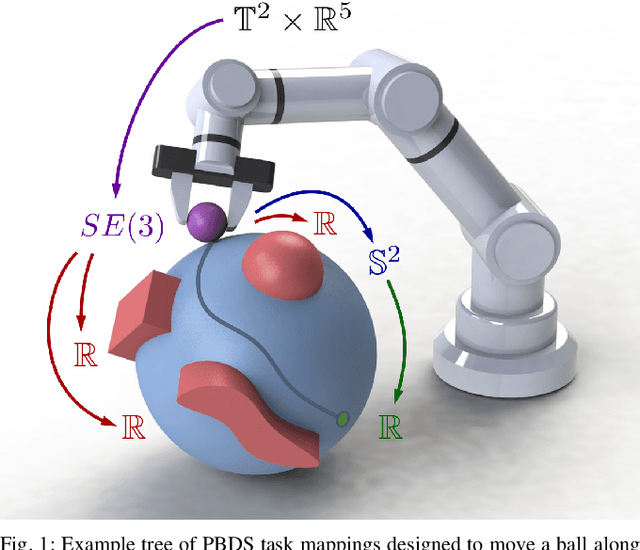

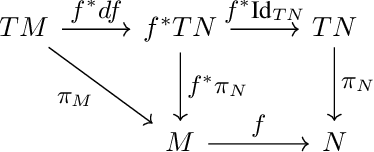

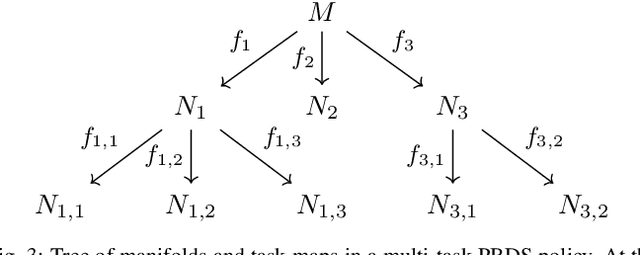

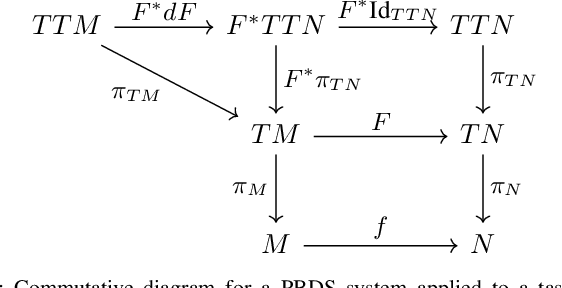

Composable Geometric Motion Policies using Multi-Task Pullback Bundle Dynamical Systems

Jan 05, 2021

Abstract: Despite decades of work in fast reactive planning and control, challenges remain in developing reactive motion policies on non-Euclidean manifolds and enforcing constraints while avoiding undesirable potential function local minima. This work presents a principled method for designing and fusing desired robot task behaviors into a stable robot motion policy, leveraging the geometric structure of non-Euclidean manifolds, which are prevalent in robot configuration and task spaces. Our Pullback Bundle Dynamical Systems (PBDS) framework drives desired task behaviors and prioritizes tasks using separate position-dependent and position/velocity-dependent Riemannian metrics, respectively, thus simplifying individual task design and modular composition of tasks. For enforcing constraints, we provide a class of metric-based tasks, eliminating local minima by imposing non-conflicting potential functions only for goal region attraction. We also provide a geometric optimization problem for combining tasks inspired by Riemannian Motion Policies (RMPs) that reduces to a simple least-squares problem, and we show that our approach is geometrically well-defined. We demonstrate the PBDS framework on the sphere $\mathbb S^2$ and at 300-500 Hz on a manipulator arm, and we provide task design guidance and an open-source Julia library implementation. Overall, this work presents a fast, easy-to-use framework for generating motion policies without unwanted potential function local minima on general manifolds.

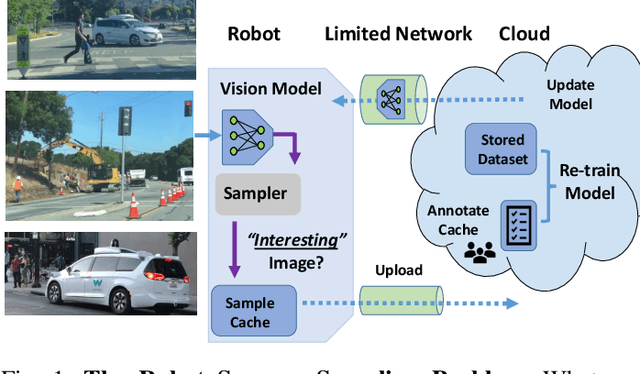

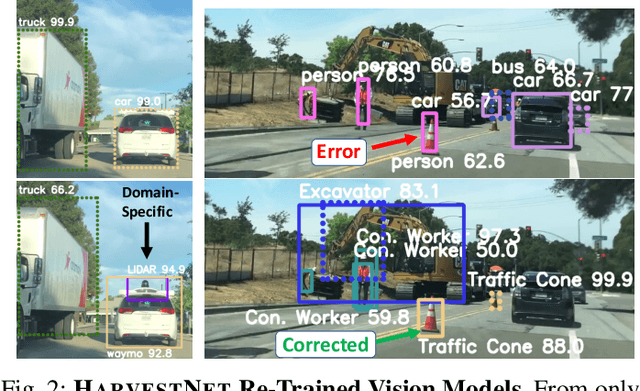

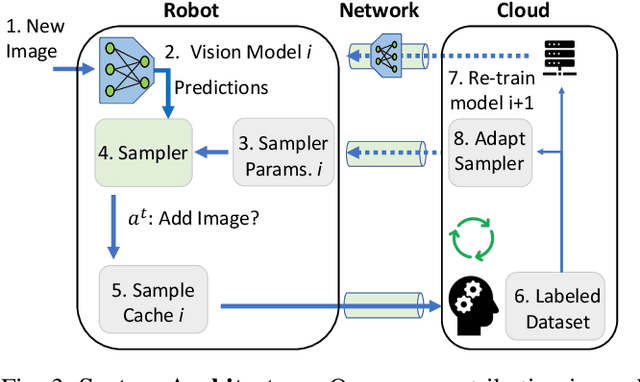

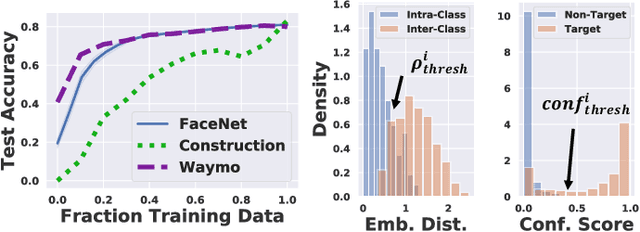

Sampling Training Data for Continual Learning Between Robots and the Cloud

Dec 12, 2020

Abstract: Today's robotic fleets are increasingly measuring high-volume video and LIDAR sensory streams, which can be mined for valuable training data, such as rare scenes of road construction sites, to steadily improve robotic perception models. However, re-training perception models on growing volumes of rich sensory data in central compute servers (or the "cloud") places an enormous time and cost burden on network transfer, cloud storage, human annotation, and cloud computing resources. Hence, we introduce HarvestNet, an intelligent sampling algorithm that resides on-board a robot and reduces system bottlenecks by only storing rare, useful events to steadily improve perception models re-trained in the cloud. HarvestNet significantly improves the accuracy of machine-learning models on our novel dataset of road construction sites, field testing of self-driving cars, and streaming face recognition, while reducing cloud storage, dataset annotation time, and cloud compute time by 65.7-81.3%. Further, it is 1.05-2.58x more accurate than baseline algorithms and scalably runs on embedded deep learning hardware. We provide a suite of compute-efficient perception models for the Google Edge Tensor Processing Unit (TPU), an extended technical report, and a novel video dataset to the research community at https://sites.google.com/view/harvestnet.

On Infusing Reachability-Based Safety Assurance within Planning Frameworks for Human-Robot Vehicle Interactions

Dec 06, 2020

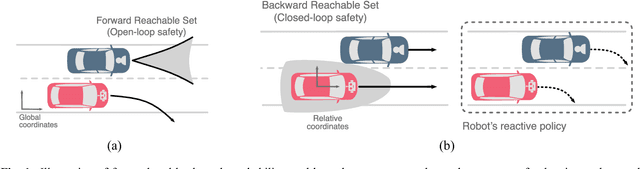

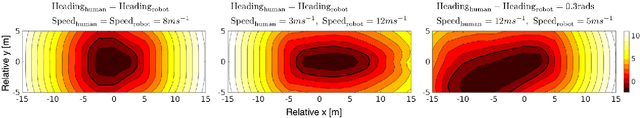

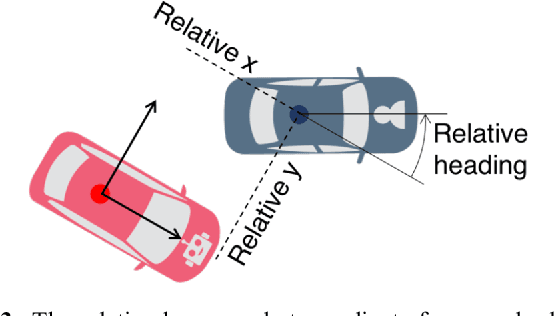

Abstract: Action anticipation, intent prediction, and proactive behavior are all desirable characteristics for autonomous driving policies in interactive scenarios. Paramount, however, is ensuring safety on the road -- a key challenge in doing so is accounting for uncertainty in human driver actions without unduly impacting planner performance. This paper introduces a minimally-interventional safety controller operating within an autonomous vehicle control stack with the role of ensuring collision-free interaction with an externally controlled (e.g., human-driven) counterpart while respecting static obstacles such as a road boundary wall. We leverage reachability analysis to construct a real-time (100 Hz) controller that serves the dual role of (i) tracking an input trajectory from a higher-level planning algorithm using model predictive control, and (ii) assuring safety by maintaining the availability of a collision-free escape maneuver as a persistent constraint regardless of the other car's future actions. A full-scale steer-by-wire platform is used to conduct traffic weaving experiments wherein two cars, initially side-by-side, must swap lanes in a limited amount of time and distance, emulating cars merging onto/off of a highway. We demonstrate that, with our control stack, the autonomous vehicle is able to avoid collision even when the other car defies the planner's expectations and takes dangerous actions, either carelessly or with the intent to collide, and otherwise deviates minimally from the planned trajectory to the extent required to maintain safety.

* arXiv admin note: text overlap with arXiv:1812.11315

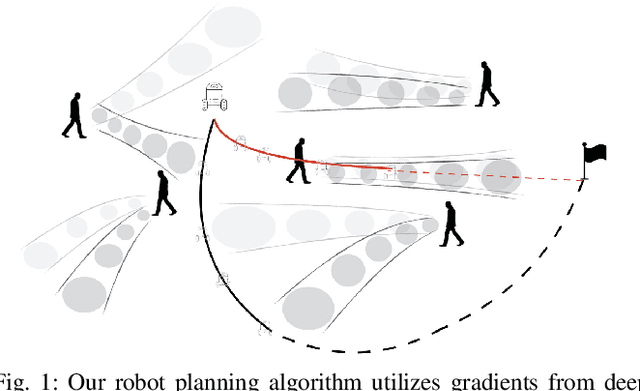

Leveraging Neural Network Gradients within Trajectory Optimization for Proactive Human-Robot Interactions

Dec 02, 2020

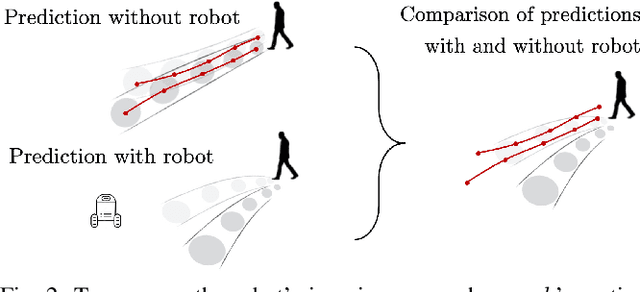

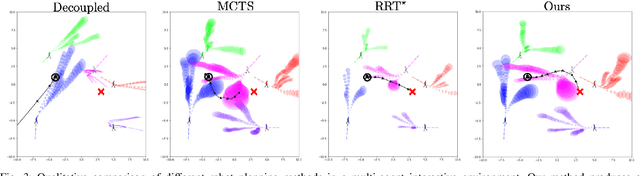

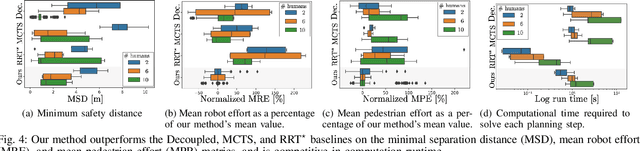

Abstract: To achieve seamless human-robot interactions, robots need to intimately reason about complex interaction dynamics and future human behaviors within their motion planning process. However, there is a disconnect between state-of-the-art neural network-based human behavior models and robot motion planners -- either the behavior models are limited in their consideration of downstream planning or a simplified behavior model is used to ensure tractability of the planning problem. In this work, we present a framework that fuses together the interpretability and flexibility of trajectory optimization (TO) with the predictive power of state-of-the-art human trajectory prediction models. In particular, we leverage gradient information from data-driven prediction models to explicitly reason about human-robot interaction dynamics within a gradient-based TO problem. We demonstrate the efficacy of our approach in a multi-agent scenario whereby a robot is required to safely and efficiently navigate through a crowd of up to ten pedestrians. We compare against a variety of planning methods, and show that by explicitly accounting for interaction dynamics within the planner, our method offers safer and more efficient behaviors, even yielding proactive and nuanced behaviors such as waiting for a pedestrian to pass before moving.
