Lu Li

LLaTTE: Scaling Laws for Multi-Stage Sequence Modeling in Large-Scale Ads Recommendation

Jan 27, 2026

Abstract:We present LLaTTE (LLM-Style Latent Transformers for Temporal Events), a scalable transformer architecture for production ads recommendation. Through systematic experiments, we demonstrate that sequence modeling in recommendation systems follows predictable power-law scaling similar to LLMs. Crucially, we find that semantic features bend the scaling curve: they are a prerequisite for scaling, enabling the model to effectively utilize the capacity of deeper and longer architectures. To realize the benefits of continued scaling under strict latency constraints, we introduce a two-stage architecture that offloads the heavy computation of large, long-context models to an asynchronous upstream user model. We demonstrate that upstream improvements transfer predictably to downstream ranking tasks. Deployed as the largest user model at Meta, this multi-stage framework drives a 4.3% conversion uplift on Facebook Feed and Reels with minimal serving overhead, establishing a practical blueprint for harnessing scaling laws in industrial recommender systems.

Nodule-DETR: A Novel DETR Architecture with Frequency-Channel Attention for Ultrasound Thyroid Nodule Detection

Jan 05, 2026

Abstract:Thyroid cancer is the most common endocrine malignancy, and its incidence is rising globally. While ultrasound is the preferred imaging modality for detecting thyroid nodules, its diagnostic accuracy is often limited by challenges such as low image contrast and blurred nodule boundaries. To address these issues, we propose Nodule-DETR, a novel detection transformer (DETR) architecture designed for robust thyroid nodule detection in ultrasound images. Nodule-DETR introduces three key innovations: a Multi-Spectral Frequency-domain Channel Attention (MSFCA) module that leverages frequency analysis to enhance features of low-contrast nodules; a Hierarchical Feature Fusion (HFF) module for efficient multi-scale integration; and Multi-Scale Deformable Attention (MSDA) to flexibly capture small and irregularly shaped nodules. We conducted extensive experiments on a clinical dataset of real-world thyroid ultrasound images. The results demonstrate that Nodule-DETR achieves state-of-the-art performance, outperforming the baseline model by a significant margin of 0.149 in mAP@0.5:0.95. The superior accuracy of Nodule-DETR highlights its significant potential for clinical application as an effective tool in computer-aided thyroid diagnosis. The code is available at https://github.com/wjj1wjj/Nodule-DETR.

Autonomously Unweaving Multiple Cables Using Visual Feedback

Dec 13, 2025

Abstract:Many cable management tasks involve separating out the different cables and removing tangles. Automating this task is challenging because cables are deformable and can have combinations of knots and multiple interwoven segments. Prior works have focused on untying knots in one cable, which is one subtask of cable management. However, in this paper, we focus on a different subtask called multi-cable unweaving, which refers to removing the intersections among multiple interwoven cables to separate them and facilitate further manipulation. We propose a method that utilizes visual feedback to unweave a bundle of loosely entangled cables. We formulate cable unweaving as a pick-and-place problem, where the grasp position is selected from discrete nodes in a graph-based cable state representation. Our cable state representation encodes both topological and geometric information about the cables from the visual image. To predict future cable states and identify valid actions, we present a novel state transition model that takes into account the straightening and bending of cables during manipulation. Using this state transition model, we select between two high-level action primitives and calculate predicted immediate costs to optimize the lower-level actions. We experimentally demonstrate that iterating the above perception-planning-action process enables unweaving electric cables and shoelaces with an 84% success rate on average.

Scaling Latent Reasoning via Looped Language Models

Oct 29, 2025

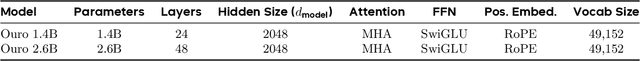

Abstract:Modern LLMs are trained to "think" primarily via explicit text generation, such as chain-of-thought (CoT), which defers reasoning to post-training and under-leverages pre-training data. We present and open-source Ouro, named after the recursive Ouroboros, a family of pre-trained Looped Language Models (LoopLM) that instead build reasoning into the pre-training phase through (i) iterative computation in latent space, (ii) an entropy-regularized objective for learned depth allocation, and (iii) scaling to 7.7T tokens. The Ouro 1.4B and 2.6B models achieve performance that matches the results of SOTA LLMs of up to 12B parameters across a wide range of benchmarks. Through controlled experiments, we show this advantage stems not from increased knowledge capacity, but from superior knowledge manipulation capabilities. We also show that LoopLM yields reasoning traces more aligned with final outputs than explicit CoT. We hope our results show the potential of LoopLM as a novel scaling direction in the reasoning era. Our models can be found at http://ouro-llm.github.io.

Bag-of-Word-Groups (BoWG): A Robust and Efficient Loop Closure Detection Method Under Perceptual Aliasing

Oct 26, 2025

Abstract:Loop closure is critical in Simultaneous Localization and Mapping (SLAM) systems to reduce accumulative drift and ensure global mapping consistency. However, conventional methods struggle in perceptually aliased environments, such as narrow pipes, due to vector quantization, feature sparsity, and repetitive textures, while existing solutions often incur high computational costs. This paper presents Bag-of-Word-Groups (BoWG), a novel loop closure detection method that achieves superior precision-recall, robustness, and computational efficiency. The core innovation lies in the introduction of word groups, which capture the spatial co-occurrence and proximity of visual words to construct an online dictionary. Additionally, drawing inspiration from probabilistic transition models, we incorporate temporal consistency directly into similarity computation with an adaptive scheme, substantially improving precision-recall performance. The method is further strengthened by a feature distribution analysis module and dedicated post-verification mechanisms. To evaluate the effectiveness of our method, we conduct experiments on both public datasets and a confined-pipe dataset we constructed. Results demonstrate that BoWG surpasses state-of-the-art methods, including both traditional and learning-based approaches, in terms of precision-recall and computational efficiency. Our approach also exhibits excellent scalability, achieving an average processing time of 16 ms per image across 17,565 images in the Bicocca25b dataset.

The Three Regimes of Offline-to-Online Reinforcement Learning

Oct 01, 2025

Abstract:Offline-to-online reinforcement learning (RL) has emerged as a practical paradigm that leverages offline datasets for pretraining and online interactions for fine-tuning. However, its empirical behavior is highly inconsistent: design choices for online fine-tuning that work well in one setting can fail completely in another. We propose a stability–plasticity principle that can explain this inconsistency: during online fine-tuning, we should preserve the knowledge of the pretrained policy or the offline dataset, whichever is better, while maintaining sufficient plasticity. This perspective identifies three regimes of online fine-tuning, each requiring distinct stability properties. We validate this framework through a large-scale empirical study, finding that the results strongly align with its predictions in 45 of 63 cases. This work provides a principled framework for guiding design choices in offline-to-online RL based on the relative performance of the offline dataset and the pretrained policy.

Empowering Clinical Trial Design through AI: A Randomized Evaluation of PowerGPT

Sep 15, 2025

Abstract:Sample size calculations for power analysis are critical for clinical research and trial design, yet their complexity and reliance on statistical expertise create barriers for many researchers. We introduce PowerGPT, an AI-powered system integrating large language models (LLMs) with statistical engines to automate test selection and sample size estimation in trial design. In a randomized trial to evaluate its effectiveness, PowerGPT significantly improved task completion rates (99.3% vs. 88.9% for test selection, 99.3% vs. 77.8% for sample size calculation) and accuracy (94.1% vs. 55.4% in sample size estimation, p < 0.001), while reducing average completion time (4.0 vs. 9.3 minutes, p < 0.001). These gains were consistent across various statistical tests and benefited both statisticians and non-statisticians, bridging expertise gaps. Already under deployment across multiple institutions, PowerGPT represents a scalable AI-driven approach that enhances accessibility, efficiency, and accuracy in statistical power analysis for clinical research.

Stable Gradients for Stable Learning at Scale in Deep Reinforcement Learning

Jun 18, 2025

Abstract:Scaling deep reinforcement learning networks is challenging and often results in degraded performance, yet the root causes of this failure mode remain poorly understood. Several recent works have proposed mechanisms to address this, but they are often complex and fail to highlight the causes underlying this difficulty. In this work, we conduct a series of empirical analyses which suggest that the combination of non-stationarity with gradient pathologies, due to suboptimal architectural choices, underlies the challenges of scale. We propose a series of direct interventions that stabilize gradient flow, enabling robust performance across a range of network depths and widths. Our interventions are simple to implement and compatible with well-established algorithms, and result in an effective mechanism that enables strong performance even at large scales. We validate our findings on a variety of agents and suites of environments.

SVD-Based Graph Fractional Fourier Transform on Directed Graphs and Its Application

Jun 04, 2025

Abstract:Graph fractional Fourier transform (GFRFT) is an extension of graph Fourier transform (GFT) that provides an additional fractional analysis tool for graph signal processing (GSP) by generalizing temporal-vertex domain Fourier analysis to fractional orders. In recent years, a large number of studies on GFRFT based on undirected graphs have emerged, but there are very few studies on directed graphs. Therefore, in this paper, one of our main contributions is to introduce two novel GFRFTs defined on the Cartesian product graph of two directed graphs, by performing singular value decomposition on graph fractional Laplacian matrices. We prove that the two proposed GFRFTs can effectively express spatial-temporal data sets on directed graphs with strong correlation. Moreover, we extend the theoretical results to a generalized Cartesian product graph, which is constructed from $m$ directed graphs. Finally, the denoising performance of the two proposed GFRFTs is verified through simulation by processing hourly temperature data sets collected from 32 weather stations in the Brest region of France.

Advancing Image Super-resolution Techniques in Remote Sensing: A Comprehensive Survey

May 29, 2025

Abstract:Remote sensing image super-resolution (RSISR) is a crucial task in remote sensing image processing, aiming to reconstruct high-resolution (HR) images from their low-resolution (LR) counterparts. Despite the growing number of RSISR methods proposed in recent years, a systematic and comprehensive review of these methods is still lacking. This paper presents a thorough review of RSISR algorithms, covering methodologies, datasets, and evaluation metrics. We provide an in-depth analysis of RSISR methods, categorizing them into supervised, unsupervised, and quality evaluation approaches, to help researchers understand current trends and challenges. Our review also discusses the strengths, limitations, and inherent challenges of these techniques. Notably, our analysis reveals significant limitations in existing methods, particularly in preserving fine-grained textures and geometric structures under large-scale degradation. Based on these findings, we outline future research directions, highlighting the need for domain-specific architectures and robust evaluation protocols to bridge the gap between synthetic and real-world RSISR scenarios.
