Li Wang

Northeast Normal University

Deep Factorization Model for Robust Recommendation

Nov 05, 2022

Abstract:Recently, malevolent user hacking has become a huge problem for real-world companies. In order to learn predictive models for recommender systems, factorization techniques have been developed to deal with user-item ratings. In this paper, we suggest a broad architecture of a factorization model with adversarial training to get over these issues. The effectiveness of our systems is demonstrated by experimental findings on real-world datasets.

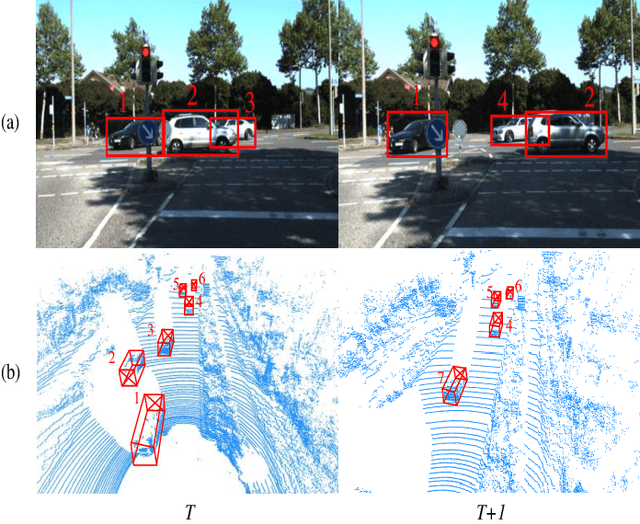

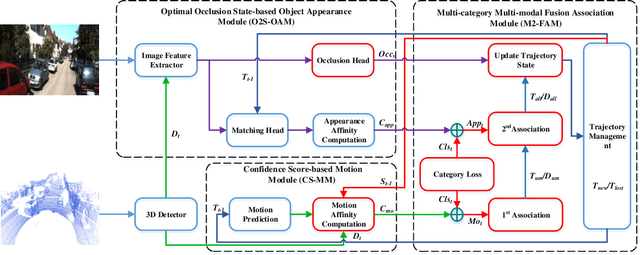

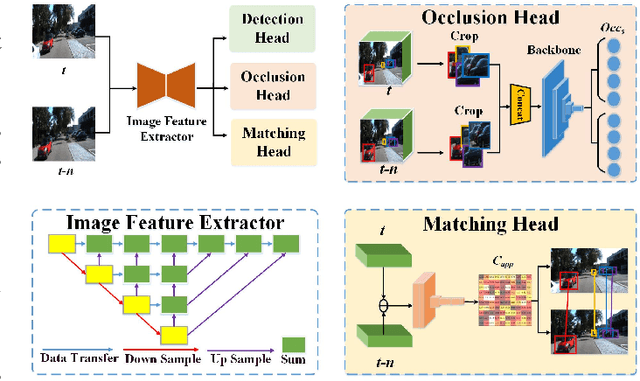

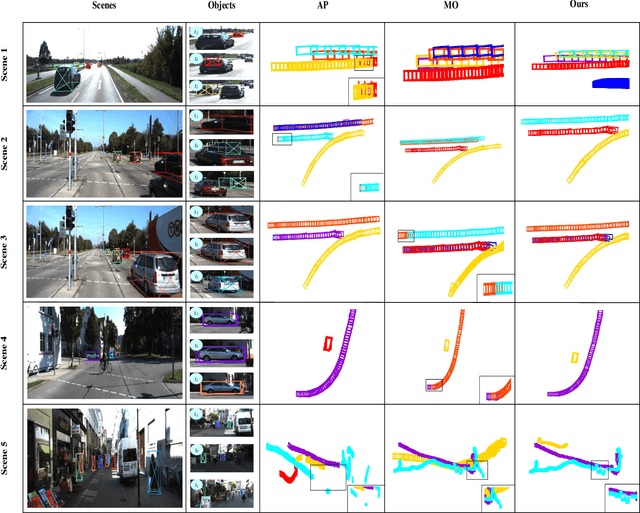

CAMO-MOT: Combined Appearance-Motion Optimization for 3D Multi-Object Tracking with Camera-LiDAR Fusion

Sep 12, 2022

Abstract:3D Multi-object tracking (MOT) ensures consistency during continuous dynamic detection, conducive to subsequent motion planning and navigation tasks in autonomous driving. However, camera-based methods suffer in the case of occlusions, and it can be challenging for LiDAR-based methods to accurately track the irregular motion of objects. Some fusion methods work well but do not consider the untrustworthy issue of appearance features under occlusion. At the same time, the false detection problem also significantly affects tracking. As such, we propose a novel camera-LiDAR fusion 3D MOT framework based on the Combined Appearance-Motion Optimization (CAMO-MOT), which uses both camera and LiDAR data and significantly reduces tracking failures caused by occlusion and false detection. For occlusion problems, we are the first to propose an occlusion head to select the best object appearance features multiple times effectively, reducing the influence of occlusions. To decrease the impact of false detection in tracking, we design a motion cost matrix based on confidence scores, which improves the positioning and object prediction accuracy in 3D space. As existing multi-object tracking methods only consider a single category, we also propose to build a multi-category loss to implement multi-object tracking in multi-category scenes. A series of validation experiments are conducted on the KITTI and nuScenes tracking benchmarks. Our proposed method achieves state-of-the-art performance and the lowest identity switches (IDS) value (23 for Car and 137 for Pedestrian) among all multi-modal MOT methods on the KITTI test dataset. It also achieves state-of-the-art performance among all algorithms on the nuScenes test dataset with 75.3% AMOTA.

Private, Efficient, and Accurate: Protecting Models Trained by Multi-party Learning with Differential Privacy

Aug 18, 2022

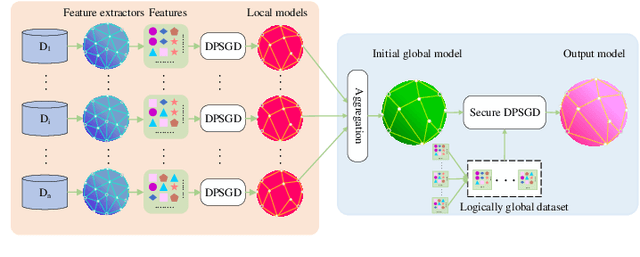

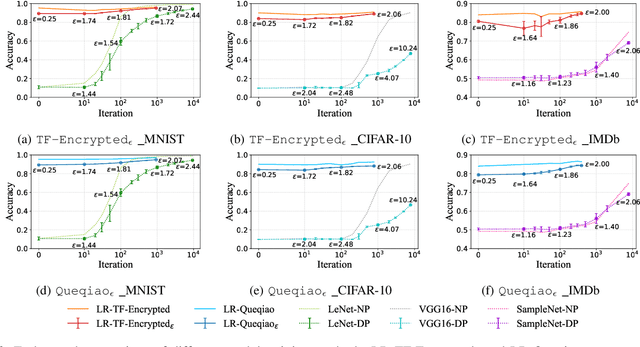

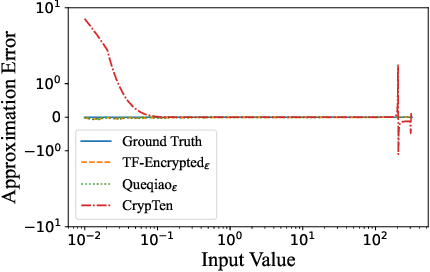

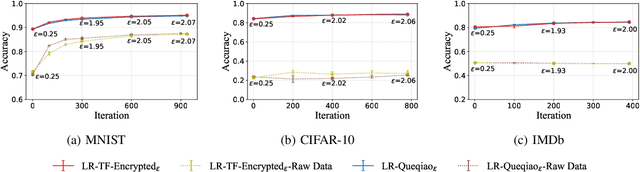

Abstract:Secure multi-party computation-based machine learning, referred to as MPL, has become an important technology to utilize data from multiple parties with privacy preservation. While MPL provides rigorous security guarantees for the computation process, the models trained by MPL are still vulnerable to attacks that solely depend on access to the models. Differential privacy could help to defend against such attacks. However, the accuracy loss brought by differential privacy and the huge communication overhead of secure multi-party computation protocols make it highly challenging to balance the 3-way trade-off between privacy, efficiency, and accuracy. In this paper, we are motivated to resolve the above issue by proposing a solution, referred to as PEA (Private, Efficient, Accurate), which consists of a secure DPSGD protocol and two optimization methods. First, we propose a secure DPSGD protocol to enforce DPSGD in secret sharing-based MPL frameworks. Second, to reduce the accuracy loss caused by differential privacy noise and the huge communication overhead of MPL, we propose two optimization methods for the training process of MPL: (1) the data-independent feature extraction method, which aims to simplify the trained model structure; (2) the local data-based global model initialization method, which aims to speed up the convergence of the model training. We implement PEA in two open-source MPL frameworks: TF-Encrypted and Queqiao. The experimental results on various datasets demonstrate the efficiency and effectiveness of PEA. For example, when $\epsilon = 2$, we can train a differentially private classification model with an accuracy of 88% for CIFAR-10 within 7 minutes under the LAN setting. This result significantly outperforms the one from CryptGPU, one SOTA MPL framework: it costs more than 16 hours to train a non-private deep neural network model on CIFAR-10 with the same accuracy.
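For readers unfamiliar with DPSGD, the following is a minimal plaintext sketch of one standard DP-SGD update: clip each per-example gradient, sum, add Gaussian noise, and average. It is not the secure protocol from the abstract, which runs on secret-shared values; the function names (`clip_gradient`, `dpsgd_step`) and all parameters are illustrative assumptions.

```python
import math
import random


def clip_gradient(grad, clip_norm):
    """Scale grad so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dpsgd_step(weights, per_example_grads, lr, clip_norm, noise_multiplier, rng):
    """One plaintext DP-SGD update (hypothetical helper, not the paper's protocol).

    Each example's gradient is clipped to bound its influence, the clipped
    gradients are summed, Gaussian noise with standard deviation
    noise_multiplier * clip_norm is added per coordinate, and the noisy sum
    is averaged over the batch before the gradient step.
    """
    dim = len(weights)
    clipped = [clip_gradient(g, clip_norm) for g in per_example_grads]
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    sigma = noise_multiplier * clip_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    batch_size = len(per_example_grads)
    return [w - lr * n / batch_size for w, n in zip(weights, noisy)]


if __name__ == "__main__":
    rng = random.Random(0)
    grads = [[3.0, 4.0], [0.3, 0.4]]  # toy per-example gradients
    print(dpsgd_step([0.0, 0.0], grads, lr=0.1, clip_norm=1.0,
                     noise_multiplier=1.1, rng=rng))
```

The privacy parameter $\epsilon$ is not computed here; in practice it is derived from the noise multiplier, sampling rate, and number of steps by a separate privacy accountant.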

Scalable and Sparsity-Aware Privacy-Preserving K-means Clustering with Application to Fraud Detection

Aug 12, 2022

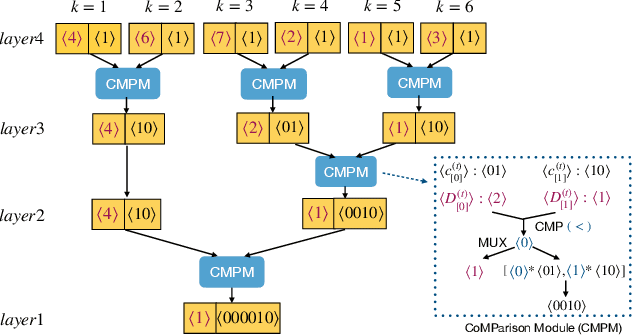

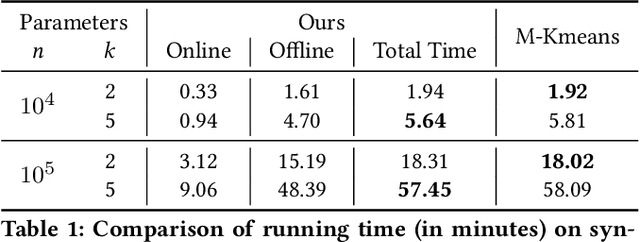

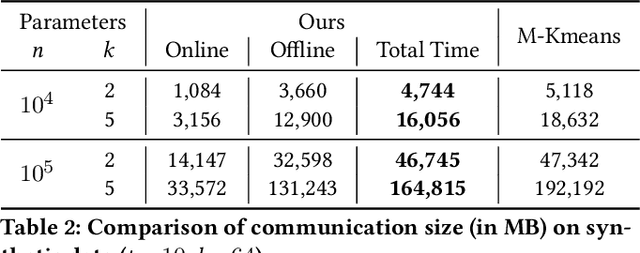

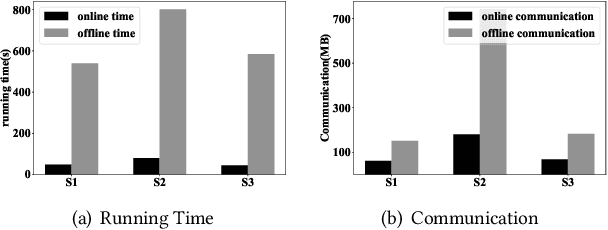

Abstract:K-means is one of the most widely used clustering models in practice. Due to the problem of data isolation and the requirement for high model performance, how to jointly build practical and secure K-means for multiple parties has become an important topic for many applications in the industry. Existing work on this is mainly of two types. The first type has efficiency advantages, but information leakage raises potential privacy risks. The second type is provably secure but is inefficient and even impractical for the large-scale data sparsity scenario. In this paper, we propose a new framework for efficient sparsity-aware K-means with three characteristics. First, our framework is divided into a data-independent offline phase and a much faster online phase, and the offline phase allows almost all cryptographic operations to be pre-computed. Second, we take advantage of the vectorization techniques in both online and offline phases. Third, we adopt a sparse matrix multiplication for the data sparsity scenario to improve efficiency further. We conduct comprehensive experiments on three synthetic datasets and deploy our model in a real-world fraud detection task. Our experimental results show that, compared with the state-of-the-art solution, our model achieves competitive performance in terms of both running time and communication size, especially on sparse datasets.
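To illustrate why sparsity helps in the K-means assignment step, here is a small plaintext sketch with no cryptography, so it is not the framework described in the abstract. It uses the identity $\|x - c\|^2 = \|x\|^2 - 2\,x \cdot c + \|c\|^2$, so that the dot product only touches the nonzero entries of each point. The dict-based sparse representation and the names `sparse_dot` and `assign_clusters` are assumptions made for this example.

```python
def sparse_dot(x_sparse, centroid):
    """Dot product of a sparse point ({index: value}) with a dense centroid."""
    return sum(value * centroid[index] for index, value in x_sparse.items())


def assign_clusters(points, centroids):
    """Assign each sparse point to its nearest dense centroid.

    ||x||^2 is identical for every centroid, so it is dropped from the
    comparison; only ||c||^2 - 2 * (x . c) is needed to find the minimum.
    """
    centroid_sq_norms = [sum(c_i * c_i for c_i in c) for c in centroids]
    labels = []
    for x in points:
        scores = [
            centroid_sq_norms[k] - 2.0 * sparse_dot(x, c)
            for k, c in enumerate(centroids)
        ]
        labels.append(min(range(len(centroids)), key=scores.__getitem__))
    return labels


if __name__ == "__main__":
    points = [{0: 1.0}, {2: 5.0}]  # two sparse points in 3 dimensions
    centroids = [[1.0, 0.0, 0.0], [0.0, 0.0, 4.0]]
    print(assign_clusters(points, centroids))
```

The cost of each dot product scales with the number of nonzeros in the point rather than with the full dimension, which is the general motivation for sparse matrix multiplication in this setting.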

Longitudinal Prediction of Postnatal Brain Magnetic Resonance Images via a Metamorphic Generative Adversarial Network

Aug 09, 2022

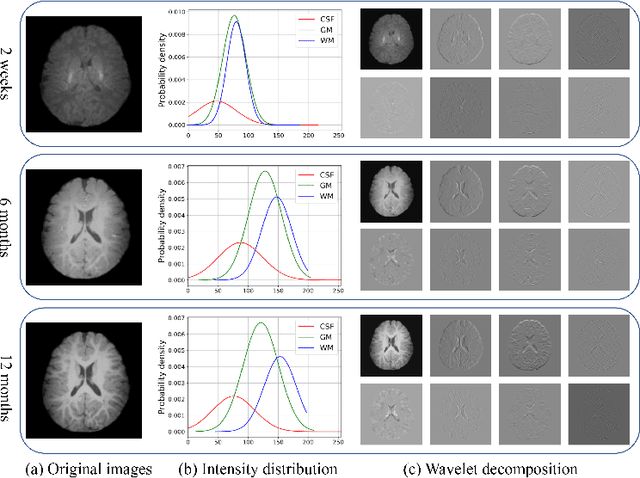

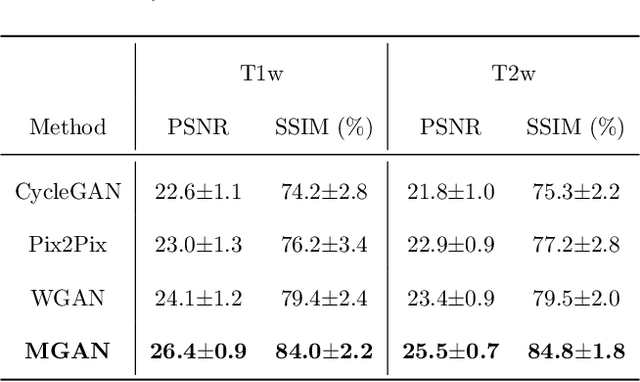

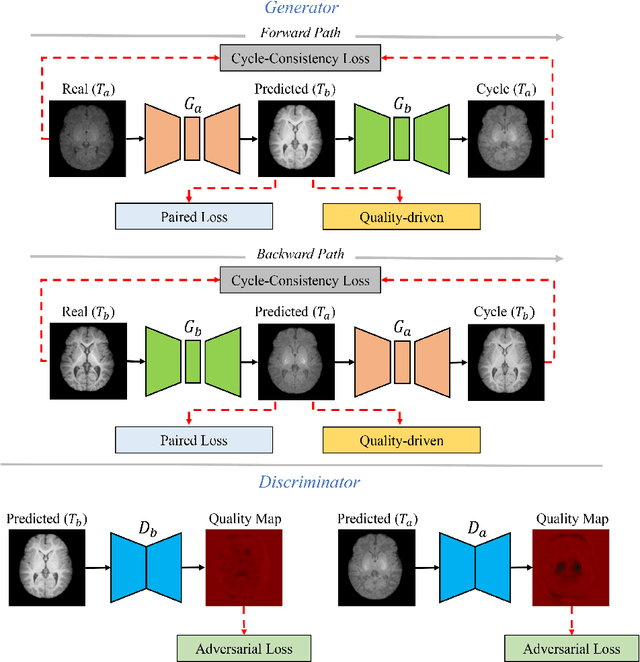

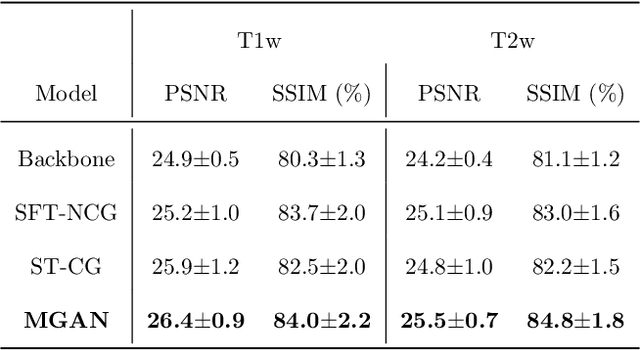

Abstract:Missing scans are inevitable in longitudinal studies due to either subject dropouts or failed scans. In this paper, we propose a deep learning framework to predict missing scans from acquired scans, catering to longitudinal infant studies. Prediction of infant brain MRI is challenging owing to the rapid contrast and structural changes, particularly during the first year of life. We introduce a trustworthy metamorphic generative adversarial network (MGAN) for translating infant brain MRI from one time-point to another. MGAN has three key features: (i) Image translation leveraging spatial and frequency information for detail-preserving mapping; (ii) Quality-guided learning strategy that focuses attention on challenging regions; (iii) Multi-scale hybrid loss function that improves translation of tissue contrast and structural details. Experimental results indicate that MGAN outperforms existing GANs by accurately predicting both contrast and anatomical details.

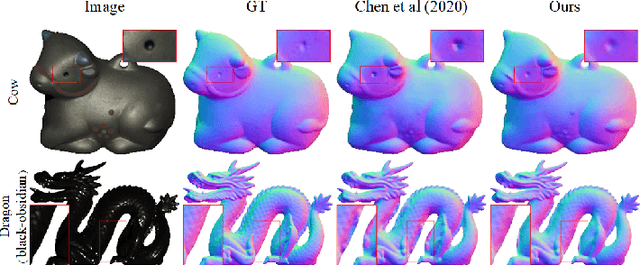

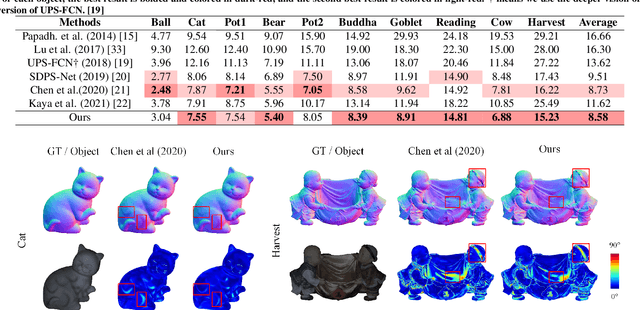

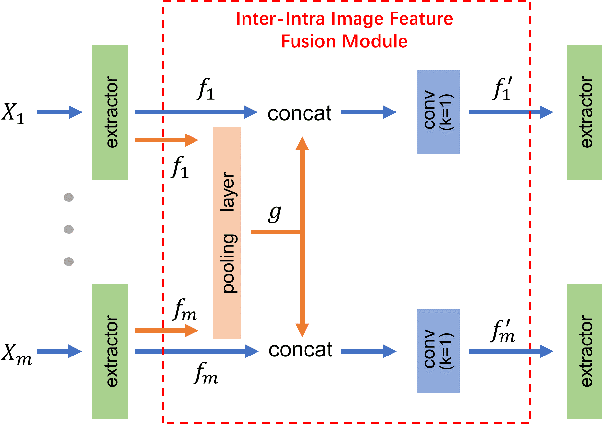

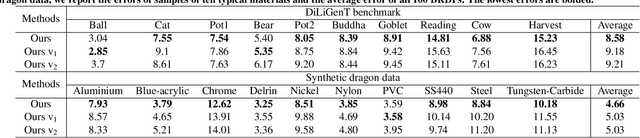

Deep Uncalibrated Photometric Stereo via Inter-Intra Image Feature Fusion

Aug 06, 2022

Abstract:Uncalibrated photometric stereo is proposed to estimate the detailed surface normal from images under varying and unknown lightings. Recently, deep learning brings powerful data priors to this underdetermined problem. This paper presents a new method for deep uncalibrated photometric stereo, which efficiently utilizes the inter-image representation to guide the normal estimation. Previous methods use optimization-based neural inverse rendering or a single size-independent pooling layer to deal with multiple inputs, which are inefficient for utilizing information among input images. Given multi-images under different lighting, we consider the intra-image and inter-image variations highly correlated. Motivated by the correlated variations, we designed an inter-intra image feature fusion module to introduce the inter-image representation into the per-image feature extraction. The extra representation is used to guide the per-image feature extraction and eliminate the ambiguity in normal estimation. We demonstrate the effect of our design on a wide range of samples, especially on dark materials. Our method produces significantly better results than the state-of-the-art methods on both synthetic and real data.

Convolutional Embedding Makes Hierarchical Vision Transformer Stronger

Aug 01, 2022

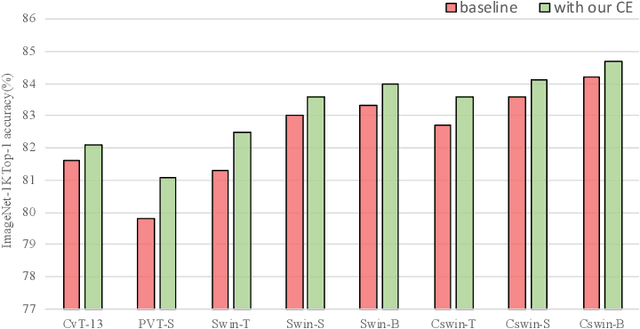

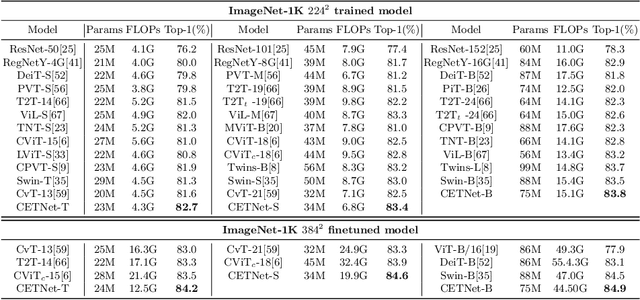

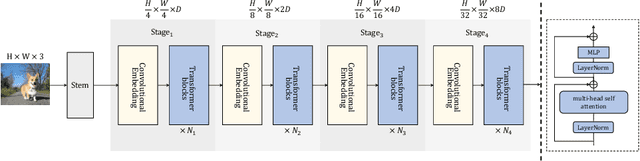

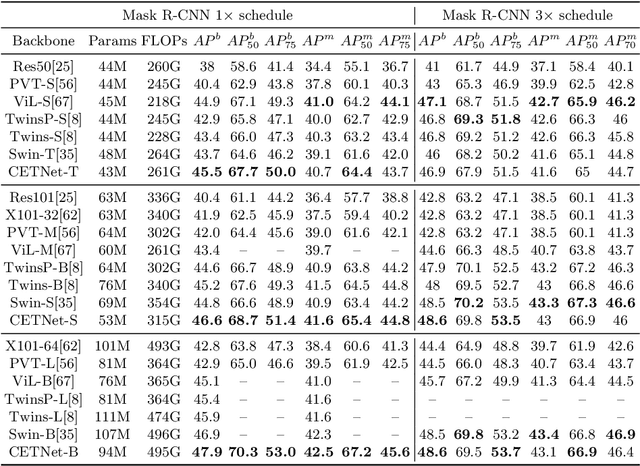

Abstract:Vision Transformers (ViTs) have recently dominated a range of computer vision tasks, yet they suffer from low training data efficiency and inferior local semantic representation capability without appropriate inductive bias. Convolutional neural networks (CNNs) inherently capture regional-aware semantics, inspiring researchers to introduce CNNs back into the architecture of the ViTs to provide desirable inductive bias for ViTs. However, is the locality achieved by the micro-level CNNs embedded in ViTs good enough? In this paper, we investigate the problem by profoundly exploring how the macro architecture of the hybrid CNNs/ViTs enhances the performances of hierarchical ViTs. Particularly, we study the role of token embedding layers, also called convolutional embedding (CE), and systematically reveal how CE injects desirable inductive bias in ViTs. Besides, we apply the optimal CE configuration to 4 recently released state-of-the-art ViTs, effectively boosting the corresponding performances. Finally, a family of efficient hybrid CNNs/ViTs, dubbed CETNets, are released, which may serve as generic vision backbones. Specifically, CETNets achieve 84.9% Top-1 accuracy on ImageNet-1K (training from scratch), 48.6% box mAP on the COCO benchmark, and 51.6% mIoU on the ADE20K, substantially improving the performances of the corresponding state-of-the-art baselines.

Intelligent Amphibious Ground-Aerial Vehicles: State of the Art Technology for Future Transportation

Jul 23, 2022

Abstract:Amphibious ground-aerial vehicles fuse flying and driving modes to enable more flexible air-land mobility and have received growing attention recently. By analyzing the existing amphibious vehicles, we highlight the autonomous fly-driving functionality for the effective uses of amphibious vehicles in complex three-dimensional urban transportation systems. We review and summarize the key enabling technologies for intelligent flying-driving in existing amphibious vehicle designs, identify major technological barriers and propose potential solutions for future research and innovation. This paper aims to serve as a guide for research and development of intelligent amphibious vehicles for urban transportation toward the future.

Mix-Teaching: A Simple, Unified and Effective Semi-Supervised Learning Framework for Monocular 3D Object Detection

Jul 10, 2022

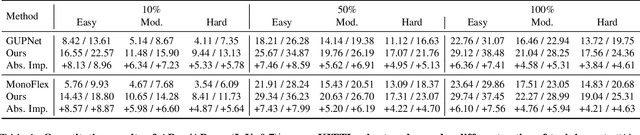

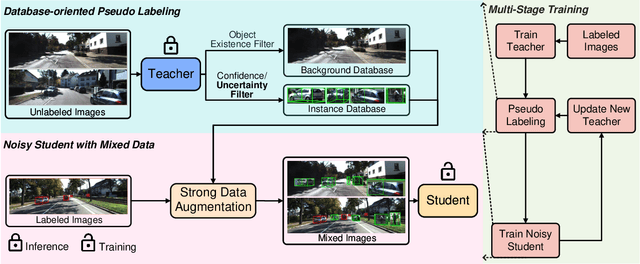

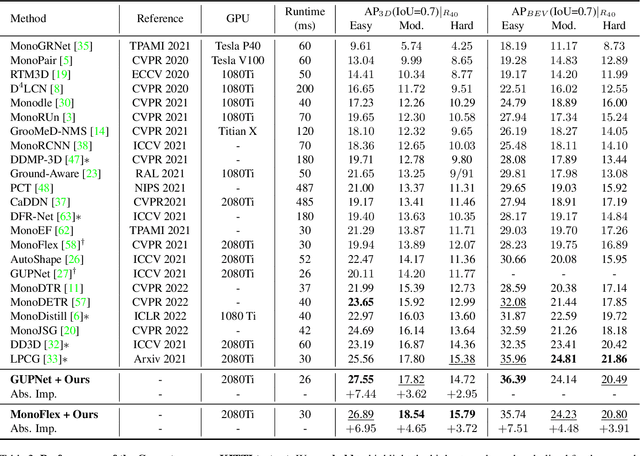

Abstract:Monocular 3D object detection is an essential perception task for autonomous driving. However, the high reliance on large-scale labeled data makes model optimization costly and time-consuming. To reduce such over-reliance on human annotations, we propose Mix-Teaching, an effective semi-supervised learning framework that employs both labeled and unlabeled images in the training stage. Mix-Teaching first generates pseudo-labels for unlabeled images by self-training. The student model is then trained on the mixed images possessing much more intensive and precise labeling by merging instance-level image patches into empty backgrounds or labeled images. This is the first approach to break the image-level limitation and put high-quality pseudo labels from multiple frames into one image for semi-supervised training. Besides, as a result of the misalignment between confidence score and localization quality, it's hard to discriminate high-quality pseudo-labels from noisy predictions using only a confidence-based criterion. To that end, we further introduce an uncertainty-based filter to help select reliable pseudo boxes for the above mixing operation. To the best of our knowledge, this is the first unified SSL framework for monocular 3D object detection. Mix-Teaching consistently improves MonoFlex and GUPNet by significant margins under various labeling ratios on the KITTI dataset. For example, our method achieves around +6.34% AP@0.7 improvement against the GUPNet baseline on the validation set when using only 10% labeled data. Besides, by leveraging the full training set and the additional 48K raw images of KITTI, it further improves MonoFlex by +4.65% AP@0.7 for car detection, reaching 18.54% AP@0.7, which ranks first among all monocular-based methods on the KITTI test leaderboard. The code and pretrained models will be released at https://github.com/yanglei18/Mix-Teaching.

Detecting fake news by enhanced text representation with multi-EDU-structure awareness

May 30, 2022

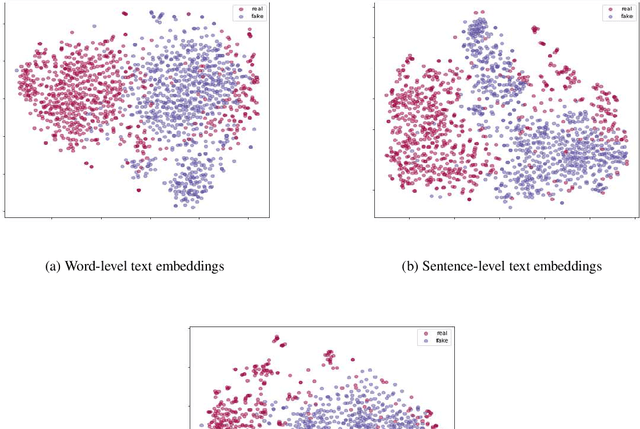

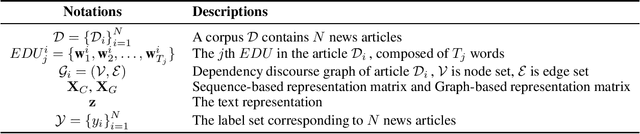

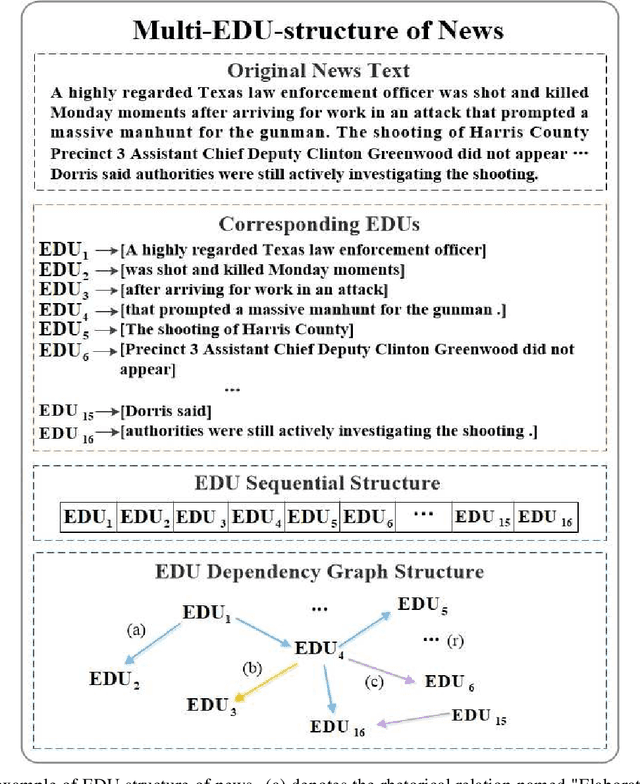

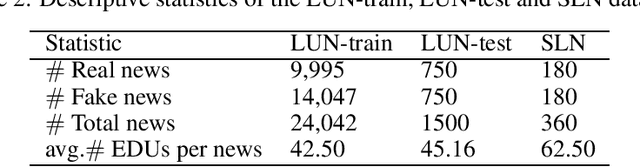

Abstract:Since fake news poses a serious threat to society and individuals, numerous studies have been conducted that consider text, propagation and user profiles. Due to the data collection problem, the methods based on propagation and user profiles are less applicable in the early stages. A good alternative is to detect news based on text as soon as it is released, and many text-based methods have been proposed, which usually utilize words, sentences or paragraphs as basic units. However, a word is too fine-grained a unit to express coherent information well, while a sentence or paragraph is too coarse to show specific information. Which granularity is better and how to utilize it to enhance text representation for fake news detection are two key problems. In this paper, we introduce the Elementary Discourse Unit (EDU), whose granularity is between word and sentence, and propose a multi-EDU-structure awareness model to improve text representation for fake news detection, namely EDU4FD. For the multi-EDU-structure awareness, we build the sequence-based EDU representations and the graph-based EDU representations. The former is obtained by modeling the coherence between consecutive EDUs with TextCNN, which reflects the semantic coherence. For the latter, we first extract rhetorical relations to build the EDU dependency graph, which can show the global narrative logic and help deliver the main idea truthfully. Then a Relation Graph Attention Network (RGAT) is used to obtain the graph-based EDU representation. Finally, the two EDU representations are incorporated as the enhanced text representation for fake news detection, using a gated recursive unit combined with a global attention mechanism. Experiments on four cross-source fake news datasets show that our model outperforms the state-of-the-art text-based methods.
