Guosheng Lin

Reliability-Adaptive Consistency Regularization for Weakly-Supervised Point Cloud Segmentation

Mar 09, 2023

Abstract:Weakly-supervised point cloud segmentation with extremely limited labels is highly desirable to alleviate the expensive costs of collecting densely annotated 3D points. This paper explores applying consistency regularization, which is commonly used in weakly-supervised learning, to its point cloud counterpart with multiple data-specific augmentations, a setting that has not been well studied. We observe that the straightforward way of applying consistency constraints to weakly-supervised point cloud segmentation has two major limitations: noisy pseudo labels due to the conventional confidence-based selection, and insufficient consistency constraints due to discarding unreliable pseudo labels. Therefore, we propose a novel Reliability-Adaptive Consistency Network (RAC-Net) that uses both prediction confidence and model uncertainty to measure the reliability of pseudo labels, and applies consistency training on all unlabeled points, with different consistency constraints for different points based on the reliability of the corresponding pseudo labels. Experimental results on the S3DIS and ScanNet-v2 benchmark datasets show that our model achieves superior performance in weakly-supervised point cloud segmentation. The code will be released.
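The idea of scoring pseudo labels by both confidence and uncertainty, then choosing a consistency constraint per point, can be illustrated with a minimal sketch. Everything here is an assumption for illustration and not RAC-Net's actual formulation: the reliability score (confidence times one minus normalized predictive entropy over several stochastic forward passes), the threshold, and the choice of cross-entropy for reliable points and mean squared error for unreliable ones are all hypothetical.

```python
import math


def reliability(prob_samples):
    """Score one point from several stochastic forward passes (hypothetical rule).

    prob_samples: list of class-probability vectors for the same point.
    Returns (score in [0, 1], mean probability vector).
    """
    n = len(prob_samples)
    k = len(prob_samples[0])
    mean = [sum(p[c] for p in prob_samples) / n for c in range(k)]
    confidence = max(mean)
    # Predictive entropy of the averaged prediction, normalized by log(k).
    entropy = -sum(p * math.log(p) for p in mean if p > 0)
    uncertainty = entropy / math.log(k)
    return confidence * (1.0 - uncertainty), mean


def consistency_loss(weak_samples, strong_probs, threshold=0.5):
    """Pick a consistency constraint for one point based on its reliability."""
    score, mean = reliability(weak_samples)
    if score >= threshold:
        # Reliable pseudo label: hard one-hot target, cross-entropy.
        label = mean.index(max(mean))
        return -math.log(strong_probs[label])
    # Unreliable pseudo label: keep the point, but use a softer constraint.
    return sum((a - b) ** 2 for a, b in zip(mean, strong_probs)) / len(mean)


reliable_loss = consistency_loss(
    [[0.90, 0.05, 0.05], [0.95, 0.03, 0.02]], [0.8, 0.1, 0.1]
)
unreliable_loss = consistency_loss(
    [[0.4, 0.3, 0.3], [0.3, 0.4, 0.3]], [0.35, 0.35, 0.30]
)
```

No point is discarded in this sketch: every unlabeled point contributes a loss term, and only the form of the term changes with the reliability score.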

Neural Vector Fields: Implicit Representation by Explicit Learning

Mar 08, 2023

Abstract:Deep neural networks (DNNs) are widely applied to 3D surface reconstruction tasks today. Such methods fall into two categories: those that warp templates explicitly by moving vertices, and those that represent 3D surfaces implicitly as signed or unsigned distance functions. Taking advantage of both the advanced explicit learning process and the powerful representation ability of implicit functions, we propose a novel 3D representation method, Neural Vector Fields (NVF). It not only adopts the explicit learning process to manipulate meshes directly, but also leverages the implicit representation of unsigned distance functions (UDFs) to break the barriers in resolution and topology. Specifically, our method first predicts the displacements from queries towards the surface and models the shapes as Vector Fields. Rather than relying on network differentiation to obtain direction fields as most existing UDF-based methods do, the produced vector fields encode both the distance and direction fields and mitigate the ambiguity at "ridge" points, such that the calculation of direction fields is straightforward and differentiation-free. The differentiation-free characteristic enables us to further learn a shape codebook via Vector Quantization, which encodes the cross-object priors, accelerates the training procedure, and boosts model generalization on cross-category reconstruction. Extensive experiments on surface reconstruction benchmarks indicate that our method outperforms state-of-the-art methods in different evaluation scenarios, including watertight vs non-watertight shapes, category-specific vs category-agnostic reconstruction, category-unseen reconstruction, and cross-domain reconstruction. Our code will be publicly released.
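To see why a displacement field makes the direction field differentiation-free, consider a minimal sketch in which the learned network is replaced by an analytic stand-in. The function `displacement_to_unit_sphere` is a hypothetical placeholder for the predicted vector field (here, the exact displacement from a query to the nearest point on a unit sphere); it is not part of NVF.

```python
import math


def displacement_to_unit_sphere(q):
    """Hypothetical stand-in for a predicted vector field.

    Returns the displacement from query q to its nearest point on the unit
    sphere centered at the origin. Assumes q is not the origin.
    """
    r = math.sqrt(sum(x * x for x in q))
    return [x / r - x for x in q]


def distance_and_direction(v, eps=1e-12):
    """Split a displacement into an unsigned distance and a unit direction.

    No gradient of a distance function is needed: the norm is the distance
    and the normalized vector is the direction.
    """
    d = math.sqrt(sum(x * x for x in v))
    if d < eps:
        return 0.0, [0.0] * len(v)
    return d, [x / d for x in v]


outside = distance_and_direction(displacement_to_unit_sphere([2.0, 0.0, 0.0]))
inside = distance_and_direction(displacement_to_unit_sphere([0.0, 0.5, 0.0]))
```

A query outside the sphere gets a direction pointing inward and a query inside gets one pointing outward, while the distance stays unsigned in both cases.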

Effective End-to-End Vision Language Pretraining with Semantic Visual Loss

Jan 18, 2023

Abstract:Current vision language pretraining models are dominated by methods using region visual features extracted from object detectors. Despite their good performance, the extract-then-process pipeline significantly restricts the inference speed and therefore limits their real-world use cases. However, training vision language models from raw image pixels is difficult, as the raw image pixels give much less prior knowledge than region features. In this paper, we systematically study how to leverage auxiliary visual pretraining tasks to help train end-to-end vision language models. We introduce three types of visual losses that enable much faster convergence and better finetuning accuracy. Compared with region feature models, our end-to-end models achieve similar or better performance on downstream tasks and run more than 10 times faster during inference. Compared with other end-to-end models, our proposed method achieves similar or better performance when pretrained for only 10% of the pretraining GPU hours.

Generalizable Person Re-Identification via Viewpoint Alignment and Fusion

Dec 05, 2022

Abstract:Among current person re-identification (ReID) methods, most domain generalization works focus on dealing with style differences between domains while largely ignoring unpredictable camera view changes, which we identify as another major factor leading to poor generalization of ReID methods. To tackle the viewpoint change, this work proposes to use a 3D dense pose estimation model and a texture mapping module to map the pedestrian images to canonical view images. Due to the imperfection of the texture mapping module, the canonical view images may lose the discriminative detail clues from the original images, and thus directly using them for ReID will inevitably result in poor performance. To handle this issue, we propose to fuse the original image and canonical view image via a transformer-based module. The key insight of this design is that the cross-attention mechanism in the transformer could be an ideal solution to align the discriminative texture clues from the original image with the canonical view image, which could compensate for the low-quality texture information of the canonical view image. Through extensive experiments, we show that our method can lead to superior performance over the existing approaches in various evaluation settings.

Unsupervised 3D Pose Transfer with Cross Consistency and Dual Reconstruction

Nov 18, 2022

Abstract:The goal of 3D pose transfer is to transfer the pose from the source mesh to the target mesh while preserving the identity information (e.g., face, body shape) of the target mesh. Deep learning-based methods have improved the efficiency and performance of 3D pose transfer. However, most of them are trained under the supervision of the ground truth, whose availability is limited in real-world scenarios. In this work, we present X-DualNet, a simple yet effective approach that enables unsupervised 3D pose transfer. In X-DualNet, we introduce a generator $G$ which contains correspondence learning and pose transfer modules to achieve 3D pose transfer. We learn the shape correspondence by solving an optimal transport problem without any key point annotations and generate high-quality meshes with our elastic instance normalization (ElaIN) in the pose transfer module. With $G$ as the basic component, we propose a cross consistency learning scheme and a dual reconstruction objective to learn the pose transfer without supervision. In addition, we adopt an as-rigid-as-possible deformer in the training process to fine-tune the body shape of the generated results. Extensive experiments on human and animal data demonstrate that our framework achieves performance comparable to state-of-the-art supervised approaches.
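Posing shape correspondence as an optimal transport problem can be sketched on a toy example. The solver below is entropy-regularized Sinkhorn iteration with uniform marginals, and the cost is the squared Euclidean distance between raw point coordinates; both are assumptions chosen for illustration, since the abstract does not state which solver or cost X-DualNet uses. All function names are hypothetical.

```python
import math


def squared_distance_cost(source, target):
    """Pairwise squared Euclidean distances between two small point sets."""
    return [
        [sum((a - b) ** 2 for a, b in zip(s, t)) for t in target]
        for s in source
    ]


def sinkhorn(cost, eps=0.1, iters=200):
    """Entropy-regularized optimal transport plan with uniform marginals."""
    n, m = len(cost), len(cost[0])
    kernel = [[math.exp(-c / eps) for c in row] for row in cost]
    u, v = [1.0] * n, [1.0] * m
    a, b = 1.0 / n, 1.0 / m
    for _ in range(iters):
        # Alternately rescale rows and columns to match the marginals.
        u = [a / sum(kernel[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b / sum(kernel[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * kernel[i][j] * v[j] for j in range(m)] for i in range(n)]


def hard_correspondence(plan):
    """For each source point, the target index carrying the most mass."""
    return [row.index(max(row)) for row in plan]


source = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
target = [(0.0, 1.0), (0.0, 0.0), (1.0, 0.0)]  # same points, shuffled order
plan = sinkhorn(squared_distance_cost(source, target))
matches = hard_correspondence(plan)
```

No key point annotations enter the computation: the plan is determined entirely by the pairwise cost, which in a learned setting would be computed between per-vertex features rather than raw coordinates.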

IntegratedPIFu: Integrated Pixel Aligned Implicit Function for Single-view Human Reconstruction

Nov 15, 2022

Abstract:We propose IntegratedPIFu, a new pixel-aligned implicit model that builds on the foundation set by PIFuHD. IntegratedPIFu shows how depth and human parsing information can be predicted and capitalised upon in a pixel-aligned implicit model. In addition, IntegratedPIFu introduces depth-oriented sampling, a novel training scheme that improves the ability of any pixel-aligned implicit model to reconstruct important human features without noisy artefacts. Lastly, IntegratedPIFu presents a new architecture that, despite using fewer model parameters than PIFuHD, is able to improve the structural correctness of reconstructed meshes. Our results show that IntegratedPIFu significantly outperforms existing state-of-the-art methods on single-view human reconstruction. Our code has been made available online.

Indoor Smartphone SLAM with Learned Echoic Location Features

Oct 16, 2022

Abstract:Indoor self-localization is a highly demanded system function for smartphones. The current solutions based on inertial, radio frequency, and geomagnetic sensing may have degraded performance when their limiting factors take effect. In this paper, we present a new indoor simultaneous localization and mapping (SLAM) system that utilizes the smartphone's built-in audio hardware and inertial measurement unit (IMU). Our system uses a smartphone's loudspeaker to emit near-inaudible chirps and then the microphone to record the acoustic echoes from the indoor environment. Our profiling measurements show that the echoes carry location information with sub-meter granularity. To enable SLAM, we apply contrastive learning to construct an echoic location feature (ELF) extractor, such that the loop closures on the smartphone's trajectory can be accurately detected from the associated ELF trace. The detection results effectively regulate the IMU-based trajectory reconstruction. Extensive experiments show that our ELF-based SLAM achieves median localization errors of $0.1\,\text{m}$, $0.53\,\text{m}$, and $0.4\,\text{m}$ on the reconstructed trajectories in a living room, an office, and a shopping mall, and outperforms the Wi-Fi and geomagnetic SLAM systems.
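Detecting loop closures from a feature trace can be sketched as a similarity search over earlier time steps. This is a minimal illustration under stated assumptions, not the paper's detector: the 2D feature vectors stand in for ELF embeddings, and the cosine-similarity threshold and minimum time gap are hypothetical parameters.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def detect_loop_closures(trace, threshold=0.95, min_gap=3):
    """Return (earlier, later) index pairs whose features look alike.

    min_gap skips adjacent steps, which are similar simply because the
    device has barely moved.
    """
    closures = []
    for later in range(len(trace)):
        for earlier in range(later - min_gap + 1):
            if cosine(trace[earlier], trace[later]) >= threshold:
                closures.append((earlier, later))
    return closures


# Toy trace: the last feature nearly repeats the first (a revisited spot).
trace = [[1.0, 0.0], [0.7, 0.7], [0.0, 1.0], [-0.7, 0.7], [1.0, 0.01]]
closures = detect_loop_closures(trace)
```

Each detected pair would then act as a constraint that two trajectory poses coincide, which is how loop closures can correct drift in an IMU-based trajectory.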

ManiCLIP: Multi-Attribute Face Manipulation from Text

Oct 02, 2022

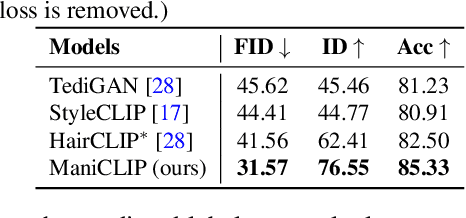

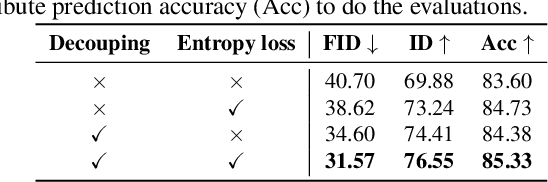

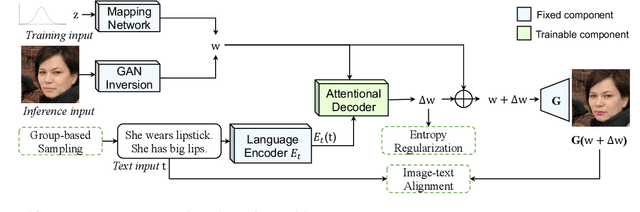

Abstract:In this paper we present a novel multi-attribute face manipulation method based on textual descriptions. Previous text-based image editing methods either require test-time optimization for each individual image or are restricted to single-attribute editing. Extending these methods to multi-attribute face image editing scenarios introduces undesired excessive attribute change, e.g., text-relevant attributes are overly manipulated and text-irrelevant attributes are also changed. To address these challenges and achieve natural editing over multiple face attributes, we propose a new decoupling training scheme where we use group sampling to get text segments from the same attribute categories, instead of whole complex sentences. Further, to preserve other existing face attributes, we encourage the model to edit the latent code of each attribute separately via an entropy constraint. During the inference phase, our model is able to edit new face images without any test-time optimization, even from complex textual prompts. We show extensive experiments and analysis to demonstrate the efficacy of our method, which generates natural manipulated faces with minimal text-irrelevant attribute editing. Code and pre-trained model will be released.

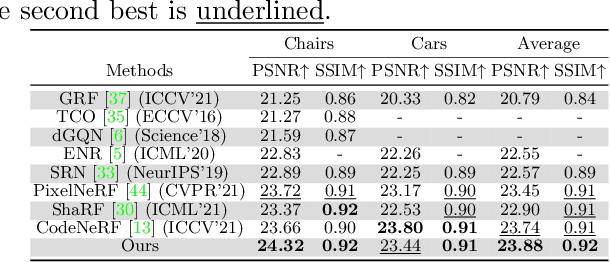

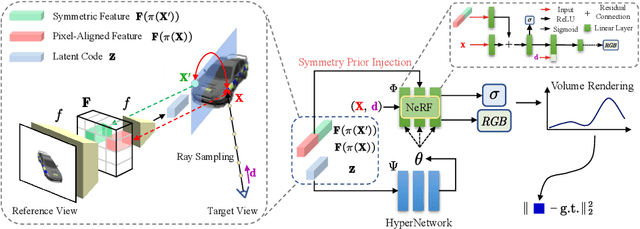

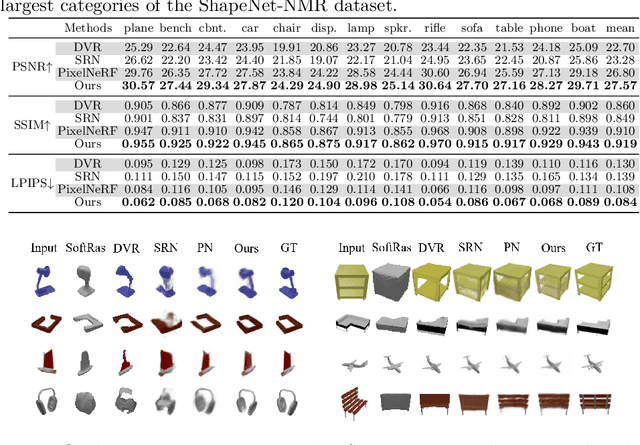

SymmNeRF: Learning to Explore Symmetry Prior for Single-View View Synthesis

Sep 29, 2022

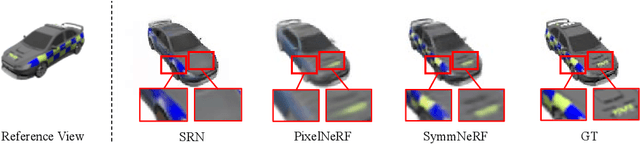

Abstract:We study the problem of novel view synthesis of objects from a single image. Existing methods have demonstrated the potential of single-view view synthesis. However, they still fail to recover fine appearance details, especially in self-occluded areas, because a single view provides only limited information. We observe that man-made objects usually exhibit symmetric appearances, which introduce additional prior knowledge. Motivated by this, we investigate the potential performance gains of explicitly embedding symmetry into the scene representation. In this paper, we propose SymmNeRF, a neural radiance field (NeRF) based framework that combines local and global conditioning under the introduction of symmetry priors. In particular, SymmNeRF takes the pixel-aligned image features and the corresponding symmetric features as extra inputs to the NeRF, whose parameters are generated by a hypernetwork. As the parameters are conditioned on the image-encoded latent codes, SymmNeRF is thus scene-independent and can generalize to new scenes. Experiments on synthetic and real-world datasets show that SymmNeRF synthesizes novel views with more details regardless of the pose transformation, and demonstrates good generalization when applied to unseen objects. Code is available at: https://github.com/xingyi-li/SymmNeRF.
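Looking up "corresponding symmetric features" requires knowing where the mirror image of a 3D sample point lands in the input view. The sketch below shows one way to compute that location, under assumptions that are not taken from the paper: the symmetry plane passes through the origin with a known unit normal, points are already expressed in camera coordinates, and the camera is a simple pinhole model. The function names and parameters are hypothetical.

```python
def reflect(point, normal):
    """Mirror a 3D point across a plane through the origin.

    normal must be a unit vector; the result is p - 2 (p . n) n.
    """
    d = sum(p * n for p, n in zip(point, normal))
    return [p - 2.0 * d * n for p, n in zip(point, normal)]


def project(point, focal, cx, cy):
    """Pinhole projection of a camera-space point to pixel coordinates."""
    x, y, z = point
    return (focal * x / z + cx, focal * y / z + cy)


sample = [0.5, 0.25, 2.0]
mirrored = reflect(sample, [1.0, 0.0, 0.0])  # assumed symmetry plane x = 0

pixel = project(sample, focal=100.0, cx=64.0, cy=64.0)
symmetric_pixel = project(mirrored, focal=100.0, cx=64.0, cy=64.0)
```

Image features sampled at both `pixel` and `symmetric_pixel` could then be passed to the radiance field together, so that a self-occluded point can borrow appearance evidence from its visible mirror counterpart.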

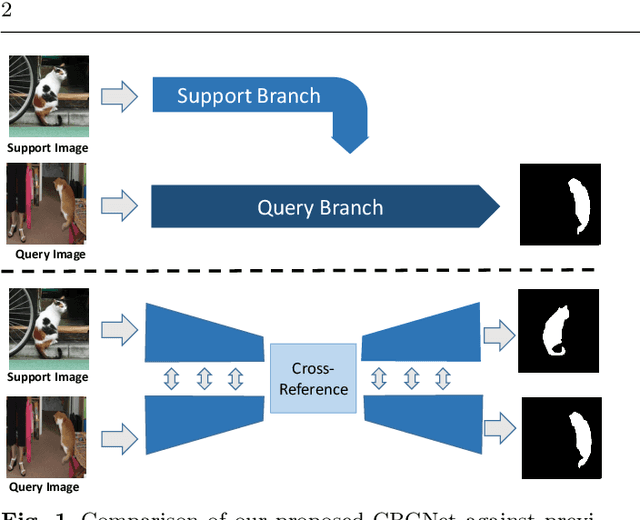

CRCNet: Few-shot Segmentation with Cross-Reference and Region-Global Conditional Networks

Aug 23, 2022

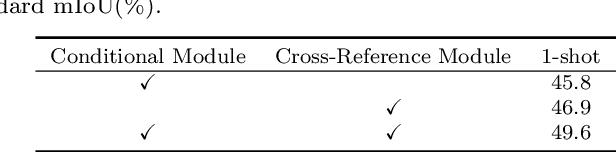

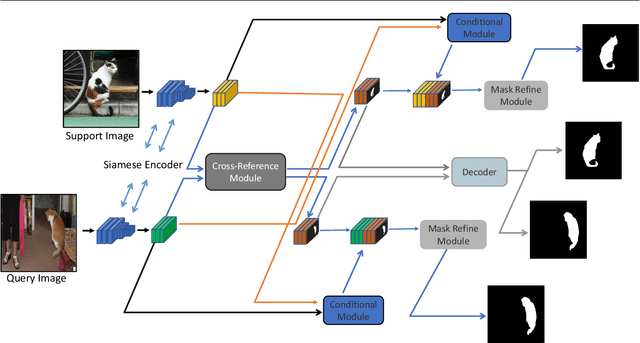

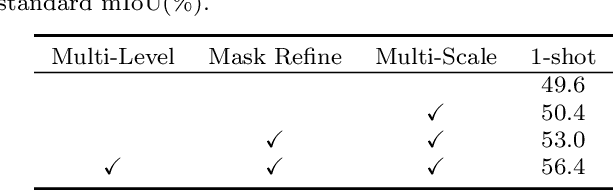

Abstract:Few-shot segmentation aims to learn a segmentation model that can be generalized to novel classes with only a few training images. In this paper, we propose Cross-Reference and Local-Global Conditional Networks (CRCNet) for few-shot segmentation. Unlike previous works that only predict the query image's mask, our proposed model concurrently makes predictions for both the support image and the query image. With a cross-reference mechanism, our network can better find the co-occurrent objects in the two images, which helps the few-shot segmentation task. To further improve feature comparison, we develop a local-global conditional module to capture both global and local relations. We also develop a mask refinement module to recurrently refine the prediction of the foreground regions. Experiments on the PASCAL VOC 2012, MS COCO, and FSS-1000 datasets show that our network achieves new state-of-the-art performance.
