Deheng Ye

Tencent Inc

RLTF: Reinforcement Learning from Unit Test Feedback

Jul 10, 2023

Abstract: The goal of program synthesis, or code generation, is to generate executable code based on given descriptions. Recently, there has been an increasing number of studies employing reinforcement learning (RL) to improve the performance of large language models (LLMs) for code. However, these RL methods have only used offline frameworks, limiting their exploration of new sample spaces. Additionally, current approaches that utilize unit test signals are rather simple and do not account for specific error locations within the code. To address these issues, we propose RLTF, i.e., Reinforcement Learning from Unit Test Feedback, a novel online RL framework with multi-granularity unit test feedback for refining code LLMs. Our approach generates data in real time during training and simultaneously utilizes fine-grained feedback signals to guide the model towards producing higher-quality code. Extensive experiments show that RLTF achieves state-of-the-art performance on the APPS and MBPP benchmarks. Our code can be found at: https://github.com/Zyq-scut/RLTF.
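The idea of turning unit test outcomes into a coarse reward plus an error location can be sketched as follows. This is a minimal illustration, not the RLTF implementation: the function name `unit_test_feedback`, the reward values, and the four outcome categories are assumptions chosen for the example, since the abstract does not specify them.

```python
import traceback


def unit_test_feedback(source, test_source):
    """Run candidate code against tests and return (reward, error_line).

    The reward values below are illustrative placeholders (assumptions),
    not values taken from the paper.
    """
    namespace = {}
    try:
        # A syntax error is detected before anything runs.
        compiled = compile(source, "<candidate>", "exec")
    except SyntaxError as err:
        return -1.0, err.lineno
    try:
        exec(compiled, namespace)      # define the candidate's functions
        exec(test_source, namespace)   # run the assertions against them
    except AssertionError:
        # The code ran but produced a wrong answer; no single line is blamed.
        return -0.3, None
    except Exception as err:
        # Runtime error: report the innermost failing line of the candidate.
        frames = traceback.extract_tb(err.__traceback__)
        lines = [f.lineno for f in frames if f.filename == "<candidate>"]
        return -0.6, (lines[-1] if lines else None)
    return 1.0, None
```

The returned line number is the kind of fine-grained signal that could be used to weight a penalty towards the tokens near the error. In practice, generated code should be executed in a sandbox rather than with a bare `exec`.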

Future-conditioned Unsupervised Pretraining for Decision Transformer

May 26, 2023

Abstract: Recent research in offline reinforcement learning (RL) has demonstrated that return-conditioned supervised learning is a powerful paradigm for decision-making problems. While promising, return conditioning is limited to training data labeled with rewards and therefore faces challenges in learning from unsupervised data. In this work, we aim to utilize generalized future conditioning to enable efficient unsupervised pretraining from reward-free and sub-optimal offline data. We propose Pretrained Decision Transformer (PDT), a conceptually simple approach for unsupervised RL pretraining. PDT leverages future trajectory information as a privileged context to predict actions during training. The ability to make decisions based on both present and future factors enhances PDT's capability for generalization. In addition, this feature can be easily incorporated into a return-conditioned framework for online finetuning, by assigning return values to possible futures and sampling future embeddings based on their respective values. Empirically, PDT outperforms or performs on par with its supervised pretraining counterpart, especially when dealing with sub-optimal data. Further analysis reveals that PDT can extract diverse behaviors from offline data and controllably sample high-return behaviors through online finetuning. Code is available here.

Deploying Offline Reinforcement Learning with Human Feedback

Mar 13, 2023

Abstract: Reinforcement learning (RL) has shown promise for decision-making tasks in real-world applications. One practical framework involves training parameterized policy models from an offline dataset and subsequently deploying them in an online environment. However, this approach can be risky since the offline training may not be perfect, leading to poor performance of the RL models that may take dangerous actions. To address this issue, we propose an alternative framework that involves a human supervising the RL models and providing additional feedback in the online deployment phase. We formalize this online deployment problem and develop two approaches. The first approach uses model selection and the upper confidence bound algorithm to adaptively select a model to deploy from a candidate set of trained offline RL models. The second approach involves fine-tuning the model in the online deployment phase when a supervision signal arrives. We demonstrate the effectiveness of these approaches for robot locomotion control and traffic light control tasks through empirical validation.
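The first approach, selecting which trained model to deploy with an upper confidence bound rule, can be sketched with the standard UCB1 algorithm. This is an illustration under stated assumptions, not the paper's method: the class name `UCBModelSelector`, the `exploration` constant, and the encoding of human feedback as a score in [0, 1] are all hypothetical.

```python
import math


class UCBModelSelector:
    """UCB1 over a fixed candidate set of offline-trained models."""

    def __init__(self, num_models, exploration=2.0):
        self.counts = [0] * num_models   # deployments per model
        self.means = [0.0] * num_models  # running mean feedback per model
        self.exploration = exploration   # assumed exploration constant

    def select(self):
        # Deploy every model once before comparing confidence bounds.
        for index, count in enumerate(self.counts):
            if count == 0:
                return index
        total = sum(self.counts)
        scores = [
            mean + math.sqrt(self.exploration * math.log(total) / count)
            for mean, count in zip(self.means, self.counts)
        ]
        return max(range(len(scores)), key=scores.__getitem__)

    def update(self, index, feedback):
        # Incremental mean of the feedback (assumed to lie in [0, 1]).
        self.counts[index] += 1
        self.means[index] += (feedback - self.means[index]) / self.counts[index]
```

Each deployment round calls `select()`, runs the chosen model, and passes the supervisor's score to `update()`. Models with consistently poor feedback are deployed less and less often, while the bonus term keeps rarely tried models from being discarded too early.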

Sample Dropout: A Simple yet Effective Variance Reduction Technique in Deep Policy Optimization

Feb 05, 2023

Abstract: Recent success in Deep Reinforcement Learning (DRL) methods has shown that policy optimization with respect to an off-policy distribution via importance sampling is effective for sample reuse. In this paper, we show that the use of importance sampling could introduce high variance in the objective estimate. Specifically, we show in a principled way that the variance of the importance sampling estimate grows quadratically with the importance ratios, and that large ratios could consequently jeopardize the effectiveness of surrogate objective optimization. We then propose a technique called sample dropout to bound the estimation variance by dropping out samples when their ratio deviation is too high. We instantiate this sample dropout technique on representative policy optimization algorithms, including TRPO, PPO, and ESPO, and demonstrate that it consistently boosts the performance of those DRL algorithms on both continuous and discrete action controls, including MuJoCo, DMControl and Atari video games. Our code is open-sourced at https://github.com/LinZichuan/sdpo.git.
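The dropout rule described in the abstract, discarding samples whose importance ratio deviates too far, can be sketched as below. This is a simplified illustration, not the released code: the function name, the deviation measure `|ratio - 1|`, the threshold `max_deviation`, and averaging over the kept samples are assumptions made for the example.

```python
import math


def sample_dropout_surrogate(logp_new, logp_old, advantages, max_deviation=0.2):
    """Importance-weighted surrogate objective over low-deviation samples.

    Returns (objective, number_of_kept_samples). The threshold and the
    deviation measure |ratio - 1| are assumptions for this sketch.
    """
    kept = []
    for new, old, advantage in zip(logp_new, logp_old, advantages):
        ratio = math.exp(new - old)  # pi_new(a|s) / pi_old(a|s)
        if abs(ratio - 1.0) <= max_deviation:
            kept.append(ratio * advantage)
        # Samples with a large ratio deviation are dropped entirely,
        # which bounds their contribution to the estimate's variance.
    if not kept:
        return 0.0, 0
    return sum(kept) / len(kept), len(kept)
```

Unlike ratio clipping, which keeps every sample but caps its weight, this rule removes high-deviation samples from the estimate altogether.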

Revisiting Estimation Bias in Policy Gradients for Deep Reinforcement Learning

Jan 20, 2023

Abstract: We revisit the estimation bias in policy gradients for the discounted episodic Markov decision process (MDP) from a Deep Reinforcement Learning (DRL) perspective. The objective is formulated theoretically as the expected returns discounted over the time horizon. One of the major policy gradient biases is the state distribution shift: the state distribution used to estimate the gradients differs from the theoretical formulation in that it does not take into account the discount factor. Existing discussion of the influence of this bias in the literature was limited to the tabular and softmax cases. Therefore, in this paper, we extend it to the DRL setting where the policy is parameterized and demonstrate theoretically how this bias can lead to suboptimal policies. We then discuss why the empirically inaccurate implementations with shifted state distribution can still be effective. We show that, despite such state distribution shift, the policy gradient estimation bias can be reduced in the following three ways: 1) a small learning rate; 2) an adaptive-learning-rate-based optimizer; and 3) KL regularization. Specifically, we show that a smaller learning rate, or an adaptive learning rate such as that used by the Adam and RMSProp optimizers, makes the policy optimization robust to the bias. We further draw connections between optimizers and the optimization regularization to show that both the KL and the reverse KL regularization can significantly rectify this bias. Moreover, we provide extensive experiments on continuous control tasks to support our analysis. Our paper sheds light on how successful PG algorithms optimize policies in the DRL setting, and contributes insights into the practical issues in DRL.

A Survey on Transformers in Reinforcement Learning

Jan 08, 2023

Abstract: Transformer has been considered the dominating neural architecture in NLP and CV, mostly under a supervised setting. Recently, a similar surge of using Transformers has appeared in the domain of reinforcement learning (RL), but it is faced with unique design choices and challenges brought by the nature of RL. However, the evolution of Transformers in RL has not yet been well unraveled. Hence, in this paper, we seek to systematically review motivations and progress on using Transformers in RL, provide a taxonomy on existing works, discuss each sub-field, and summarize future prospects.

RLogist: Fast Observation Strategy on Whole-slide Images with Deep Reinforcement Learning

Dec 13, 2022

Abstract: Whole-slide images (WSI) in computational pathology have high resolution with gigapixel size, but generally contain sparse regions of interest, which leads to weak diagnostic relevance and data inefficiency for each area in the slide. Most of the existing methods rely on a multiple instance learning framework that requires densely sampling local patches at high magnification. The limitation is evident in the application stage, as the heavy computation for extracting patch-level features is inevitable. In this paper, we develop RLogist, a benchmarking deep reinforcement learning (DRL) method for fast observation strategy on WSIs. Imitating the diagnostic logic of human pathologists, our RL agent learns how to find regions of observation value and obtain representative features across multiple resolution levels, without having to analyze each part of the WSI at high magnification. We benchmark our method on two whole-slide level classification tasks, including detection of metastases in WSIs of lymph node sections, and subtyping of lung cancer. Experimental results demonstrate that RLogist achieves competitive classification performance compared to typical multiple instance learning algorithms, while having a significantly shorter observation path. In addition, the observation path given by RLogist provides good decision-making interpretability, and its ability of reading path navigation can potentially be used by pathologists for educational/assistive purposes. Our code is available at: https://github.com/tencent-ailab/RLogist.

Pretraining in Deep Reinforcement Learning: A Survey

Nov 08, 2022

Abstract: The past few years have seen rapid progress in combining reinforcement learning (RL) with deep learning. Various breakthroughs ranging from games to robotics have spurred the interest in designing sophisticated RL algorithms and systems. However, the prevailing workflow in RL is to learn tabula rasa, which may incur computational inefficiency. This precludes continuous deployment of RL algorithms and potentially excludes researchers without large-scale computing resources. In many other areas of machine learning, the pretraining paradigm has been shown to be effective in acquiring transferable knowledge, which can be utilized for a variety of downstream tasks. Recently, we saw a surge of interest in Pretraining for Deep RL with promising results. However, much of the research has been based on different experimental settings. Due to the nature of RL, pretraining in this field is faced with unique challenges and hence requires new design principles. In this survey, we seek to systematically review existing works in pretraining for deep reinforcement learning, provide a taxonomy of these methods, discuss each sub-field, and bring attention to open problems and future directions.

Curriculum-based Asymmetric Multi-task Reinforcement Learning

Nov 07, 2022

Abstract: We introduce CAMRL, the first curriculum-based asymmetric multi-task learning (AMTL) algorithm for dealing with multiple reinforcement learning (RL) tasks together. To mitigate the negative influence of customizing the one-off training order in curriculum-based AMTL, CAMRL switches its training mode between parallel single-task RL and asymmetric multi-task RL (MTRL), according to an indicator regarding the training time, the overall performance, and the performance gap among tasks. To leverage the multi-sourced prior knowledge flexibly and to reduce negative transfer in AMTL, we customize a composite loss with multiple differentiable ranking functions and optimize the loss through alternating optimization and the Frank-Wolfe algorithm. Uncertainty-based automatic adjustment of hyper-parameters is also applied to eliminate the need for laborious hyper-parameter analysis during optimization. By optimizing the composite loss, CAMRL predicts the next training task and continuously revisits the transfer matrix and network weights. We have conducted experiments on a wide range of benchmarks in multi-task RL, covering Gym-minigrid, Meta-world, Atari video games, vision-based PyBullet tasks, and RLBench, to show the improvements of CAMRL over the corresponding single-task RL algorithm and state-of-the-art MTRL algorithms. The code is available at: https://github.com/huanghanchi/CAMRL

Robust Offline Reinforcement Learning with Gradient Penalty and Constraint Relaxation

Oct 19, 2022

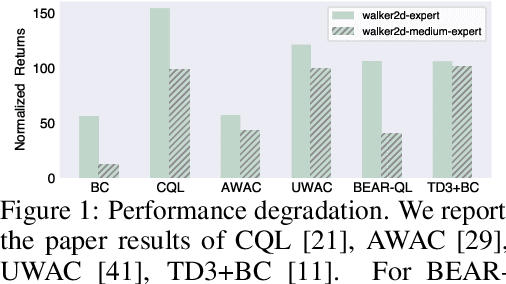

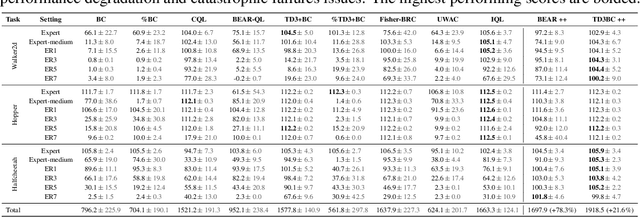

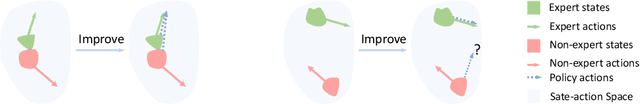

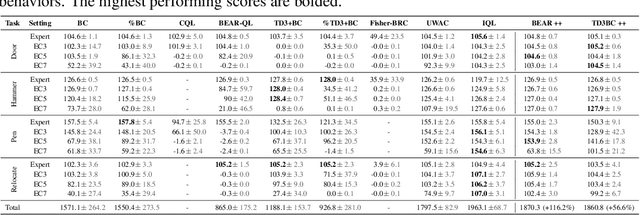

Abstract: A promising paradigm for offline reinforcement learning (RL) is to constrain the learned policy to stay close to the dataset behaviors, known as policy constraint offline RL. However, existing works rely heavily on the purity of the data, exhibiting performance degradation or even catastrophic failure when learning from contaminated datasets containing impure trajectories of diverse levels (e.g., expert level, medium level, etc.), even though contaminated offline data logs are common in the real world. To mitigate this, we first introduce a gradient penalty over the learned value function to tackle the exploding Q-functions. We then relax the closeness constraints towards non-optimal actions with critic-weighted constraint relaxation. Experimental results show that the proposed techniques effectively tame the non-optimal trajectories for policy constraint offline RL methods, evaluated on a set of contaminated D4RL MuJoCo and Adroit datasets.
