Music Generation

Music generation is the task of generating music or music-like sounds from a model or algorithm.

Papers and Code

Coupling the Heart to Musical Machines

May 05, 2025

Biofeedback has recently seen growing use as a general control paradigm for human-computer interfaces (HCIs). While biofeedback, especially from breath, has seen increasing uptake as a controller for new interfaces for musical expression (NIMEs), the community has not given as much attention to the heart. The heart is just as intimate a part of music as breath, and it is argued that the heart determines our perception of time and so, indirectly, our perception of music. Inspired by this, I demonstrate a photoplethysmogram (PPG)-based NIME controller that uses heart rate as a 1D control parameter to transform the qualities of sounds in real time over a Bluetooth wireless HCI. I apply time scaling to "warp" audio buffers inbound to the sound card, and play these transformed audio buffers back to the listener wearing the PPG sensor, creating a hypothetical perceptual biofeedback loop: changes in sound change heart rate, which changes PPG measurements, which in turn change the sound. I discuss how a sound-heart-PPG biofeedback loop possibly affords greater control and/or variety of movements with a 1D controller, and how controlling the space and/or time scale of sound playback with biofeedback opens possibilities in performance ambience. I also briefly discuss generative latent spaces as a possible way to extend a 1D PPG control space.
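The idea of using heart rate as a 1D control for time scaling can be sketched roughly as follows. This is a minimal illustration, not the author's implementation: the function names, the BPM and rate ranges, and the use of naive linear-interpolation resampling (which also shifts pitch) are all assumptions made for the example.

```python
def heart_rate_to_rate(bpm, bpm_min=50.0, bpm_max=150.0,
                       rate_min=0.5, rate_max=2.0):
    """Linearly map heart rate (BPM) to a playback-rate factor.

    All range values are hypothetical defaults, not taken from the paper.
    """
    bpm = max(bpm_min, min(bpm_max, bpm))          # clamp to the valid range
    t = (bpm - bpm_min) / (bpm_max - bpm_min)      # normalise to [0, 1]
    return rate_min + t * (rate_max - rate_min)


def warp_buffer(samples, rate):
    """Naive time scaling by linear-interpolation resampling.

    rate > 1 shortens the buffer (faster playback); rate < 1 lengthens it.
    Unlike a phase vocoder, this also changes pitch.
    """
    if rate <= 0:
        raise ValueError("rate must be positive")
    if not samples:
        return []
    last = len(samples) - 1
    n_out = max(1, int(round(len(samples) / rate)))
    out = []
    for i in range(n_out):
        pos = min(i * rate, last)                  # read position in the input
        lo = int(pos)
        hi = min(lo + 1, last)
        frac = pos - lo
        out.append(samples[lo] * (1.0 - frac) + samples[hi] * frac)
    return out


# One step of the loop: a new PPG reading changes how the next buffer plays.
rate = heart_rate_to_rate(100.0)
warped = warp_buffer([0.0, 1.0, 2.0, 3.0], rate)
```

In a real-time setting the mapping would run once per incoming PPG estimate and the warp once per audio buffer; a pitch-preserving time-stretch method could be substituted for the resampler.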

Exploiting Vulnerabilities in Speech Translation Systems through Targeted Adversarial Attacks

Mar 05, 2025

As speech translation (ST) systems become increasingly prevalent, understanding their vulnerabilities is crucial for ensuring robust and reliable communication. However, limited work has explored this issue in depth. This paper explores methods of compromising these systems through imperceptible audio manipulations. Specifically, we present two innovative approaches: (1) the injection of perturbations into source audio, and (2) the generation of adversarial music designed to guide targeted translation, while also conducting more practical over-the-air attacks in the physical world. Our experiments reveal that carefully crafted audio perturbations can mislead translation models into producing targeted, harmful outputs, while adversarial music achieves this goal more covertly, exploiting the natural imperceptibility of music. These attacks prove effective across multiple languages and translation models, highlighting a systemic vulnerability in current ST architectures. The implications of this research extend beyond immediate security concerns, shedding light on the interpretability and robustness of neural speech processing systems. Our findings underscore the need for advanced defense mechanisms and more resilient architectures in the realm of audio systems. More details and samples can be found at https://adv-st.github.io.

TALKPLAY: Multimodal Music Recommendation with Large Language Models

Feb 20, 2025

We present TalkPlay, a multimodal music recommendation system that reformulates the recommendation task as large language model token generation. TalkPlay represents music through an expanded token vocabulary that encodes multiple modalities: audio, lyrics, metadata, semantic tags, and playlist co-occurrence. Using these rich representations, the model learns to generate recommendations through next-token prediction on music recommendation conversations, which requires learning the associations among natural language queries, responses, and music items. In other words, the formulation transforms music recommendation into a natural language understanding task, where the model's ability to predict conversation tokens directly optimizes query-item relevance. Our approach eliminates traditional recommendation-dialogue pipeline complexity, enabling end-to-end learning of query-aware music recommendations. In our experiments, TalkPlay is successfully trained and outperforms baseline methods in various aspects, demonstrating strong context understanding as a conversational music recommender.

A Computational Cognitive Model for Processing Repetitions of Hierarchical Relations

Apr 14, 2025

Patterns are fundamental to human cognition, enabling the recognition of structure and regularity across diverse domains. In this work, we focus on structural repeats, patterns that arise from the repetition of hierarchical relations within sequential data, and develop a candidate computational model of how humans detect and understand such structural repeats. Based on a weighted deduction system, our model infers the minimal generative process of a given sequence in the form of a Template program, a formalism that enriches the context-free grammar with repetition combinators. Such representation efficiently encodes the repetition of sub-computations in a recursive manner. As a proof of concept, we demonstrate the expressiveness of our model on short sequences from music and action planning. The proposed model offers broader insights into the mental representations and cognitive mechanisms underlying human pattern recognition.

Global Position Aware Group Choreography using Large Language Model

Mar 12, 2025

Dance serves as a profound and universal expression of human culture, conveying emotions and stories through movements synchronized with music. Although some current works have achieved satisfactory results in the task of single-person dance generation, the field of multi-person dance generation remains relatively novel. In this work, we present a group choreography framework that leverages recent advancements in Large Language Models (LLM) by modeling the group dance generation problem as a sequence-to-sequence translation task. Our framework consists of a tokenizer that transforms continuous features into discrete tokens, and an LLM that is fine-tuned to predict motion tokens given the audio tokens. We show that by proper tokenization of input modalities and careful design of the LLM training strategies, our framework can generate realistic and diverse group dances while maintaining strong music correlation and dancer-wise consistency. Extensive experiments and evaluations demonstrate that our framework achieves state-of-the-art performance.
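The tokenizer step, turning continuous features into discrete tokens, can be illustrated with a small sketch. The abstract does not say which quantizer is used, so this example assumes plain nearest-neighbour vector quantization against a fixed codebook; `tokenize`, `detokenize`, and the toy codebook are hypothetical names and values chosen for illustration.

```python
def tokenize(frames, codebook):
    """Map each continuous feature vector to the index of its nearest
    codebook entry (squared Euclidean distance)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return [
        min(range(len(codebook)), key=lambda k: sq_dist(frame, codebook[k]))
        for frame in frames
    ]


def detokenize(tokens, codebook):
    """Recover the (quantized) feature vectors from token indices."""
    return [codebook[t] for t in tokens]


# Toy 2-D codebook and feature frames (illustrative values only).
codebook = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
frames = [(0.1, -0.1), (0.9, 0.2), (0.2, 0.8)]

tokens = tokenize(frames, codebook)          # discrete sequence for the LLM
reconstructed = detokenize(tokens, codebook)
```

Once both audio and motion are expressed as token sequences like this, predicting motion from music can be posed as sequence-to-sequence translation, as the abstract describes.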

Designing Neural Synthesizers for Low Latency Interaction

Mar 14, 2025

Neural Audio Synthesis (NAS) models offer interactive musical control over high-quality, expressive audio generators. While these models can operate in real-time, they often suffer from high latency, making them unsuitable for intimate musical interaction. The impact of architectural choices in deep learning models on audio latency remains largely unexplored in the NAS literature. In this work, we investigate the sources of latency and jitter typically found in interactive NAS models. We then apply this analysis to the task of timbre transfer using RAVE, a convolutional variational autoencoder for audio waveforms introduced by Caillon et al. in 2021. Finally, we present an iterative design approach for optimizing latency. This culminates with a model we call BRAVE (Bravely Realtime Audio Variational autoEncoder), which is low-latency and exhibits better pitch and loudness replication while showing timbre modification capabilities similar to RAVE. We implement it in a specialized inference framework for low-latency, real-time inference and present a proof-of-concept audio plugin compatible with audio signals from musical instruments. We expect the challenges and guidelines described in this document to support NAS researchers in designing models for low-latency inference from the ground up, enriching the landscape of possibilities for musicians.
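As a rough illustration of why model design matters for latency, consider one contributor common to any block-based audio system: a model that consumes audio in blocks of N samples cannot emit output until a full block has arrived. The sketch below computes only that buffering delay; the function name and the block sizes are illustrative assumptions and are not figures from the paper.

```python
def block_latency_ms(block_size, sample_rate_hz):
    """Time needed to fill one block of `block_size` samples, in milliseconds."""
    if block_size <= 0 or sample_rate_hz <= 0:
        raise ValueError("block_size and sample_rate_hz must be positive")
    return 1000.0 * block_size / sample_rate_hz


# Hypothetical block sizes at a 48 kHz sample rate.
for block_size in (128, 480, 2048):
    print(block_size, "samples ->", block_latency_ms(block_size, 48_000), "ms")
```

Total system latency would also include compute time, any model look-ahead, and driver or interface buffering, none of which is modelled here.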

Solving Copyright Infringement on Short Video Platforms: Novel Datasets and an Audio Restoration Deep Learning Pipeline

Apr 30, 2025

Short video platforms like YouTube Shorts and TikTok face significant copyright compliance challenges, as infringers frequently embed arbitrary background music (BGM) to obscure original soundtracks (OST) and evade content originality detection. To tackle this issue, we propose a novel pipeline that integrates Music Source Separation (MSS) and cross-modal video-music retrieval (CMVMR). Our approach effectively separates arbitrary BGM from the original OST, enabling the restoration of authentic video audio tracks. To support this work, we introduce two domain-specific datasets: OASD-20K for audio separation and OSVAR-160 for pipeline evaluation. OASD-20K contains 20,000 audio clips featuring mixed BGM and OST pairs, while OSVAR-160 is a unique benchmark dataset comprising 1,121 video and mixed-audio pairs, specifically designed for short video restoration tasks. Experimental results demonstrate that our pipeline not only removes arbitrary BGM with high accuracy but also restores OSTs, ensuring content integrity. This approach provides an ethical and scalable solution to copyright challenges in user-generated content on short video platforms.

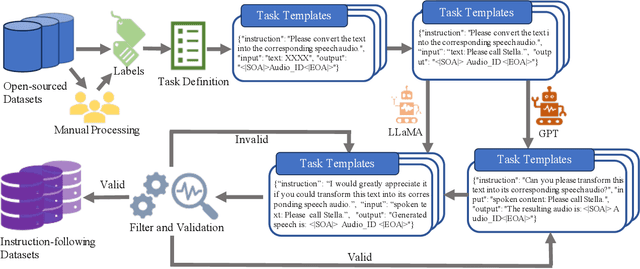

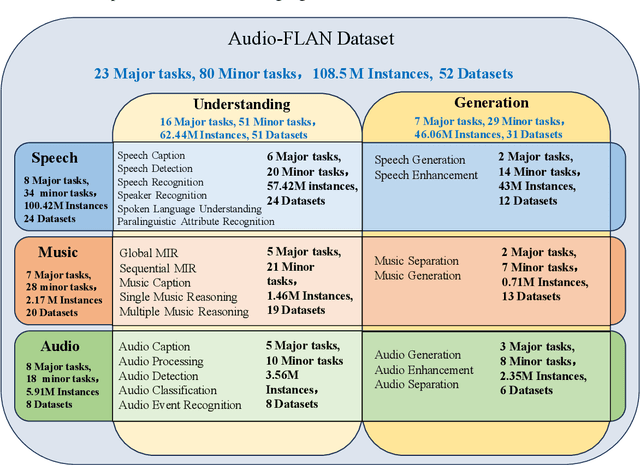

Audio-FLAN: A Preliminary Release

Feb 23, 2025

Recent advancements in audio tokenization have significantly enhanced the integration of audio capabilities into large language models (LLMs). However, audio understanding and generation are often treated as distinct tasks, hindering the development of truly unified audio-language models. While instruction tuning has demonstrated remarkable success in improving generalization and zero-shot learning across text and vision, its application to audio remains largely unexplored. A major obstacle is the lack of comprehensive datasets that unify audio understanding and generation. To address this, we introduce Audio-FLAN, a large-scale instruction-tuning dataset covering 80 diverse tasks across speech, music, and sound domains, with over 100 million instances. Audio-FLAN lays the foundation for unified audio-language models that can seamlessly handle both understanding (e.g., transcription, comprehension) and generation (e.g., speech, music, sound) tasks across a wide range of audio domains in a zero-shot manner. The Audio-FLAN dataset is available on HuggingFace and GitHub and will be continuously updated.

Kimi-Audio Technical Report

Apr 25, 2025

We present Kimi-Audio, an open-source audio foundation model that excels in audio understanding, generation, and conversation. We detail the practices in building Kimi-Audio, including model architecture, data curation, training recipe, inference deployment, and evaluation. Specifically, we leverage a 12.5Hz audio tokenizer, design a novel LLM-based architecture with continuous features as input and discrete tokens as output, and develop a chunk-wise streaming detokenizer based on flow matching. We curate a pre-training dataset that consists of more than 13 million hours of audio data covering a wide range of modalities including speech, sound, and music, and build a pipeline to construct high-quality and diverse post-training data. Initialized from a pre-trained LLM, Kimi-Audio is continually pre-trained on both audio and text data with several carefully designed tasks, and then fine-tuned to support a diverse range of audio-related tasks. Extensive evaluation shows that Kimi-Audio achieves state-of-the-art performance on a range of audio benchmarks including speech recognition, audio understanding, audio question answering, and speech conversation. We release the code, model checkpoints, and evaluation toolkits at https://github.com/MoonshotAI/Kimi-Audio.
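To give a sense of scale for a 12.5Hz tokenizer, the back-of-envelope sketch below converts audio duration into a frame count. It assumes exactly one token per frame and that audio is tokenized at this rate throughout; the abstract states neither, so the results are order-of-magnitude illustrations only, and `frames_for` is a hypothetical helper.

```python
FRAME_RATE_HZ = 12.5  # tokenizer rate stated in the abstract


def frames_for(seconds, frame_rate_hz=FRAME_RATE_HZ):
    """Number of tokenizer frames covering `seconds` of audio."""
    return seconds * frame_rate_hz


# One frame spans 1 / 12.5 s = 80 ms of audio.
frame_duration_ms = 1000.0 / FRAME_RATE_HZ

frames_per_minute = frames_for(60)

# 13 million hours, under the one-token-per-frame assumption above.
corpus_frames = frames_for(13_000_000 * 3600)

print(frame_duration_ms, frames_per_minute, corpus_frames)
```

A low frame rate keeps audio sequences short relative to the raw waveform, which is one reason such tokenizers pair naturally with LLM context windows.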

A Reservoir-based Model for Human-like Perception of Complex Rhythm Pattern

Mar 16, 2025

Rhythm is a fundamental aspect of human behaviour, present from infancy and deeply embedded in cultural practices. Rhythm anticipation is a spontaneous cognitive process that typically occurs before the onset of actual beats. While most research in both neuroscience and artificial intelligence has focused on metronome-based rhythm tasks, studies investigating the perception of complex musical rhythm patterns remain limited. To address this gap, we propose a hierarchical oscillator-based model to better understand the perception of complex musical rhythms in biological systems. The model consists of two types of coupled neurons that generate oscillations, with different layers tuned to respond to distinct perception levels. We evaluate the model using several representative rhythm patterns spanning the upper, middle, and lower bounds of human musical perception. Our findings demonstrate that, while maintaining a high degree of synchronization accuracy, the model exhibits human-like rhythmic behaviours. Additionally, the beta band neuronal activity in the model mirrors patterns observed in the human brain, further validating the biological plausibility of the approach.
