facial recognition

Facial recognition is an AI-based technique for identifying or confirming an individual's identity using their face. It maps facial features from an image or video and then compares the information with a collection of known faces to find a match.
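
The compare-against-known-faces step can be illustrated with a minimal sketch. It assumes each face has already been reduced to a fixed-length feature vector (an "embedding") by some upstream model, which is not shown here. The names `cosine_similarity`, `identify`, `gallery`, and the 0.8 threshold are hypothetical choices for illustration, not part of any particular system.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify(probe, gallery, threshold=0.8):
    """Return (name, score) of the best gallery match, or (None, score)
    if even the best match falls below the (assumed) threshold."""
    best_name, best_score = None, -1.0
    for name, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        return None, best_score
    return best_name, best_score


# Toy 3-dimensional embeddings; real systems use hundreds of dimensions.
gallery = {"alice": [0.9, 0.1, 0.0], "bob": [0.0, 0.8, 0.6]}
match, score = identify([0.85, 0.15, 0.05], gallery)
print(match, round(score, 3))
```

Verification (confirming a claimed identity) would compare the probe against a single stored vector; identification, as sketched here, searches the whole collection.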

Papers and Code

EmoSign: A Multimodal Dataset for Understanding Emotions in American Sign Language

May 20, 2025

Unlike spoken languages where the use of prosodic features to convey emotion is well studied, indicators of emotion in sign language remain poorly understood, creating communication barriers in critical settings. Sign languages present unique challenges as facial expressions and hand movements simultaneously serve both grammatical and emotional functions. To address this gap, we introduce EmoSign, the first sign video dataset containing sentiment and emotion labels for 200 American Sign Language (ASL) videos. We also collect open-ended descriptions of emotion cues. Annotations were done by 3 Deaf ASL signers with professional interpretation experience. Alongside the annotations, we include baseline models for sentiment and emotion classification. This dataset not only addresses a critical gap in existing sign language research but also establishes a new benchmark for understanding model capabilities in multimodal emotion recognition for sign languages. The dataset is made available at https://huggingface.co/datasets/catfang/emosign.

Breaking the Barriers: Video Vision Transformers for Word-Level Sign Language Recognition

Apr 10, 2025

Sign language is a fundamental means of communication for the deaf and hard-of-hearing (DHH) community, enabling nuanced expression through gestures, facial expressions, and body movements. Despite its critical role in facilitating interaction within the DHH population, significant barriers persist due to the limited fluency in sign language among the hearing population. Overcoming this communication gap through automatic sign language recognition (SLR) remains a challenge, particularly at a dynamic word-level, where temporal and spatial dependencies must be effectively recognized. While Convolutional Neural Networks have shown potential in SLR, they are computationally intensive and have difficulties in capturing global temporal dependencies between video sequences. To address these limitations, we propose a Video Vision Transformer (ViViT) model for word-level American Sign Language (ASL) recognition. Transformer models make use of self-attention mechanisms to effectively capture global relationships across spatial and temporal dimensions, which makes them suitable for complex gesture recognition tasks. The VideoMAE model achieves a Top-1 accuracy of 75.58% on the WLASL100 dataset, highlighting its strong performance compared to traditional CNNs with 65.89%. Our study demonstrates that transformer-based architectures have great potential to advance SLR, overcome communication barriers and promote the inclusion of DHH individuals.

Attributes-aware Visual Emotion Representation Learning

Apr 09, 2025

Visual emotion analysis or recognition has gained considerable attention due to the growing interest in understanding how images can convey rich semantics and evoke emotions in human perception. However, visual emotion analysis poses distinctive challenges compared to traditional vision tasks, especially due to the intricate relationship between general visual features and the different affective states they evoke, known as the affective gap. Researchers have used deep representation learning methods to address this challenge of extracting generalized features from entire images. However, most existing methods overlook the importance of specific emotional attributes such as brightness, colorfulness, scene understanding, and facial expressions. Through this paper, we introduce A4Net, a deep representation network to bridge the affective gap by leveraging four key attributes: brightness (Attribute 1), colorfulness (Attribute 2), scene context (Attribute 3), and facial expressions (Attribute 4). By fusing and jointly training all aspects of attribute recognition and visual emotion analysis, A4Net aims to provide a better insight into emotional content in images. Experimental results show the effectiveness of A4Net, showcasing competitive performance compared to state-of-the-art methods across diverse visual emotion datasets. Furthermore, visualizations of activation maps generated by A4Net offer insights into its ability to generalize across different visual emotion datasets.

Multimodal Representation Learning Techniques for Comprehensive Facial State Analysis

Apr 14, 2025

Multimodal foundation models have significantly improved feature representation by integrating information from multiple modalities, making them highly suitable for a broader set of applications. However, the exploration of multimodal facial representation for understanding perception has been limited. Understanding and analyzing facial states, such as Action Units (AUs) and emotions, require a comprehensive and robust framework that bridges visual and linguistic modalities. In this paper, we present a comprehensive pipeline for multimodal facial state analysis. First, we compile a new Multimodal Face Dataset (MFA) by generating detailed multilevel language descriptions of faces, incorporating Action Unit (AU) and emotion descriptions, by leveraging GPT-4o. Second, we introduce a novel Multilevel Multimodal Face Foundation model (MF^2) tailored for Action Unit (AU) and emotion recognition. Our model incorporates comprehensive visual feature modeling at both local and global levels of the face image, enhancing its ability to represent detailed facial appearances. This design aligns visual representations with structured AU and emotion descriptions, ensuring effective cross-modal integration. Third, we develop a Decoupled Fine-Tuning Network (DFN) that efficiently adapts MF^2 across various tasks and datasets. This approach not only reduces computational overhead but also broadens the applicability of the foundation model to diverse scenarios. Experiments show superior performance for AU and emotion detection tasks.

* Accepted by ICME2025

Fréchet Cumulative Covariance Net for Deep Nonlinear Sufficient Dimension Reduction with Random Objects

Feb 21, 2025

Nonlinear sufficient dimension reduction (SDR), which constructs nonlinear low-dimensional representations to summarize essential features of high-dimensional data, is an important branch of representation learning. However, most existing methods are not applicable when the response variables are complex non-Euclidean random objects, which are frequently encountered in many recent statistical applications. In this paper, we introduce a new statistical dependence measure termed Fréchet Cumulative Covariance (FCCov) and develop a novel nonlinear SDR framework based on FCCov. Our approach is not only applicable to complex non-Euclidean data, but also exhibits robustness against outliers. We further incorporate Feedforward Neural Networks (FNNs) and Convolutional Neural Networks (CNNs) to estimate nonlinear sufficient directions at the sample level. Theoretically, we prove that our method with squared Frobenius norm regularization achieves unbiasedness at the σ-field level. Furthermore, we establish non-asymptotic convergence rates for our estimators based on FNNs and ResNet-type CNNs, which match the minimax rate of nonparametric regression up to logarithmic factors. Intensive simulation studies verify the performance of our methods in both Euclidean and non-Euclidean settings. We apply our method to facial expression recognition datasets and the results underscore more realistic and broader applicability of our proposal.

Siformer: Feature-isolated Transformer for Efficient Skeleton-based Sign Language Recognition

Mar 26, 2025

Sign language recognition (SLR) refers to interpreting sign language glosses from given videos automatically. This research area presents a complex challenge in computer vision because of the rapid and intricate movements inherent in sign languages, which encompass hand gestures, body postures, and even facial expressions. Recently, skeleton-based action recognition has attracted increasing attention due to its ability to handle variations in subjects and backgrounds independently. However, current skeleton-based SLR methods exhibit three limitations: 1) they often neglect the importance of realistic hand poses, where most studies train SLR models on non-realistic skeletal representations; 2) they tend to assume complete data availability in both training and inference phases, and capture intricate relationships among different body parts collectively; 3) these methods treat all sign glosses uniformly, failing to account for differences in complexity levels regarding skeletal representations. To enhance the realism of hand skeletal representations, we present a kinematic hand pose rectification method for enforcing constraints. To mitigate the impact of missing data, we propose a feature-isolated mechanism to focus on capturing local spatial-temporal context. This method captures the context concurrently and independently from individual features, thus enhancing the robustness of the SLR model. Additionally, to adapt to varying complexity levels of sign glosses, we develop an input-adaptive inference approach to optimise computational efficiency and accuracy. Experimental results demonstrate the effectiveness of our approach, as evidenced by achieving a new state-of-the-art (SOTA) performance on WLASL100 and LSA64. For WLASL100, we achieve a top-1 accuracy of 86.50%, marking a relative improvement of 2.39% over the previous SOTA. For LSA64, we achieve a top-1 accuracy of 99.84%.

Enhancing Facial Privacy Protection via Weakening Diffusion Purification

Mar 13, 2025

The rapid growth of social media has led to the widespread sharing of individual portrait images, which pose serious privacy risks due to the capabilities of automatic face recognition (AFR) systems for mass surveillance. Hence, protecting facial privacy against unauthorized AFR systems is essential. Inspired by the generation capability of the emerging diffusion models, recent methods employ diffusion models to generate adversarial face images for privacy protection. However, they suffer from the diffusion purification effect, leading to a low protection success rate (PSR). In this paper, we first propose learning unconditional embeddings to increase the learning capacity for adversarial modifications and then use them to guide the modification of the adversarial latent code to weaken the diffusion purification effect. Moreover, we integrate an identity-preserving structure to maintain structural consistency between the original and generated images, allowing human observers to recognize the generated image as having the same identity as the original. Extensive experiments conducted on two public datasets, i.e., CelebA-HQ and LADN, demonstrate the superiority of our approach. The protected faces generated by our method outperform those produced by existing facial privacy protection approaches in terms of transferability and natural appearance.

Data Synthesis with Diverse Styles for Face Recognition via 3DMM-Guided Diffusion

Apr 01, 2025

Identity-preserving face synthesis aims to generate synthetic face images of virtual subjects that can substitute real-world data for training face recognition models. While prior arts strive to create images with consistent identities and diverse styles, they face a trade-off between them. Identifying their limitation of treating style variation as subject-agnostic and observing that real-world persons actually have distinct, subject-specific styles, this paper introduces MorphFace, a diffusion-based face generator. The generator learns fine-grained facial styles, e.g., shape, pose and expression, from the renderings of a 3D morphable model (3DMM). It also learns identities from an off-the-shelf recognition model. To create virtual faces, the generator is conditioned on novel identities of unlabeled synthetic faces, and novel styles that are statistically sampled from a real-world prior distribution. The sampling especially accounts for both intra-subject variation and subject distinctiveness. A context blending strategy is employed to enhance the generator's responsiveness to identity and style conditions. Extensive experiments show that MorphFace outperforms the best prior arts in face recognition efficacy.

MELLM: Exploring LLM-Powered Micro-Expression Understanding Enhanced by Subtle Motion Perception

May 11, 2025

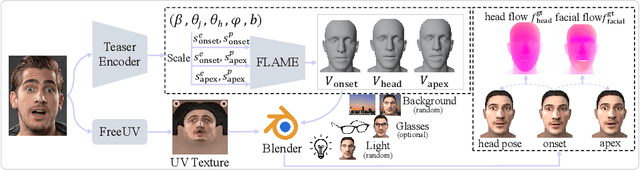

Micro-expressions (MEs) are crucial psychological responses with significant potential for affective computing. However, current automatic micro-expression recognition (MER) research primarily focuses on discrete emotion classification, neglecting a convincing analysis of the subtle dynamic movements and inherent emotional cues. The rapid progress in multimodal large language models (MLLMs), known for their strong multimodal comprehension and language generation abilities, offers new possibilities. MLLMs have shown success in various vision-language tasks, indicating their potential to understand MEs comprehensively, including both fine-grained motion patterns and underlying emotional semantics. Nevertheless, challenges remain due to the subtle intensity and short duration of MEs, as existing MLLMs are not designed to capture such delicate frame-level facial dynamics. In this paper, we propose a novel Micro-Expression Large Language Model (MELLM), which incorporates a subtle facial motion perception strategy with the strong inference capabilities of MLLMs, representing the first exploration of MLLMs in the domain of ME analysis. Specifically, to explicitly guide the MLLM toward motion-sensitive regions, we construct an interpretable motion-enhanced color map by fusing onset-apex optical flow dynamics with the corresponding grayscale onset frame as the model input. Additionally, specialized fine-tuning strategies are incorporated to further enhance the model's visual perception of MEs. Furthermore, we construct an instruction-description dataset based on Facial Action Coding System (FACS) annotations and emotion labels to train our MELLM. Comprehensive evaluations across multiple benchmark datasets demonstrate that our model exhibits superior robustness and generalization capabilities in ME understanding (MEU). Code is available at https://github.com/zyzhangUstc/MELLM.

Silent Speech Sentence Recognition with Six-Axis Accelerometers using Conformer and CTC Algorithm

Feb 25, 2025

Silent speech interfaces (SSI) are being actively developed to assist individuals with communication impairments who have long suffered from daily hardships and a reduced quality of life. However, silent sentences are difficult to segment and recognize due to elision and linking. A novel silent speech sentence recognition method is proposed to convert the facial motion signals collected by six-axis accelerometers into transcribed words and sentences. A Conformer-based neural network with the Connectionist Temporal Classification (CTC) algorithm is used to gain contextual understanding and translate the non-acoustic signals into word sequences, solely requesting the constituent words in the database. Test results show that the proposed method achieves a 97.17% accuracy in sentence recognition, surpassing the existing silent speech recognition methods with a typical accuracy of 85%-95%, and demonstrating the potential of accelerometers as an available SSI modality for high-accuracy silent speech sentence recognition.
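
For readers unfamiliar with CTC, the following is a minimal sketch of how a per-frame label sequence is collapsed into an output sequence, which is what lets a model emit words without pre-segmented input. It shows standard greedy (best-path) CTC decoding only; whether the paper uses greedy or beam-search decoding is not stated in the abstract, and the function name `ctc_greedy_decode` and the choice of `0` as the blank index are assumptions made for illustration.

```python
def ctc_greedy_decode(frame_labels, blank=0):
    """Collapse a per-frame label sequence using the CTC rule:
    merge consecutive repeats, then drop blank symbols."""
    decoded = []
    previous = None
    for label in frame_labels:
        # Emit a label only when it differs from the previous frame
        # and is not the blank symbol.
        if label != previous and label != blank:
            decoded.append(label)
        previous = label
    return decoded


# Per-frame argmax labels (hypothetical); 0 is the blank.
# The blank between the two runs of 1 keeps them as separate outputs.
frames = [0, 1, 1, 0, 1, 2, 2, 0]
print(ctc_greedy_decode(frames))
```

The blank symbol is what allows a genuinely repeated output (here the two 1s) to survive the merge step, while repeated frames of the same label collapse into one.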
