Decoupling Object Detection from Human-Object Interaction Recognition

Dec 13, 2021 Ying Jin, Yinpeng Chen, Lijuan Wang, Jianfeng Wang, Pei Yu, Lin Liang, Jenq-Neng Hwang, Zicheng Liu

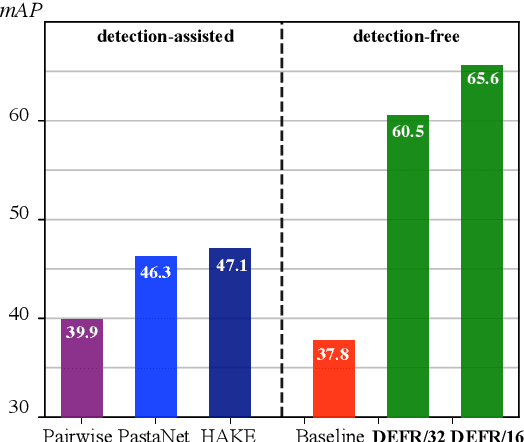

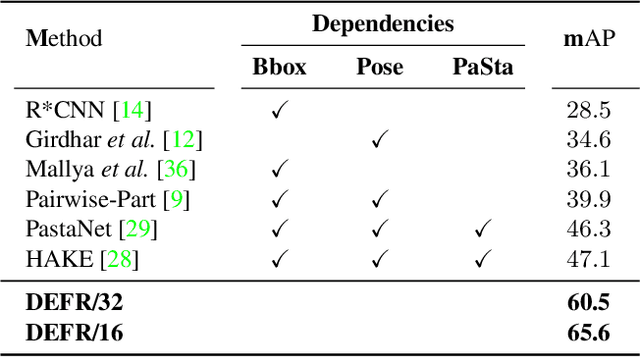

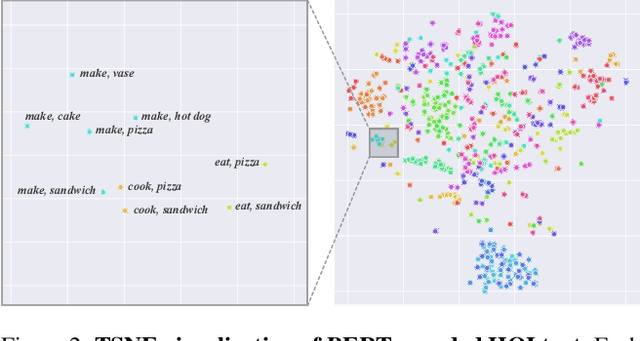

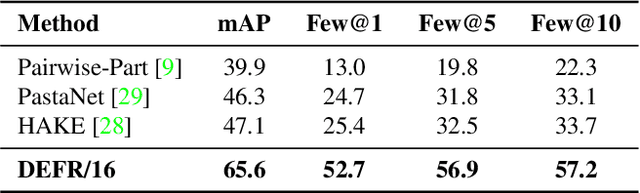

We propose DEFR, a DEtection-FRee method to recognize Human-Object Interactions (HOI) at image level without using object location or human pose. This is challenging as the detector is an integral part of existing methods. In this paper, we present two findings that boost the performance of the detection-free approach, which significantly outperforms the detection-assisted state of the art. First, we find it crucial to effectively leverage the semantic correlations among HOI classes. A remarkable gain can be achieved by using language embeddings of HOI labels to initialize the linear classifier, which encodes the structure of HOIs to guide training. Second, we propose the Log-Sum-Exp Sign (LSE-Sign) loss to facilitate multi-label learning on a long-tailed dataset by balancing gradients over all classes in a softmax format. Our detection-free approach achieves 65.6 mAP in HOI classification on HICO, outperforming the detection-assisted state of the art (SOTA) by 18.5 mAP, and 52.7 mAP in one-shot classes, surpassing the SOTA by 27.3 mAP. Unlike previous work, our classification model (DEFR) can be directly used in HOI detection without any additional training, by connecting it to an off-the-shelf object detector whose bounding box output is converted to binary masks for DEFR. Surprisingly, such a simple connection of two decoupled models achieves SOTA performance (32.35 mAP).
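The abstract does not give the formula for the LSE-Sign loss, so the following is only a minimal sketch under an assumed form, `log(1 + sum_i exp(-y_i * s_i))`, where `s_i` is the score of class `i` and `y_i` is +1 for a positive label and -1 for a negative one. The function names are hypothetical. Under this assumption the per-class gradient magnitudes are normalized by a shared denominator, which may be what "balancing gradients over all classes in a softmax format" refers to.

```python
import math


def _signed_terms(scores, labels):
    # Assumed convention: y = +1 for a positive label, -1 for a negative one.
    signs = [1.0 if y else -1.0 for y in labels]
    return signs, [-y * s for y, s in zip(signs, scores)]


def lse_sign_loss(scores, labels):
    """Assumed form: log(1 + sum_i exp(-y_i * s_i)), computed stably."""
    _, signed = _signed_terms(scores, labels)
    m = max(0.0, max(signed))  # shift that also covers the constant term exp(0)
    total = math.exp(-m) + sum(math.exp(v - m) for v in signed)
    return m + math.log(total)


def lse_sign_grad(scores, labels):
    """Gradient of the assumed loss with respect to each score."""
    signs, signed = _signed_terms(scores, labels)
    m = max(0.0, max(signed))
    denom = math.exp(-m) + sum(math.exp(v - m) for v in signed)
    # Each class shares the same denominator, as in a softmax.
    return [-y * math.exp(v - m) / denom for y, v in zip(signs, signed)]


if __name__ == "__main__":
    scores = [2.0, -1.0, 0.5]
    labels = [True, False, True]
    print(lse_sign_loss(scores, labels))
    print(lse_sign_grad(scores, labels))
```

In this sketch the absolute gradient values always sum to less than 1, so no single class can dominate the update, regardless of how many classes are present.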

Improving Vision Transformers for Incremental Learning

Dec 12, 2021 Pei Yu, Yinpeng Chen, Ying Jin, Zicheng Liu

This paper studies using Vision Transformers (ViT) in class incremental learning. Surprisingly, naive application of ViT to replace convolutional neural networks (CNNs) results in performance degradation. Our analysis reveals three issues of naively using ViT: (a) ViT has very slow convergence when the number of classes is small, (b) more bias towards new classes is observed in ViT than in CNN-based models, and (c) the proper learning rate of ViT is too low to learn a good classifier. Based on this analysis, we show these issues can be simply addressed by using existing techniques: a convolutional stem, balanced finetuning to correct bias, and a higher learning rate for the classifier. Our simple solution, named ViTIL (ViT for Incremental Learning), achieves the new state of the art for all three class incremental learning setups by a clear margin, providing a strong baseline for the research community. For instance, on ImageNet-1000, our ViTIL achieves 69.20% top-1 accuracy for the protocol of 500 initial classes with 5 incremental steps (100 new classes for each), outperforming LUCIR+DDE by 1.69%. For the more challenging protocol of 10 incremental steps (100 new classes), our method outperforms PODNet by 7.27% (65.13% vs. 57.86%).

Injecting Semantic Concepts into End-to-End Image Captioning

Dec 09, 2021 Zhiyuan Fang, Jianfeng Wang, Xiaowei Hu, Lin Liang, Zhe Gan, Lijuan Wang, Yezhou Yang, Zicheng Liu

Tremendous progress has been made in recent years in developing better image captioning models, yet most of them rely on a separate object detector to extract regional features. Recent vision-language studies are shifting towards the detector-free trend by leveraging grid representations for more flexible model training and faster inference speed. However, such development is primarily focused on image understanding tasks, and remains less investigated for the caption generation task. In this paper, we are concerned with a better-performing detector-free image captioning model, and propose a pure vision transformer-based image captioning model, dubbed ViTCAP, in which grid representations are used without extracting the regional features. For improved performance, we introduce a novel Concept Token Network (CTN) to predict the semantic concepts and then incorporate them into the end-to-end captioning. In particular, the CTN is built on the basis of a vision transformer and is designed to predict the concept tokens through a classification task, from which the rich semantic information contained greatly benefits the captioning task. Compared with the previous detector-based models, ViTCAP drastically simplifies the architectures and at the same time achieves competitive performance on various challenging image captioning datasets. In particular, ViTCAP reaches a CIDEr score of 138.1 on the COCO-caption Karpathy split, and CIDEr scores of 93.8 and 108.6 on the nocaps and Google-CC captioning datasets, respectively.

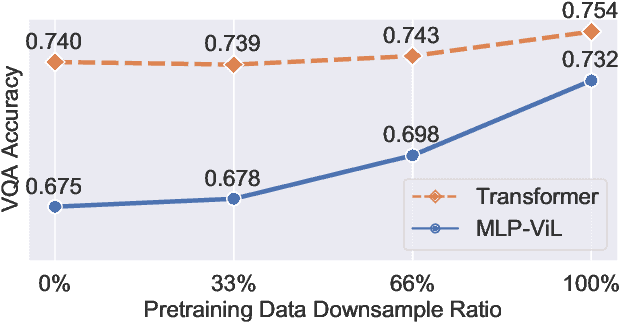

MLP Architectures for Vision-and-Language Modeling: An Empirical Study

Dec 08, 2021 Yixin Nie, Linjie Li, Zhe Gan, Shuohang Wang, Chenguang Zhu, Michael Zeng, Zicheng Liu, Mohit Bansal, Lijuan Wang

We initiate the first empirical study on the use of MLP architectures for vision-and-language (VL) fusion. Through extensive experiments on 5 VL tasks and 5 robust VQA benchmarks, we find that: (i) without pre-training, using MLPs for multimodal fusion has a noticeable performance gap compared to transformers; (ii) however, VL pre-training can help close the performance gap; (iii) instead of heavy multi-head attention, adding tiny one-head attention to MLPs is sufficient to achieve comparable performance to transformers. Moreover, we also find that the performance gap between MLPs and transformers is not widened when evaluated on the harder robust VQA benchmarks, suggesting that using MLPs for VL fusion can generalize to roughly a similar degree as using transformers. These results hint that MLPs can effectively learn to align vision and text features extracted from lower-level encoders without heavy reliance on self-attention. Based on this, we ask an even bolder question: can we have an all-MLP architecture for VL modeling, where both VL fusion and the vision encoder are replaced with MLPs? Our result shows that an all-MLP VL model is sub-optimal compared to state-of-the-art full-featured VL models when both of them get pre-trained. However, a pre-trained all-MLP model can surprisingly achieve a better average score than full-featured transformer models without pre-training. This indicates the potential of large-scale pre-training of MLP-like architectures for VL modeling and inspires the future research direction on simplifying well-established VL modeling with less inductive design bias. Our code is publicly available at: https://github.com/easonnie/mlp-vil

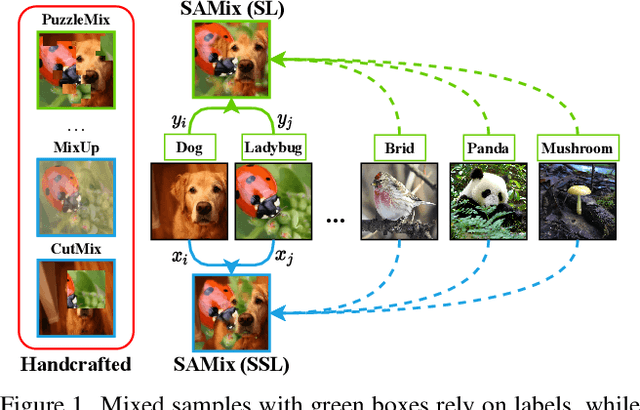

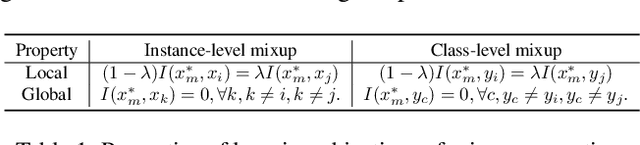

Boosting Discriminative Visual Representation Learning with Scenario-Agnostic Mixup

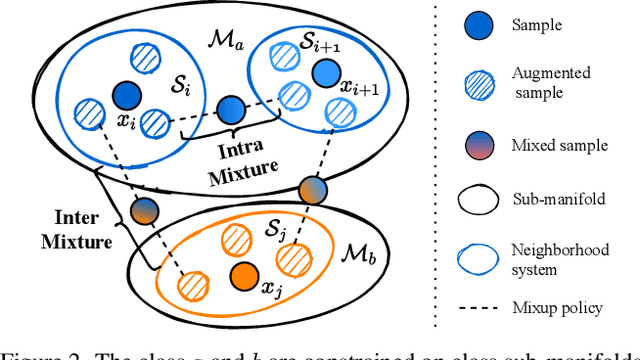

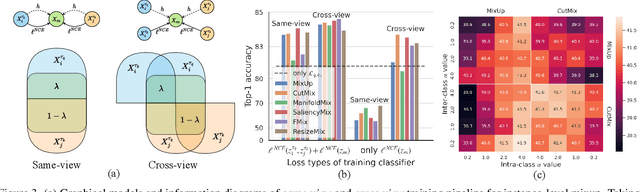

Nov 30, 2021 Siyuan Li, Zicheng Liu, Di Wu, Zihan Liu, Stan Z. Li

Mixup is a popular data-dependent augmentation technique for deep neural networks, which contains two sub-tasks, mixup generation and classification. The community typically confines mixup to supervised learning (SL), and the objective of the generation sub-task is fixed to the sampled pairs instead of considering the whole data manifold. To overcome such limitations, we systematically study the objectives of the two sub-tasks and propose Scenario-Agnostic Mixup for both SL and Self-supervised Learning (SSL) scenarios, named SAMix. Specifically, we hypothesize and verify the core objective of mixup generation as optimizing the local smoothness between two classes subject to global discrimination from other classes. Based on this discovery, an $\eta$-balanced mixup loss is proposed for complementary training of the two sub-tasks. Meanwhile, the generation sub-task is parameterized as an optimizable module, Mixer, which utilizes an attention mechanism to generate mixed samples without label dependency. Extensive experiments on SL and SSL tasks demonstrate that SAMix consistently outperforms leading methods by a large margin.
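For readers unfamiliar with the baseline that SAMix builds on, here is a minimal sketch of vanilla input mixup in the supervised setting: two samples and their one-hot labels are blended with a coefficient drawn from a Beta distribution. This is not SAMix's learned Mixer module or its $\eta$-balanced loss; the function name and the default `alpha=1.0` are assumptions made for illustration.

```python
import random


def mixup(x_a, y_a, x_b, y_b, alpha=1.0, rng=random):
    """Blend two samples and their one-hot labels with lam ~ Beta(alpha, alpha)."""
    lam = rng.betavariate(alpha, alpha)
    # The same coefficient is applied to inputs and labels.
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return x, y, lam


if __name__ == "__main__":
    rng = random.Random(0)  # seeded generator for reproducibility
    x, y, lam = mixup([1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0], rng=rng)
    print(lam, x, y)
```

The mixing here depends only on the sampled pair, which is the limitation the abstract describes: the generation step does not take the rest of the data manifold into account.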

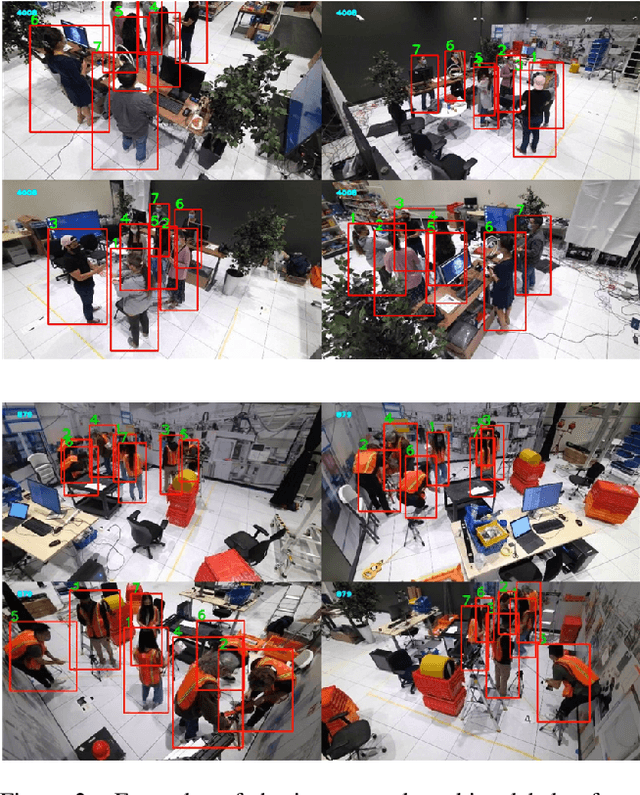

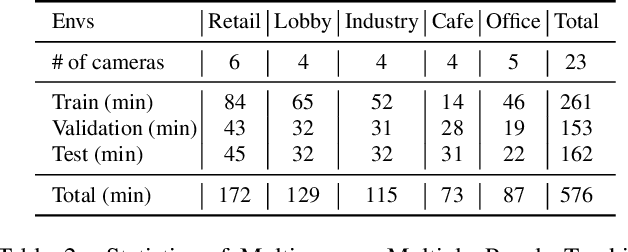

MMPTRACK: Large-scale Densely Annotated Multi-camera Multiple People Tracking Benchmark

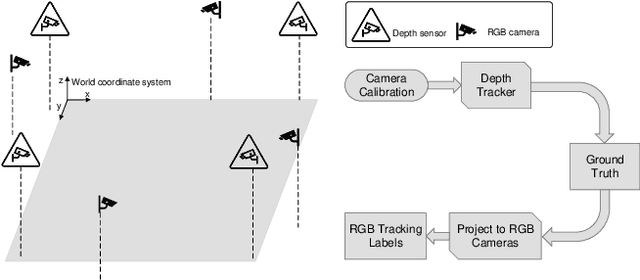

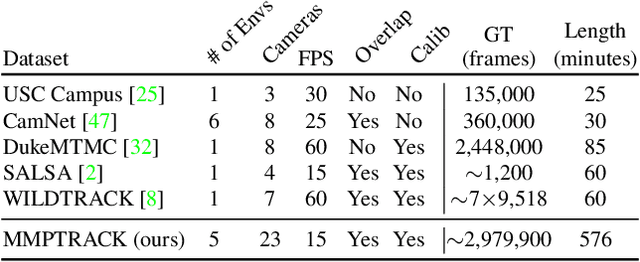

Nov 30, 2021 Xiaotian Han, Quanzeng You, Chunyu Wang, Zhizheng Zhang, Peng Chu, Houdong Hu, Jiang Wang, Zicheng Liu

Multi-camera tracking systems are gaining popularity in applications that demand high-quality tracking results, such as frictionless checkout, because monocular multi-object tracking (MOT) systems often fail in cluttered and crowded environments due to occlusion. Multiple highly overlapped cameras can significantly alleviate the problem by recovering partial 3D information. However, the cost of creating a high-quality multi-camera tracking dataset with diverse camera settings and backgrounds has limited the dataset scale in this domain. In this paper, we provide a large-scale densely-labeled multi-camera tracking dataset in five different environments with the help of an auto-annotation system. The system uses overlapped and calibrated depth and RGB cameras to build a high-performance 3D tracker that automatically generates the 3D tracking results. The 3D tracking results are projected to each RGB camera view using camera parameters to create 2D tracking results. Then, we manually check and correct the 3D tracking results to ensure the label quality, which is much cheaper than fully manual annotation. We have conducted extensive experiments using two real-time multi-camera trackers and a person re-identification (ReID) model with different settings. This dataset provides a more reliable benchmark of multi-camera, multi-object tracking systems in cluttered and crowded environments. Also, our results demonstrate that adapting the trackers and ReID models on this dataset significantly improves their performance. Our dataset will be publicly released upon the acceptance of this work.
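The projection step mentioned above (3D tracking results mapped into each RGB view using camera parameters) can be illustrated with a standard pinhole camera model. The abstract does not specify the camera model or any lens distortion handling, so this is only a sketch under that assumption; the function name, parameter names, and example values are hypothetical.

```python
def project_point(point_world, rotation, translation, fx, fy, cx, cy):
    """Project a 3D world point to pixel coordinates with a pinhole model.

    rotation is a 3x3 matrix (list of rows) and translation a 3-vector that
    map world coordinates into the camera frame; fx, fy, cx, cy are the
    intrinsics. Returns None for points at or behind the camera plane.
    """
    # World frame -> camera frame: p_cam = R @ p_world + t
    xc, yc, zc = (
        sum(r * p for r, p in zip(row, point_world)) + t
        for row, t in zip(rotation, translation)
    )
    if zc <= 0:
        return None
    # Perspective division, then scale and shift by the intrinsics.
    return (fx * xc / zc + cx, fy * yc / zc + cy)


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    zero = [0.0, 0.0, 0.0]
    print(project_point([1.0, 0.0, 2.0], identity, zero, 1000.0, 1000.0, 640.0, 360.0))
```

Applying the same function with each camera's own parameters turns one 3D track into a consistent 2D track per view, which is why correcting labels once in 3D is cheaper than annotating every view separately.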

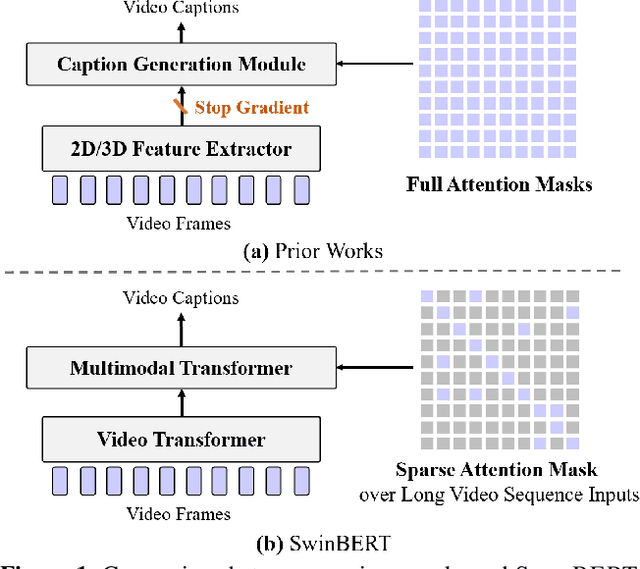

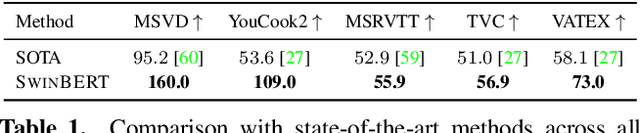

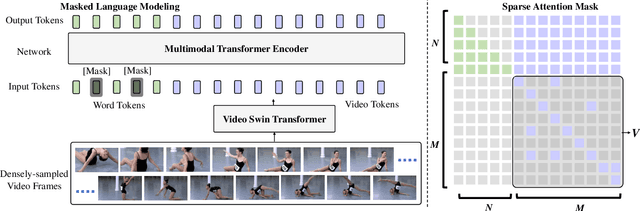

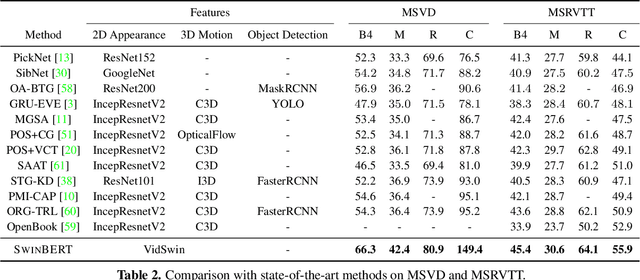

SwinBERT: End-to-End Transformers with Sparse Attention for Video Captioning

Nov 25, 2021 Kevin Lin, Linjie Li, Chung-Ching Lin, Faisal Ahmed, Zhe Gan, Zicheng Liu, Yumao Lu, Lijuan Wang

The canonical approach to video captioning dictates a caption generation model to learn from offline-extracted dense video features. These feature extractors usually operate on video frames sampled at a fixed frame rate and are often trained on image/video understanding tasks, without adaptation to video captioning data. In this work, we present SwinBERT, an end-to-end transformer-based model for video captioning, which takes video frame patches directly as inputs, and outputs a natural language description. Instead of leveraging multiple 2D/3D feature extractors, our method adopts a video transformer to encode spatial-temporal representations that can adapt to variable lengths of video input without dedicated design for different frame rates. Based on this model architecture, we show that video captioning can benefit significantly from more densely sampled video frames as opposed to previous successes with sparsely sampled video frames for video-and-language understanding tasks (e.g., video question answering). Moreover, to avoid the inherent redundancy in consecutive video frames, we propose adaptively learning a sparse attention mask and optimizing it for task-specific performance improvement through better long-range video sequence modeling. Through extensive experiments on 5 video captioning datasets, we show that SwinBERT achieves across-the-board performance improvements over previous methods, often by a large margin. In addition, the learned sparse attention masks push the limit to a new state of the art, and can be transferred between different video lengths and between different datasets.

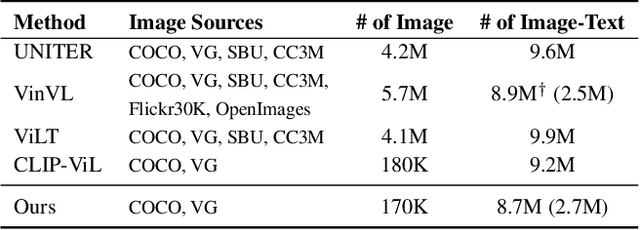

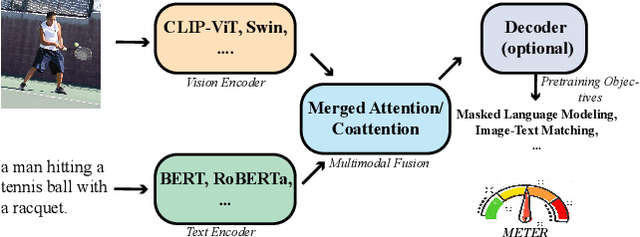

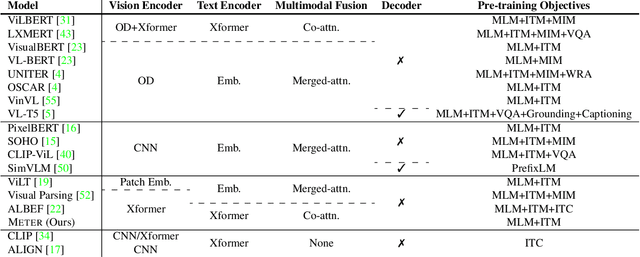

An Empirical Study of Training End-to-End Vision-and-Language Transformers

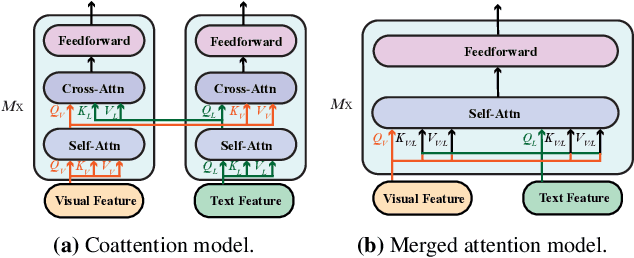

Nov 25, 2021 Zi-Yi Dou, Yichong Xu, Zhe Gan, Jianfeng Wang, Shuohang Wang, Lijuan Wang, Chenguang Zhu, Pengchuan Zhang, Lu Yuan, Nanyun Peng, Zicheng Liu, Michael Zeng

Vision-and-language (VL) pre-training has proven to be highly effective on various VL downstream tasks. While recent work has shown that fully transformer-based VL models can be more efficient than previous region-feature-based methods, their performance on downstream tasks often degrades significantly. In this paper, we present METER, a Multimodal End-to-end TransformER framework, through which we investigate how to design and pre-train a fully transformer-based VL model in an end-to-end manner. Specifically, we dissect the model designs along multiple dimensions: vision encoders (e.g., CLIPViT, Swin transformer), text encoders (e.g., RoBERTa, DeBERTa), multimodal fusion module (e.g., merged attention vs. co-attention), architectural design (e.g., encoder-only vs. encoder-decoder), and pre-training objectives (e.g., masked image modeling). We conduct comprehensive experiments and provide insights on how to train a performant VL transformer while maintaining fast inference speed. Notably, our best model achieves an accuracy of 77.64% on the VQAv2 test-std set using only 4M images for pre-training, surpassing the state-of-the-art region-feature-based model by 1.04%, and outperforming the previous best fully transformer-based model by 1.6%. Code and models are released at https://github.com/zdou0830/METER.

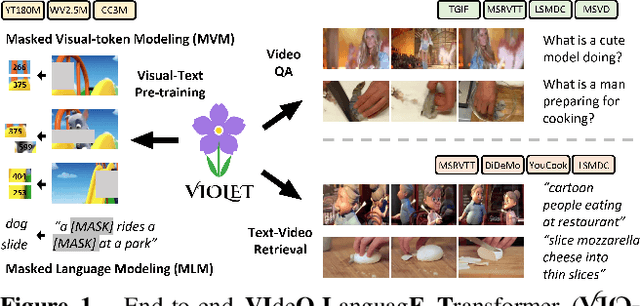

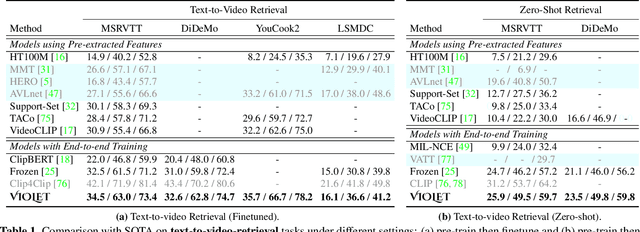

VIOLET : End-to-End Video-Language Transformers with Masked Visual-token Modeling

Nov 24, 2021 Tsu-Jui Fu, Linjie Li, Zhe Gan, Kevin Lin, William Yang Wang, Lijuan Wang, Zicheng Liu

A great challenge in video-language (VidL) modeling lies in the disconnection between fixed video representations extracted from image/video understanding models and downstream VidL data. Recent studies try to mitigate this disconnection via end-to-end training. To make it computationally feasible, prior works tend to "imagify" video inputs, i.e., a handful of sparsely sampled frames are fed into a 2D CNN, followed by a simple mean-pooling or concatenation to obtain the overall video representations. Although achieving promising results, such simple approaches may lose temporal information that is essential for performing downstream VidL tasks. In this work, we present VIOLET, a fully end-to-end VIdeO-LanguagE Transformer, which adopts a video transformer to explicitly model the temporal dynamics of video inputs. Further, unlike previous studies that found pre-training tasks on video inputs (e.g., masked frame modeling) not very effective, we design a new pre-training task, Masked Visual-token Modeling (MVM), for better video modeling. Specifically, the original video frame patches are "tokenized" into discrete visual tokens, and the goal is to recover the original visual tokens based on the masked patches. Comprehensive analysis demonstrates the effectiveness of both explicit temporal modeling via video transformer and MVM. As a result, VIOLET achieves new state-of-the-art performance on 5 video question answering tasks and 4 text-to-video retrieval tasks.
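The Masked Visual-token Modeling objective described above can be sketched as follows: each patch has a discrete visual token id, a subset of patches is hidden, and the model is scored by cross-entropy on the original token ids at the hidden positions only. The tokenizer and the transformer are not shown, and the masking ratio, the `MASK` placeholder, and the function names are assumptions for illustration rather than details taken from the abstract.

```python
import math
import random

MASK = -1  # hypothetical placeholder id marking a hidden patch


def mask_visual_tokens(token_ids, mask_ratio=0.15, rng=random):
    """Hide a random subset of patches; return the masked sequence and targets."""
    n_mask = max(1, round(mask_ratio * len(token_ids)))
    positions = sorted(rng.sample(range(len(token_ids)), n_mask))
    masked = list(token_ids)
    targets = {}
    for p in positions:
        targets[p] = token_ids[p]  # original visual token to recover
        masked[p] = MASK
    return masked, targets


def mvm_loss(logits, targets):
    """Mean cross-entropy over masked positions.

    logits[p] holds one score per entry of the visual-token vocabulary.
    """
    total = 0.0
    for p, tok in targets.items():
        row = logits[p]
        m = max(row)
        log_z = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_z - row[tok]
    return total / len(targets)


if __name__ == "__main__":
    tokens = [3, 1, 4, 1, 5, 2, 6, 0]  # one visual token id per patch
    masked, targets = mask_visual_tokens(tokens, 0.25, random.Random(0))
    uniform = [[0.0] * 8 for _ in tokens]  # stand-in for model predictions
    print(masked, targets, mvm_loss(uniform, targets))
```

With uniform predictions over a vocabulary of size V the loss equals log V, which gives a simple reference point for an untrained model.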

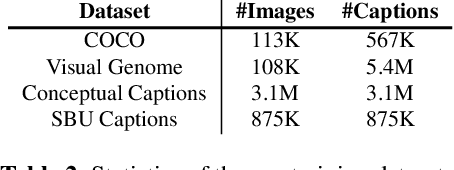

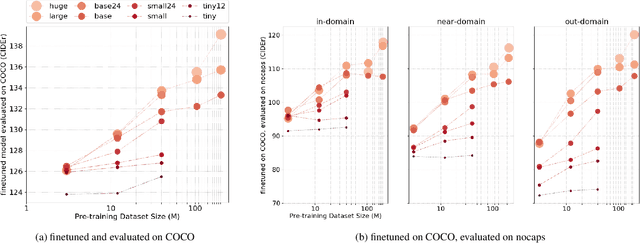

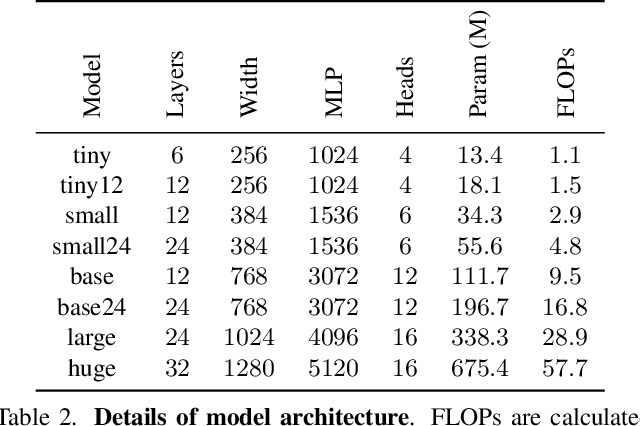

Scaling Up Vision-Language Pre-training for Image Captioning

Nov 24, 2021 Xiaowei Hu, Zhe Gan, Jianfeng Wang, Zhengyuan Yang, Zicheng Liu, Yumao Lu, Lijuan Wang

In recent years, we have witnessed a significant performance boost in the image captioning task based on vision-language pre-training (VLP). Scale is believed to be an important factor for this advance. However, most existing work only focuses on pre-training transformers with moderate sizes (e.g., 12 or 24 layers) on roughly 4 million images. In this paper, we present LEMON, a LargE-scale iMage captiONer, and provide the first empirical study on the scaling behavior of VLP for image captioning. We use the state-of-the-art VinVL model as our reference model, which consists of an image feature extractor and a transformer model, and scale the transformer both up and down, with model sizes ranging from 13 to 675 million parameters. In terms of data, we conduct experiments with up to 200 million image-text pairs which are automatically collected from the web based on the alt attribute of the image (dubbed ALT200M). Extensive analysis helps to characterize the performance trend as the model size and the pre-training data size increase. We also compare different training recipes, especially for training on large-scale noisy data. As a result, LEMON achieves new state-of-the-art results on several major image captioning benchmarks, including COCO Caption, nocaps, and Conceptual Captions. We also show LEMON can generate captions with long-tail visual concepts when used in a zero-shot manner.
