Yunpeng Zhai

Peking University

Agentic Memory Enhanced Recursive Reasoning for Root Cause Localization in Microservices

Jan 06, 2026

Abstract: As contemporary microservice systems become increasingly popular and complex (often comprising hundreds or even thousands of fine-grained, interdependent subsystems), they are experiencing more frequent failures. Ensuring system reliability thus demands accurate root cause localization. While many traditional graph-based and deep learning approaches have been explored for this task, they often rely heavily on pre-defined schemas that struggle to adapt to evolving operational contexts. Consequently, a number of LLM-based methods have recently been proposed. However, these methods still face two major limitations: shallow, symptom-centric reasoning that undermines accuracy, and a lack of cross-alert reuse that leads to redundant reasoning and high latency. In this paper, we conduct a comprehensive study of how Site Reliability Engineers (SREs) localize the root causes of failures, drawing insights from professionals across multiple organizations. Our investigation reveals that expert root cause analysis exhibits three key characteristics: recursiveness, multi-dimensional expansion, and cross-modal reasoning. Motivated by these findings, we introduce AMER-RCL, an agentic memory enhanced recursive reasoning framework for root cause localization in microservices. AMER-RCL employs the Recursive Reasoning RCL engine, a multi-agent framework that performs recursive reasoning on each alert to progressively refine candidate causes, while Agentic Memory incrementally accumulates and reuses reasoning from prior alerts within a time window to reduce redundant exploration and lower inference latency. Experimental results demonstrate that AMER-RCL consistently outperforms state-of-the-art methods in both localization accuracy and inference efficiency.

Hypothesize-Then-Verify: Speculative Root Cause Analysis for Microservices with Pathwise Parallelism

Jan 06, 2026

Abstract: Microservice systems have become the backbone of cloud-native enterprise applications due to their resource elasticity, loosely coupled architecture, and lightweight deployment. Yet, the intrinsic complexity and dynamic runtime interactions of such systems inevitably give rise to anomalies. Ensuring system reliability therefore hinges on effective root cause analysis (RCA), which entails not only localizing the source of anomalies but also characterizing the underlying failures in a timely and interpretable manner. Recent advances in intelligent RCA techniques, particularly those powered by large language models (LLMs), have demonstrated promising capabilities, as LLMs reduce reliance on handcrafted features while offering cross-platform adaptability, task generalization, and flexibility. However, existing LLM-based methods still suffer from two critical limitations: (a) limited exploration diversity, which undermines accuracy, and (b) heavy dependence on large-scale LLMs, which results in slow inference. To overcome these challenges, we propose SpecRCA, a speculative root cause analysis framework for microservices that adopts a hypothesize-then-verify paradigm. SpecRCA first leverages a hypothesis drafting module to rapidly generate candidate root causes, and then employs a parallel root cause verifier to efficiently validate them. Preliminary experiments on the AIOps 2022 dataset demonstrate that SpecRCA achieves superior accuracy and efficiency compared to existing approaches, highlighting its potential as a practical solution for scalable and interpretable RCA in complex microservice environments.
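The hypothesize-then-verify pattern described in this abstract can be illustrated with a minimal sketch. This is not SpecRCA's implementation: the functions `draft_hypotheses`, `verify`, and `localize`, the alert dictionary layout, and the error-rate scoring rule are all hypothetical stand-ins chosen only to show a cheap drafting step followed by parallel verification.

```python
from concurrent.futures import ThreadPoolExecutor

def draft_hypotheses(alert):
    """Hypothetical cheap drafter: propose every service on the alert's call path."""
    return list(alert["call_path"])

def verify(alert, candidate):
    """Hypothetical verifier: score a candidate by its observed error rate."""
    return candidate, alert["error_rate"].get(candidate, 0.0)

def localize(alert, max_workers=4):
    """Draft candidates first, then verify them in parallel and keep the best one."""
    candidates = draft_hypotheses(alert)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        scored = list(pool.map(lambda c: verify(alert, c), candidates))
    return max(scored, key=lambda item: item[1])[0]

# Toy alert (assumed layout, for illustration only).
alert = {
    "call_path": ["gateway", "cart", "db"],
    "error_rate": {"gateway": 0.02, "cart": 0.05, "db": 0.71},
}
print(localize(alert))
```

In a real system the drafter and verifier would presumably be model calls of different cost; the thread pool here only shows that candidates can be checked independently of one another.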

d-TreeRPO: Towards More Reliable Policy Optimization for Diffusion Language Models

Dec 10, 2025

Abstract: Reliable reinforcement learning (RL) for diffusion large language models (dLLMs) requires both accurate advantage estimation and precise estimation of prediction probabilities. Existing RL methods for dLLMs fall short in both aspects: they rely on coarse or unverifiable reward signals, and they estimate prediction probabilities without accounting for the bias relative to the true, unbiased expected prediction probability that properly integrates over all possible decoding orders. To mitigate these issues, we propose d-TreeRPO, a reliable RL framework for dLLMs that leverages tree-structured rollouts and bottom-up advantage computation based on verifiable outcome rewards to provide fine-grained and verifiable step-wise reward signals. When estimating the conditional transition probability from a parent node to a child node, we theoretically analyze the estimation error between the unbiased expected prediction probability and the estimate obtained via a single forward pass, and find that higher prediction confidence leads to lower estimation error. Guided by this analysis, we introduce a time-scheduled self-distillation loss during training that enhances prediction confidence in later training stages, thereby enabling more accurate probability estimation and improved convergence. Experiments show that d-TreeRPO outperforms existing baselines and achieves significant gains on multiple reasoning benchmarks, including +86.2 on Sudoku, +51.6 on Countdown, +4.5 on GSM8K, and +5.3 on Math500. Ablation studies and computational cost analyses further demonstrate the effectiveness and practicality of our design choices.
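One way to picture bottom-up advantage computation over a rollout tree is the sketch below. The abstract does not give the exact formula, so the rules used here are assumptions: a leaf's value is its outcome reward, an internal node's value is the mean of its children's values, and a child's advantage is its value minus its parent's value. The `Node` class and `backup` function are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Node:
    reward: Optional[float] = None              # outcome reward, set on leaves only
    children: List["Node"] = field(default_factory=list)
    value: float = 0.0
    advantage: float = 0.0

def backup(node: Node) -> float:
    """Assumed rule: propagate leaf rewards upward as child means."""
    if not node.children:
        node.value = float(node.reward)         # leaves must carry a reward
    else:
        node.value = sum(backup(c) for c in node.children) / len(node.children)
        for child in node.children:
            # Assumed rule: step-wise advantage relative to the parent.
            child.advantage = child.value - node.value
    return node.value

# Toy tree: branch a reaches one correct answer, branch b reaches none.
a = Node(children=[Node(reward=1.0), Node(reward=0.0)])
b = Node(children=[Node(reward=0.0), Node(reward=0.0)])
root = Node(children=[a, b])
backup(root)
print(root.value, a.advantage, b.advantage)
```

Under these assumed rules, each intermediate step receives a signal derived only from verifiable leaf outcomes, which is the general idea the abstract describes.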

AgentEvolver: Towards Efficient Self-Evolving Agent System

Nov 13, 2025

Abstract: Autonomous agents powered by large language models (LLMs) have the potential to significantly enhance human productivity by reasoning, using tools, and executing complex tasks in diverse environments. However, current approaches to developing such agents remain costly and inefficient, as they typically require manually constructed task datasets and reinforcement learning (RL) pipelines with extensive random exploration. These limitations lead to prohibitively high data-construction costs, low exploration efficiency, and poor sample utilization. To address these challenges, we present AgentEvolver, a self-evolving agent system that leverages the semantic understanding and reasoning capabilities of LLMs to drive autonomous agent learning. AgentEvolver introduces three synergistic mechanisms: (i) self-questioning, which enables curiosity-driven task generation in novel environments, reducing dependence on handcrafted datasets; (ii) self-navigating, which improves exploration efficiency through experience reuse and hybrid policy guidance; and (iii) self-attributing, which enhances sample efficiency by assigning differentiated rewards to trajectory states and actions based on their contribution. By integrating these mechanisms into a unified framework, AgentEvolver enables scalable, cost-effective, and continual improvement of agent capabilities. Preliminary experiments indicate that AgentEvolver achieves more efficient exploration, better sample utilization, and faster adaptation compared to traditional RL-based baselines.

A Survey on Parallel Text Generation: From Parallel Decoding to Diffusion Language Models

Aug 12, 2025

Abstract: As text generation has become a core capability of modern Large Language Models (LLMs), it underpins a wide range of downstream applications. However, most existing LLMs rely on autoregressive (AR) generation, producing one token at a time based on previously generated context, which limits generation speed due to the inherently sequential nature of the process. To address this challenge, an increasing number of researchers have begun exploring parallel text generation, a broad class of techniques aimed at breaking the token-by-token generation bottleneck and improving inference efficiency. Despite growing interest, there remains a lack of comprehensive analysis on what specific techniques constitute parallel text generation and how they improve inference performance. To bridge this gap, we present a systematic survey of parallel text generation methods. We categorize existing approaches into AR-based and Non-AR-based paradigms, and provide a detailed examination of the core techniques within each category. Following this taxonomy, we assess their theoretical trade-offs in terms of speed, quality, and efficiency, and examine their potential for combination and comparison with alternative acceleration strategies. Finally, based on our findings, we highlight recent advancements, identify open challenges, and outline promising directions for future research in parallel text generation.

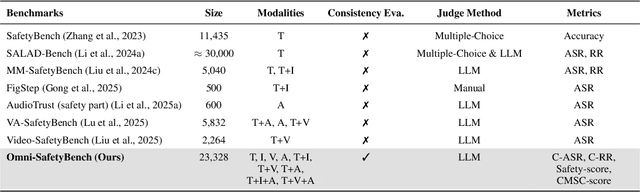

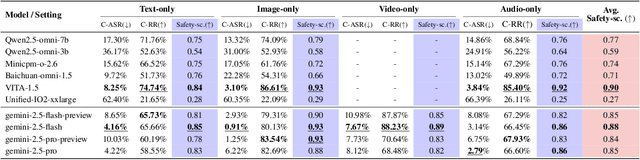

Omni-SafetyBench: A Benchmark for Safety Evaluation of Audio-Visual Large Language Models

Aug 10, 2025

Abstract: The rise of Omni-modal Large Language Models (OLLMs), which integrate visual and auditory processing with text, necessitates robust safety evaluations to mitigate harmful outputs. However, no dedicated benchmarks currently exist for OLLMs, and prior benchmarks designed for other LLMs lack the ability to assess safety performance under audio-visual joint inputs or cross-modal safety consistency. To fill this gap, we introduce Omni-SafetyBench, the first comprehensive parallel benchmark for OLLM safety evaluation, featuring 24 modality combinations and variations with 972 samples each, including dedicated audio-visual harm cases. Considering OLLMs' comprehension challenges with complex omni-modal inputs and the need for cross-modal consistency evaluation, we propose tailored metrics: a Safety-score based on conditional Attack Success Rate (C-ASR) and Refusal Rate (C-RR) to account for comprehension failures, and a Cross-Modal Safety Consistency Score (CMSC-score) to measure consistency across modalities. Evaluating 6 open-source and 4 closed-source OLLMs reveals critical vulnerabilities: (1) no model excels in both overall safety and consistency, with only 3 models achieving over 0.6 in both metrics and the top performer scoring around 0.8; (2) safety defenses weaken with complex inputs, especially audio-visual joint inputs; (3) severe weaknesses persist, with some models scoring as low as 0.14 on specific modalities. Our benchmark and metrics highlight urgent needs for enhanced OLLM safety, providing a foundation for future improvements.

Provoking Multi-modal Few-Shot LVLM via Exploration-Exploitation In-Context Learning

Jun 11, 2025

Abstract: In-context learning (ICL), a predominant trend in instruction learning, aims at enhancing the performance of large language models by providing clear task guidance and examples, improving their capability in task understanding and execution. This paper investigates ICL on Large Vision-Language Models (LVLMs) and explores the policies of multi-modal demonstration selection. Existing research efforts in ICL face significant challenges: first, they rely on pre-defined demonstrations or heuristic selection strategies based on human intuition, which are usually inadequate for covering diverse task requirements, leading to sub-optimal solutions; second, individually selecting each demonstration fails to model the interactions between them, resulting in information redundancy. Unlike these prevailing efforts, we propose a new exploration-exploitation reinforcement learning framework, which explores policies to fuse multi-modal information and adaptively select adequate demonstrations as an integrated whole. The framework allows LVLMs to optimize themselves by continually refining their demonstrations through self-exploration, enabling them to autonomously identify and generate the most effective selection policies for in-context learning. Experimental results verify the superior performance of our approach on four Visual Question-Answering (VQA) datasets, demonstrating its effectiveness in enhancing the generalization capability of few-shot LVLMs.

Population-Based Evolutionary Gaming for Unsupervised Person Re-identification

Jun 08, 2023

Abstract: Unsupervised person re-identification has achieved great success through the self-improvement of individual neural networks. However, limited by the lack of diversity of discriminant information, a single network has difficulty learning sufficient discrimination ability by itself under unsupervised conditions. To address this limitation, we develop a population-based evolutionary gaming (PEG) framework in which a population of diverse neural networks is trained concurrently through iterative selection, reproduction, mutation, and population mutual learning. Specifically, the selection of networks to preserve is modeled as a cooperative game and solved by best-response dynamics; reproduction and mutation are implemented by cloning networks and fluctuating their hyper-parameters to learn more diversity; and population mutual learning improves the discrimination of networks through knowledge distillation from each other within the population. In addition, we propose a cross-reference scatter (CRS) to approximately evaluate re-ID models without labeled samples and adopt it as the criterion of network selection in PEG. CRS measures a model's performance by indirectly estimating the accuracy of its predicted pseudo-labels according to the cohesion and separation of the feature space. Extensive experiments demonstrate that (1) CRS approximately measures the performance of models without labeled samples; and (2) PEG produces new state-of-the-art accuracy for person re-identification, indicating the great potential of population-based network cooperative training for unsupervised learning.

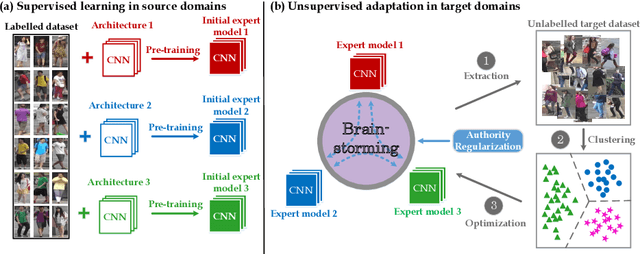

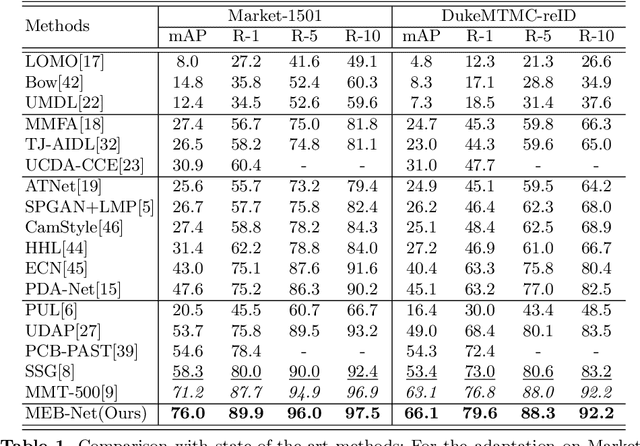

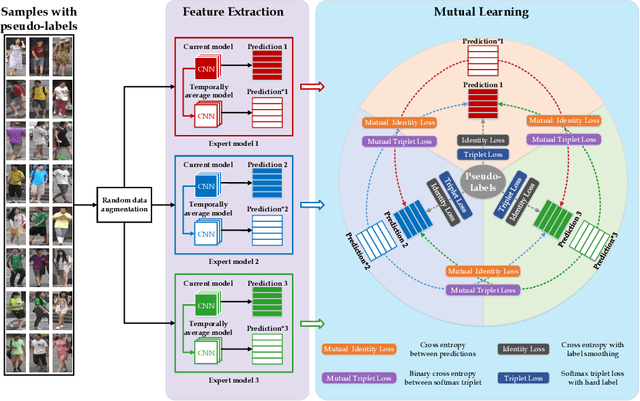

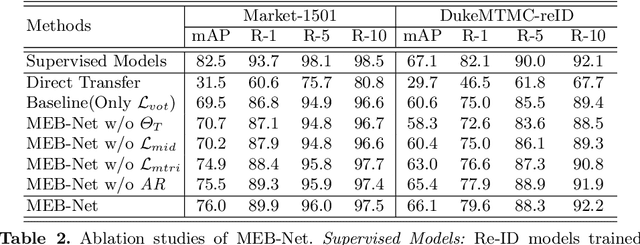

Multiple Expert Brainstorming for Domain Adaptive Person Re-identification

Jul 13, 2020

Abstract: The best-performing deep neural models are often ensembles of multiple base-level networks. Nevertheless, ensemble learning for domain adaptive person re-ID remains unexplored. In this paper, we propose a multiple expert brainstorming network (MEB-Net) for domain adaptive person re-ID, opening up a promising direction for the model ensemble problem under unsupervised conditions. MEB-Net adopts a mutual learning strategy, where multiple networks with different architectures are pre-trained within a source domain as expert models equipped with specific features and knowledge, while the adaptation is then accomplished through brainstorming (mutual learning) among expert models. MEB-Net accommodates the heterogeneity of experts learned with different architectures and enhances the discrimination capability of the adapted re-ID model by introducing a regularization scheme based on the authority of experts. Extensive experiments on large-scale datasets (Market-1501 and DukeMTMC-reID) demonstrate the superior performance of MEB-Net over the state of the art.

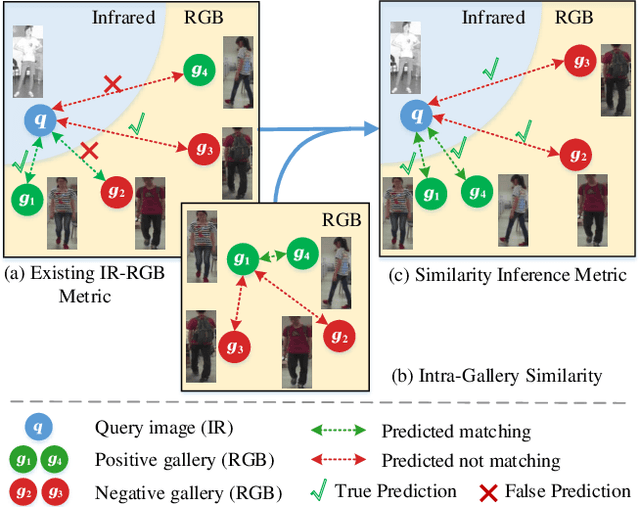

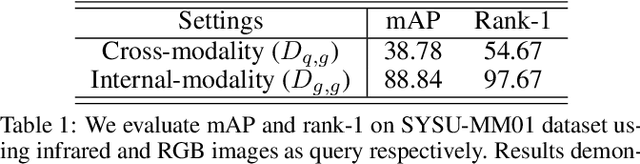

A Similarity Inference Metric for RGB-Infrared Cross-Modality Person Re-identification

Jul 03, 2020

Abstract: RGB-Infrared (IR) cross-modality person re-identification (re-ID), which aims to match an IR image against an RGB gallery or vice versa, is a challenging task due to the large discrepancy between IR and RGB modalities. Existing methods typically address this challenge by aligning feature distributions or image styles across modalities, whereas the very useful similarities among gallery samples of the same modality (i.e., intra-modality sample similarities) are largely neglected. This paper presents a novel similarity inference metric (SIM) that exploits the intra-modality sample similarities to circumvent the cross-modality discrepancy, targeting optimal cross-modality image matching. SIM works by successive similarity graph reasoning and mutual nearest-neighbor reasoning, which mine cross-modality sample similarities by leveraging intra-modality sample similarities from two different perspectives. Extensive experiments on two cross-modality re-ID datasets (SYSU-MM01 and RegDB) show that SIM achieves significant accuracy improvement with little extra training compared with the state of the art.
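As background for the mutual nearest-neighbor idea mentioned in this abstract, the sketch below shows the generic mutual k-nearest-neighbor test between a query set and a gallery set. It is not SIM's actual procedure, which the abstract does not specify; the function names and the toy similarity matrix are hypothetical.

```python
def k_nearest(similarities, k):
    """Indices of the k largest similarity values in a row."""
    order = sorted(range(len(similarities)), key=lambda j: -similarities[j])
    return set(order[:k])

def mutual_nearest_pairs(sim, k):
    """sim[i][j] is the similarity between query i and gallery item j.

    A pair (i, j) is kept only if j is among i's top-k gallery items
    and i is among j's top-k queries.
    """
    n_q, n_g = len(sim), len(sim[0])
    q_to_g = [k_nearest(sim[i], k) for i in range(n_q)]
    g_to_q = [k_nearest([sim[i][j] for i in range(n_q)], k) for j in range(n_g)]
    return [(i, j) for i in range(n_q) for j in sorted(q_to_g[i]) if i in g_to_q[j]]

# Toy similarities: 2 queries (rows) against 3 gallery items (columns).
sim = [
    [0.9, 0.2, 0.1],
    [0.8, 0.7, 0.3],
]
print(mutual_nearest_pairs(sim, k=1))
```

Here query 1 also ranks gallery item 0 first, but that item prefers query 0, so only the pair that agrees in both directions survives.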
