Wei-Shi Zheng

PSLT: A Light-weight Vision Transformer with Ladder Self-Attention and Progressive Shift

Apr 07, 2023

Abstract:Vision Transformer (ViT) has shown great potential for various visual tasks due to its ability to model long-range dependency. However, ViT requires a large amount of computing resources to compute the global self-attention. In this work, we propose a ladder self-attention block with multiple branches and a progressive shift mechanism to develop a light-weight transformer backbone that requires less computing resources (e.g. a relatively small number of parameters and FLOPs), termed Progressive Shift Ladder Transformer (PSLT). First, the ladder self-attention block reduces the computational cost by modelling local self-attention in each branch. Meanwhile, the progressive shift mechanism is proposed to enlarge the receptive field in the ladder self-attention block by modelling diverse local self-attention for each branch and interacting among these branches. Second, the input feature of the ladder self-attention block is split equally along the channel dimension for each branch, which considerably reduces the computational cost in the ladder self-attention block (with nearly 1/3 the amount of parameters and FLOPs), and the outputs of these branches are then combined by a pixel-adaptive fusion. Therefore, the ladder self-attention block with a relatively small number of parameters and FLOPs is capable of modelling long-range interactions. Based on the ladder self-attention block, PSLT performs well on several vision tasks, including image classification, object detection and person re-identification. On the ImageNet-1k dataset, PSLT achieves a top-1 accuracy of 79.9% with 9.2M parameters and 1.9G FLOPs, which is comparable to several existing models with more than 20M parameters and 4G FLOPs. Code is available at https://isee-ai.cn/wugaojie/PSLT.html.

* Accepted to IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023 (Submission date: 08-Jul-202)
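
The "nearly 1/3" figure in the PSLT abstract is consistent with simple arithmetic on an equal channel split. The sketch below is only an illustration, not the PSLT implementation: it assumes three branches, assumes each branch has its own square query, key, value and output projections, and ignores biases. The function name `projection_params` is hypothetical.

```python
def projection_params(channels: int, branches: int = 1) -> int:
    """Count projection weights when `channels` are split equally across
    `branches` independent attention branches (biases ignored).

    Assumption: every branch owns four square projections (Q, K, V, output).
    """
    if channels % branches != 0:
        raise ValueError("channels must divide evenly across branches")
    per_branch = channels // branches
    return branches * 4 * per_branch * per_branch


full = projection_params(192)               # one branch over all channels
split = projection_params(192, branches=3)  # three branches, 64 channels each
ratio = split / full                        # equals 1 / branches
```

With B equal branches the count falls from 4C² to 4C²/B, so three branches give one third of the projection weights. The same factor applies to the multiply-accumulate cost of those projections.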

DilateFormer: Multi-Scale Dilated Transformer for Visual Recognition

Feb 03, 2023

Abstract:As a de facto solution, the vanilla Vision Transformers (ViTs) are encouraged to model long-range dependencies between arbitrary image patches while the global attended receptive field leads to quadratic computational cost. Another branch of Vision Transformers exploits local attention inspired by CNNs, which only models the interactions between patches in small neighborhoods. Although such a solution reduces the computational cost, it naturally suffers from small attended receptive fields, which may limit the performance. In this work, we explore effective Vision Transformers to pursue a preferable trade-off between the computational complexity and size of the attended receptive field. By analyzing the patch interaction of global attention in ViTs, we observe two key properties in the shallow layers, namely locality and sparsity, indicating the redundancy of global dependency modeling in shallow layers of ViTs. Accordingly, we propose Multi-Scale Dilated Attention (MSDA) to model local and sparse patch interaction within the sliding window. With a pyramid architecture, we construct a Multi-Scale Dilated Transformer (DilateFormer) by stacking MSDA blocks at low-level stages and global multi-head self-attention blocks at high-level stages. Our experimental results show that our DilateFormer achieves state-of-the-art performance on various vision tasks. On the ImageNet-1K classification task, DilateFormer achieves comparable performance with 70% fewer FLOPs compared with existing state-of-the-art models. Our DilateFormer-Base achieves 85.6% top-1 accuracy on the ImageNet-1K classification task, 53.5% box mAP/46.1% mask mAP on the COCO object detection/instance segmentation task and 51.1% MS mIoU on the ADE20K semantic segmentation task.
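
To make "local and sparse patch interaction within the sliding window" concrete, the sketch below lists which patches a query patch would attend to for a given dilation rate. It is a hedged illustration, not the DilateFormer code: the function name `dilated_window`, the 3x3 kernel and the choice to drop out-of-bounds positions (rather than pad) are all assumptions.

```python
def dilated_window(center, height, width, kernel=3, dilation=1):
    """Return the (row, col) patch positions inside a dilated sliding window.

    Assumption: positions that fall outside the feature map are dropped.
    """
    row0, col0 = center
    half = kernel // 2
    positions = []
    for d_row in range(-half, half + 1):
        for d_col in range(-half, half + 1):
            row = row0 + d_row * dilation
            col = col0 + d_col * dilation
            if 0 <= row < height and 0 <= col < width:
                positions.append((row, col))
    return positions


dense = dilated_window((4, 4), height=9, width=9, dilation=1)
sparse = dilated_window((4, 4), height=9, width=9, dilation=2)
```

Both windows contain the same number of keys, so the attention cost per query is unchanged, but the dilated window spans (kernel - 1) * dilation + 1 patches per side. Using different dilation rates in different heads is one way to obtain several receptive-field sizes at once.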

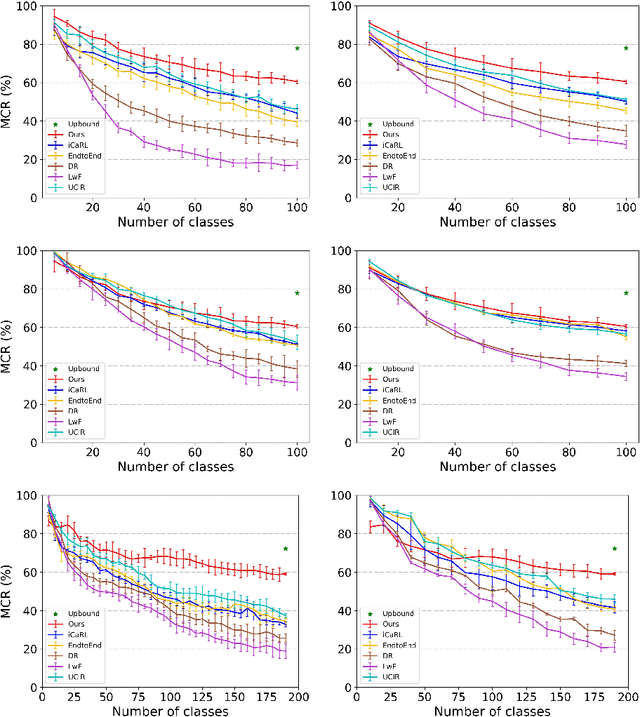

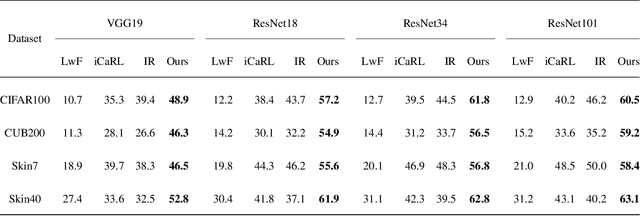

Adaptively Integrated Knowledge Distillation and Prediction Uncertainty for Continual Learning

Jan 18, 2023

Abstract:Current deep learning models often suffer from catastrophic forgetting of old knowledge when continually learning new knowledge. Existing strategies to alleviate this issue often fix the trade-off between keeping old knowledge (stability) and learning new knowledge (plasticity). However, the stability-plasticity trade-off during continual learning may need to be dynamically changed for better model performance. In this paper, we propose two novel ways to adaptively balance model stability and plasticity. The first is to adaptively integrate multiple levels of old knowledge and transfer it to each block level in the new model. The second uses the prediction uncertainty of old knowledge to naturally tune the importance of learning new knowledge during model training. To the best of our knowledge, this is the first work to connect model prediction uncertainty and knowledge distillation for continual learning. In addition, this paper applies a modified CutMix specifically to augment the data for old knowledge, further alleviating the catastrophic forgetting issue. Extensive evaluations on the CIFAR100 and ImageNet datasets confirmed the effectiveness of the proposed method for continual learning.

Text-Adaptive Multiple Visual Prototype Matching for Video-Text Retrieval

Sep 27, 2022

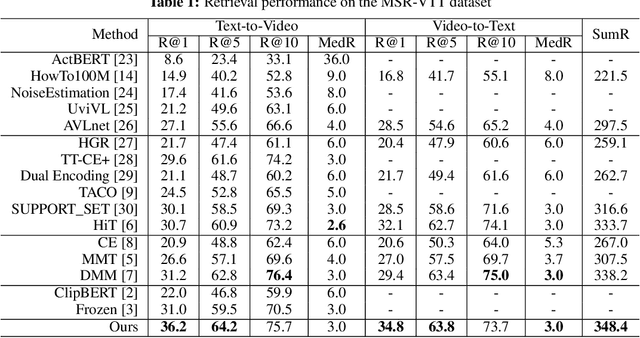

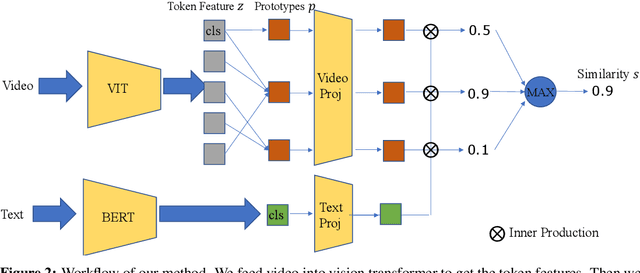

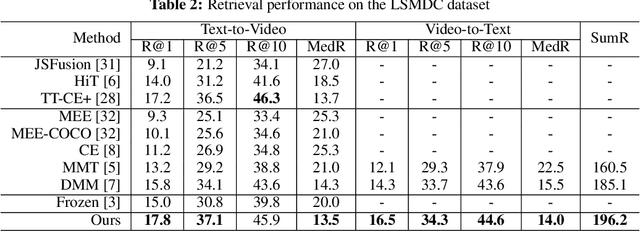

Abstract:Cross-modal retrieval between videos and texts has gained increasing research interest due to the rapid emergence of videos on the web. Generally, a video contains rich instance and event information and the query text only describes a part of the information. Thus, a video can correspond to multiple different text descriptions and queries. We call this phenomenon the "Video-Text Correspondence Ambiguity" problem. Current techniques mostly concentrate on mining local or multi-level alignment between contents of a video and text (e.g., object to entity and action to verb). It is difficult for these methods to alleviate the video-text correspondence ambiguity by describing a video using only one single feature, which is required to be matched with multiple different text features at the same time. To address this problem, we propose a Text-Adaptive Multiple Visual Prototype Matching model, which automatically captures multiple prototypes to describe a video by adaptive aggregation of video token features. Given a query text, the similarity is determined by the most similar prototype to find correspondence in the video, which is termed text-adaptive matching. To learn diverse prototypes for representing the rich information in videos, we propose a variance loss to encourage different prototypes to attend to different contents of the video. Our method outperforms state-of-the-art methods on four public video retrieval datasets.

* NeurIPS 2022
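
The text-adaptive matching rule in the abstract above (the video-text similarity is that of the most similar prototype) can be sketched in a few lines. This is a minimal illustration under stated assumptions: cosine similarity is assumed as the similarity measure, the feature vectors are toy values, and the names `cosine` and `text_adaptive_similarity` are hypothetical rather than taken from the paper's code.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def text_adaptive_similarity(text_feature, prototypes):
    """Score a video against a text by its best-matching prototype."""
    return max(cosine(text_feature, p) for p in prototypes)


# Toy video described by two prototypes that capture different content.
video_prototypes = [[1.0, 0.0], [0.0, 1.0]]
score_first = text_adaptive_similarity([2.0, 0.0], video_prototypes)
score_second = text_adaptive_similarity([0.0, 3.0], video_prototypes)
```

Two texts that describe different parts of the same video can both reach a high score here, which a single averaged video feature could not give them at the same time.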

Learning Discriminative Representation via Metric Learning for Imbalanced Medical Image Classification

Jul 14, 2022

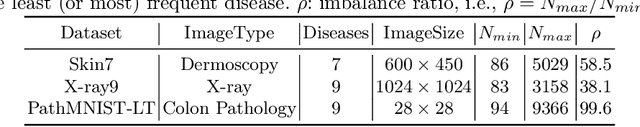

Abstract:Data imbalance between common and rare diseases during model training often causes intelligent diagnosis systems to have biased predictions towards common diseases. The state-of-the-art approaches apply a two-stage learning framework to alleviate the class-imbalance issue, where the first stage focuses on training a general feature extractor and the second stage focuses on fine-tuning the classifier head for class rebalancing. However, existing two-stage approaches do not consider the fine-grained property between different diseases, often making the first stage less effective for medical image classification than for natural image classification tasks. In this study, we propose embedding metric learning into the first stage of the two-stage framework specifically to help the feature extractor learn to extract more discriminative feature representations. Extensive experiments mainly on three medical image datasets show that the proposed approach consistently outperforms existing one-stage and two-stage approaches, suggesting that metric learning can be used as an effective plug-in component in the two-stage framework for fine-grained class-imbalanced image classification tasks.

STVGFormer: Spatio-Temporal Video Grounding with Static-Dynamic Cross-Modal Understanding

Jul 06, 2022

Abstract:In this technical report, we introduce our solution to the human-centric spatio-temporal video grounding task. We propose a concise and effective framework named STVGFormer, which models spatio-temporal visual-linguistic dependencies with a static branch and a dynamic branch. The static branch performs cross-modal understanding in a single frame and learns to localize the target object spatially according to intra-frame visual cues like object appearances. The dynamic branch performs cross-modal understanding across multiple frames. It learns to predict the starting and ending time of the target moment according to dynamic visual cues like motions. Both the static and dynamic branches are designed as cross-modal transformers. We further design a novel static-dynamic interaction block to enable the static and dynamic branches to transfer useful and complementary information to each other, which is shown to be effective in improving the prediction on hard cases. Our proposed method achieved 39.6% vIoU and won first place in the HC-STVG track of the 4th Person in Context Challenge.

Weakly-supervised Action Localization via Hierarchical Mining

Jun 22, 2022

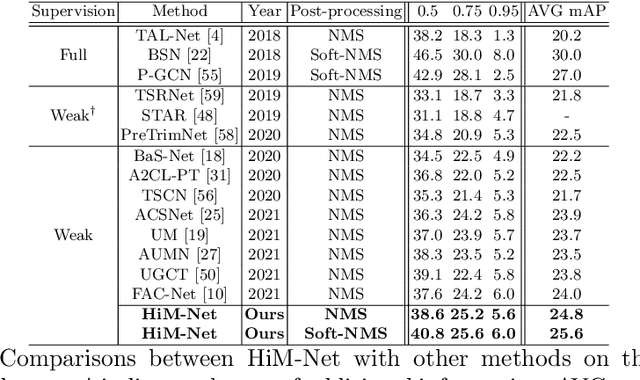

Abstract:Weakly-supervised action localization aims to localize and classify action instances in the given videos temporally with only video-level categorical labels. Thus, the crucial issue of existing weakly-supervised action localization methods is the limited supervision from the weak annotations for precise predictions. In this work, we propose a hierarchical mining strategy under video-level and snippet-level manners, i.e., hierarchical supervision and hierarchical consistency mining, to maximize the usage of the given annotations and prediction-wise consistency. To this end, a Hierarchical Mining Network (HiM-Net) is proposed. Concretely, it mines hierarchical supervision for classification in two grains: one is the video-level existence for ground truth categories captured by multiple instance learning; the other is the snippet-level inexistence for each negative-labeled category from the perspective of complementary labels, which is optimized by our proposed complementary label learning. As for hierarchical consistency, HiM-Net explores video-level co-action feature similarity and snippet-level foreground-background opposition, for discriminative representation learning and consistent foreground-background separation. Specifically, prediction variance is viewed as uncertainty to select the pairs with high consensus for proposed foreground-background collaborative learning. Comprehensive experimental results show that HiM-Net outperforms existing methods on THUMOS14 and ActivityNet1.3 datasets with large margins by hierarchically mining the supervision and consistency. Code will be available on GitHub.

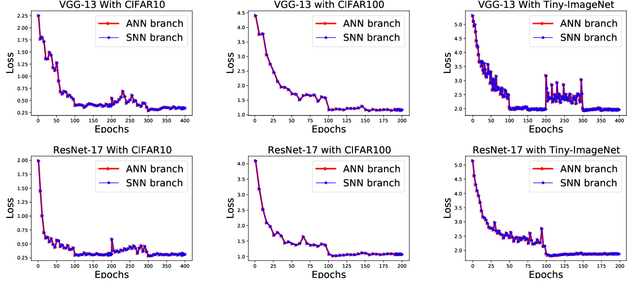

SNN2ANN: A Fast and Memory-Efficient Training Framework for Spiking Neural Networks

Jun 19, 2022

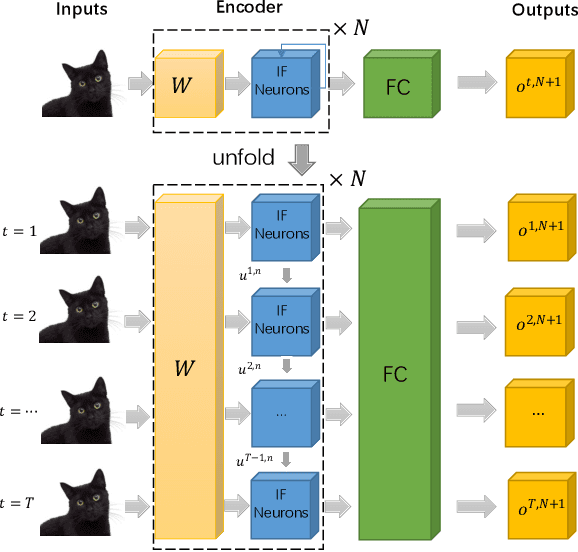

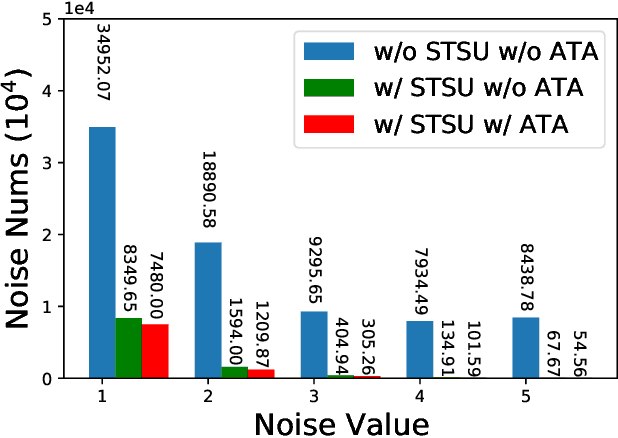

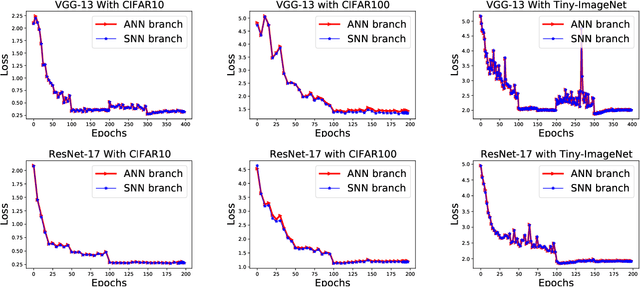

Abstract:Spiking neural networks are efficient computation models for low-power environments. Spike-based BP algorithms and ANN-to-SNN (ANN2SNN) conversions are successful techniques for SNN training. Nevertheless, spike-based BP training is slow and requires large memory costs. Though ANN2SNN provides a low-cost way to train SNNs, it requires many inference steps to mimic the well-trained ANN for good performance. In this paper, we propose an SNN-to-ANN (SNN2ANN) framework to train the SNN in a fast and memory-efficient way. The SNN2ANN consists of 2 components: a) a weight-sharing architecture between ANN and SNN and b) spiking mapping units. Firstly, the architecture trains the weight-sharing parameters on the ANN branch, resulting in fast training and low memory costs for SNN. Secondly, the spiking mapping units ensure that the activation values of the ANN are the spiking features. As a result, the classification error of the SNN can be optimized by training the ANN branch. Besides, we design an adaptive threshold adjustment (ATA) algorithm to address the noisy spike problem. Experimental results show that our SNN2ANN-based models perform well on the benchmark datasets (CIFAR10, CIFAR100, and Tiny-ImageNet). Moreover, the SNN2ANN can achieve comparable accuracy under 0.625x time steps, 0.377x training time, 0.27x GPU memory costs, and 0.33x spike activities of the Spike-based BP model.

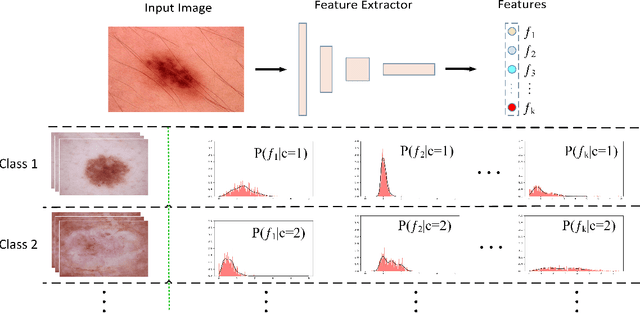

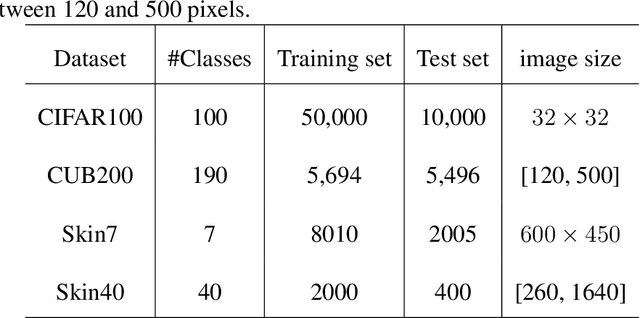

Continual Learning with Bayesian Model based on a Fixed Pre-trained Feature Extractor

Apr 28, 2022

Abstract:Deep learning has shown human-level performance in various applications. However, current deep learning models are characterised by catastrophic forgetting of old knowledge when learning new classes. This poses a challenge particularly in intelligent diagnosis systems where initially only training data of a limited number of diseases are available. In this case, updating the intelligent system with data of new diseases would inevitably downgrade its performance on previously learned diseases. Inspired by the process of learning new knowledge in human brains, we propose a Bayesian generative model for continual learning built on a fixed pre-trained feature extractor. In this model, knowledge of each old class can be compactly represented by a collection of statistical distributions, e.g. with Gaussian mixture models, and naturally kept from forgetting in continual learning over time. Unlike existing class-incremental learning methods, the proposed approach is not sensitive to the continual learning process and can also be applied to the data-incremental learning scenario. Experiments on multiple medical and natural image classification tasks showed that the proposed approach outperforms state-of-the-art approaches which even keep some images of old classes during continual learning of new classes.
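
The idea of keeping each class as a statistical distribution over features from a fixed extractor can be illustrated with a deliberately simplified sketch. It is not the paper's model: it assumes one diagonal Gaussian per class instead of a Gaussian mixture, equal class priors, and features that have already been extracted. The class name `GaussianClassMemory` and the toy feature values are hypothetical.

```python
import math


class GaussianClassMemory:
    """Stores one diagonal Gaussian per class over fixed-extractor features."""

    def __init__(self):
        self.stats = {}  # label -> (means, variances)

    def add_class(self, label, features, eps=1e-6):
        """Fit statistics for a new class; existing classes are not touched."""
        n = len(features)
        dim = len(features[0])
        means = [sum(f[d] for f in features) / n for d in range(dim)]
        variances = [
            sum((f[d] - means[d]) ** 2 for f in features) / n + eps
            for d in range(dim)
        ]
        self.stats[label] = (means, variances)

    def log_likelihood(self, label, x):
        """Log density of x under the stored Gaussian of `label`."""
        means, variances = self.stats[label]
        return sum(
            -0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
            for xi, m, v in zip(x, means, variances)
        )

    def predict(self, x):
        """Pick the class with the highest likelihood (equal priors assumed)."""
        return max(self.stats, key=lambda label: self.log_likelihood(label, x))


memory = GaussianClassMemory()
memory.add_class("old", [[0.0, 0.1], [0.2, -0.1], [-0.1, 0.0]])
old_stats = memory.stats["old"]
memory.add_class("new", [[3.0, 3.1], [2.9, 3.0], [3.2, 2.8]])
```

Because adding a class only writes new statistics, the stored description of earlier classes is unchanged by later updates. In this simplified setting that is what keeps old knowledge from being overwritten.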

Global Trajectory Helps Person Retrieval in a Camera Network

Apr 27, 2022

Abstract:We are concerned with retrieving a query person from the videos taken by a non-overlapping camera network. Existing methods often rely on pure visual matching or consider temporal constraints, but ignore the spatial information of the camera network. To address this problem, we propose a framework of person retrieval based on cross-camera trajectory generation which integrates both temporal and spatial information. To obtain the pedestrian trajectories, we propose a new cross-camera spatio-temporal model that integrates the walking habits of pedestrians and the path layout between cameras, forming a joint probability distribution. Such a spatio-temporal model among a camera network can be specified using sparsely sampled pedestrian data. Based on the spatio-temporal model, the cross-camera trajectories of a specific pedestrian can be extracted by the conditional random field model, and further optimized by the restricted nonnegative matrix factorization. Finally, a trajectory re-ranking technique is proposed to improve the person retrieval results. To verify the effectiveness of our approach, we build the first dataset of cross-camera pedestrian trajectories over an actual monitoring scenario, namely the Person Trajectory Dataset. Extensive experiments have verified the effectiveness and robustness of the proposed method.
