Reasoning About Physical Interactions with Object-Oriented Prediction and Planning

Jan 07, 2019 · Michael Janner, Sergey Levine, William T. Freeman, Joshua B. Tenenbaum, Chelsea Finn, Jiajun Wu

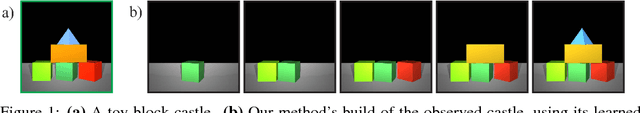

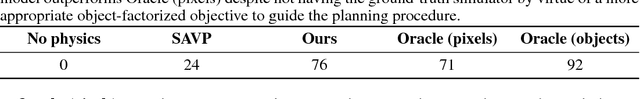

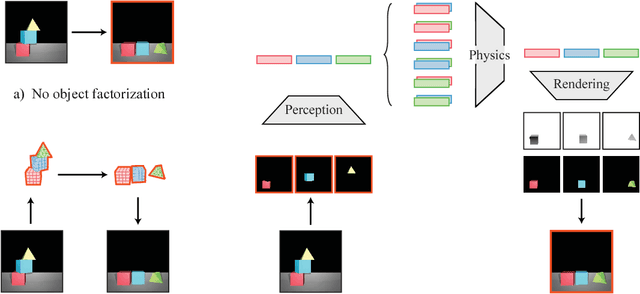

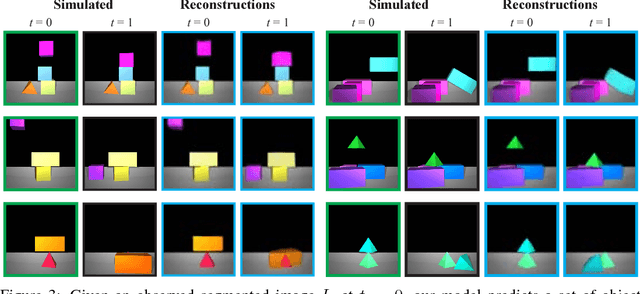

Object-based factorizations provide a useful level of abstraction for interacting with the world. Building explicit object representations, however, often requires supervisory signals that are difficult to obtain in practice. We present a paradigm for learning object-centric representations for physical scene understanding without direct supervision of object properties. Our model, Object-Oriented Prediction and Planning (O2P2), jointly learns a perception function to map from image observations to object representations, a pairwise physics interaction function to predict the time evolution of a collection of objects, and a rendering function to map objects back to pixels. For evaluation, we consider not only the accuracy of the physical predictions of the model, but also its utility for downstream tasks that require an actionable representation of intuitive physics. After training our model on an image prediction task, we can use its learned representations to build block towers more complicated than those observed during training.

Robustness to Out-of-Distribution Inputs via Task-Aware Generative Uncertainty

Dec 27, 2018 · Rowan McAllister, Gregory Kahn, Jeff Clune, Sergey Levine

Deep learning provides a powerful tool for machine perception when the observations resemble the training data. However, real-world robotic systems must react intelligently to their observations even in unexpected circumstances. This requires a system to reason about its own uncertainty given unfamiliar, out-of-distribution observations. Approximate Bayesian approaches are commonly used to estimate uncertainty for neural network predictions, but can struggle with out-of-distribution observations. Generative models can in principle detect out-of-distribution observations as those with a low estimated density. However, the mere presence of an out-of-distribution input does not by itself indicate an unsafe situation. In this paper, we present a method for uncertainty-aware robotic perception that combines generative modeling and model uncertainty to cope with uncertainty stemming from out-of-distribution states. Our method estimates an uncertainty measure about the model's prediction, taking into account an explicit (generative) model of the observation distribution to handle out-of-distribution inputs. This is accomplished by probabilistically projecting observations onto the training distribution, such that out-of-distribution inputs map to uncertain in-distribution observations, which in turn produce uncertain task-related predictions, but only if task-relevant parts of the image change. We evaluate our method on an action-conditioned collision prediction task with both simulated and real data, and demonstrate that our method of projecting out-of-distribution observations improves the performance of four standard Bayesian and non-Bayesian neural network approaches, offering more favorable trade-offs between the proportion of time a robot can remain autonomous and the proportion of impending crashes successfully avoided.

Learning to Walk via Deep Reinforcement Learning

Dec 26, 2018 · Tuomas Haarnoja, Aurick Zhou, Sehoon Ha, Jie Tan, George Tucker, Sergey Levine

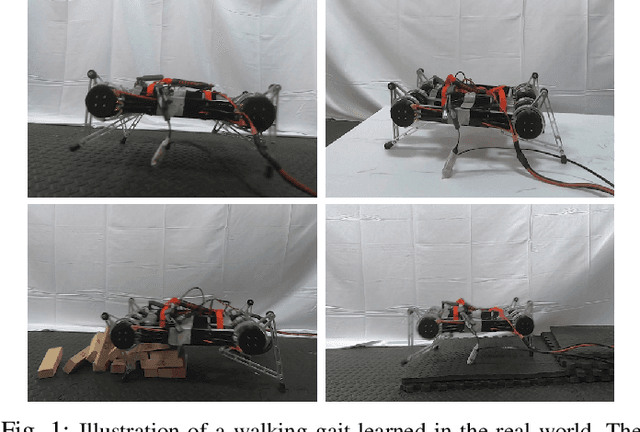

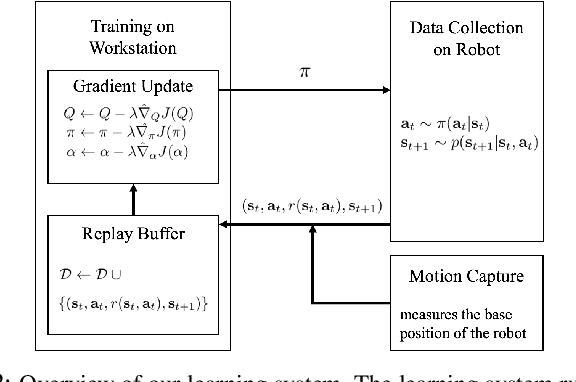

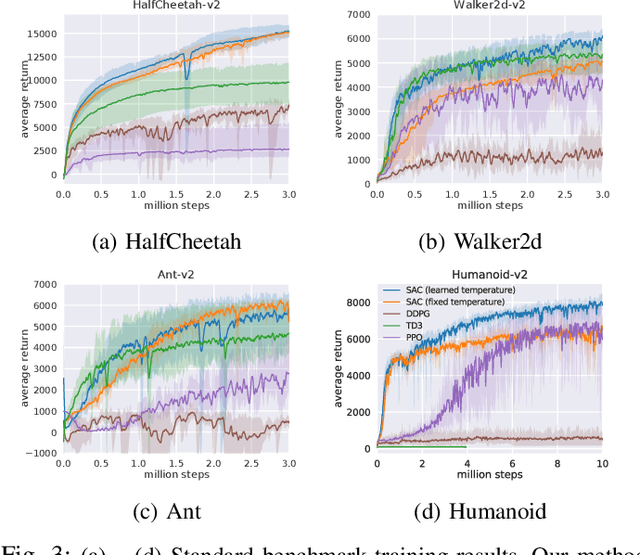

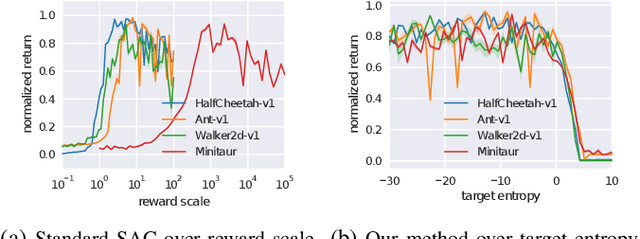

Deep reinforcement learning suggests the promise of fully automated learning of robotic control policies that directly map sensory inputs to low-level actions. However, applying deep reinforcement learning methods on real-world robots is exceptionally difficult, due both to the sample complexity and, just as importantly, the sensitivity of such methods to hyperparameters. While hyperparameter tuning can be performed in parallel in simulated domains, it is usually impractical to tune hyperparameters directly on real-world robotic platforms, especially legged platforms like quadrupedal robots that can be damaged through extensive trial-and-error learning. In this paper, we develop a stable variant of the soft actor-critic deep reinforcement learning algorithm that requires minimal hyperparameter tuning, while also requiring only a modest number of trials to learn multilayer neural network policies. This algorithm is based on the framework of maximum entropy reinforcement learning, and automatically trades off exploration against exploitation by dynamically and automatically tuning a temperature parameter that determines the stochasticity of the policy. We show that this method achieves state-of-the-art performance on four standard benchmark environments. We then demonstrate that it can be used to learn quadrupedal locomotion gaits on a real-world Minitaur robot, learning to walk from scratch directly in the real world in two hours of training.

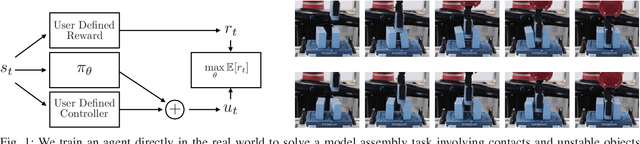

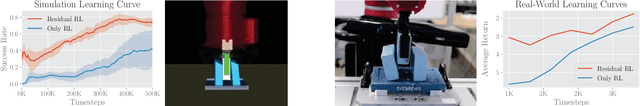

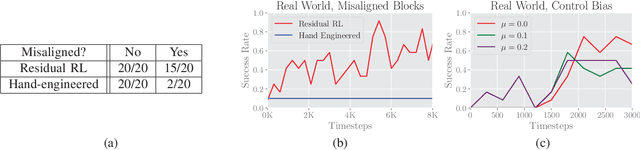

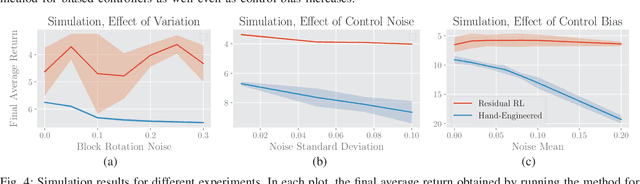

Residual Reinforcement Learning for Robot Control

Dec 18, 2018 · Tobias Johannink, Shikhar Bahl, Ashvin Nair, Jianlan Luo, Avinash Kumar, Matthias Loskyll, Juan Aparicio Ojea, Eugen Solowjow, Sergey Levine

Conventional feedback control methods can solve various types of robot control problems very efficiently by capturing the structure with explicit models, such as rigid body equations of motion. However, many control problems in modern manufacturing deal with contacts and friction, which are difficult to capture with first-order physical modeling. Hence, applying control design methodologies to these kinds of problems often results in brittle and inaccurate controllers, which have to be manually tuned for deployment. Reinforcement learning (RL) methods have been demonstrated to be capable of learning continuous robot controllers from interactions with the environment, even for problems that include friction and contacts. In this paper, we study how we can solve difficult control problems in the real world by decomposing them into a part that is solved efficiently by conventional feedback control methods, and the residual which is solved with RL. The final control policy is a superposition of both control signals. We demonstrate our approach by training an agent to successfully perform a real-world block assembly task involving contacts and unstable objects.
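The superposition described in this abstract can be illustrated with a minimal sketch. The proportional controller, the linear residual, and all names below (`feedback_controller`, `residual_policy`, `combined_action`) are assumptions made for illustration; they are not the authors' implementation, and in practice the residual would be a policy trained with RL.

```python
import numpy as np

def feedback_controller(state, target, gain=1.0):
    """Hand-designed proportional controller (stand-in for the conventional part)."""
    return gain * (target - state)

def residual_policy(state, weights):
    """Placeholder for the learned residual; here just a fixed linear map."""
    return weights @ state

def combined_action(state, target, weights, gain=1.0):
    """Final control signal: conventional controller output plus learned residual."""
    return feedback_controller(state, target, gain) + residual_policy(state, weights)

state = np.array([0.0, 1.0])
target = np.array([1.0, 1.0])
weights = np.zeros((2, 2))  # an untrained residual contributes nothing
action = combined_action(state, target, weights)
```

With a zero residual the combined action reduces to the conventional controller's output, which is one reason this decomposition is attractive: learning starts from a working baseline.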

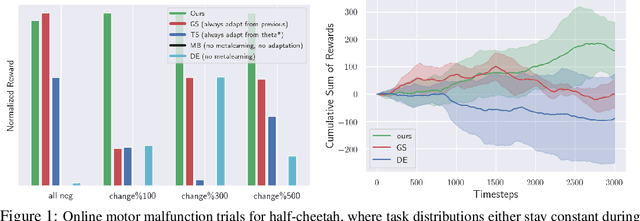

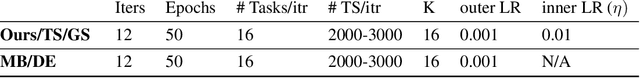

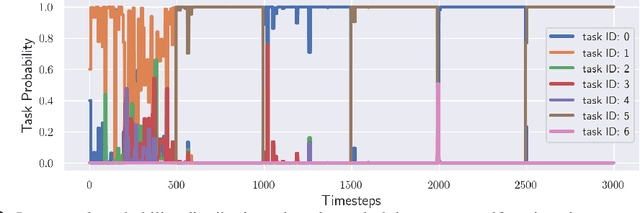

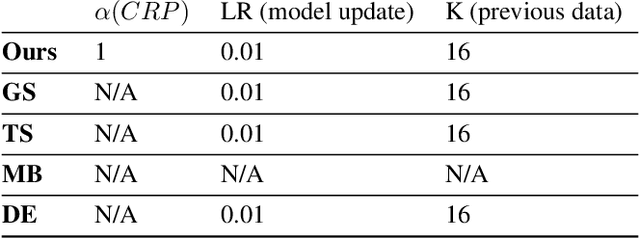

Deep Online Learning via Meta-Learning: Continual Adaptation for Model-Based RL

Dec 18, 2018 · Anusha Nagabandi, Chelsea Finn, Sergey Levine

Humans and animals can learn complex predictive models that allow them to accurately and reliably reason about real-world phenomena, and they can adapt such models extremely quickly in the face of unexpected changes. Deep neural network models allow us to represent very complex functions, but lack this capacity for rapid online adaptation. The goal in this paper is to develop a method for continual online learning from an incoming stream of data, using deep neural network models. We formulate an online learning procedure that uses stochastic gradient descent to update model parameters, and an expectation maximization algorithm with a Chinese restaurant process prior to develop and maintain a mixture of models to handle non-stationary task distributions. This allows for all models to be adapted as necessary, with new models instantiated for task changes and old models recalled when previously seen tasks are encountered again. Furthermore, we observe that meta-learning can be used to meta-train a model such that this direct online adaptation with SGD is effective, which is otherwise not the case for large function approximators. In this work, we apply our meta-learning for online learning (MOLe) approach to model-based reinforcement learning, where adapting the predictive model is critical for control; we demonstrate that MOLe outperforms alternative prior methods, and enables effective continuous adaptation in non-stationary task distributions such as varying terrains, motor failures, and unexpected disturbances.
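As a rough illustration of the Chinese restaurant process prior mentioned above, the sketch below computes the prior probability of assigning the next data point to each existing model or to a newly instantiated one. The function name `crp_prior` and the concentration parameter `alpha` are assumptions for this example; the full method combines such a prior with model likelihoods inside expectation maximization, which is not shown here.

```python
def crp_prior(counts, alpha):
    """Chinese restaurant process prior over mixture components.

    counts[i] is the number of past points assigned to existing model i.
    Returns one probability per existing model, followed by the
    probability of instantiating a new model.
    """
    total = sum(counts) + alpha
    probs = [c / total for c in counts]
    probs.append(alpha / total)  # mass reserved for a new model
    return probs

# Two existing models with 3 and 1 assigned points, concentration 1.0.
probs = crp_prior([3, 1], alpha=1.0)
```

Models that have explained more past data receive more prior mass, while `alpha` controls how readily a new model is created when the task changes.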

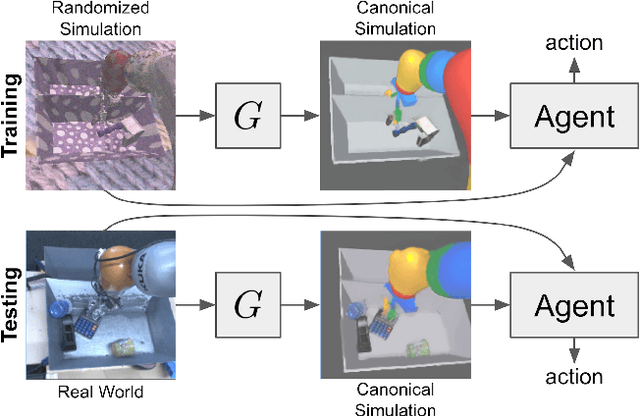

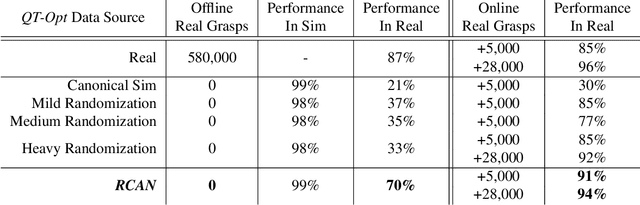

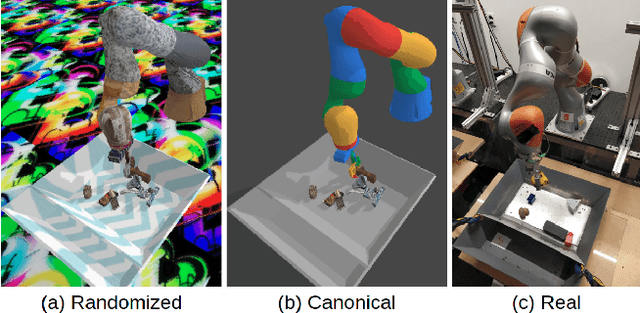

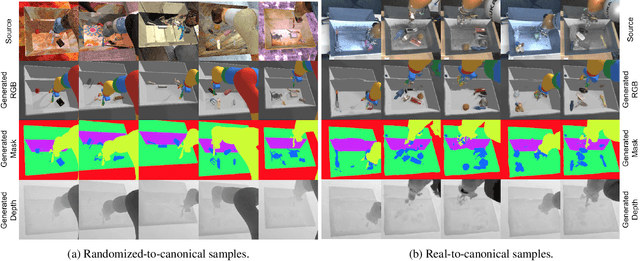

Sim-to-Real via Sim-to-Sim: Data-efficient Robotic Grasping via Randomized-to-Canonical Adaptation Networks

Dec 18, 2018 · Stephen James, Paul Wohlhart, Mrinal Kalakrishnan, Dmitry Kalashnikov, Alex Irpan, Julian Ibarz, Sergey Levine, Raia Hadsell, Konstantinos Bousmalis

Real world data, especially in the domain of robotics, is notoriously costly to collect. One way to circumvent this can be to leverage the power of simulation in order to produce large amounts of labelled data. However, training models on simulated images does not readily transfer to real-world ones. Using domain adaptation methods to cross this "reality gap" requires at best a large amount of unlabelled real-world data, whilst domain randomization alone can waste modeling power, rendering certain reinforcement learning (RL) methods unable to learn the task of interest. In this paper, we present Randomized-to-Canonical Adaptation Networks (RCANs), a novel approach to crossing the visual reality gap that uses no real-world data. Our method learns to translate randomized rendered images into their equivalent non-randomized, canonical versions. This in turn allows for real images to also be translated into canonical sim images. We demonstrate the effectiveness of this sim-to-real approach by training a vision-based closed-loop grasping reinforcement learning agent in simulation, and then transferring it to the real world to attain 70% zero-shot grasp success on unseen objects, a result that almost doubles the success of learning the same task directly on domain randomization alone. Additionally, by joint finetuning in the real-world with only 5,000 real-world grasps, our method achieves 91%, outperforming a state-of-the-art system trained with 580,000 real-world grasps, resulting in a reduction of real-world data by more than 99%.

Soft Actor-Critic Algorithms and Applications

Dec 13, 2018 · Tuomas Haarnoja, Aurick Zhou, Kristian Hartikainen, George Tucker, Sehoon Ha, Jie Tan, Vikash Kumar, Henry Zhu, Abhishek Gupta, Pieter Abbeel, Sergey Levine

Model-free deep reinforcement learning (RL) algorithms have been successfully applied to a range of challenging sequential decision making and control tasks. However, these methods typically suffer from two major challenges: high sample complexity and brittleness to hyperparameters. Both of these challenges limit the applicability of such methods to real-world domains. In this paper, we describe Soft Actor-Critic (SAC), our recently introduced off-policy actor-critic algorithm based on the maximum entropy RL framework. In this framework, the actor aims to simultaneously maximize expected return and entropy. That is, to succeed at the task while acting as randomly as possible. We extend SAC to incorporate a number of modifications that accelerate training and improve stability with respect to the hyperparameters, including a constrained formulation that automatically tunes the temperature hyperparameter. We systematically evaluate SAC on a range of benchmark tasks, as well as real-world challenging tasks such as locomotion for a quadrupedal robot and robotic manipulation with a dexterous hand. With these improvements, SAC achieves state-of-the-art performance, outperforming prior on-policy and off-policy methods in sample-efficiency and asymptotic performance. Furthermore, we demonstrate that, in contrast to other off-policy algorithms, our approach is very stable, achieving similar performance across different random seeds. These results suggest that SAC is a promising candidate for learning in real-world robotics tasks.
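One way to picture the automatic temperature tuning is as gradient descent on a loss of the form J(α) = E[-α (log π(a|s) + H_target)], where H_target is a desired minimum policy entropy. The sketch below is a simplified, assumption-laden illustration: the function name `temperature_step`, the plain gradient step, and the clipping at zero are choices made for this example, not the paper's exact procedure.

```python
import numpy as np

def temperature_step(alpha, log_probs, target_entropy, lr=0.01):
    """One gradient step on J(alpha) = mean(-alpha * (log_prob + target_entropy)).

    log_probs are log pi(a|s) for sampled actions, so -mean(log_probs)
    is a Monte Carlo estimate of the policy entropy.
    """
    grad = -np.mean(log_probs + target_entropy)  # dJ/dalpha
    return max(alpha - lr * grad, 0.0)           # keep the temperature non-negative

# Entropy estimate 0.5 is below the target 1.0, so the temperature rises.
alpha_up = temperature_step(0.2, np.array([-0.5, -0.5]), target_entropy=1.0)
# Entropy estimate 2.0 is above the target 1.0, so the temperature falls.
alpha_down = temperature_step(0.2, np.array([-2.0, -2.0]), target_entropy=1.0)
```

The temperature grows when the policy is less random than the target and shrinks when it is more random, which is the exploration and exploitation trade-off the abstract refers to.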

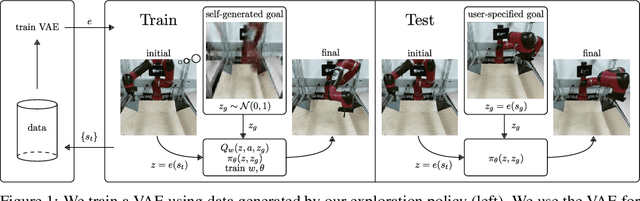

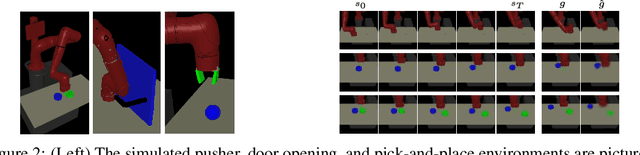

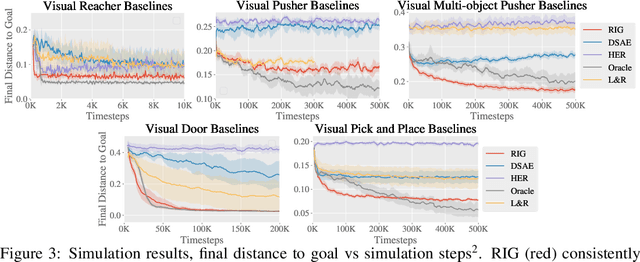

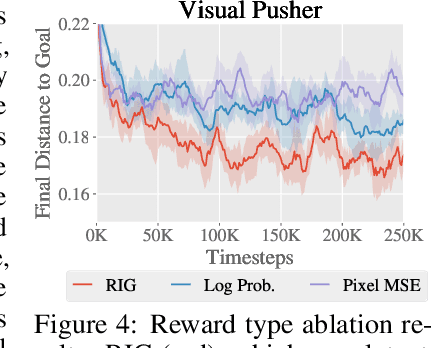

Visual Reinforcement Learning with Imagined Goals

Dec 04, 2018 · Ashvin Nair, Vitchyr Pong, Murtaza Dalal, Shikhar Bahl, Steven Lin, Sergey Levine

For an autonomous agent to fulfill a wide range of user-specified goals at test time, it must be able to learn broadly applicable and general-purpose skill repertoires. Furthermore, to provide the requisite level of generality, these skills must handle raw sensory input such as images. In this paper, we propose an algorithm that acquires such general-purpose skills by combining unsupervised representation learning and reinforcement learning of goal-conditioned policies. Since the particular goals that might be required at test-time are not known in advance, the agent performs a self-supervised "practice" phase where it imagines goals and attempts to achieve them. We learn a visual representation with three distinct purposes: sampling goals for self-supervised practice, providing a structured transformation of raw sensory inputs, and computing a reward signal for goal reaching. We also propose a retroactive goal relabeling scheme to further improve the sample-efficiency of our method. Our off-policy algorithm is efficient enough to learn policies that operate on raw image observations and goals for a real-world robotic system, and substantially outperforms prior techniques.
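The idea of retroactive goal relabeling with a reward computed in a learned representation can be sketched as follows. Everything here is a hypothetical illustration: the dictionary layout, the names `latent_reward` and `relabel`, and the choice of negative Euclidean distance between latent vectors as the reward are assumptions, and the latents are hard-coded rather than produced by a learned encoder.

```python
import numpy as np

def latent_reward(z_next, z_goal):
    """Assumed reward: negative Euclidean distance between latent vectors."""
    return -float(np.linalg.norm(z_next - z_goal))

def relabel(transition, new_goal):
    """Return a copy of a stored transition with a new goal and recomputed reward."""
    relabeled = dict(transition)
    relabeled["goal"] = new_goal
    relabeled["reward"] = latent_reward(transition["next_latent"], new_goal)
    return relabeled

old_goal = np.array([10.0, 10.0])
next_latent = np.array([3.0, 4.0])
transition = {
    "latent": np.array([0.0, 0.0]),
    "action": np.array([1.0]),
    "next_latent": next_latent,
    "goal": old_goal,
    "reward": latent_reward(next_latent, old_goal),
}

# Reuse the same experience as if the agent had been pursuing a different goal.
relabeled = relabel(transition, np.array([0.0, 0.0]))
```

Because the reward depends only on the stored latent and the chosen goal, a single transition can be reused for many goals without collecting new data, which is where the sample-efficiency gain comes from.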

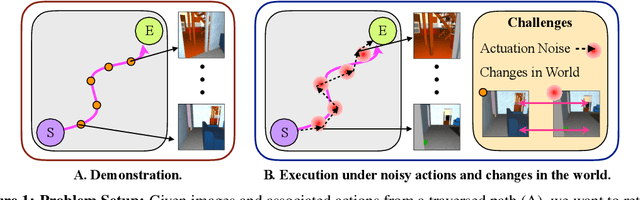

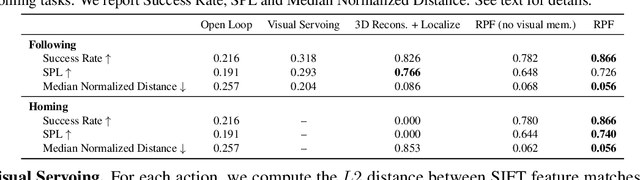

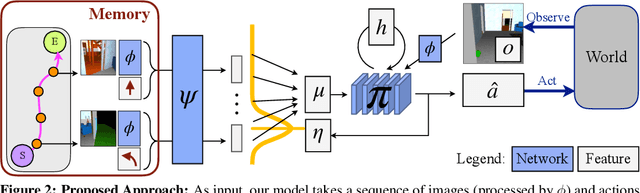

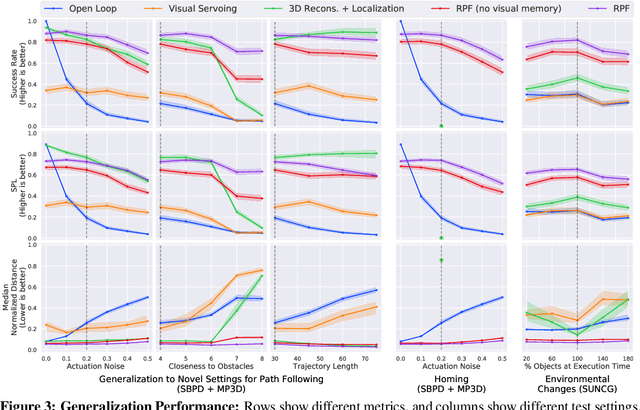

Visual Memory for Robust Path Following

Dec 03, 2018 · Ashish Kumar, Saurabh Gupta, David Fouhey, Sergey Levine, Jitendra Malik

Humans routinely retrace paths in a novel environment both forwards and backwards despite uncertainty in their motion. This paper presents an approach for doing so. Given a demonstration of a path, a first network generates a path abstraction. Equipped with this abstraction, a second network observes the world and decides how to act to retrace the path under noisy actuation and a changing environment. The two networks are optimized end-to-end at training time. We evaluate the method in two realistic simulators, performing path following and homing under actuation noise and environmental changes. Our experiments show that our approach outperforms classical approaches and other learning based baselines.
