Haibin Ling

Consistency and Diversity induced Human Motion Segmentation

Feb 10, 2022

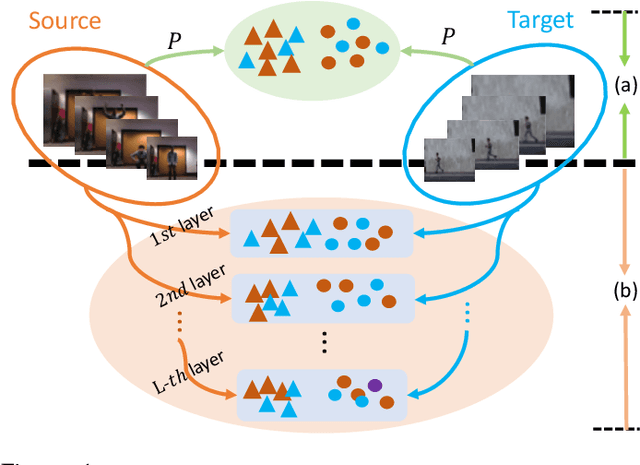

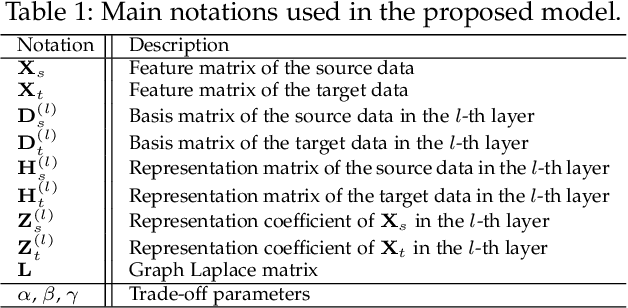

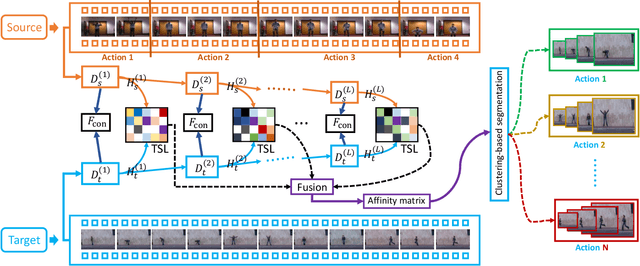

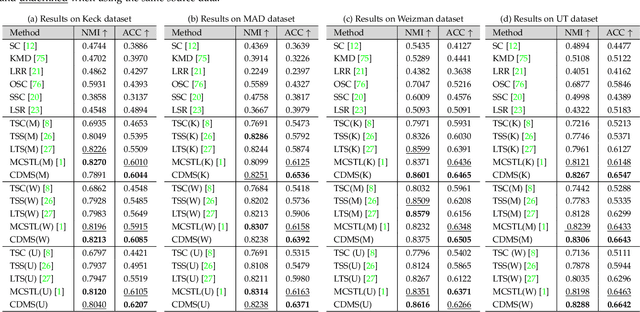

Abstract: Subspace clustering is a classical technique that has been widely used for human motion segmentation and other related tasks. However, existing segmentation methods often cluster data without guidance from prior knowledge, resulting in unsatisfactory segmentation results. To this end, we propose a novel Consistency and Diversity induced human Motion Segmentation (CDMS) algorithm. Specifically, our model factorizes the source and target data into distinct multi-layer feature spaces, in which transfer subspace learning is conducted on different layers to capture multi-level information. A multi-mutual consistency learning strategy is carried out to reduce the domain gap between the source and target data. In this way, the domain-specific knowledge and domain-invariant properties can be explored simultaneously. In addition, a novel constraint based on the Hilbert-Schmidt Independence Criterion (HSIC) is introduced to ensure the diversity of multi-level subspace representations, which enables the complementarity of multi-level representations to be explored to boost the transfer learning performance. Moreover, to preserve the temporal correlations, an enhanced graph regularizer is imposed on the learned representation coefficients and the multi-level representations of the source data. The proposed model can be efficiently solved using the Alternating Direction Method of Multipliers (ADMM) algorithm. Extensive experimental results on public human motion datasets demonstrate the effectiveness of our method against several state-of-the-art approaches.
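The HSIC-based diversity constraint mentioned in the abstract can be illustrated with the standard empirical estimator, HSIC(K, L) = tr(K H L H) / (n - 1)^2 with H = I - (1/n) 11^T. The sketch below is not the CDMS formulation itself: it assumes a linear (inner-product) kernel, treats each representation as a list of per-sample feature vectors, and uses the hypothetical names `linear_gram`, `center_gram`, and `hsic`. A value near zero indicates little linear dependence between two representations, which is the sense in which such a term can encourage diversity.

```python
def linear_gram(Z):
    """Gram matrix K[i][j] = <z_i, z_j> for a list of sample vectors (assumed linear kernel)."""
    return [[sum(a * b for a, b in zip(zi, zj)) for zj in Z] for zi in Z]


def center_gram(K):
    """Double-center a Gram matrix, i.e. compute H K H with H = I - (1/n) 11^T."""
    n = len(K)
    row_mean = [sum(row) / n for row in K]
    col_mean = [sum(K[i][j] for i in range(n)) / n for j in range(n)]
    total_mean = sum(row_mean) / n
    return [
        [K[i][j] - row_mean[i] - col_mean[j] + total_mean for j in range(n)]
        for i in range(n)
    ]


def hsic(Z1, Z2):
    """Empirical HSIC between two representations of the same n samples."""
    n = len(Z1)
    Kc = center_gram(linear_gram(Z1))
    Lc = center_gram(linear_gram(Z2))
    # tr(K H L H) equals tr((H K H)(H L H)) because H is idempotent.
    trace = sum(Kc[i][j] * Lc[j][i] for i in range(n) for j in range(n))
    return trace / (n - 1) ** 2


# Toy representations of four samples (illustrative values only).
x = [[-1.0], [1.0], [-1.0], [1.0]]
y = [[-1.0], [-1.0], [1.0], [1.0]]
dependence_xy = hsic(x, y)  # uncorrelated features give a value of zero here
dependence_xx = hsic(x, x)  # a representation is maximally dependent on itself
```

With a linear kernel the estimator only measures linear dependence; a Gaussian kernel would be needed to capture more general relationships.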

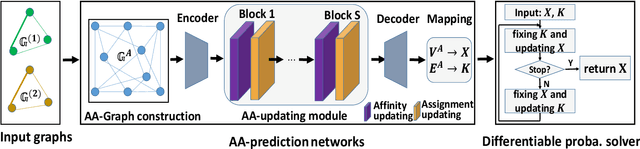

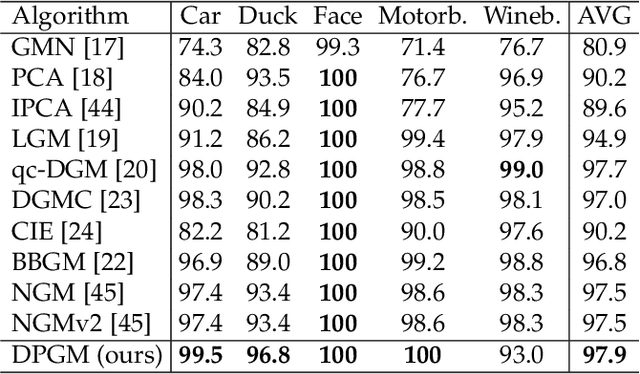

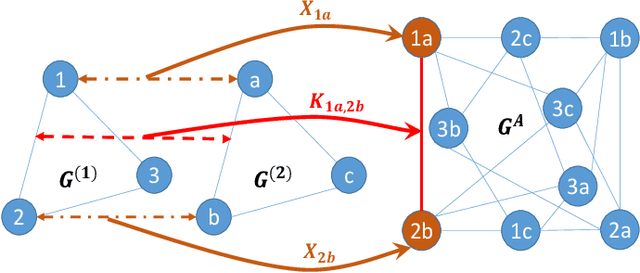

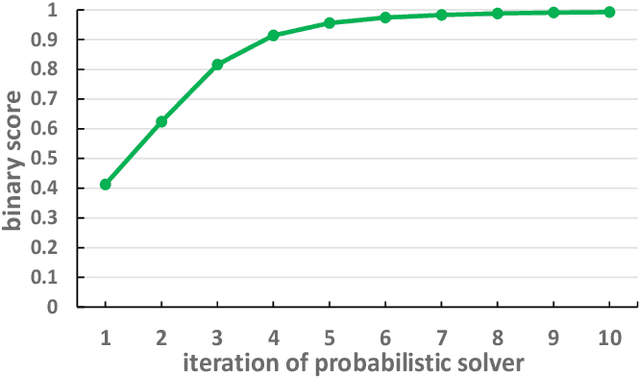

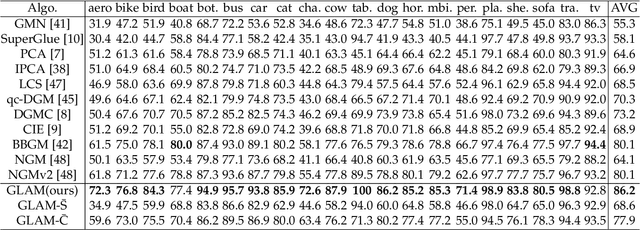

Deep Probabilistic Graph Matching

Jan 05, 2022

Abstract: Most previous learning-based graph matching algorithms solve the quadratic assignment problem (QAP) by dropping one or more of the matching constraints and adopting a relaxed assignment solver to obtain sub-optimal correspondences. Such relaxation may actually weaken the original graph matching problem, and in turn hurt the matching performance. In this paper we propose a deep learning-based graph matching framework that works for the original QAP without compromising on the matching constraints. In particular, we design an affinity-assignment prediction network to jointly learn the pairwise affinity and estimate the node assignments, and we then develop a differentiable solver inspired by the probabilistic perspective of the pairwise affinities. Aiming to obtain better matching results, the probabilistic solver refines the estimated assignments in an iterative manner to impose both discrete and one-to-one matching constraints. The proposed method is evaluated on three popular benchmarks (Pascal VOC, Willow Object and SPair-71k), and it outperforms all previous state-of-the-art methods on all benchmarks.

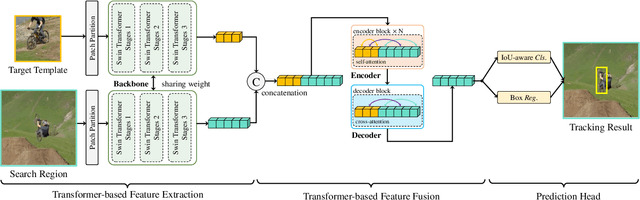

SwinTrack: A Simple and Strong Baseline for Transformer Tracking

Dec 08, 2021

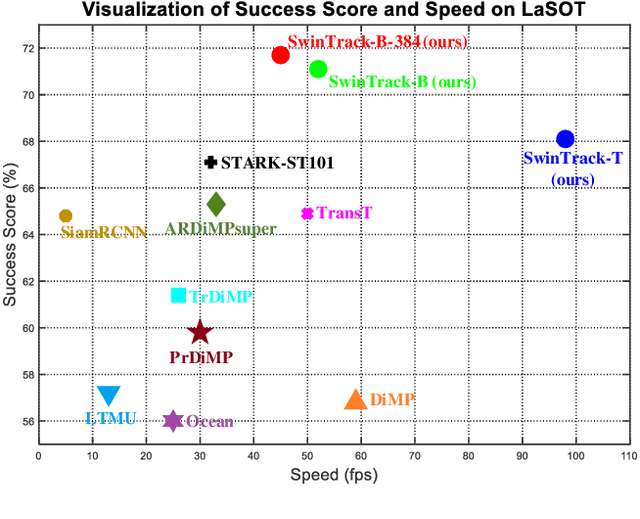

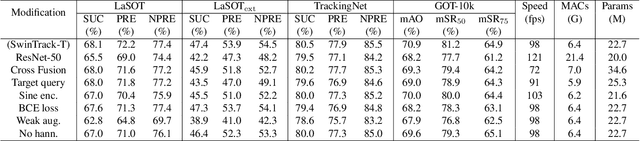

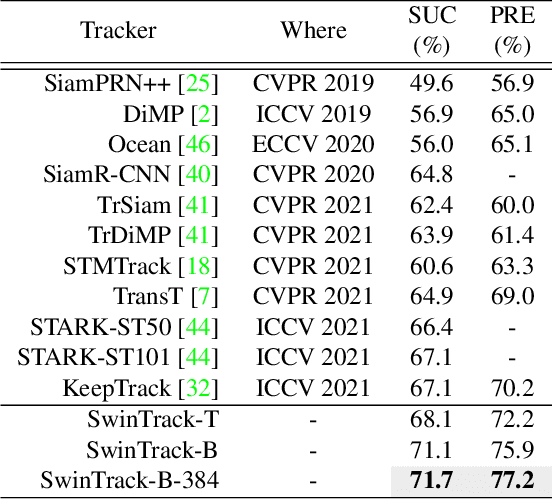

Abstract: Transformer has recently demonstrated clear potential in improving visual tracking algorithms. Nevertheless, existing transformer-based trackers mostly use Transformer to fuse and enhance the features generated by convolutional neural networks (CNNs). By contrast, in this paper, we propose a fully attention-based Transformer tracking algorithm, Swin-Transformer Tracker (SwinTrack). SwinTrack uses Transformer for both feature extraction and feature fusion, allowing full interactions between the target object and the search region for tracking. To further improve performance, we comprehensively investigate different strategies for feature fusion, position encoding, and training loss. All these efforts make SwinTrack a simple yet solid baseline. In our thorough experiments, SwinTrack sets a new record with 0.702 SUC on LaSOT, surpassing STARK by 3.1% while still running at 45 FPS. In addition, it achieves state-of-the-art performance with 0.476 SUC, 0.840 SUC and 0.694 AO on the challenging LaSOT$_{ext}$, TrackingNet, and GOT-10k datasets, respectively. Our implementation and trained models are available at https://github.com/LitingLin/SwinTrack.

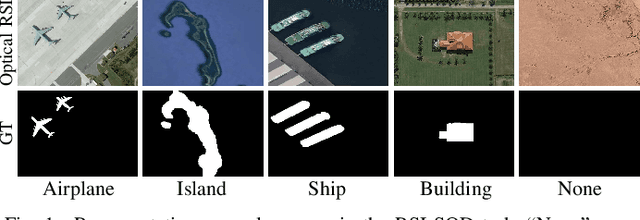

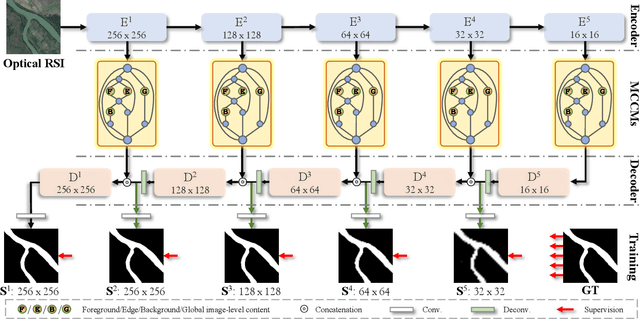

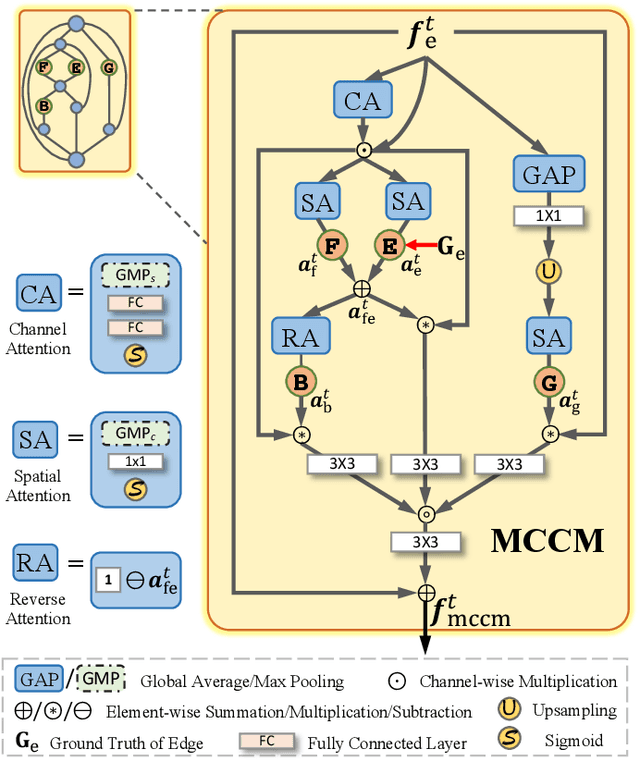

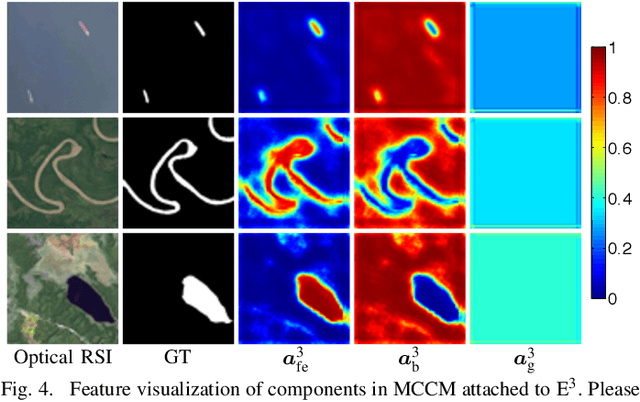

Multi-Content Complementation Network for Salient Object Detection in Optical Remote Sensing Images

Dec 02, 2021

Abstract: In the computer vision community, great progress has been achieved in salient object detection from natural scene images (NSI-SOD); by contrast, salient object detection in optical remote sensing images (RSI-SOD) remains a challenging emerging topic. The unique characteristics of optical RSIs, such as scales, illuminations and imaging orientations, bring significant differences between NSI-SOD and RSI-SOD. In this paper, we propose a novel Multi-Content Complementation Network (MCCNet) to explore the complementarity of multiple content for RSI-SOD. Specifically, MCCNet is based on the general encoder-decoder architecture, and contains a novel key component named Multi-Content Complementation Module (MCCM), which bridges the encoder and the decoder. In MCCM, we consider multiple types of features that are critical to RSI-SOD, including foreground features, edge features, background features, and global image-level features, and exploit the content complementarity between them to highlight salient regions over various scales in RSI features through the attention mechanism. In addition, we comprehensively introduce pixel-level, map-level and metric-aware losses in the training phase. Extensive experiments on two popular datasets demonstrate that the proposed MCCNet outperforms 23 state-of-the-art methods, including both NSI-SOD and RSI-SOD methods. The code and results of our method are available at https://github.com/MathLee/MCCNet.

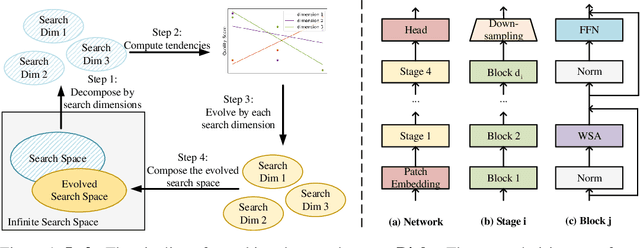

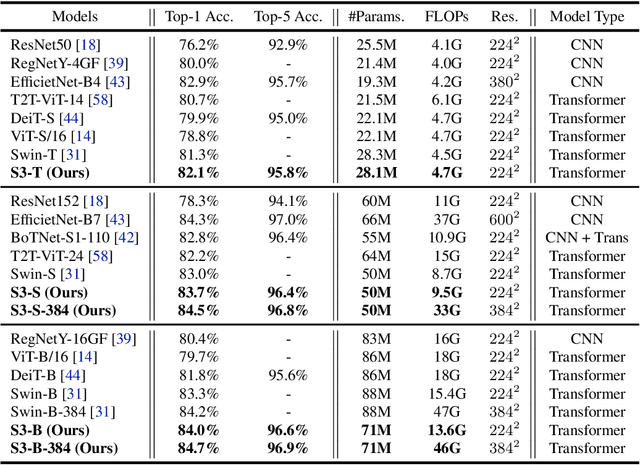

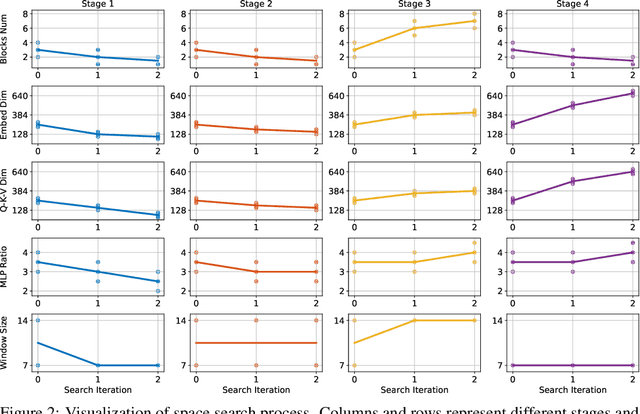

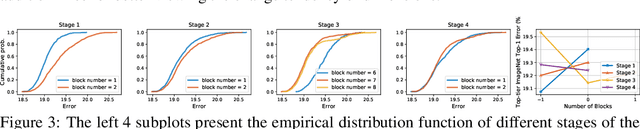

Searching the Search Space of Vision Transformer

Nov 29, 2021

Abstract: Vision Transformer has shown great visual representation power in a wide range of vision tasks such as recognition and detection, and has thus been attracting fast-growing efforts on manually designing more effective architectures. In this paper, we propose to use neural architecture search to automate this process, by searching not only the architecture but also the search space. The central idea is to gradually evolve different search dimensions guided by their E-T Error computed using a weight-sharing supernet. Moreover, we provide design guidelines for general vision transformers with extensive analysis according to the space searching process, which could promote the understanding of vision transformers. Remarkably, the searched models, named S3 (short for Searching the Search Space), from the searched space achieve superior performance to recently proposed models, such as Swin, DeiT and ViT, when evaluated on ImageNet. The effectiveness of S3 is also illustrated on object detection, semantic segmentation and visual question answering, demonstrating its generality to downstream vision and vision-language tasks. Code and models will be available at https://github.com/microsoft/Cream.

Osteoporosis Prescreening using Panoramic Radiographs through a Deep Convolutional Neural Network with Attention Mechanism

Oct 19, 2021

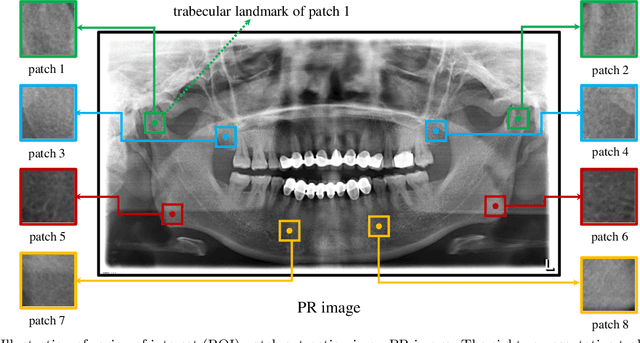

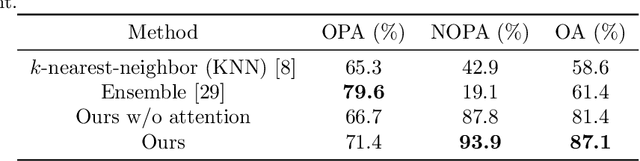

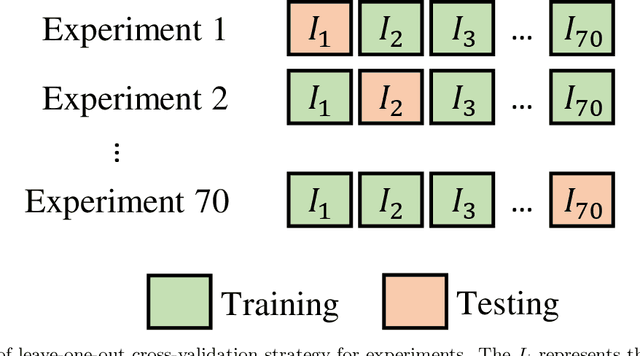

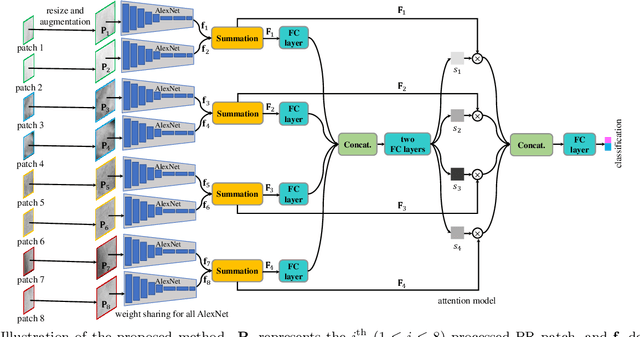

Abstract: Objectives. The aim of this study was to investigate whether a deep convolutional neural network (CNN) with an attention module can detect osteoporosis on panoramic radiographs. Study Design. A dataset of 70 panoramic radiographs (PRs) from 70 different subjects aged between 49 and 60 was used, including 49 subjects with osteoporosis and 21 normal subjects. We utilized the leave-one-out cross-validation approach to generate 70 training and test splits. Specifically, for each split, one image was used for testing and the remaining 69 images were used for training. A deep convolutional neural network (CNN) using the Siamese architecture was implemented through a fine-tuning process to classify a PR image using patches extracted from eight representative trabecular bone areas (Figure 1). In order to automatically learn the importance of different PR patches, an attention module was integrated into the deep CNN. Three metrics, including osteoporosis accuracy (OPA), non-osteoporosis accuracy (NOPA) and overall accuracy (OA), were utilized for performance evaluation. Results. The proposed baseline CNN approach achieved OPA, NOPA and OA scores of 0.667, 0.878 and 0.814, respectively. With the help of the attention module, the OPA, NOPA and OA scores were further improved to 0.714, 0.939 and 0.871, respectively. Conclusions. The proposed method obtained promising results using deep CNN with an attention module, which might be applied to osteoporosis prescreening.
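The leave-one-out protocol and the three accuracy metrics described above can be sketched as follows. This is an illustrative outline only: the function names are hypothetical, `majority_label` is a trivial stand-in for the CNN (which the abstract does not specify in code), the label convention (1 for osteoporosis, 0 for normal) is an assumption, and the toy labels are not the study data.

```python
def leave_one_out_splits(n_samples):
    """Yield (train_indices, test_index) pairs, one split per sample."""
    for test_idx in range(n_samples):
        train_idx = [i for i in range(n_samples) if i != test_idx]
        yield train_idx, test_idx


def accuracy_scores(labels, preds):
    """Return (OPA, NOPA, OA), assuming label 1 = osteoporosis and 0 = normal."""
    pos_hits = [p == y for y, p in zip(labels, preds) if y == 1]
    neg_hits = [p == y for y, p in zip(labels, preds) if y == 0]
    opa = sum(pos_hits) / len(pos_hits)    # accuracy on osteoporosis cases
    nopa = sum(neg_hits) / len(neg_hits)   # accuracy on normal cases
    oa = (sum(pos_hits) + sum(neg_hits)) / len(labels)  # overall accuracy
    return opa, nopa, oa


def majority_label(train_labels):
    """Hypothetical stand-in for the trained CNN: predict the most common training label."""
    return int(2 * sum(train_labels) >= len(train_labels))


labels = [1, 1, 1, 1, 0, 0]  # toy labels, not the study data
preds = []
for train_idx, test_idx in leave_one_out_splits(len(labels)):
    # Fit on all samples but one, then predict the held-out sample.
    preds.append(majority_label([labels[i] for i in train_idx]))

opa, nopa, oa = accuracy_scores(labels, preds)
```

Reporting OPA and NOPA separately matters when the classes are imbalanced, since a predictor that always outputs the majority class can still reach a high overall accuracy.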

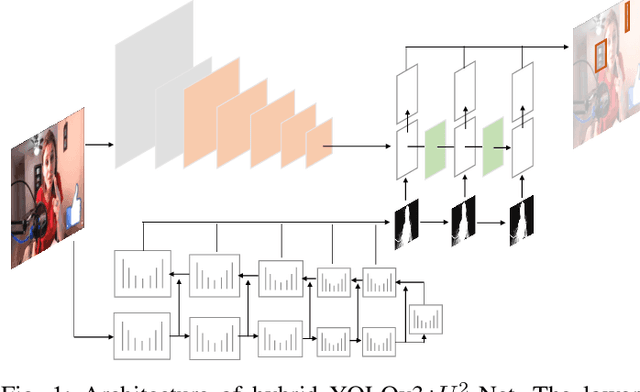

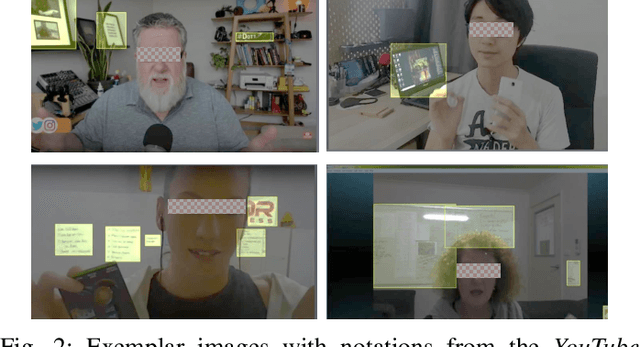

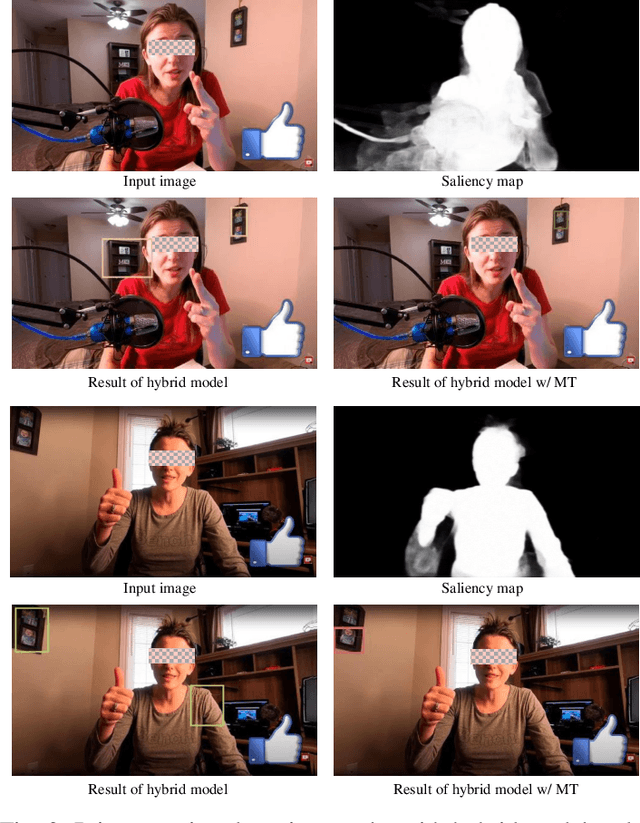

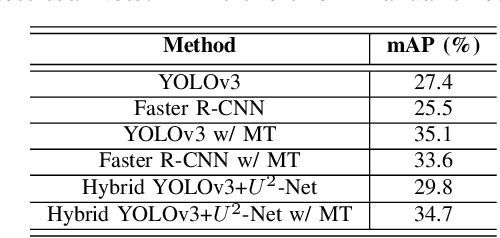

Deep Learning Approach Protecting Privacy in Camera-Based Critical Applications

Oct 04, 2021

Abstract: Many critical applications rely on cameras to capture video footage for analytical purposes. This has led to concerns about these cameras accidentally capturing more information than is necessary. In this paper, we propose a deep learning approach towards protecting privacy in camera-based systems. Instead of specifying that particular objects (e.g. faces) are privacy sensitive, our technique distinguishes between salient (visually prominent) and non-salient objects based on the intuition that the latter are unlikely to be needed by the application.

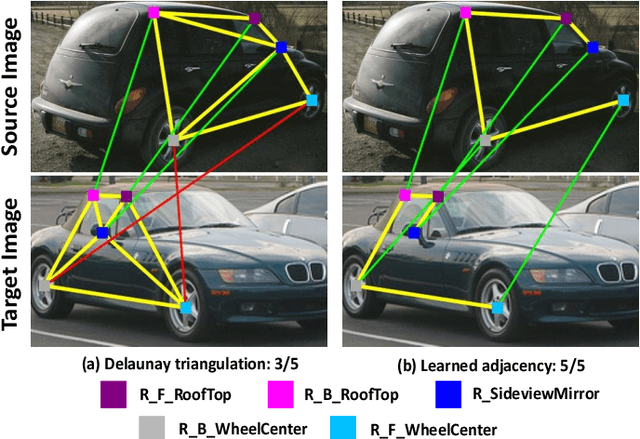

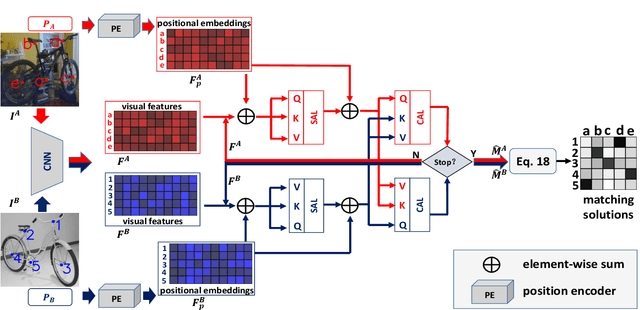

Joint Graph Learning and Matching for Semantic Feature Correspondence

Sep 01, 2021

Abstract: In recent years, powered by the learned discriminative representation via graph neural network (GNN) models, deep graph matching methods have made great progress in the task of matching semantic features. However, these methods usually rely on heuristically generated graph patterns, which may introduce unreliable relationships that hurt the matching performance. In this paper, we propose a joint graph learning and matching network, named GLAM, to explore reliable graph structures for boosting graph matching. GLAM adopts a pure attention-based framework for both graph learning and graph matching. Specifically, it employs two types of attention mechanisms, self-attention and cross-attention, for the task. The self-attention discovers the relationships between features and further updates feature representations over the learnt structures, while the cross-attention computes cross-graph correlations between the two feature sets to be matched for feature reconstruction. Moreover, the final matching solution is directly derived from the output of the cross-attention layer, without employing a specific matching decision module. The proposed method is evaluated on three popular visual matching benchmarks (Pascal VOC, Willow Object and SPair-71k), and it outperforms previous state-of-the-art graph matching methods by significant margins on all benchmarks. Furthermore, the graph patterns learnt by our model are validated to be able to remarkably enhance previous deep graph matching methods by replacing their handcrafted graph structures with the learnt ones.

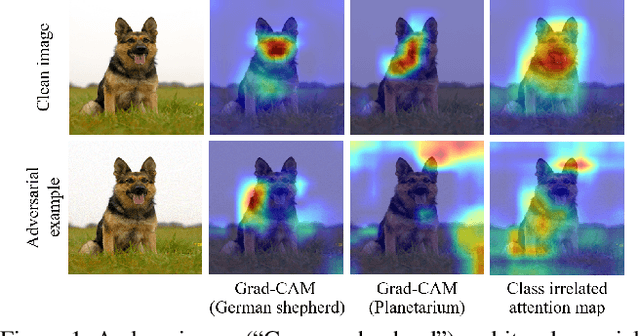

AGKD-BML: Defense Against Adversarial Attack by Attention Guided Knowledge Distillation and Bi-directional Metric Learning

Aug 13, 2021

Abstract: While deep neural networks have shown impressive performance in many tasks, they are vulnerable to carefully designed adversarial attacks. We propose a novel adversarial training-based model by Attention Guided Knowledge Distillation and Bi-directional Metric Learning (AGKD-BML). The attention knowledge is obtained from a weight-fixed model trained on a clean dataset, referred to as a teacher model, and transferred to a model that is under training on adversarial examples (AEs), referred to as a student model. In this way, the student model is able to focus on the correct region and to correct the intermediate features corrupted by AEs, eventually improving the model accuracy. Moreover, to efficiently regularize the representation in feature space, we propose bidirectional metric learning. Specifically, given a clean image, it is first attacked to its most confusing class to get the forward AE. A clean image in the most confusing class is then randomly picked and attacked back to the original class to get the backward AE. A triplet loss is then used to shorten the representation distance between the original image and its AE, while enlarging that between the forward and backward AEs. We conduct extensive adversarial robustness experiments on two widely used datasets with different attacks. Our proposed AGKD-BML model consistently outperforms the state-of-the-art approaches. The code of AGKD-BML will be available at: https://github.com/hongw579/AGKD-BML.
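For readers unfamiliar with triplet losses, a generic form is sketched below: the loss is zero once the anchor is closer to the positive than to the negative by at least a margin. The abstract does not state which embedding acts as the anchor, which distance is used, or the margin value, so the Euclidean distance, the default margin of 1.0, and the function name `triplet_loss` are all assumptions made for illustration, not the AGKD-BML implementation.

```python
import math


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Generic triplet loss: max(0, d(anchor, positive) - d(anchor, negative) + margin).

    Inputs are plain lists of floats (feature embeddings); the Euclidean
    distance and the margin value are assumptions for this sketch.
    """
    d_pos = math.dist(anchor, positive)  # distance the loss tries to shrink
    d_neg = math.dist(anchor, negative)  # distance the loss tries to enlarge
    return max(0.0, d_pos - d_neg + margin)


# Toy 2-D embeddings (illustrative values only).
violating = triplet_loss([0.0, 0.0], [3.0, 4.0], [0.0, 1.0])  # positive is farther than negative
satisfied = triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 4.0])  # negative is already far enough
```

In a bidirectional setting such as the one the abstract describes, a loss of this shape would be evaluated on embeddings of the clean image and the forward and backward AEs; how exactly those are assigned to the three roles is left to the paper.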

RINDNet: Edge Detection for Discontinuity in Reflectance, Illumination, Normal and Depth

Aug 02, 2021

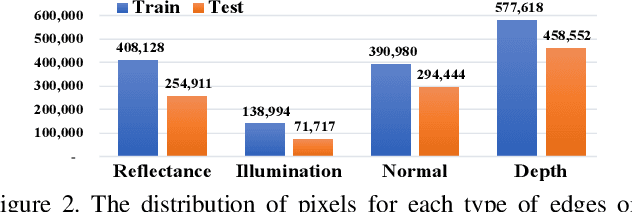

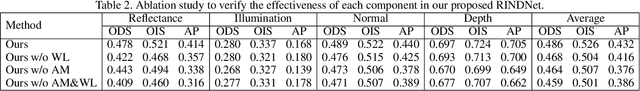

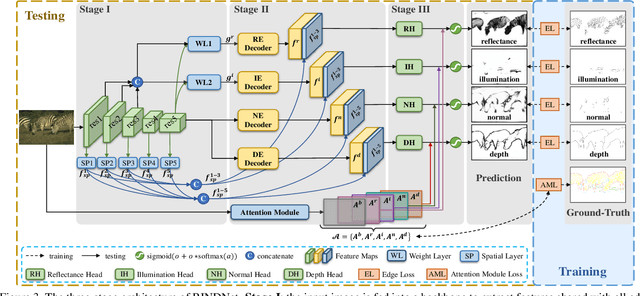

Abstract: As a fundamental building block in computer vision, edges can be categorised into four types according to the discontinuity in surface-Reflectance, Illumination, surface-Normal or Depth. While great progress has been made in detecting generic or individual types of edges, a comprehensive study of all four edge types together remains under-explored. In this paper, we propose a novel neural network solution, RINDNet, to jointly detect all four types of edges. Taking into consideration the distinct attributes of each type of edge and the relationship between them, RINDNet learns effective representations for each of them and works in three stages. In stage I, RINDNet uses a common backbone to extract features shared by all edges. Then in stage II it branches to prepare discriminative features for each edge type by the corresponding decoder. In stage III, an independent decision head for each type aggregates the features from previous stages to predict the initial results. Additionally, an attention module learns attention maps for all types to capture the underlying relations between them, and these maps are combined with initial results to generate the final edge detection results. For training and evaluation, we construct the first public benchmark, BSDS-RIND, with all four types of edges carefully annotated. In our experiments, RINDNet yields promising results in comparison with state-of-the-art methods. Additional analysis is presented in supplementary material.
