Yuchen Zhou

Neo

AIForge-Doc: A Benchmark for Detecting AI-Forged Tampering in Financial and Form Documents

Feb 24, 2026

Abstract:We present AIForge-Doc, the first dedicated benchmark targeting exclusively diffusion-model-based inpainting in financial and form documents with pixel-level annotation. Existing document forgery datasets rely on traditional digital editing tools (e.g., Adobe Photoshop, GIMP), creating a critical gap: state-of-the-art detectors are blind to the rapidly growing threat of AI-forged document fraud. AIForge-Doc addresses this gap by systematically forging numeric fields in real-world receipt and form images using two AI inpainting APIs -- Gemini 2.5 Flash Image and Ideogram v2 Edit -- yielding 4,061 forged images from four public document datasets (CORD, WildReceipt, SROIE, XFUND) across nine languages, annotated with pixel-precise tampered-region masks in DocTamper-compatible format. We benchmark three representative detectors -- TruFor, DocTamper, and a zero-shot GPT-4o judge -- and find that all existing methods degrade substantially: TruFor achieves AUC=0.751 (zero-shot, out-of-distribution) vs. AUC=0.96 on NIST16; DocTamper achieves AUC=0.563 vs. AUC=0.98 in-distribution, with pixel-level IoU=0.020; GPT-4o achieves only 0.509 -- essentially at chance -- confirming that AI-forged values are indistinguishable to automated detectors and VLMs. These results demonstrate that AIForge-Doc represents a qualitatively new and unsolved challenge for document forensics.
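To make the pixel-level IoU figure above concrete, here is a minimal sketch of how intersection-over-union between a predicted tamper mask and a ground-truth mask is commonly computed. The function name `pixel_iou` and the nested-list mask representation are illustrative assumptions, not the benchmark's actual evaluation code.

```python
def pixel_iou(pred_mask, gt_mask):
    """Intersection-over-union of two binary masks given as nested lists of 0/1.

    Hypothetical helper for illustration; not taken from AIForge-Doc.
    """
    if len(pred_mask) != len(gt_mask):
        raise ValueError("masks must have the same number of rows")
    intersection = 0
    union = 0
    for pred_row, gt_row in zip(pred_mask, gt_mask):
        if len(pred_row) != len(gt_row):
            raise ValueError("masks must have the same number of columns")
        for p, g in zip(pred_row, gt_row):
            p, g = bool(p), bool(g)
            intersection += int(p and g)  # pixel flagged by both masks
            union += int(p or g)          # pixel flagged by at least one mask
    # Convention chosen here: two empty masks count as a perfect match.
    return 1.0 if union == 0 else intersection / union


gt = [[0, 1, 1],
      [0, 1, 1]]
pred = [[1, 1, 0],
        [0, 1, 0]]
iou = pixel_iou(pred, gt)  # 2 shared pixels out of 5 flagged overall
```

Under this definition, an IoU of 0.020 means the predicted region shares only about 2% of the combined flagged area with the true tampered region.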

How well are open sourced AI-generated image detection models out-of-the-box: A comprehensive benchmark study

Feb 08, 2026

Abstract:As AI-generated images proliferate across digital platforms, reliable detection methods have become critical for combating misinformation and maintaining content authenticity. While numerous deepfake detection methods have been proposed, existing benchmarks predominantly evaluate fine-tuned models, leaving a critical gap in understanding out-of-the-box performance -- the most common deployment scenario for practitioners. We present the first comprehensive zero-shot evaluation of 16 state-of-the-art detection methods, comprising 23 pretrained detector variants (due to multiple released versions of certain detectors), across 12 diverse datasets, comprising 2.6 million image samples spanning 291 unique generators including modern diffusion models. Our systematic analysis reveals striking findings: (1) no universal winner exists, with detector rankings exhibiting substantial instability (Spearman $ρ$: 0.01 -- 0.87 across dataset pairs); (2) a 37 percentage-point performance gap separates the best detector (75.0% mean accuracy) from the worst (37.5%); (3) training data alignment critically impacts generalization, causing up to 20--60% performance variance within architecturally identical detector families; (4) modern commercial generators (Flux Dev, Firefly v4, Midjourney v7) defeat most detectors, which achieve only 18--30% average accuracy on them; and (5) we identify three systematic failure patterns affecting cross-dataset generalization. Statistical analysis confirms significant performance differences between detectors (Friedman test: $χ^2$=121.01, $p<10^{-16}$, Kendall $W$=0.524). Our findings challenge the "one-size-fits-all" detector paradigm and provide actionable deployment guidelines, demonstrating that practitioners must carefully select detectors based on their specific threat landscape rather than relying on published benchmark performance.
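The ranking-instability finding is reported as a Spearman rank correlation between dataset pairs. The sketch below shows one standard way to compute it (average ranks for ties, then Pearson correlation on the ranks). The function names and the accuracy values are made up for illustration and do not come from the study.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman correlation: Pearson correlation of the rank vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (var_x * var_y) ** 0.5


# Hypothetical accuracies of five detectors on two datasets.
acc_dataset_a = [0.61, 0.66, 0.70, 0.74, 0.79]
acc_dataset_b = [0.58, 0.55, 0.72, 0.69, 0.80]
rho = spearman_rho(acc_dataset_a, acc_dataset_b)
```

A value of ρ near 1 means two datasets rank the detectors almost identically, while a value near 0, the low end of the reported range, means the ranking on one dataset says little about the ranking on the other.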

Optimal Convergence Analysis of DDPM for General Distributions

Oct 31, 2025

Abstract:Score-based diffusion models have achieved remarkable empirical success in generating high-quality samples from target data distributions. Among them, the Denoising Diffusion Probabilistic Model (DDPM) is one of the most widely used samplers, generating samples via estimated score functions. Despite its empirical success, a tight theoretical understanding of DDPM -- especially its convergence properties -- remains limited. In this paper, we provide a refined convergence analysis of the DDPM sampler and establish near-optimal convergence rates under general distributional assumptions. Specifically, we introduce a relaxed smoothness condition parameterized by a constant $L$, which is small for many practical distributions (e.g., Gaussian mixture models). We prove that the DDPM sampler with accurate score estimates achieves a convergence rate of $$\widetilde{O}\left(\frac{d\min\{d,L^2\}}{T^2}\right)~\text{in Kullback-Leibler divergence},$$ where $d$ is the data dimension, $T$ is the number of iterations, and $\widetilde{O}$ hides polylogarithmic factors in $T$. This result substantially improves upon the best-known $d^2/T^2$ rate when $L < \sqrt{d}$. By establishing a matching lower bound, we show that our convergence analysis is tight for a wide array of target distributions. Moreover, it reveals that DDPM and DDIM share the same dependence on $d$, raising an interesting question of why DDIM often appears empirically faster.
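As a rough numerical illustration of the stated rates, the sketch below evaluates only the leading terms $d\min\{d, L^2\}/T^2$ and $d^2/T^2$, ignoring the constants and polylogarithmic factors that $\widetilde{O}$ hides. The function names and the sample values of $d$, $L$, and $T$ are assumptions chosen for the example.

```python
def refined_rate(d, L, T):
    """Leading term d * min(d, L^2) / T^2 (constants and polylog factors dropped)."""
    return d * min(d, L ** 2) / T ** 2


def prior_rate(d, T):
    """Leading term d^2 / T^2 of the previously best-known bound."""
    return d ** 2 / T ** 2


def improvement_factor(d, L):
    """Ratio prior_rate / refined_rate, which simplifies to d / min(d, L^2)."""
    return d / min(d, L ** 2)


# Illustrative values only: L = 4 is below sqrt(d) = 32, so the bound improves.
d, L, T = 1024, 4, 1000
gain = improvement_factor(d, L)           # 1024 / 16
no_gain = improvement_factor(d, L=64)     # L^2 >= d, so min(d, L^2) = d
```

The ratio shows why the improvement is confined to $L < \sqrt{d}$: once $L^2 \ge d$, the minimum equals $d$ and the two leading terms coincide.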

RePainter: Empowering E-commerce Object Removal via Spatial-matting Reinforcement Learning

Oct 09, 2025

Abstract:In web data, product images are central to boosting user engagement and advertising efficacy on e-commerce platforms, yet intrusive elements such as watermarks and promotional text remain major obstacles to delivering clear and appealing product visuals. Although diffusion-based inpainting methods have advanced, they still face challenges in commercial settings due to unreliable object removal and limited domain-specific adaptation. To tackle these challenges, we propose RePainter, a reinforcement learning framework that integrates spatial-matting trajectory refinement with Group Relative Policy Optimization (GRPO). Our approach modulates attention mechanisms to emphasize background context, generating higher-reward samples and reducing unwanted object insertion. We also introduce a composite reward mechanism that balances global, local, and semantic constraints, effectively reducing visual artifacts and reward hacking. Additionally, we contribute EcomPaint-100K, a high-quality, large-scale e-commerce inpainting dataset, and a standardized benchmark EcomPaint-Bench for fair evaluation. Extensive experiments demonstrate that RePainter significantly outperforms state-of-the-art methods, especially in challenging scenes with intricate compositions. We will release our code and weights upon acceptance.
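For readers unfamiliar with GRPO, the sketch below shows the group-relative advantage step as it is commonly described: each sampled output's reward is normalized by the mean and standard deviation of its own sampling group. This is a generic illustration under that assumption, not RePainter's implementation; the function name, the `eps` stabilizer, the use of the population standard deviation, and the reward values are all hypothetical.

```python
from statistics import fmean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize rewards within one sampling group: (r - mean) / (std + eps).

    Generic GRPO-style normalization; eps avoids division by zero when every
    sample in the group receives the same reward.
    """
    if not rewards:
        raise ValueError("rewards must be non-empty")
    mean = fmean(rewards)
    std = pstdev(rewards)  # population std; some implementations differ
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical composite rewards for three inpainted samples of one image.
advantages = group_relative_advantages([1.0, 2.0, 3.0])
```

Samples that score above their group's mean get positive advantages and are reinforced, and samples below it are discouraged, without needing a separately trained value model.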

ORIC: Benchmarking Object Recognition in Incongruous Context for Large Vision-Language Models

Sep 19, 2025

Abstract:Large Vision-Language Models (LVLMs) have made significant strides in image captioning, visual question answering, and robotics by integrating visual and textual information. However, they remain prone to errors in incongruous contexts, where objects appear unexpectedly or are absent when contextually expected. This leads to two key recognition failures: object misidentification and hallucination. To systematically examine this issue, we introduce the Object Recognition in Incongruous Context Benchmark (ORIC), a novel benchmark that evaluates LVLMs in scenarios where object-context relationships deviate from expectations. ORIC employs two key strategies: (1) LLM-guided sampling, which identifies objects that are present but contextually incongruous, and (2) CLIP-guided sampling, which detects plausible yet nonexistent objects that are likely to be hallucinated, thereby creating an incongruous context. Evaluating 18 LVLMs and two open-vocabulary detection models, our results reveal significant recognition gaps, underscoring the challenges posed by contextual incongruity. This work provides critical insights into LVLMs' limitations and encourages further research on context-aware object recognition.

Careful Queries, Credible Results: Teaching RAG Models Advanced Web Search Tools with Reinforcement Learning

Aug 11, 2025

Abstract:Retrieval-Augmented Generation (RAG) enhances large language models (LLMs) by integrating up-to-date external knowledge, yet real-world web environments present unique challenges. Two are central: pervasive misinformation in the web environment, which introduces unreliable or misleading content that can degrade retrieval accuracy, and the underutilization of web tools, which, if effectively employed, could enhance query precision and help mitigate this noise, ultimately improving the retrieval results in RAG systems. To address these issues, we propose WebFilter, a novel RAG framework that generates source-restricted queries and filters out unreliable content. This approach combines a retrieval filtering mechanism with a behavior- and outcome-driven reward strategy, optimizing both query formulation and retrieval outcomes. Extensive experiments demonstrate that WebFilter improves answer quality and retrieval precision, outperforming existing RAG methods on both in-domain and out-of-domain benchmarks.

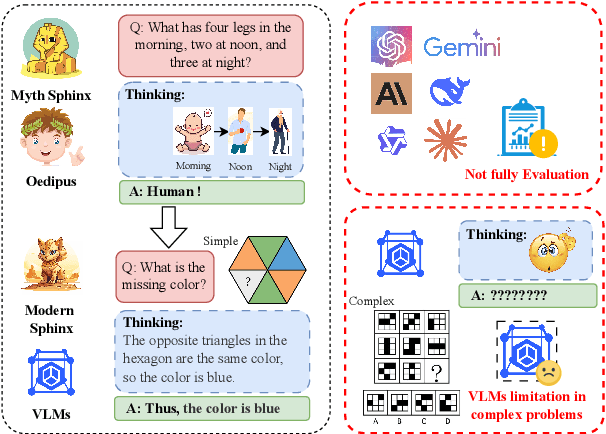

Oedipus and the Sphinx: Benchmarking and Improving Visual Language Models for Complex Graphic Reasoning

Aug 01, 2025

Abstract:Evaluating the performance of visual language models (VLMs) in graphic reasoning tasks has become an important research topic. However, VLMs still show obvious deficiencies in simulating human-level graphic reasoning capabilities, especially in complex graphic reasoning and abstract problem solving, which remain understudied because existing work focuses only on simple graphics. To evaluate the performance of VLMs in complex graphic reasoning, we propose ReasonBench, the first evaluation benchmark focused on structured graphic reasoning tasks, which includes 1,613 questions from real-world intelligence tests. ReasonBench covers reasoning dimensions related to location, attribute, quantity, and multi-element tasks, providing a comprehensive evaluation of the performance of VLMs in spatial, relational, and abstract reasoning capabilities. We benchmark 11 mainstream VLMs (including closed-source and open-source models) and reveal significant limitations of current models. Based on these findings, we propose a dual optimization strategy: Diagrammatic Reasoning Chain (DiaCoT) enhances the interpretability of reasoning by decomposing layers, and ReasonTune enhances the task adaptability of model reasoning through training; together they improve VLM performance by 33.5%. All experimental data and code are in the repository: https://huggingface.co/datasets/cistine/ReasonBench.

DA-Occ: Efficient 3D Voxel Occupancy Prediction via Directional 2D for Geometric Structure Preservation

Jul 31, 2025

Abstract:Efficient and high-accuracy 3D occupancy prediction is crucial for ensuring the performance of autonomous driving (AD) systems. However, many current methods focus on high accuracy at the expense of real-time processing needs. To address this challenge of balancing accuracy and inference speed, we propose a directional pure 2D approach. Our method involves slicing 3D voxel features to preserve complete vertical geometric information. This strategy compensates for the loss of height cues in Bird's-Eye View (BEV) representations, thereby maintaining the integrity of the 3D geometric structure. By employing a directional attention mechanism, we efficiently extract geometric features from different orientations, striking a balance between accuracy and computational efficiency. Experimental results highlight the significant advantages of our approach for autonomous driving. On Occ3D-nuScenes, the proposed method achieves an mIoU of 39.3% and an inference speed of 27.7 FPS, effectively balancing accuracy and efficiency. In simulations on edge devices, the inference speed reaches 14.8 FPS, further demonstrating the method's applicability for real-time deployment in resource-constrained environments.

Self-Supervised Contrastive Graph Clustering Network via Structural Information Fusion

Aug 08, 2024

Abstract:Graph clustering, a classical task in graph learning, involves partitioning the nodes of a graph into distinct clusters. This task has applications in various real-world scenarios, such as anomaly detection, social network analysis, and community discovery. Current graph clustering methods commonly rely on module pre-training to obtain a reliable prior distribution for the model, which is then used as the optimization objective. However, these methods often overlook deeper supervised signals, leading to sub-optimal reliability of the prior distribution. To address this issue, we propose a novel deep graph clustering method called CGCN. Our approach introduces contrastive signals and deep structural information into the pre-training process. Specifically, CGCN utilizes a contrastive learning mechanism to foster information interoperability among multiple modules and allows the model to adaptively adjust the degree of information aggregation for different order structures. Our CGCN method has been experimentally validated on multiple real-world graph datasets, showcasing its ability to boost the dependability of prior clustering distributions acquired through pre-training. As a result, we observed notable enhancements in the performance of the model.

* 6 pages, 3 figures

Point-SAM: Promptable 3D Segmentation Model for Point Clouds

Jun 25, 2024

Abstract:The development of 2D foundation models for image segmentation has been significantly advanced by the Segment Anything Model (SAM). However, achieving similar success in 3D models remains a challenge due to issues such as non-unified data formats, lightweight models, and the scarcity of labeled data with diverse masks. To this end, we propose a 3D promptable segmentation model (Point-SAM) focusing on point clouds. Our approach utilizes a transformer-based method, extending SAM to the 3D domain. We leverage part-level and object-level annotations and introduce a data engine to generate pseudo labels from SAM, thereby distilling 2D knowledge into our 3D model. Our model outperforms state-of-the-art models on several indoor and outdoor benchmarks and demonstrates a variety of applications, such as 3D annotation. Code and demo can be found at https://github.com/zyc00/Point-SAM.
