Yong Wang

Graph-based Approximate NN Search: A Revisit

Apr 02, 2022

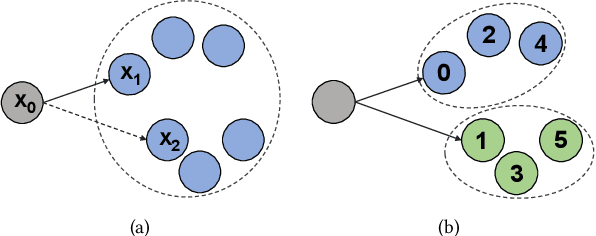

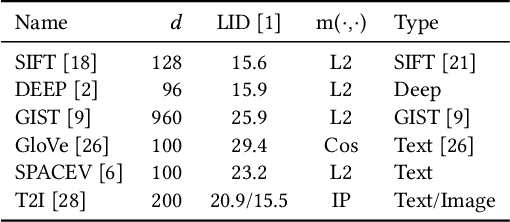

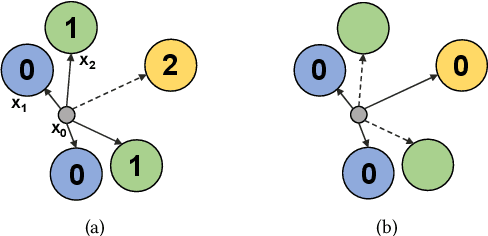

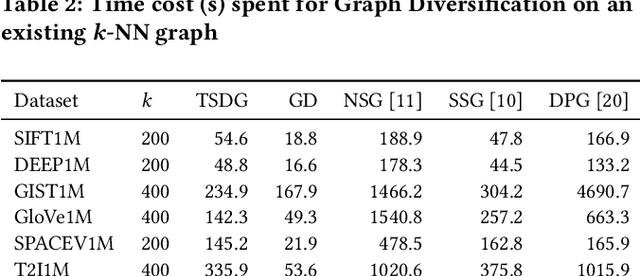

Abstract: Nearest neighbor search plays a fundamental role in many disciplines such as multimedia information retrieval, data mining, and machine learning. Graph-based search approaches have shown superior performance over other types of approaches in recent studies. In this paper, graph-based NN search is revisited. We optimize two key components of the approach, namely the search procedure and the graph that supports the search. For graph construction, a two-stage graph diversification scheme is proposed, which strikes a good trade-off between efficiency and reachability for the search procedure that builds upon it. Moreover, the proposed diversification scheme allows the search procedure to decide dynamically how many nodes should be visited in one node's neighborhood. In this way, the computing power of the devices is fully utilized when the search is carried out under different circumstances. Furthermore, two NN search procedures are designed for small and large batch queries on the GPU, respectively. The optimized NN search, when supported by the two-stage diversified graph, outperforms all the state-of-the-art approaches on both the CPU and the GPU across all the considered large-scale datasets.
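
As a rough illustration of the search procedure that graph-based NN methods build on, the following minimal sketch runs a generic best-first search over a fixed neighbor graph. It is not the paper's optimized procedure and does not implement the two-stage diversification; the names `graph_search`, `neighbors`, `entry`, and `ef` are hypothetical and chosen only for this example.

```python
import heapq
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length tuples."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def graph_search(points, neighbors, query, entry, ef=4):
    """Generic best-first search on a neighbor graph (illustrative only).

    points    : list of coordinate tuples
    neighbors : dict mapping node index -> list of neighbor indices
    entry     : index of the node where the search starts (assumed given)
    ef        : number of best results to keep (hypothetical parameter name)
    Returns (distance, index) pairs sorted by ascending distance.
    """
    d0 = euclidean(points[entry], query)
    visited = {entry}
    candidates = [(d0, entry)]   # min-heap of nodes still to expand
    results = [(-d0, entry)]     # max-heap (negated distances) of the best ef nodes
    while candidates:
        d, node = heapq.heappop(candidates)
        # Stop once the closest unexpanded node is worse than the worst kept result.
        if len(results) >= ef and d > -results[0][0]:
            break
        for nb in neighbors[node]:
            if nb in visited:
                continue
            visited.add(nb)
            dn = euclidean(points[nb], query)
            if len(results) < ef or dn < -results[0][0]:
                heapq.heappush(candidates, (dn, nb))
                heapq.heappush(results, (-dn, nb))
                if len(results) > ef:
                    heapq.heappop(results)  # drop the current worst result
    return sorted((-d, i) for d, i in results)


# Toy example: five points on a line, each linked to its immediate neighbors.
points = [(0.0,), (1.0,), (2.0,), (3.0,), (4.0,)]
neighbors = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
nearest = graph_search(points, neighbors, query=(3.2,), entry=0, ef=2)
print([index for _, index in nearest])
```

In this sketch every neighbor of an expanded node is visited; the abstract's point is that a diversified graph lets the search decide dynamically how many of those neighbors to visit.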

A Novel Anomaly Detection Method for Multimodal WSN Data Flow via a Dynamic Graph Neural Network

Feb 19, 2022

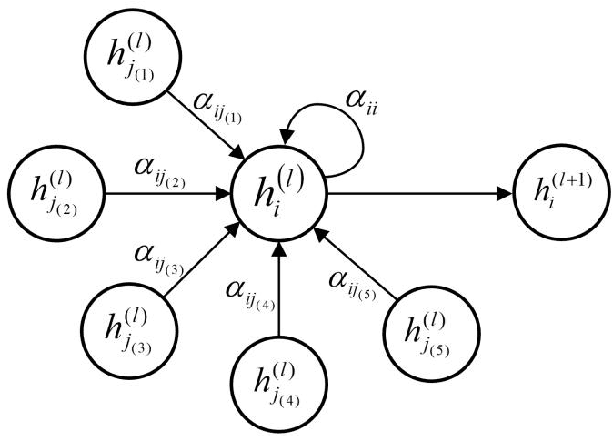

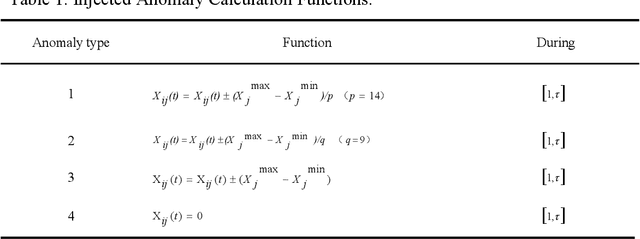

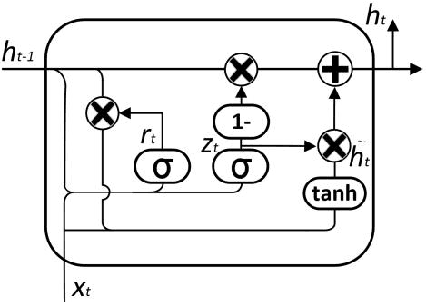

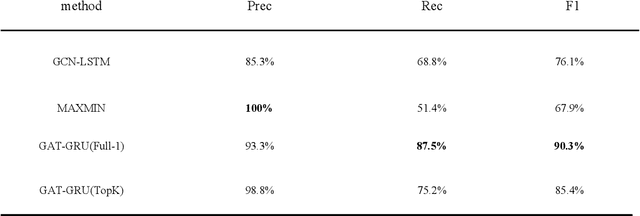

Abstract: Anomaly detection is widely used to distinguish system anomalies by analyzing the temporal and spatial features of wireless sensor network (WSN) data streams; it is one of the critical techniques that ensure the reliability of WSNs. Currently, graph neural networks (GNNs) have become popular state-of-the-art methods for conducting anomaly detection on WSN data streams. However, the existing anomaly detection methods based on GNNs do not consider the temporal and spatial features of WSN data streams simultaneously, such as multi-node, multi-modal and multi-time features, which seriously impairs their effectiveness. In this paper, a novel anomaly detection model is proposed for multimodal WSN data flows, where three GNNs are used to separately extract the temporal features of WSN data flows, the correlation features between different modes and the spatial features between sensor node positions. Specifically, the temporal features and modal correlation features extracted from each sensor node are first fused into one vector representation, which is further aggregated with the spatial features, i.e., the spatial position relationships of the nodes; finally, the current time-series data of WSN nodes are predicted, and abnormal states are identified according to the fusion features. The simulation results obtained on a public dataset show that the proposed approach significantly improves upon the existing methods in terms of robustness, and its F1 score reaches 0.90, which is 14.2% higher than that of the graph convolution network (GCN) with long short-term memory (LSTM).

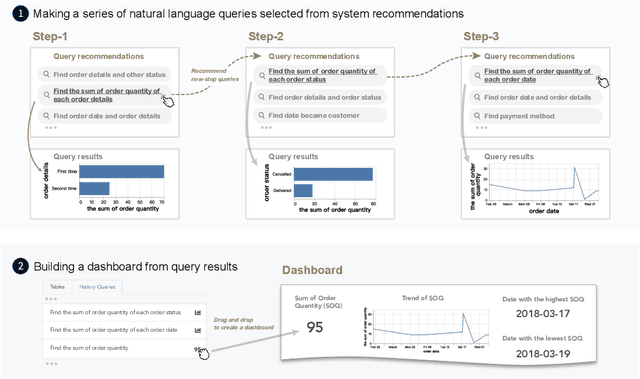

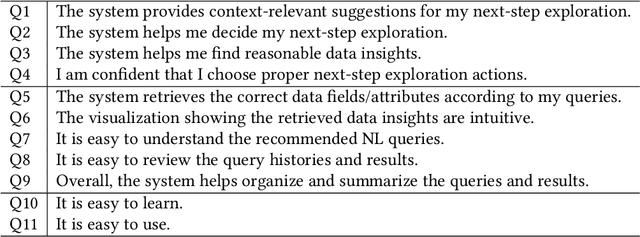

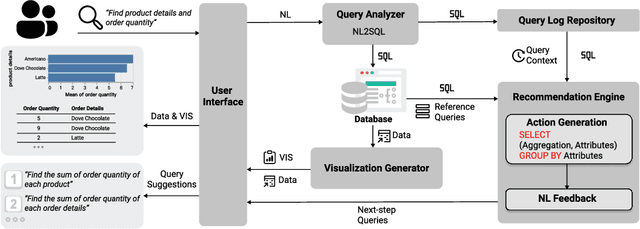

Interactive Data Analysis with Next-step Natural Language Query Recommendation

Jan 13, 2022

Abstract: Natural language interfaces (NLIs) provide users with a convenient way to interactively analyze data through natural language queries. Nevertheless, interactive data analysis is a demanding process, especially for novice data analysts. When exploring large and complex datasets from different domains, data analysts do not necessarily have sufficient knowledge about the data and application domains. This makes them unable to efficiently elicit a series of queries and extensively derive desirable data insights. In this paper, we develop an NLI with a step-wise query recommendation module to assist users in choosing appropriate next-step exploration actions. The system adopts a data-driven approach to generate step-wise semantically relevant and context-aware query suggestions for application domains of users' interest based on their query logs. Also, the system helps users organize query histories and results into a dashboard to communicate the discovered data insights. With a comparative user study, we show that our system can facilitate a more effective and systematic data analysis process than a baseline without the recommendation module.

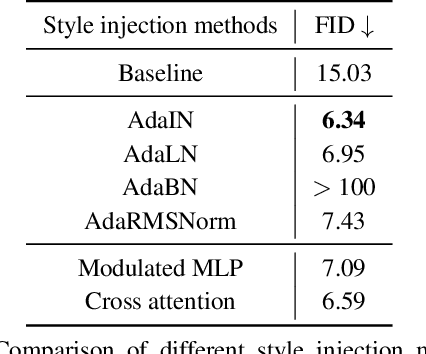

StyleSwin: Transformer-based GAN for High-resolution Image Generation

Dec 20, 2021

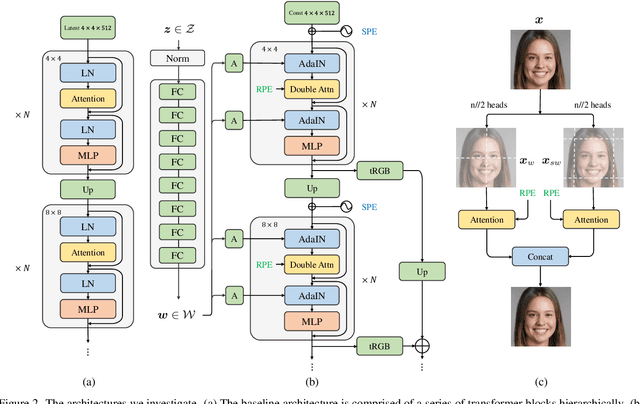

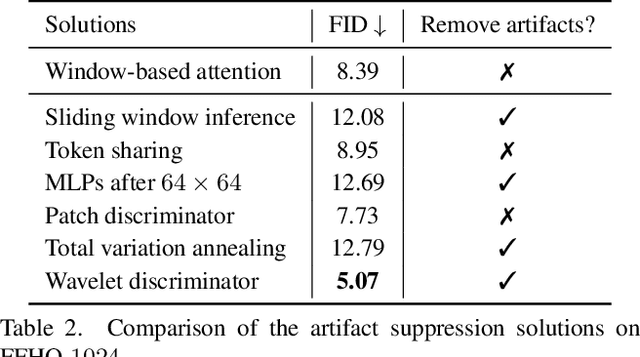

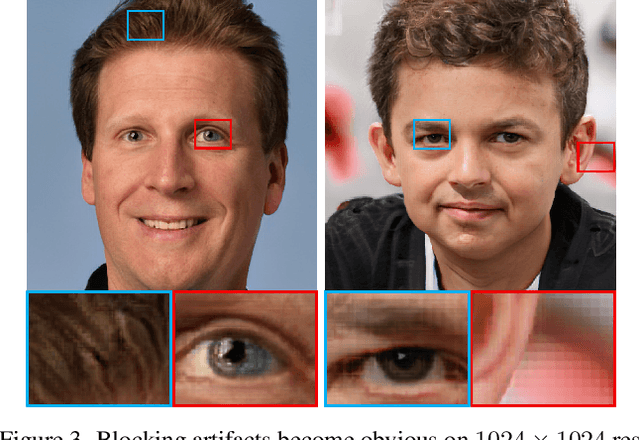

Abstract: Despite the tantalizing success in a broad range of vision tasks, transformers have not yet demonstrated ability on par with ConvNets in high-resolution image generative modeling. In this paper, we seek to explore using pure transformers to build a generative adversarial network for high-resolution image synthesis. To this end, we believe that local attention is crucial to strike the balance between computational efficiency and modeling capacity. Hence, the proposed generator adopts the Swin transformer in a style-based architecture. To achieve a larger receptive field, we propose double attention, which simultaneously leverages the context of the local and the shifted windows, leading to improved generation quality. Moreover, we show that offering the knowledge of the absolute position that has been lost in window-based transformers greatly benefits the generation quality. The proposed StyleSwin is scalable to high resolutions, with both the coarse geometry and fine structures benefiting from the strong expressivity of transformers. However, blocking artifacts occur during high-resolution synthesis because performing the local attention in a block-wise manner may break the spatial coherency. To solve this, we empirically investigate various solutions, among which we find that employing a wavelet discriminator to examine the spectral discrepancy effectively suppresses the artifacts. Extensive experiments show the superiority over prior transformer-based GANs, especially on high resolutions, e.g., 1024x1024. StyleSwin, without complex training strategies, excels over StyleGAN on CelebA-HQ 1024, and achieves on-par performance on FFHQ-1024, proving the promise of using transformers for high-resolution image generation. The code and models will be available at https://github.com/microsoft/StyleSwin.

Optimized Deployment of Unmanned Aerial Vehicles for Wildfire Detection and Monitoring

Nov 21, 2021

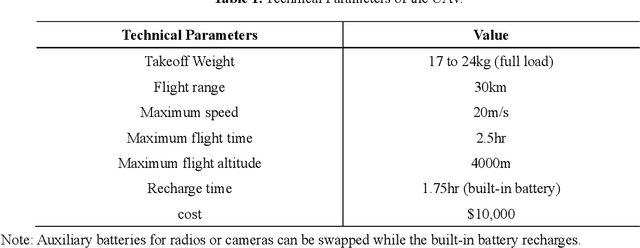

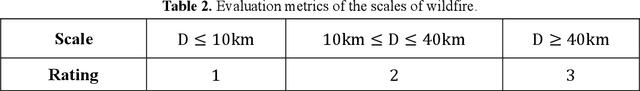

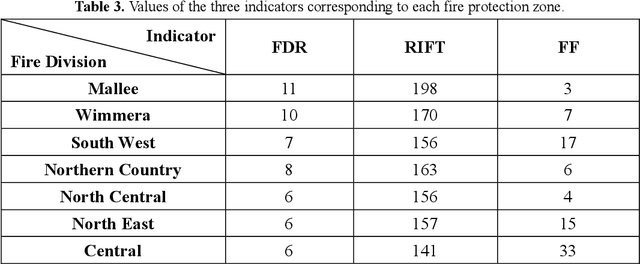

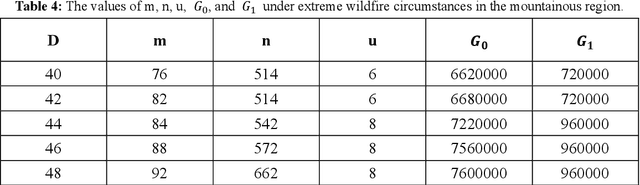

Abstract: In recent years, increased wildfires have caused irreversible damage to forest resources worldwide, threatening wildlife and human living conditions. The lack of accurate frontline information in real time can pose great risks to firefighters. Though a plethora of machine learning algorithms have been developed to detect wildfires using aerial images and videos captured by drones, there is a lack of methods addressing drone deployment. We propose a wildfire rapid response system that optimizes the number and relative positions of drones to achieve full coverage of the whole wildfire area. Trained on the data from historical wildfire events, our model evaluates the possibility of wildfires at different scales and allocates the resources accordingly. It adopts plane geometry to deploy drones while balancing capability and safety with inequality-constrained nonlinear programming. The method can flexibly adapt to different terrains and the dynamic extension of the wildfire area. Lastly, the operation cost under extreme wildfire circumstances can be assessed upon the completion of the deployment. We applied our model to the wildfire data collected from eastern Victoria, Australia, and demonstrated its great potential in the real world.

3rd Place Solution to Google Landmark Recognition Competition 2021

Oct 07, 2021

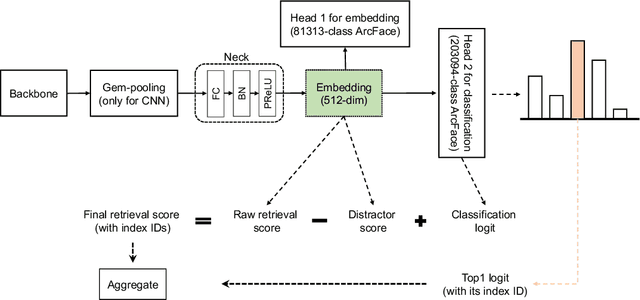

Abstract: In this paper, we present our solution to the Google Landmark Recognition 2021 Competition. First, embeddings of images are extracted via various architectures (i.e., CNN-, Transformer- and hybrid-based), which are optimized by ArcFace loss. Then we apply an efficient pipeline to re-rank predictions by adjusting the retrieval score with classification logits and non-landmark distractors. Finally, the ensembled model scores 0.489 on the private leaderboard, achieving 3rd place in the 2021 edition of the Google Landmark Recognition Competition.
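
For readers unfamiliar with ArcFace loss, the sketch below shows the additive angular margin it applies to classification logits: the target class uses `scale * cos(theta + margin)` while every other class uses `scale * cos(theta)`. It is a plain-Python illustration of the general ArcFace formulation, not the authors' training code; the function name and the `scale` and `margin` defaults are assumptions made for this example.

```python
import math


def arcface_logits(cosines, target, scale=30.0, margin=0.5):
    """Apply an ArcFace-style additive angular margin (illustrative only).

    cosines : cosine similarity between one embedding and each class center
    target  : index of the ground-truth class
    scale, margin : illustrative hyperparameter values, not taken from the paper
    """
    logits = []
    for k, c in enumerate(cosines):
        c = max(-1.0, min(1.0, c))  # clamp so acos stays within its domain
        if k == target:
            # Penalize the target class by adding the margin to its angle.
            c = math.cos(math.acos(c) + margin)
        logits.append(scale * c)
    return logits


# The target logit is reduced relative to plain scaled cosine similarity.
print(arcface_logits([0.8, 0.1, -0.3], target=0))
```

The resulting logits would then be fed to a standard softmax cross-entropy loss, which is omitted here.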

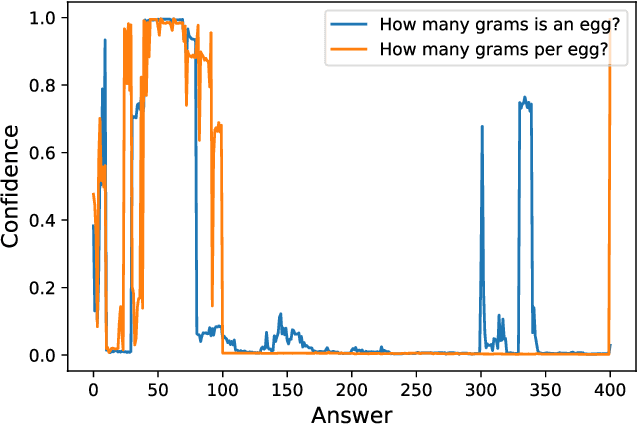

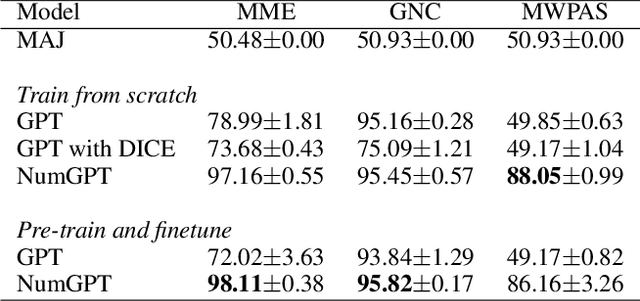

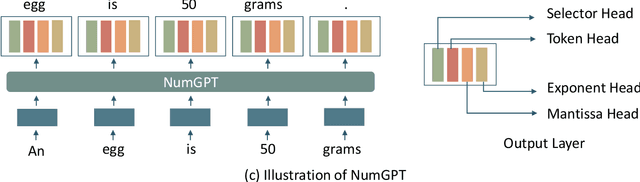

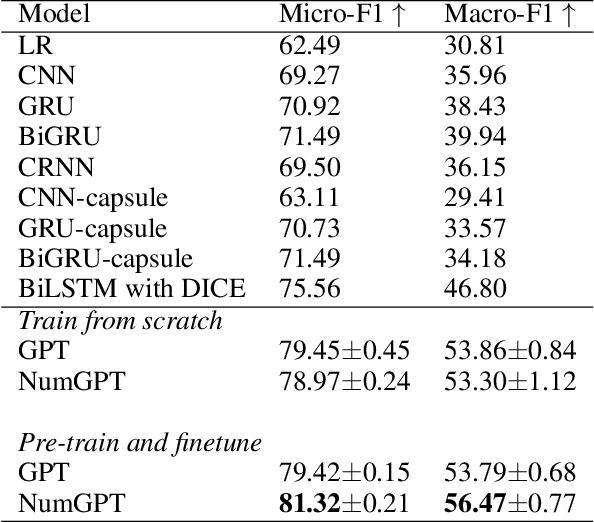

NumGPT: Improving Numeracy Ability of Generative Pre-trained Models

Sep 07, 2021

Abstract: Existing generative pre-trained language models (e.g., GPT) focus on modeling the language structure and semantics of general texts. However, those models do not consider the numerical properties of numbers and cannot perform robustly on numerical reasoning tasks (e.g., math word problems and measurement estimation). In this paper, we propose NumGPT, a generative pre-trained model that explicitly models the numerical properties of numbers in texts. Specifically, it leverages a prototype-based numeral embedding to encode the mantissa of the number and an individual embedding to encode the exponent of the number. A numeral-aware loss function is designed to integrate numerals into the pre-training objective of NumGPT. We conduct extensive experiments on four different datasets to evaluate the numeracy ability of NumGPT. The experimental results show that NumGPT outperforms baseline models (e.g., GPT and GPT with DICE) on a range of numerical reasoning tasks such as measurement estimation, number comparison, math word problems, and magnitude classification. Ablation studies are also conducted to evaluate the impact of pre-training and model hyperparameters on the performance.
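
To make the mantissa/exponent split concrete, the following minimal sketch decomposes a number into the two parts that would be embedded separately. It assumes base-10 scientific notation, which the abstract does not state, and it does not show the prototype-based embedding or the numeral-aware loss; `split_number` is a hypothetical helper name.

```python
import math


def split_number(value):
    """Split value into (mantissa, exponent) with value = mantissa * 10**exponent.

    Assumes base-10 scientific notation, so 1 <= |mantissa| < 10 for nonzero input.
    Zero is mapped to (0.0, 0) by convention in this sketch.
    """
    if value == 0:
        return 0.0, 0
    exponent = math.floor(math.log10(abs(value)))
    mantissa = value / 10 ** exponent
    return mantissa, exponent


# Each part could then be passed to its own embedding.
for number in (1234.5, 0.05, -70.0):
    print(number, split_number(number))
```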

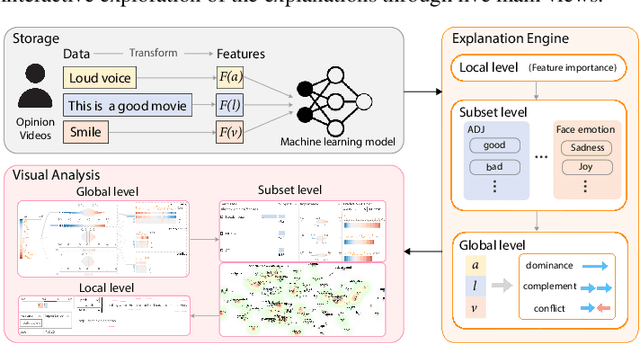

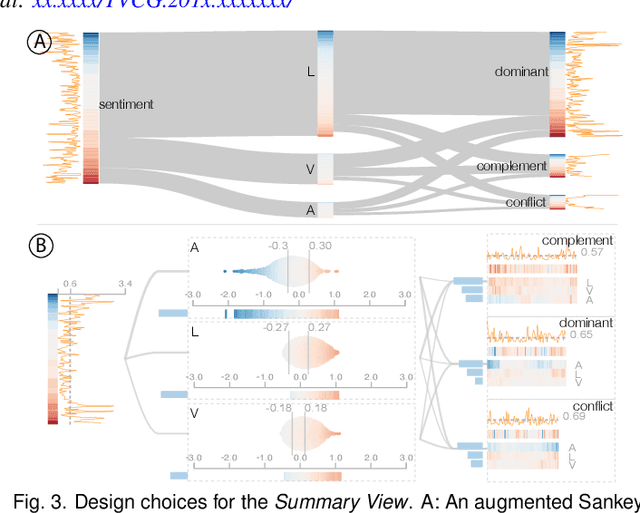

M2Lens: Visualizing and Explaining Multimodal Models for Sentiment Analysis

Aug 01, 2021

Abstract: Multimodal sentiment analysis aims to recognize people's attitudes from multiple communication channels such as verbal content (i.e., text), voice, and facial expressions. It has become a vibrant and important research topic in natural language processing. Much research focuses on modeling the complex intra- and inter-modal interactions between different communication channels. However, current multimodal models with strong performance are often deep-learning-based techniques and work like black boxes. It is not clear how models utilize multimodal information for sentiment predictions. Despite recent advances in techniques for enhancing the explainability of machine learning models, they often target unimodal scenarios (e.g., images, sentences), and little research has been done on explaining multimodal models. In this paper, we present an interactive visual analytics system, M2Lens, to visualize and explain multimodal models for sentiment analysis. M2Lens provides explanations on intra- and inter-modal interactions at the global, subset, and local levels. Specifically, it summarizes the influence of three typical interaction types (i.e., dominance, complement, and conflict) on the model predictions. Moreover, M2Lens identifies frequent and influential multimodal features and supports the multi-faceted exploration of model behaviors from language, acoustic, and visual modalities. Through two case studies and expert interviews, we demonstrate our system can help users gain deep insights into the multimodal models for sentiment analysis.

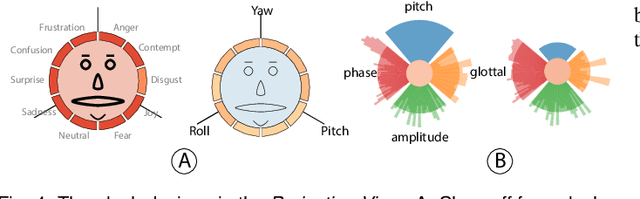

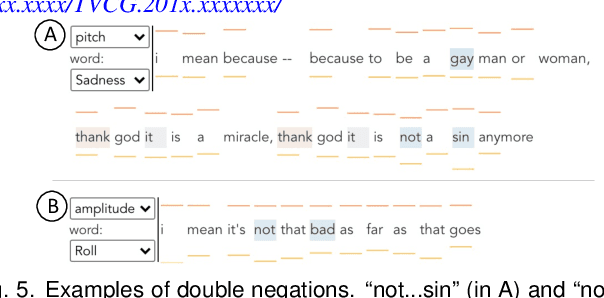

DeHumor: Visual Analytics for Decomposing Humor

Jul 18, 2021

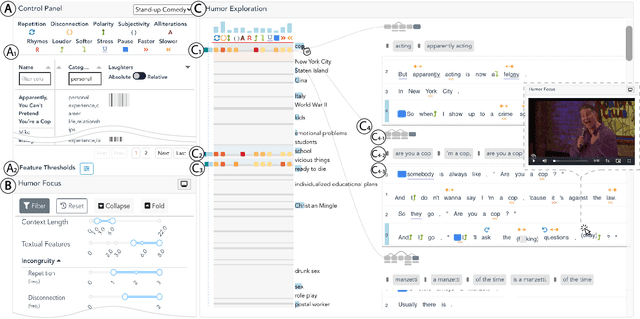

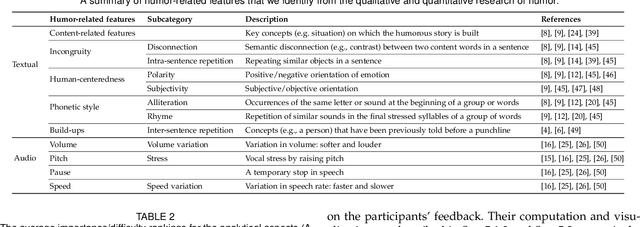

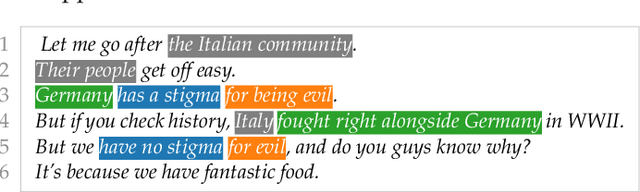

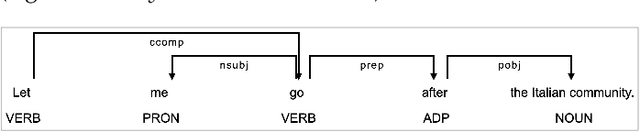

Abstract: Despite being a critical communication skill, grasping humor is challenging: a successful use of humor requires a mixture of both engaging content build-up and an appropriate vocal delivery (e.g., pause). Prior studies on computational humor emphasize the textual and audio features immediately next to the punchline, yet overlook the longer-term context setup. Moreover, the theories are usually too abstract for understanding each concrete humor snippet. To fill in the gap, we develop DeHumor, a visual analytical system for analyzing humorous behaviors in public speaking. To intuitively reveal the building blocks of each concrete example, DeHumor decomposes each humorous video into multimodal features and provides inline annotations of them on the video script. In particular, to better capture the build-ups, we introduce content repetition as a complement to features introduced in theories of computational humor and visualize them in a context linking graph. To help users locate the punchlines that have the desired features to learn, we summarize the content (with keywords) and humor feature statistics on an augmented time matrix. With case studies on stand-up comedy shows and TED talks, we show that DeHumor is able to highlight various building blocks of humor examples. In addition, expert interviews with communication coaches and humor researchers demonstrate the effectiveness of DeHumor for multimodal humor analysis of speech content and vocal delivery.

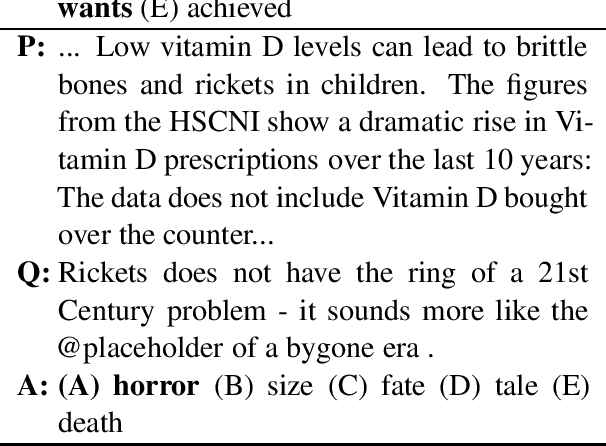

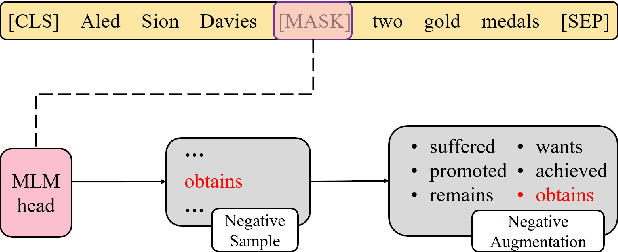

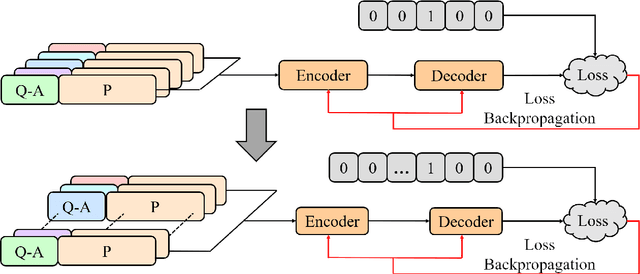

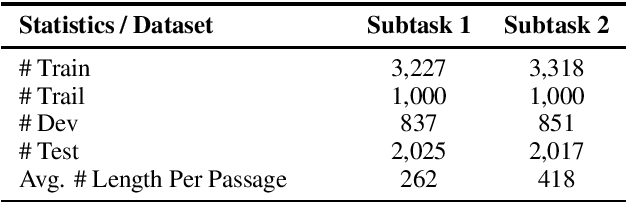

ZJUKLAB at SemEval-2021 Task 4: Negative Augmentation with Language Model for Reading Comprehension of Abstract Meaning

Feb 25, 2021

Abstract: This paper presents our systems for the three subtasks of SemEval Task 4: Reading Comprehension of Abstract Meaning (ReCAM). We explain the algorithms used to learn our models and the process of tuning the algorithms and selecting the best model. Inspired by the similarity between the ReCAM task and language pre-training, we propose a simple yet effective technique, namely, negative augmentation with language model. Evaluation results demonstrate the effectiveness of our proposed approach. Our models rank 4th on both official test sets of Subtask 1 and Subtask 2, with accuracies of 87.9% and 92.8%, respectively. We further conduct comprehensive model analysis and observe interesting error cases, which may promote future research.
