Yifu Li

CTIS-QA: Clinical Template-Informed Slide-level Question Answering for Pathology

Jan 05, 2026

Abstract:In this paper, we introduce a clinical diagnosis template-based pipeline to systematically collect and structure pathological information. In collaboration with pathologists and guided by the College of American Pathologists (CAP) Cancer Protocols, we design a Clinical Pathology Report Template (CPRT) that ensures comprehensive and standardized extraction of diagnostic elements from pathology reports. We validate the effectiveness of our pipeline on TCGA-BRCA. First, we extract pathological features from reports using CPRT. These features are then used to build CTIS-Align, a dataset of 80k slide-description pairs from 804 WSIs for vision-language alignment training, and CTIS-Bench, a rigorously curated VQA benchmark comprising 977 WSIs and 14,879 question-answer pairs. CTIS-Bench emphasizes clinically grounded, closed-ended questions (e.g., tumor grade, receptor status) that reflect real diagnostic workflows, minimize non-visual reasoning, and require genuine slide understanding. We further propose CTIS-QA, a Slide-level Question Answering model, featuring a dual-stream architecture that mimics pathologists' diagnostic approach. One stream captures global slide-level context via clustering-based feature aggregation, while the other focuses on salient local regions through an attention-guided patch perception module. Extensive experiments on WSI-VQA, CTIS-Bench, and slide-level diagnostic tasks show that CTIS-QA consistently outperforms existing state-of-the-art models across multiple metrics. Code and data are available at https://github.com/HLSvois/CTIS-QA.
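
The dual-stream idea in the abstract above can be illustrated with a toy sketch. This is not the authors' implementation: plain k-means stands in for the clustering-based aggregation, a scaled dot product against a single query vector stands in for the attention-guided patch perception module, and the names `global_stream`, `local_stream`, `patches`, and `query` are hypothetical. The patch features are random numbers, used only to show the shapes involved.

```python
import numpy as np

def global_stream(feats, k, iters=10, seed=0):
    """Summarize all patches as k centroids (plain k-means, an assumed stand-in)."""
    rng = np.random.default_rng(seed)
    centroids = feats[rng.choice(len(feats), size=k, replace=False)]
    for _ in range(iters):
        # Assign each patch to its nearest centroid (squared Euclidean distance).
        dists = ((feats[:, None, :] - centroids[None, :, :]) ** 2).sum(-1)
        labels = dists.argmin(1)
        for j in range(k):
            members = feats[labels == j]
            if len(members):  # keep the old centroid if a cluster is empty
                centroids[j] = members.mean(0)
    return centroids

def local_stream(feats, query, top_k):
    """Keep the top_k patches with the highest scaled dot-product score."""
    scores = feats @ query / np.sqrt(feats.shape[1])
    idx = np.argsort(scores)[::-1][:top_k]
    return feats[idx], idx

# Synthetic stand-in for patch embeddings of one slide (500 patches, 32 dims).
rng = np.random.default_rng(0)
patches = rng.normal(size=(500, 32))
query = rng.normal(size=32)

global_tokens = global_stream(patches, k=8)
local_tokens, picked = local_stream(patches, query, top_k=16)

# Concatenate both streams into one token sequence for a downstream model.
slide_tokens = np.concatenate([global_tokens, local_tokens], axis=0)
print(slide_tokens.shape)  # (24, 32)
```

In this sketch the global tokens compress the whole slide into a few summary vectors, while the local tokens preserve individual high-scoring patches unchanged.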

Seedance 1.5 pro: A Native Audio-Visual Joint Generation Foundation Model

Dec 23, 2025

Abstract:Recent strides in video generation have paved the way for unified audio-visual generation. In this work, we present Seedance 1.5 pro, a foundational model engineered specifically for native, joint audio-video generation. Leveraging a dual-branch Diffusion Transformer architecture, the model integrates a cross-modal joint module with a specialized multi-stage data pipeline, achieving exceptional audio-visual synchronization and superior generation quality. To ensure practical utility, we implement meticulous post-training optimizations, including Supervised Fine-Tuning (SFT) on high-quality datasets and Reinforcement Learning from Human Feedback (RLHF) with multi-dimensional reward models. Furthermore, we introduce an acceleration framework that boosts inference speed by over 10X. Seedance 1.5 pro distinguishes itself through precise multilingual and dialect lip-syncing, dynamic cinematic camera control, and enhanced narrative coherence, positioning it as a robust engine for professional-grade content creation. Seedance 1.5 pro is now accessible on Volcano Engine at https://console.volcengine.com/ark/region:ark+cn-beijing/experience/vision?type=GenVideo.

A 24-GHz CMOS Transformer-Based Three-Tline Series Doherty Power Amplifier Achieving 39% PAE

Nov 15, 2025

Abstract:This paper presents a transformer-based three-transmission-line (Tline) series Doherty power amplifier (PA) implemented in 65-nm CMOS, targeting broadband K/Ka-band applications. By integrating an impedance-scaling network into the output matching structure, the design enables effective load modulation and reduced impedance transformation ratio (ITR) at power back-off when employing stacked cascode transistors. The PA demonstrates a -3-dB small-signal gain bandwidth from 22 to 32.5 GHz, a saturated output power (Psat) of 21.6 dBm, and a peak power-added efficiency (PAE) of 39%. At 6-dB back-off, the PAE remains above 24%, validating its suitability for high-efficiency mm-wave phased-array transmitters in next-generation wireless systems.
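
To put the reported power levels in linear units, the short sketch below converts the saturated output power and the 6-dB back-off level from dBm to milliwatts, and states the standard PAE definition as a function. Only the 21.6 dBm and 6 dB figures come from the abstract; the helper names `dbm_to_mw` and `pae` are illustrative, and no input or DC power values are assumed because the abstract does not report them.

```python
def dbm_to_mw(p_dbm):
    """Convert a power level in dBm to milliwatts."""
    return 10 ** (p_dbm / 10)

def pae(pout_mw, pin_mw, pdc_mw):
    """Power-added efficiency: (RF output - RF input) / DC supply power."""
    return (pout_mw - pin_mw) / pdc_mw

psat_dbm = 21.6               # saturated output power from the abstract
backoff_dbm = psat_dbm - 6.0  # output level at 6-dB back-off

psat_mw = dbm_to_mw(psat_dbm)
backoff_mw = dbm_to_mw(backoff_dbm)

print(round(psat_mw, 1))     # 144.5
print(round(backoff_mw, 1))  # 36.3
```

A 6-dB back-off therefore corresponds to roughly one quarter of the saturated output power, which is the operating region where Doherty load modulation is meant to hold efficiency up.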

Seedance 1.0: Exploring the Boundaries of Video Generation Models

Jun 10, 2025

Abstract:Notable breakthroughs in diffusion modeling have propelled rapid improvements in video generation, yet current foundational models still face critical challenges in simultaneously balancing prompt following, motion plausibility, and visual quality. In this report, we introduce Seedance 1.0, a high-performance and inference-efficient video foundation generation model that integrates several core technical improvements: (i) multi-source data curation augmented with precise and meaningful video captioning, enabling comprehensive learning across diverse scenarios; (ii) an efficient architecture design with a proposed training paradigm, which natively supports multi-shot generation and joint learning of both text-to-video and image-to-video tasks; (iii) carefully optimized post-training approaches leveraging fine-grained supervised fine-tuning and video-specific RLHF with multi-dimensional reward mechanisms for comprehensive performance improvements; (iv) model acceleration achieving ~10x inference speedup through multi-stage distillation strategies and system-level optimizations. Seedance 1.0 can generate a 5-second video at 1080p resolution in only 41.4 seconds (NVIDIA-L20). Compared to state-of-the-art video generation models, Seedance 1.0 stands out with high-quality and fast video generation, offering superior spatiotemporal fluidity with structural stability, precise instruction adherence in complex multi-subject contexts, and native multi-shot narrative coherence with consistent subject representation.

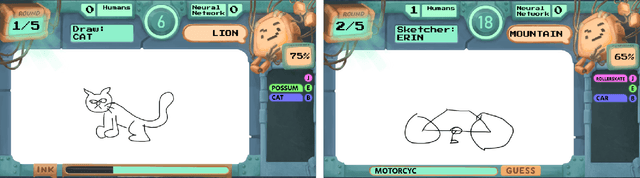

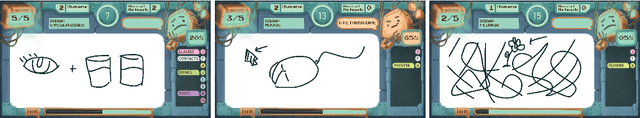

iNNk: A Multi-Player Game to Deceive a Neural Network

Jul 17, 2020

Abstract:This paper presents iNNk, a multiplayer drawing game where human players team up against a neural network (NN). The players need to successfully communicate a secret code word to each other through drawings, without being deciphered by the NN. With this game, we aim to foster a playful environment where players can, in a small way, go from passive consumers of NN applications to creative thinkers and critical challengers.
