Vijay Kumar

Adaptive Sampling of Latent Phenomena using Heterogeneous Robot Teams (ASLaP-HR)

Aug 11, 2022

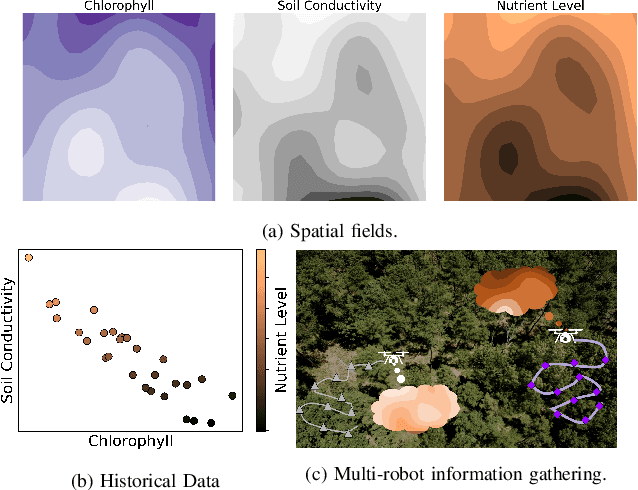

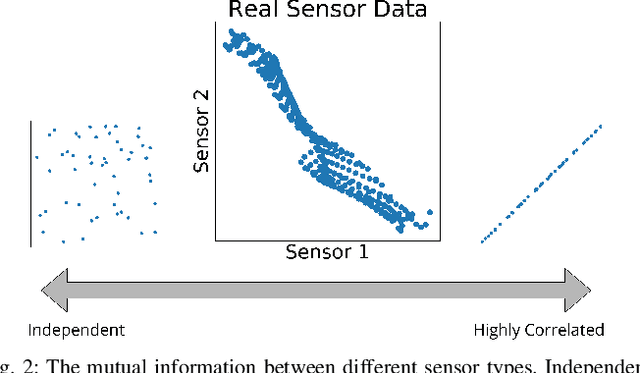

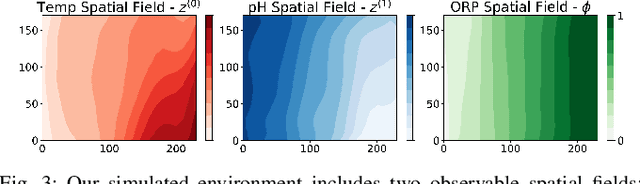

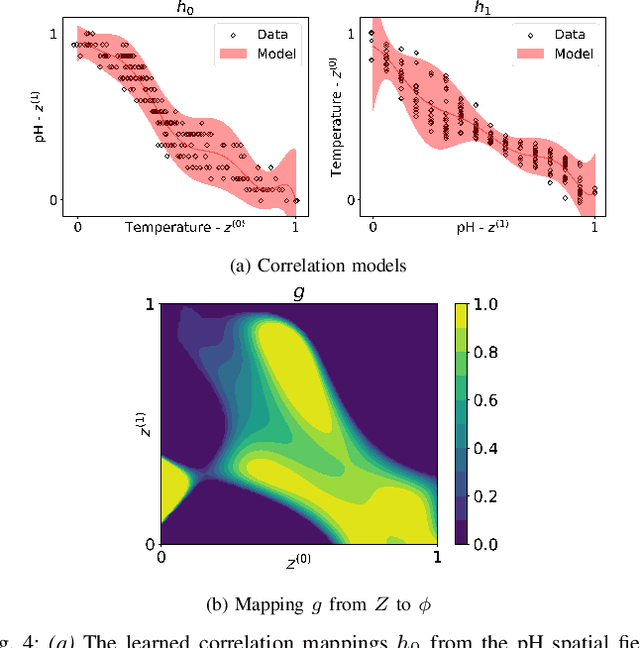

Abstract: In this paper, we present an online adaptive planning strategy for a team of robots with heterogeneous sensors to sample from a latent spatial field using a learned model for decision making. Current robotic sampling methods seek to gather information about an observable spatial field. However, many applications, such as environmental monitoring and precision agriculture, involve phenomena that are not directly observable or are costly to measure, called latent phenomena. In our approach, we seek to reason about the latent phenomenon in real time by effectively sampling the observable spatial fields using a team of robots with heterogeneous sensors, where each robot has a distinct sensor to measure a different observable field. The information gain is estimated using a learned model that maps from the observable spatial fields to the latent phenomenon. This model captures aleatoric uncertainty in the relationship to allow for information-theoretic measures. Additionally, we explicitly consider the correlations among the observable spatial fields, capturing the relationship between sensor types whose observations are not independent. We show it is possible to learn these correlations, and investigate the impact of the learned correlation models on the performance of our sampling approach. Through our qualitative and quantitative results, we illustrate that empirically learned correlations improve the overall sampling efficiency of the team. We simulate our approach using a data set of sensor measurements collected on Lac Hertel, in Quebec, which we make publicly available.

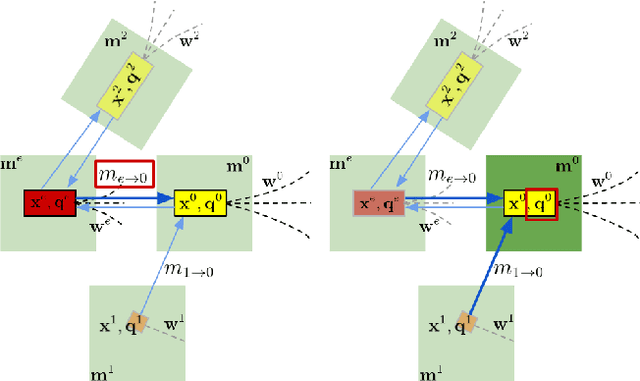

Decentralized Risk-Aware Tracking of Multiple Targets

Aug 04, 2022

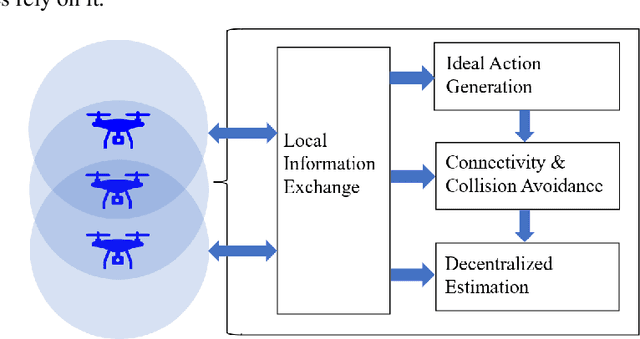

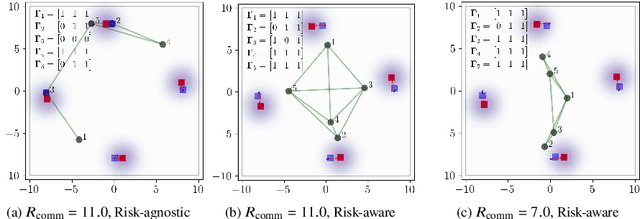

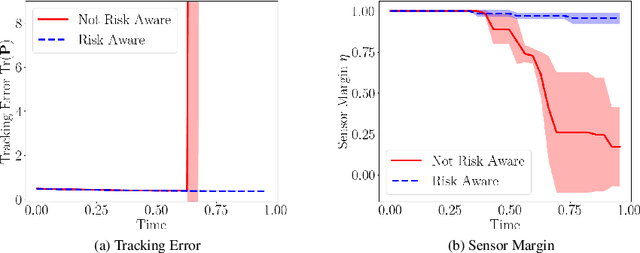

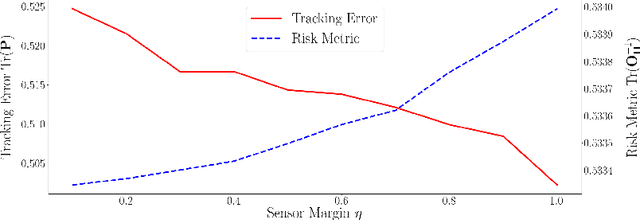

Abstract: We consider the setting where a team of robots is tasked with tracking multiple targets with the following property: approaching the targets enables more accurate target position estimation, but also increases the risk of sensor failures. Therefore, it is essential to address the trade-off between tracking quality maximization and risk minimization. In our previous work, a centralized controller was developed to plan motions for all the robots; however, this is not a scalable approach. Here, we present a decentralized and risk-aware multi-target tracking framework, in which each robot plans its motion trading off tracking accuracy maximization and aversion to risk, while only relying on its own information and information exchanged with its neighbors. We use the control barrier function to guarantee network connectivity throughout the tracking process. Extensive numerical experiments demonstrate that our system can achieve similar tracking accuracy and risk-awareness to its centralized counterpart.

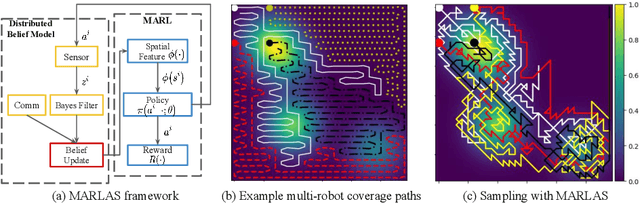

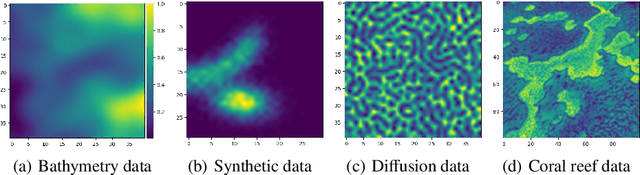

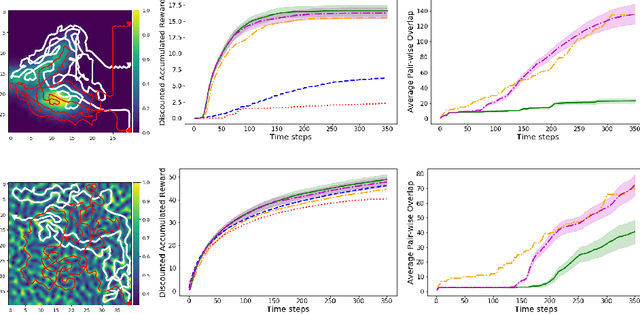

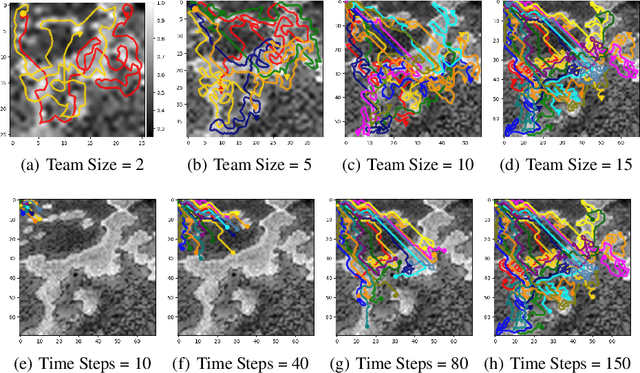

MARLAS: Multi Agent Reinforcement Learning for cooperated Adaptive Sampling

Jul 15, 2022

Abstract: The multi-robot adaptive sampling problem aims at finding trajectories for a team of robots to efficiently sample the phenomenon of interest within a given endurance budget of the robots. In this paper, we propose a robust and scalable approach using decentralized Multi-Agent Reinforcement Learning for cooperated Adaptive Sampling (MARLAS) of quasi-static environmental processes. Given a prior on the field being sampled, the proposed method learns decentralized policies for a team of robots to sample high-utility regions within a fixed budget. The multi-robot adaptive sampling problem requires the robots to coordinate with each other to avoid overlapping sampling trajectories. Therefore, we encode the estimates of neighbor positions and intermittent communication between robots into the learning process. We evaluated MARLAS over multiple performance metrics and found it to outperform other baseline multi-robot sampling techniques. We further demonstrate robustness to communication failures and scalability with both the size of the robot team and the size of the region being sampled. The experimental evaluations are conducted both in simulations on real data and in real robot experiments on a demo environmental setup.

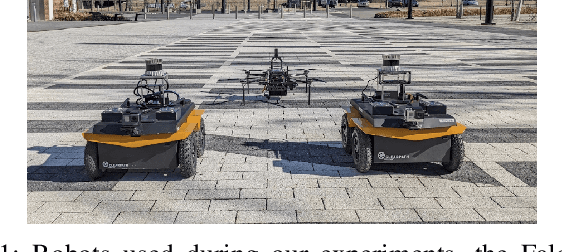

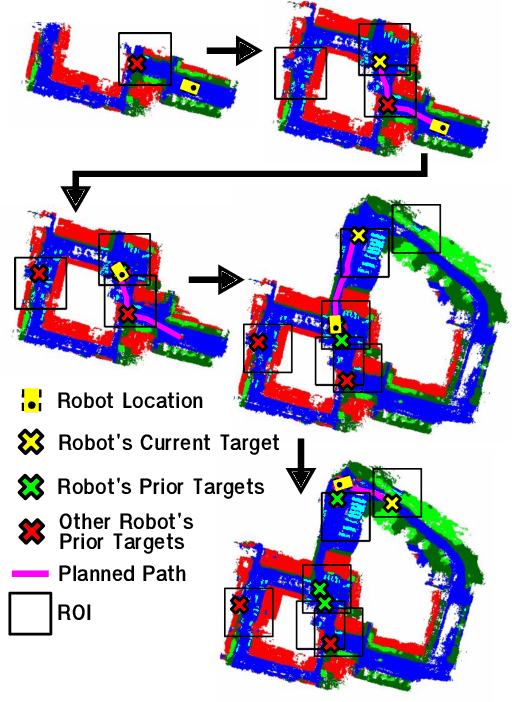

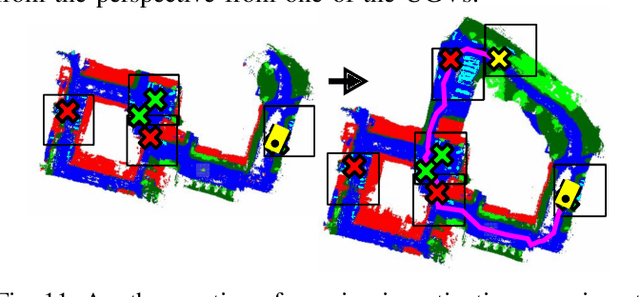

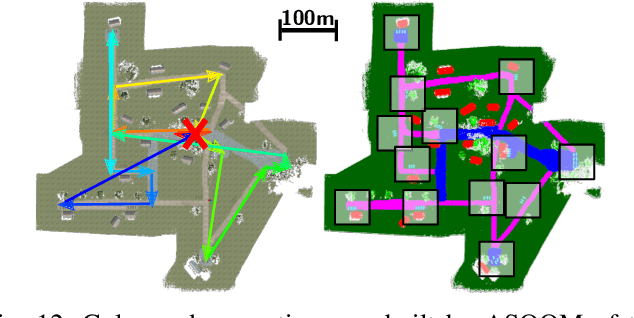

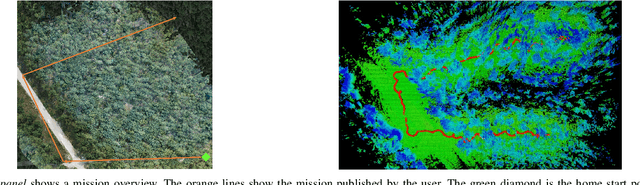

Stronger Together: Air-Ground Robotic Collaboration Using Semantics

Jun 28, 2022

Abstract: In this work, we present an end-to-end heterogeneous multi-robot system framework where ground robots are able to localize, plan, and navigate in a semantic map created in real time by a high-altitude quadrotor. The ground robots choose and deconflict their targets independently, without any external intervention. Moreover, they perform cross-view localization by matching their local maps with the overhead map using semantics. The communication backbone is opportunistic and distributed, allowing the entire system to operate with no external infrastructure aside from GPS for the quadrotor. We extensively tested our system by performing different missions on top of our framework over multiple experiments in different environments. Our ground robots travelled over 6 km autonomously with minimal intervention in the real world and over 96 km in simulation without interventions.

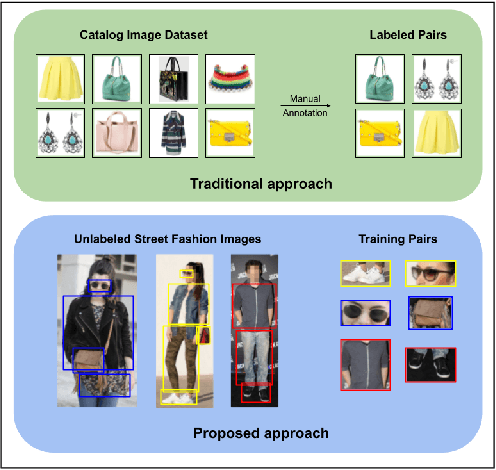

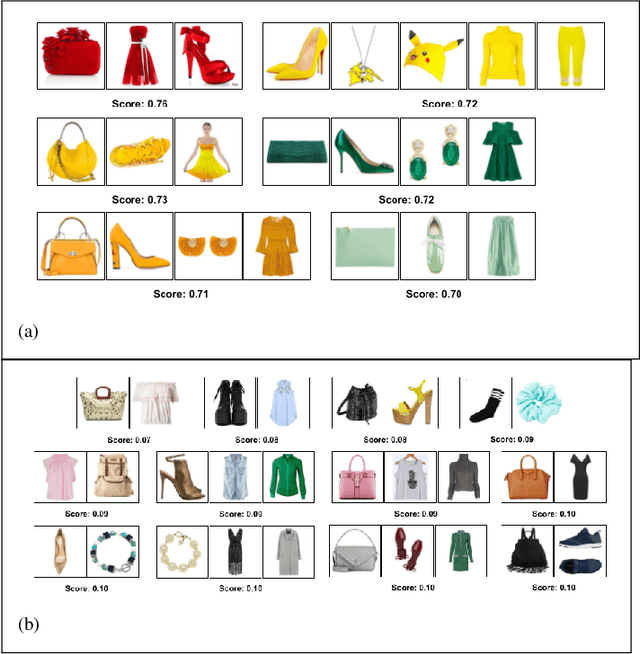

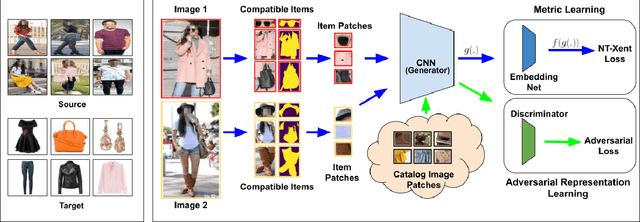

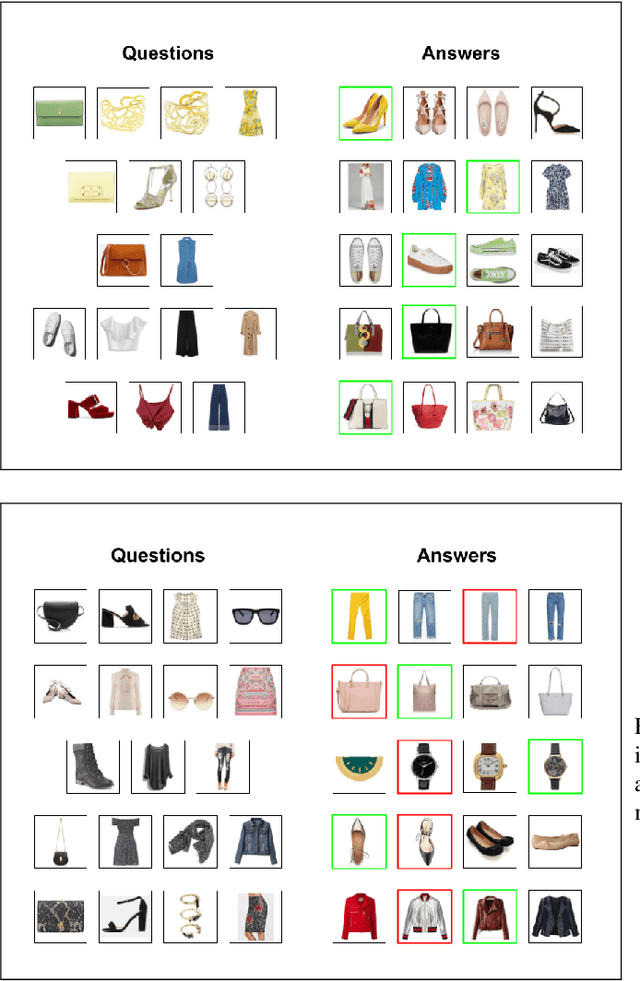

Learning Fashion Compatibility from In-the-wild Images

Jun 13, 2022

Abstract: Complementary fashion recommendation aims at identifying items from different categories (e.g. shirt, footwear, etc.) that "go well together" as an outfit. Most existing approaches learn representations for this task using labeled outfit datasets containing manually curated compatible item combinations. In this work, we propose to learn representations for compatibility prediction from in-the-wild street fashion images through self-supervised learning by leveraging the fact that people often wear compatible outfits. Our pretext task is formulated such that the representations of different items worn by the same person are closer compared to those worn by other people. Additionally, to reduce the domain gap between in-the-wild and catalog images during inference, we introduce an adversarial loss that minimizes the difference in feature distribution between the two domains. We conduct our experiments on two popular fashion compatibility benchmarks, Polyvore and Polyvore-Disjoint outfits, and outperform existing self-supervised approaches, with particularly significant gains in the cross-dataset setting, where training and testing images are from different sources.
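The pretext task described above (items worn by the same person should be closer in embedding space than items worn by different people) can be illustrated with a margin-based triplet loss. This is only a minimal sketch: the abstract does not state the exact loss, so the triplet form, the squared Euclidean distance, the `margin` value, and the function names are all assumptions made for illustration.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two embedding vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge loss that is zero once the same-person pair is closer than the
    different-person pair by at least `margin` (hypothetical formulation).

    anchor:   embedding of one item worn by person A
    positive: embedding of another item worn by person A
    negative: embedding of an item worn by a different person
    """
    d_pos = squared_distance(anchor, positive)
    d_neg = squared_distance(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


# Toy 2-D embeddings: the first triplet already satisfies the margin,
# the second does not and therefore incurs a positive loss.
satisfied = triplet_loss([0.0, 0.0], [0.1, 0.0], [1.0, 0.0])
violated = triplet_loss([0.0, 0.0], [0.1, 0.0], [0.2, 0.0])
```

In a training loop, a loss of this kind would be averaged over many triplets mined from street images; the adversarial domain-alignment term mentioned in the abstract is not covered by this sketch.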

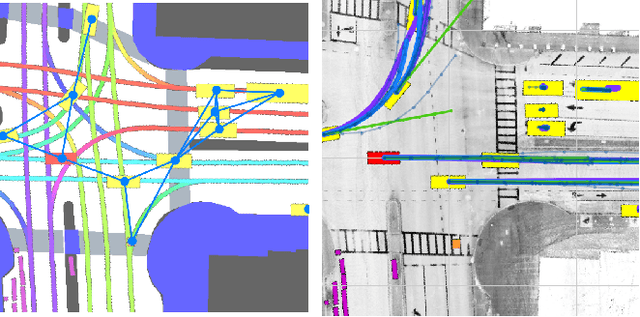

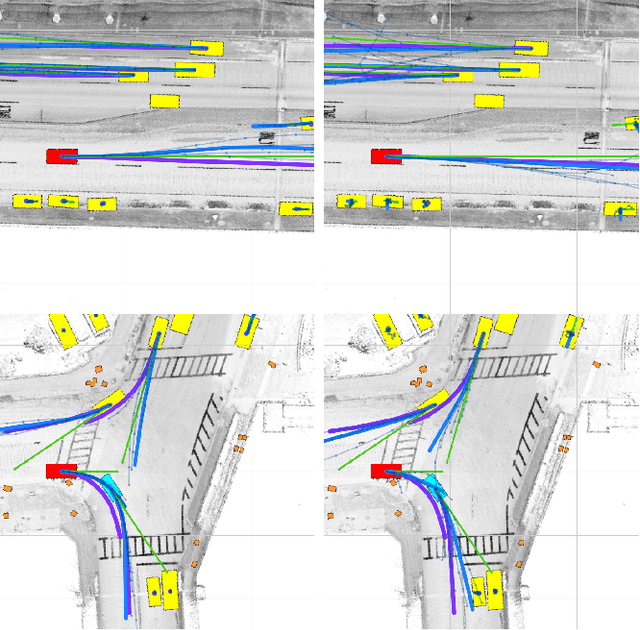

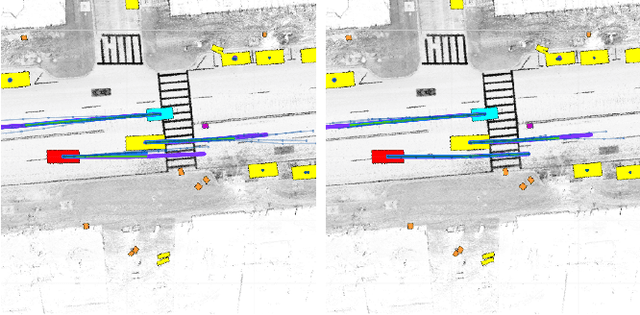

Any Way You Look At It: Semantic Crossview Localization and Mapping with LiDAR

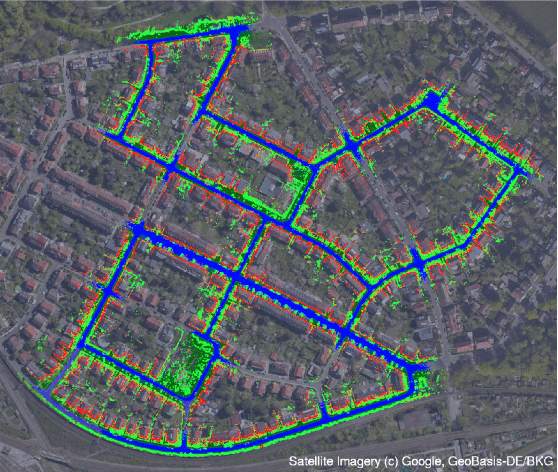

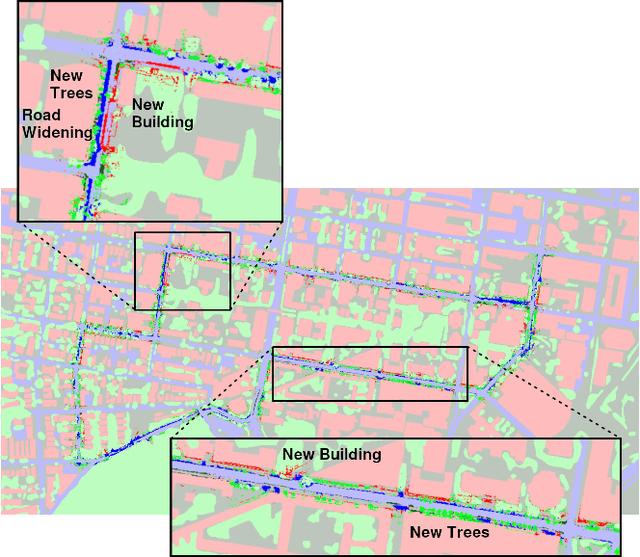

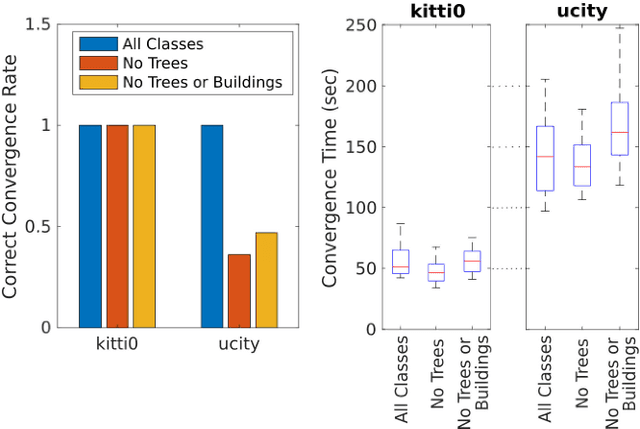

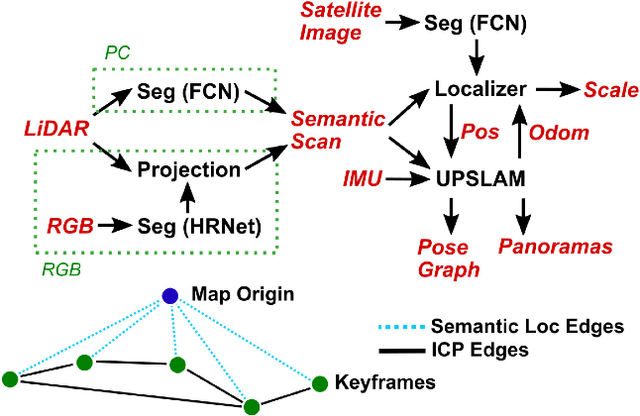

Mar 16, 2022

Abstract: Currently, GPS is by far the most popular global localization method. However, it is not always reliable or accurate in all environments. SLAM methods enable local state estimation but provide no means of registering the local map to a global one, which can be important for inter-robot collaboration or human interaction. In this work, we present a real-time method for utilizing semantics to globally localize a robot using only egocentric 3D semantically labelled LiDAR and IMU as well as top-down RGB images obtained from satellites or aerial robots. Additionally, as it runs, our method builds a globally registered, semantic map of the environment. We validate our method on KITTI as well as our own challenging datasets, and show better than 10 meter accuracy, a high degree of robustness, and the ability to estimate the scale of a top-down map on the fly if it is initially unknown.

* Published in the IEEE Robotics and Automation Letters and presented at the IEEE 2021 International Conference on Robotics and Automation. See https://www.youtube.com/watch?v=_qwAoYK9iGU for the accompanying video.

Experiments in Adaptive Replanning for Fast Autonomous Flight in Forests

Mar 02, 2022

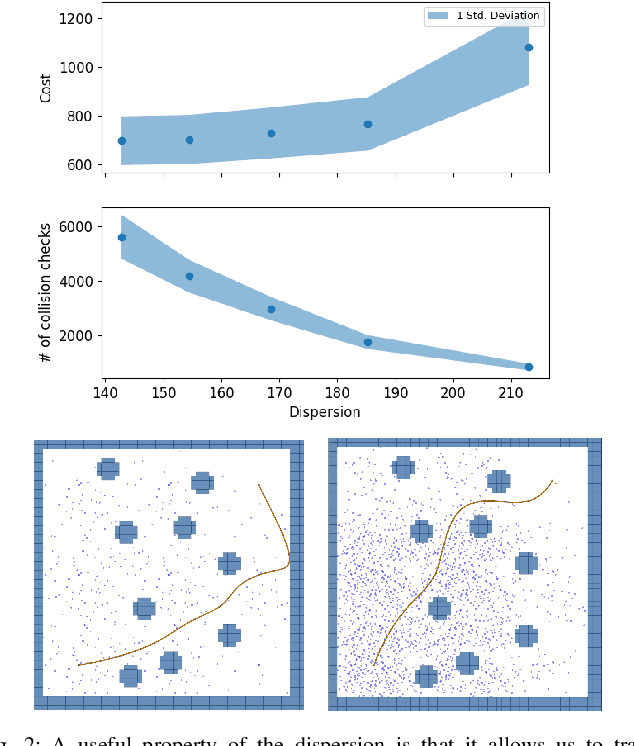

Abstract: Fast, autonomous flight in unstructured, cluttered environments such as forests is challenging because it requires the robot to compute new plans in real time on a computationally constrained platform. In this paper, we enable this capability with a search-based planning framework that adapts sampling density in real time to find dynamically feasible plans while remaining computationally tractable. A paramount challenge in search-based planning is that dense obstacles both necessitate large graphs (to guarantee completeness) and reduce the efficiency of graph search (as heuristics become less accurate). To address this, we develop a planning framework with two parts: one that maximizes planner completeness for a given graph size, and a second that dynamically maximizes graph size subject to computational constraints. This framework is enabled by motion planning graphs that are defined by a single parameter, dispersion, which quantifies the maximum trajectory cost to reach an arbitrary state from the graph. We show through real and simulated experiments how the dispersion can be adapted to different environments in real time, allowing operation in environments with varying density. The simulated experiment demonstrates improved performance over a baseline search-based planning algorithm. We also demonstrate flight speeds of up to 2.5 m/s in real-world cluttered pine forests.
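The dispersion parameter mentioned above can be approximated numerically as a max-min quantity: for every state of interest, take the cheapest cost of reaching it from any graph vertex, then take the worst case over all states. The sketch below is a rough illustration under strong assumptions: straight-line Euclidean distance stands in for the trajectory cost, the state space is represented by a finite list of query states, and the names `dispersion`, `graph_states`, and `query_states` are hypothetical.

```python
import math


def dispersion(graph_states, query_states, cost=math.dist):
    """Estimate dispersion as the worst-case cost, over the sampled query
    states, of reaching a state from its cheapest graph vertex.

    `cost(g, q)` is a stand-in for the trajectory cost from graph vertex g
    to state q; Euclidean distance is used here purely for illustration.
    """
    return max(
        min(cost(g, q) for g in graph_states)
        for q in query_states
    )


# Query states sampled every 0.5 units along a line segment from x=0 to x=4.
queries = [(0.5 * i, 0.0) for i in range(9)]

coarse_graph = [(0.0, 0.0), (4.0, 0.0)]
dense_graph = [(0.0, 0.0), (2.0, 0.0), (4.0, 0.0)]

coarse_dispersion = dispersion(coarse_graph, queries)  # worst case at x=2
dense_dispersion = dispersion(dense_graph, queries)    # worst case at x=1 or x=3
```

Adding a vertex lowers the dispersion, which is the trade-off the abstract points to: a denser graph covers the state space more tightly but is more expensive to search.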

RTGNN: A Novel Approach to Model Stochastic Traffic Dynamics

Feb 21, 2022

Abstract: Modeling stochastic traffic dynamics is critical to developing self-driving cars. Because it is difficult to develop first-principles models of cars driven by humans, there is great potential for using data-driven approaches in developing traffic dynamical models. While there is extensive literature on this subject, previous works mainly address the prediction accuracy of data-driven models. Moreover, it is often difficult to apply these models to common planning frameworks since they fail to meet the assumptions therein. In this work, we propose a new stochastic traffic model, Recurrent Traffic Graph Neural Network (RTGNN), by enforcing additional structures on the model so that the proposed model can be seamlessly integrated with existing motion planning algorithms. RTGNN is a Markovian model and is able to infer future traffic states conditioned on the motion of the ego vehicle. Specifically, RTGNN uses a definition of the traffic state that includes the state of all players in a local region and is therefore able to make joint predictions for all agents of interest. Meanwhile, we explicitly model the hidden states of agents, "intentions," as part of the traffic state to reflect the inherent partial observability of traffic dynamics. The above-mentioned properties are critical for integrating RTGNN with motion planning algorithms coupling prediction and decision making. Despite the additional structures, we show that RTGNN is able to achieve state-of-the-art accuracy through comparisons with other similar works.

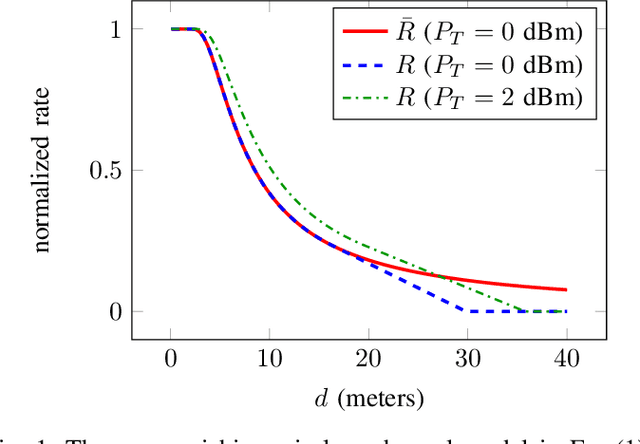

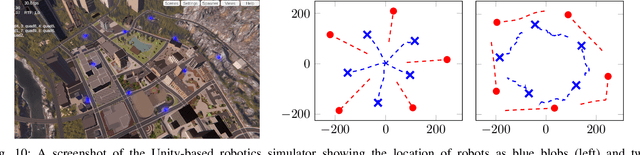

Learning Connectivity-Maximizing Network Configurations

Dec 14, 2021

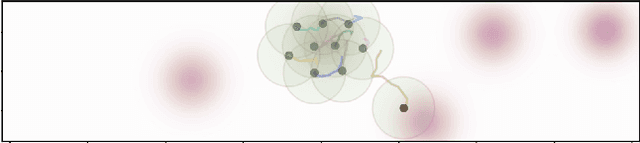

Abstract: In this work, we propose a data-driven approach to optimizing the algebraic connectivity of a team of robots. While a considerable amount of research has been devoted to this problem, we lack a method that scales in a manner suitable for online applications for more than a handful of agents. To that end, we propose a supervised learning approach with a convolutional neural network (CNN) that learns to place communication agents from an expert that uses an optimization-based strategy. We demonstrate the performance of our CNN on canonical line and ring topologies, 105k randomly generated test cases, and larger teams not seen during training. We also show how our system can be applied to dynamic robot teams through a Unity-based simulation. After training, our system produces connected configurations two orders of magnitude faster than the optimization-based scheme for teams of 10-20 agents.
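For readers unfamiliar with the quantity being optimized: the algebraic connectivity of a communication graph is the second-smallest eigenvalue of its graph Laplacian, and it is positive exactly when the graph is connected. The sketch below computes it for small graphs with a shifted power iteration in plain Python. It only illustrates the metric, not the CNN or the optimization-based expert from the paper; the function names, the iteration count, and the choice of power iteration are assumptions made for this example.

```python
import math


def laplacian(n, edges):
    """Dense graph Laplacian (degree matrix minus adjacency) for n nodes."""
    L = [[0.0] * n for _ in range(n)]
    for i, j in edges:
        L[i][i] += 1.0
        L[j][j] += 1.0
        L[i][j] -= 1.0
        L[j][i] -= 1.0
    return L


def _matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def _unit_centered(v):
    """Remove the all-ones component (eigenvalue 0) and normalize."""
    mean = sum(v) / len(v)
    centered = [x - mean for x in v]
    norm = math.sqrt(sum(x * x for x in centered))
    return [x / norm for x in centered]


def algebraic_connectivity(n, edges, iters=2000):
    """Second-smallest Laplacian eigenvalue via power iteration on
    (shift * I - L), restricted to vectors orthogonal to all-ones."""
    if n < 2:
        raise ValueError("need at least two nodes")
    L = laplacian(n, edges)
    # Every Laplacian eigenvalue is at most 2 * max degree, so this shift
    # keeps (shift * I - L) positive definite.
    shift = 2.0 * max(L[i][i] for i in range(n)) + 1.0
    v = _unit_centered([float(i) for i in range(n)])
    for _ in range(iters):
        Lv = _matvec(L, v)
        v = _unit_centered([shift * v[i] - Lv[i] for i in range(n)])
    Lv = _matvec(L, v)
    return sum(v[i] * Lv[i] for i in range(n))  # Rayleigh quotient


line4 = algebraic_connectivity(4, [(0, 1), (1, 2), (2, 3)])
ring6 = algebraic_connectivity(6, [(i, (i + 1) % 6) for i in range(6)])
split = algebraic_connectivity(4, [(0, 1), (2, 3)])  # disconnected graph
```

For the line and ring topologies the result can be checked against the closed forms 2(1 - cos(π/n)) and 2(1 - cos(2π/n)); the disconnected example evaluates to approximately zero. Power iteration converges slowly on large or nearly disconnected graphs, so a dedicated eigensolver would be the more practical choice beyond toy sizes.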

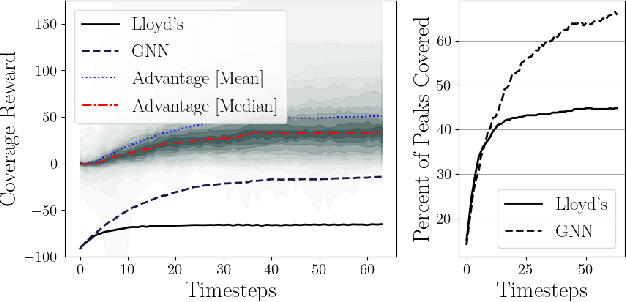

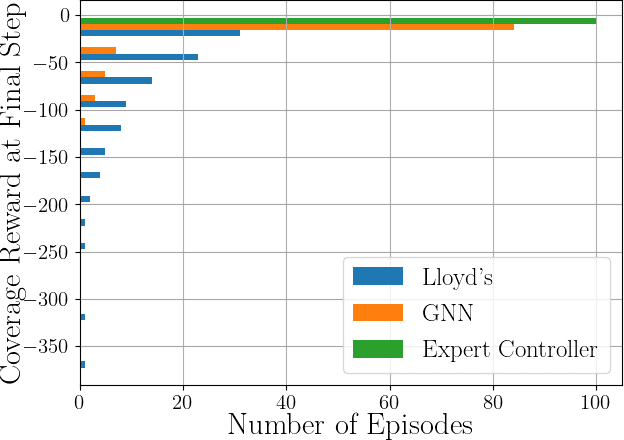

Coverage Control in Multi-Robot Systems via Graph Neural Networks

Sep 30, 2021

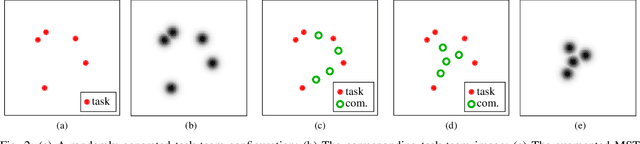

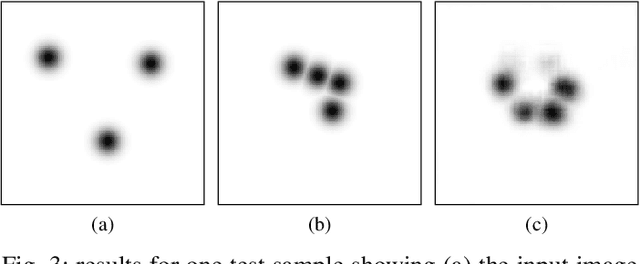

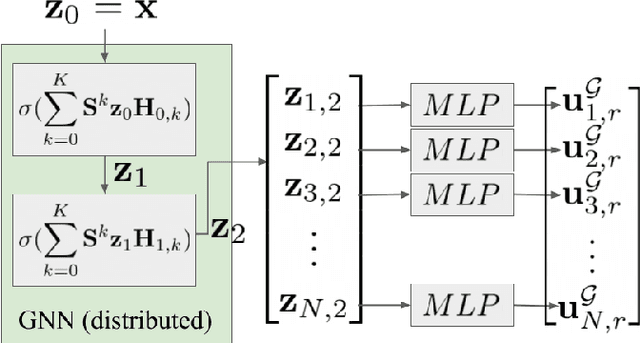

Abstract: This paper develops a decentralized approach to mobile sensor coverage by a multi-robot system. We consider a scenario where a team of robots with limited sensing range must position itself to effectively detect events of interest in a region characterized by areas of varying importance. Towards this end, we develop a decentralized control policy for the robots, realized via a Graph Neural Network, which uses inter-robot communication to leverage non-local information for control decisions. By explicitly sharing information between multi-hop neighbors, the decentralized controller achieves a higher quality of coverage when compared to classical approaches that do not communicate and leverage only local information available to each robot. Simulated experiments demonstrate the efficacy of multi-hop communication for multi-robot coverage and evaluate the scalability and transferability of the learning-based controllers.
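To make the notion of multi-hop neighbors concrete, the sketch below finds every robot whose information could reach a given robot within K communication hops, using a breadth-first search over the communication graph. It is a simplified illustration only: it does not implement the Graph Neural Network controller, and the adjacency-dictionary representation and the name `k_hop_neighbors` are assumptions made for this example.

```python
from collections import deque


def k_hop_neighbors(adjacency, source, k):
    """Return the set of robots reachable from `source` in at most k hops.

    adjacency: dict mapping each robot id to an iterable of the robots it
               can communicate with directly (hypothetical representation).
    """
    hops = {source: 0}
    queue = deque([source])
    while queue:
        robot = queue.popleft()
        if hops[robot] == k:
            continue  # do not expand beyond k hops
        for neighbor in adjacency[robot]:
            if neighbor not in hops:
                hops[neighbor] = hops[robot] + 1
                queue.append(neighbor)
    return {robot for robot, d in hops.items() if 0 < d <= k}


# Five robots in a line: 0 - 1 - 2 - 3 - 4
line = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}

one_hop = k_hop_neighbors(line, 0, 1)   # {1}
two_hop = k_hop_neighbors(line, 0, 2)   # {1, 2}
center = k_hop_neighbors(line, 2, 2)    # {0, 1, 3, 4}
```

With K = 1 a robot only hears from direct neighbors, which corresponds to the purely local setting; larger K widens the set of robots whose information can inform each control decision.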
