Liwei Wang

N3C Natural Language Processing

Combinatorial Semi-Bandit in the Non-Stationary Environment

Feb 10, 2020

Abstract:In this paper, we investigate the non-stationary combinatorial semi-bandit problem, both in the switching case and in the dynamic case. In the general case where (a) the reward function is non-linear, (b) arms may be probabilistically triggered, and (c) only approximate offline oracle exists \cite{wang2017improving}, our algorithm achieves $\tilde{\mathcal{O}}(\sqrt{\mathcal{S} T})$ distribution-dependent regret in the switching case, and $\tilde{\mathcal{O}}(\mathcal{V}^{1/3}T^{2/3})$ in the dynamic case, where $\mathcal S$ is the number of switchings and $\mathcal V$ is the sum of the total ``distribution changes''. The regret bounds in both scenarios are nearly optimal, but our algorithm needs to know the parameter $\mathcal S$ or $\mathcal V$ in advance. We further show that by employing another technique, our algorithm no longer needs to know the parameters $\mathcal S$ or $\mathcal V$ but the regret bounds could become suboptimal. In a special case where the reward function is linear and we have an exact oracle, we design a parameter-free algorithm that achieves nearly optimal regret both in the switching case and in the dynamic case without knowing the parameters in advance.

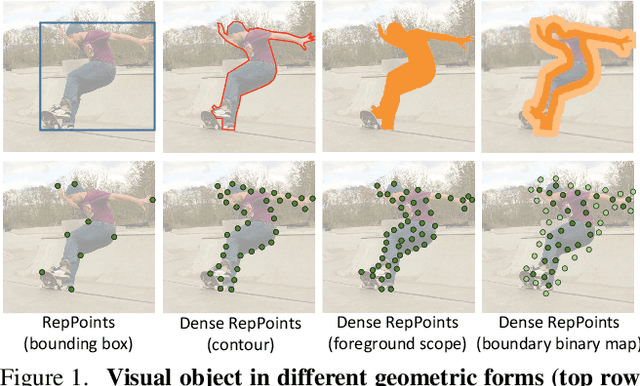

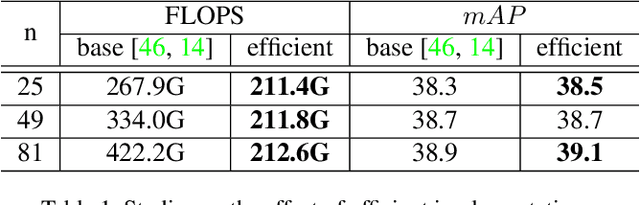

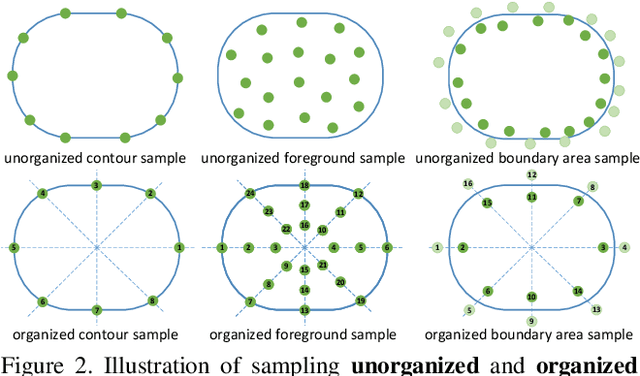

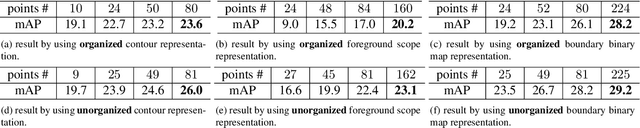

Dense RepPoints: Representing Visual Objects with Dense Point Sets

Dec 24, 2019

Abstract:We present an object representation, called \textbf{Dense RepPoints}, for flexible and detailed modeling of object appearance and geometry. In contrast to the coarse geometric localization and feature extraction of bounding boxes, Dense RepPoints adaptively distributes a dense set of points to semantically and geometrically significant positions on an object, providing informative cues for object analysis. Techniques are developed to address challenges related to supervised training for dense point sets from image segments annotations and making this extensive representation computationally practical. In addition, the versatility of this representation is exploited to model object structure over multiple levels of granularity. Dense RepPoints significantly improves performance on geometrically-oriented visual understanding tasks, including a $1.6$ AP gain in object detection on the challenging COCO benchmark.

Defective Convolutional Layers Learn Robust CNNs

Nov 19, 2019

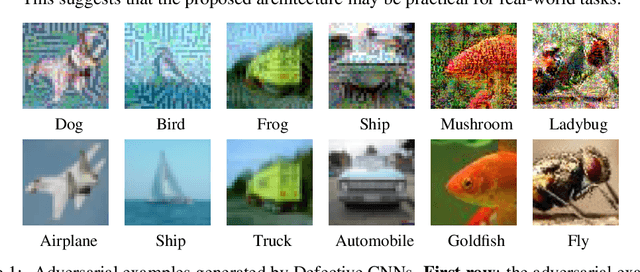

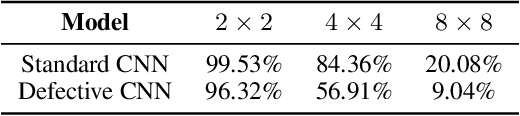

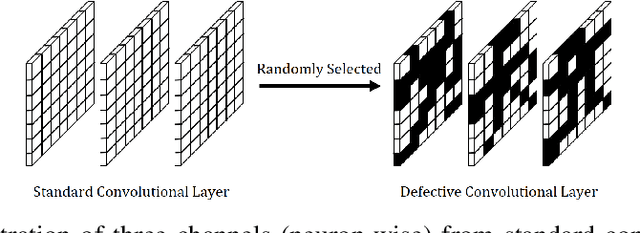

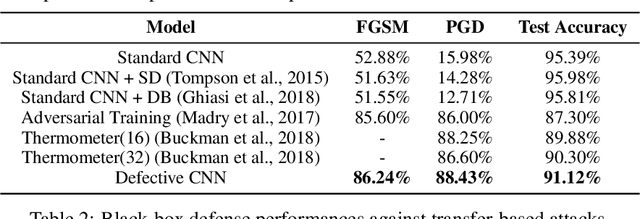

Abstract:Robustness of convolutional neural networks has recently been highlighted by the adversarial examples, i.e., inputs added with well-designed perturbations which are imperceptible to humans but can cause the network to give incorrect outputs. Recent research suggests that the noises in adversarial examples break the textural structure, which eventually leads to wrong predictions by convolutional neural networks. To help a convolutional neural network make predictions relying less on textural information, we propose defective convolutional layers which contain defective neurons whose activations are set to be a constant function. As the defective neurons contain no information and are far different from the standard neurons in its spatial neighborhood, the textural features cannot be accurately extracted and the model has to seek for other features for classification, such as the shape. We first show that predictions made by the defective CNN are less dependent on textural information, but more on shape information, and further find that adversarial examples generated by the defective CNN appear to have semantic shapes. Experimental results demonstrate the defective CNN has higher defense ability than the standard CNN against various types of attack. In particular, it achieves state-of-the-art performance against transfer-based attacks without applying any adversarial training.
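The idea of a defective layer can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the helper names `make_defect_mask` and `apply_defects`, the `keep_prob` parameter, the choice of zero as the constant, and the decision to sample the mask once and reuse it are all assumptions made for the example.

```python
import numpy as np

def make_defect_mask(shape, keep_prob, rng):
    """Return a 0/1 mask; 0 marks a defective neuron (hypothetical helper)."""
    return (rng.random(shape) < keep_prob).astype(np.float32)

def apply_defects(activations, mask, constant=0.0):
    """Keep activations where mask == 1, output `constant` where mask == 0."""
    return activations * mask + constant * (1.0 - mask)

rng = np.random.default_rng(0)
# Assumed layout: (channels, height, width) feature map of one conv layer.
features = rng.standard_normal((4, 8, 8)).astype(np.float32)
mask = make_defect_mask(features.shape, keep_prob=0.7, rng=rng)
defective_features = apply_defects(features, mask)
```

Unlike dropout, the mask in this sketch is not resampled per batch, so the same positions stay constant at both training and test time.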

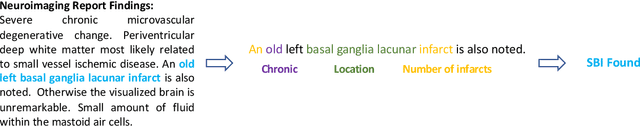

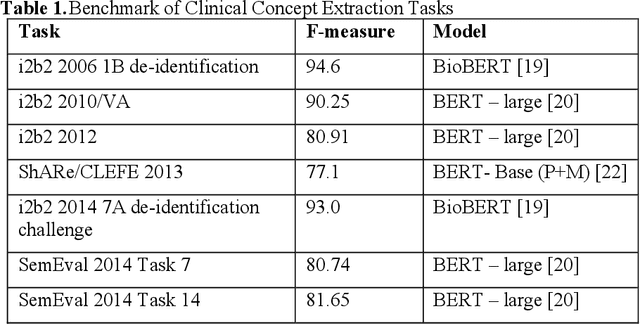

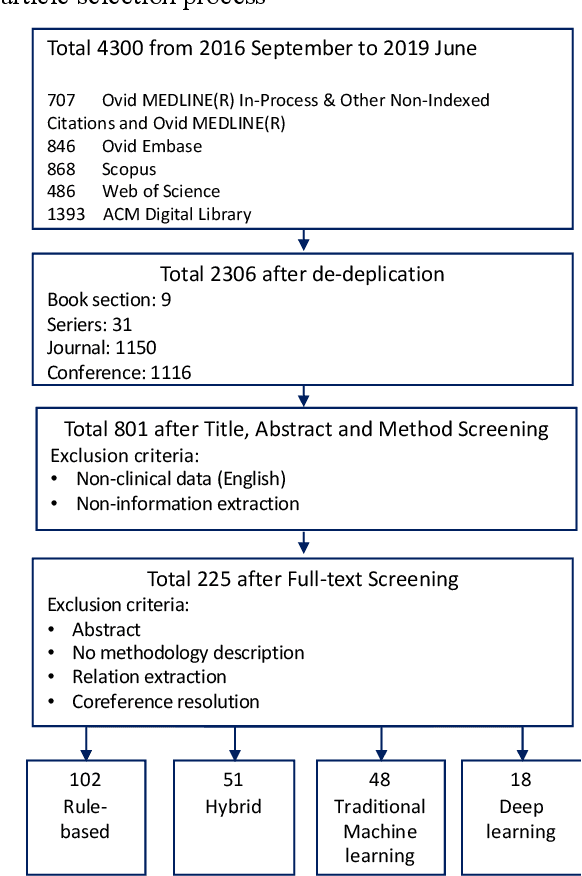

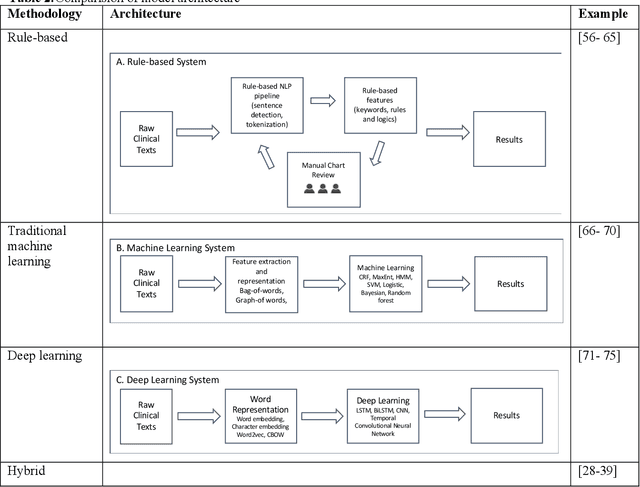

Development of Clinical Concept Extraction Applications: A Methodology Review

Oct 28, 2019

Abstract:Our study provides a review of the development of clinical concept extraction applications from January 2009 to June 2019. We hope that studying different approaches across varied clinical contexts can inform decision making in the development of clinical concept extraction applications.

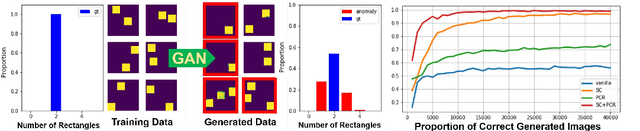

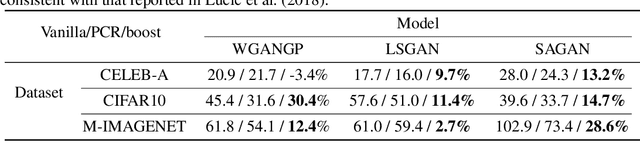

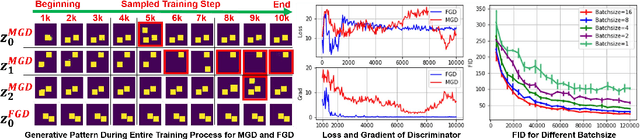

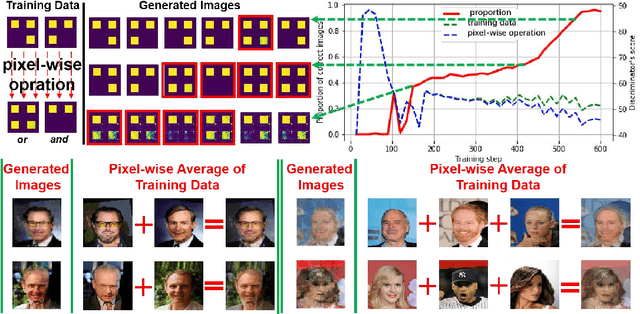

On the Anomalous Generalization of GANs

Oct 06, 2019

Abstract:Generative models, especially Generative Adversarial Networks (GANs), have received significant attention recently. However, it has been observed that in terms of some attributes, e.g. the number of simple geometric primitives in an image, GANs are not able to learn the target distribution in practice. Motivated by this observation, we discover two specific problems of GANs leading to anomalous generalization behaviour, which we refer to as the sample insufficiency and the pixel-wise combination. For the first problem of sample insufficiency, we show theoretically and empirically that the batchsize of the training samples in practice may be insufficient for the discriminator to learn an accurate discrimination function. It could result in unstable training dynamics for the generator, leading to anomalous generalization. For the second problem of pixel-wise combination, we find that besides recognizing the positive training samples as real, under certain circumstances, the discriminator could be fooled to recognize the pixel-wise combinations (e.g. pixel-wise average) of the positive training samples as real. However, those combinations could be visually different from the real samples in the target distribution. With the fooled discriminator as reference, the generator would obtain biased supervision further, leading to the anomalous generalization behaviour. Additionally, in this paper, we propose methods to mitigate the anomalous generalization of GANs. Extensive experiments on benchmark show our proposed methods improve the FID score up to 30\% on natural image dataset.

Robust Local Features for Improving the Generalization of Adversarial Training

Sep 23, 2019

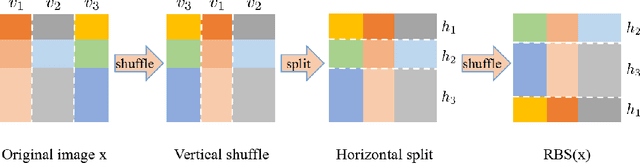

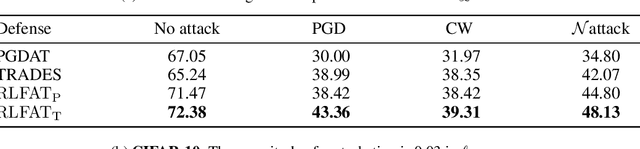

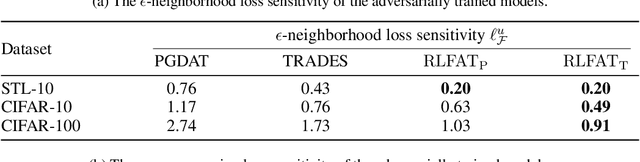

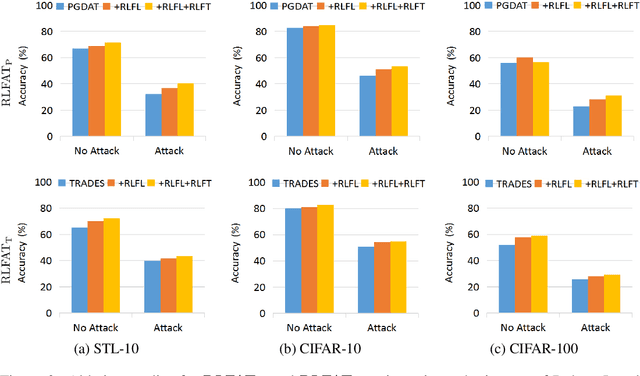

Abstract:Adversarial training has been demonstrated as one of the most effective methods for training robust models so as to defend against adversarial examples. However, adversarial training often lacks adversarially robust generalization on unseen data. Recent works show that adversarially trained models may be more biased towards global structure features. Instead, in this work, we would like to investigate the relationship between the generalization of adversarial training and the robust local features, as the local features generalize well for unseen shape variation. To learn the robust local features, we develop a Random Block Shuffle (RBS) transformation to break up the global structure features on normal adversarial examples. We continue to propose a new approach called Robust Local Features for Adversarial Training (RLFAT), which first learns the robust local features by adversarial training on the RBS-transformed adversarial examples, and then transfers the robust local features into the training of normal adversarial examples. Finally, we implement RLFAT in two currently state-of-the-art adversarial training frameworks. Extensive experiments on STL-10, CIFAR-10, CIFAR-100 datasets show that RLFAT improves the adversarially robust generalization as well as the standard generalization of adversarial training. Additionally, we demonstrate that our method captures more local features of the object, aligning better with human perception.
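A block-shuffle transformation of the kind described above might look like the following sketch. It assumes one plausible reading of RBS: the image is cut into `k` horizontal strips that are permuted, then into `k` vertical strips that are permuted. The function name, the parameter `k`, and the divisibility requirement are illustrative assumptions, not details taken from the abstract.

```python
import numpy as np

def random_block_shuffle(image, k, rng):
    """Permute k strips along the height, then k strips along the width."""
    h, w = image.shape[:2]
    if h % k or w % k:
        raise ValueError("image height and width must be divisible by k")
    # Split along the height axis and reorder the strips at random.
    rows = np.split(image, k, axis=0)
    image = np.concatenate([rows[i] for i in rng.permutation(k)], axis=0)
    # Repeat the same operation along the width axis.
    cols = np.split(image, k, axis=1)
    return np.concatenate([cols[i] for i in rng.permutation(k)], axis=1)

rng = np.random.default_rng(0)
img = np.arange(6 * 6 * 3).reshape(6, 6, 3)  # toy H x W x C image
shuffled = random_block_shuffle(img, k=3, rng=rng)
```

Pixels inside each block keep their relative positions, so local features survive while the global layout of the object is broken up.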

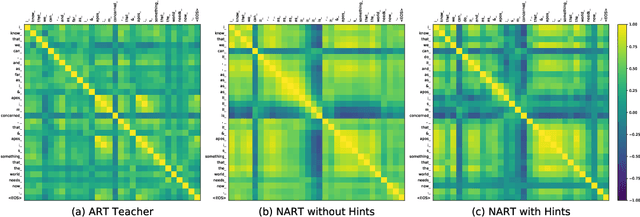

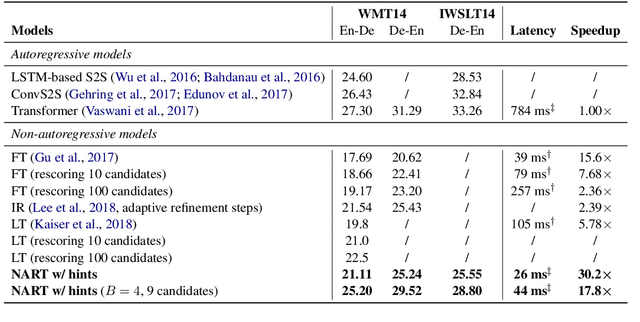

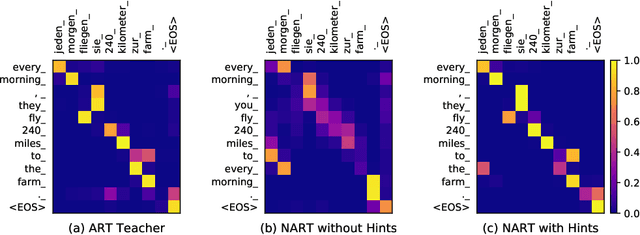

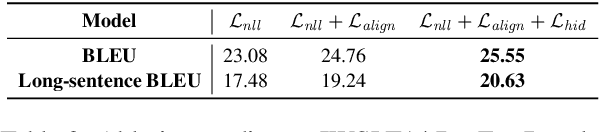

Hint-Based Training for Non-Autoregressive Machine Translation

Sep 15, 2019

Abstract:Due to the unparallelizable nature of the autoregressive factorization, AutoRegressive Translation (ART) models have to generate tokens sequentially during decoding and thus suffer from high inference latency. Non-AutoRegressive Translation (NART) models were proposed to reduce the inference time, but could only achieve inferior translation accuracy. In this paper, we proposed a novel approach to leveraging the hints from hidden states and word alignments to help the training of NART models. The results achieve significant improvement over previous NART models for the WMT14 En-De and De-En datasets and are even comparable to a strong LSTM-based ART baseline but one order of magnitude faster in inference.

McDiarmid-Type Inequalities for Graph-Dependent Variables and Stability Bounds

Sep 09, 2019

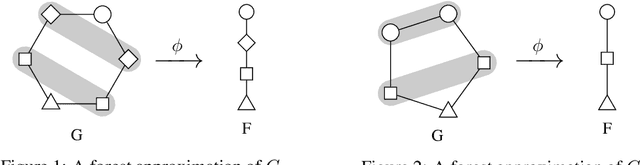

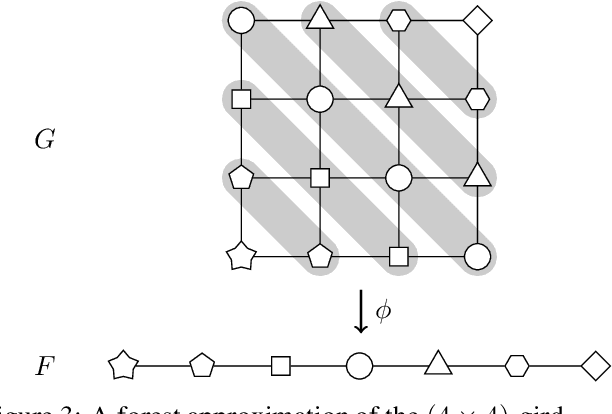

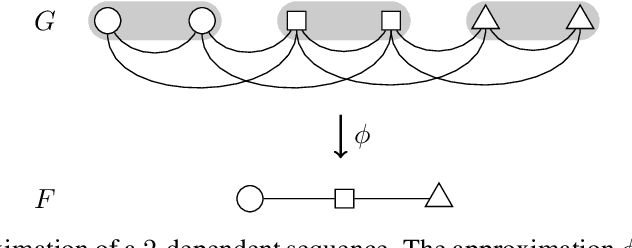

Abstract:A crucial assumption in most statistical learning theory is that samples are independently and identically distributed (i.i.d.). However, for many real applications, the i.i.d. assumption does not hold. We consider learning problems in which examples are dependent and their dependency relation is characterized by a graph. To establish algorithm-dependent generalization theory for learning with non-i.i.d. data, we first prove novel McDiarmid-type concentration inequalities for Lipschitz functions of graph-dependent random variables. We show that concentration relies on the forest complexity of the graph, which characterizes the strength of the dependency. We demonstrate that for many types of dependent data, the forest complexity is small and thus implies good concentration. Based on our new inequalities we are able to build stability bounds for learning from graph-dependent data.
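For reference, the classical McDiarmid inequality for independent variables, which is the standard textbook statement rather than the graph-dependent result of this paper, reads as follows. Let $X_1, \dots, X_n$ be independent and let $f$ satisfy the bounded-differences condition with constants $c_1, \dots, c_n$, meaning that changing the $i$-th argument alone changes $f$ by at most $c_i$. Then for every $t > 0$:

$$
\Pr\bigl(f(X_1, \dots, X_n) - \mathbb{E}\, f(X_1, \dots, X_n) \ge t\bigr) \le \exp\!\left(-\frac{2t^2}{\sum_{i=1}^{n} c_i^2}\right).
$$

The abstract states that the graph-dependent versions depend on the forest complexity of the dependency graph; the exact form of that dependence is not given here.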

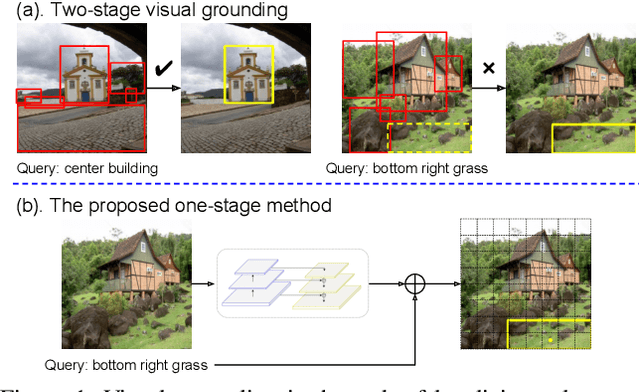

A Fast and Accurate One-Stage Approach to Visual Grounding

Aug 18, 2019

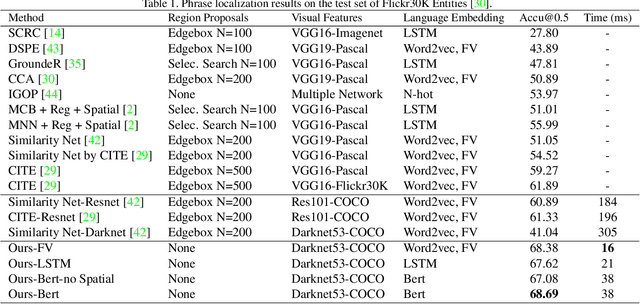

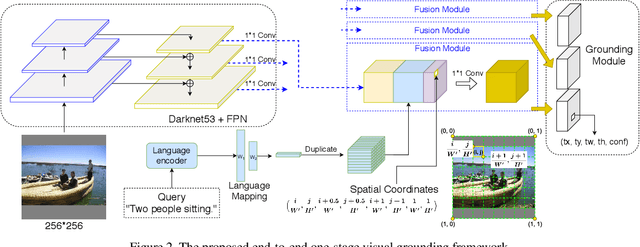

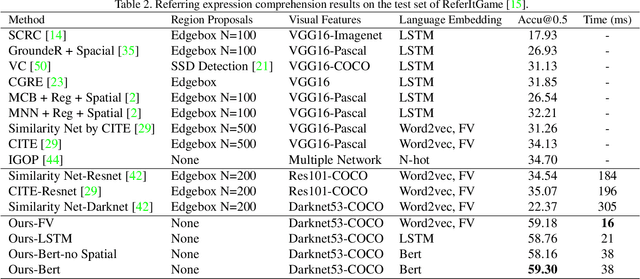

Abstract:We propose a simple, fast, and accurate one-stage approach to visual grounding, inspired by the following insight. The performances of existing propose-and-rank two-stage methods are capped by the quality of the region candidates they propose in the first stage --- if none of the candidates could cover the ground truth region, there is no hope in the second stage to rank the right region to the top. To avoid this caveat, we propose a one-stage model that enables end-to-end joint optimization. The main idea is as straightforward as fusing a text query's embedding into the YOLOv3 object detector, augmented by spatial features so as to account for spatial mentions in the query. Despite being simple, this one-stage approach shows great potential in terms of both accuracy and speed for both phrase localization and referring expression comprehension, according to our experiments. Given these results along with careful investigations into some popular region proposals, we advocate for visual grounding a paradigm shift from the conventional two-stage methods to the one-stage framework.

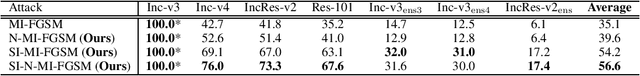

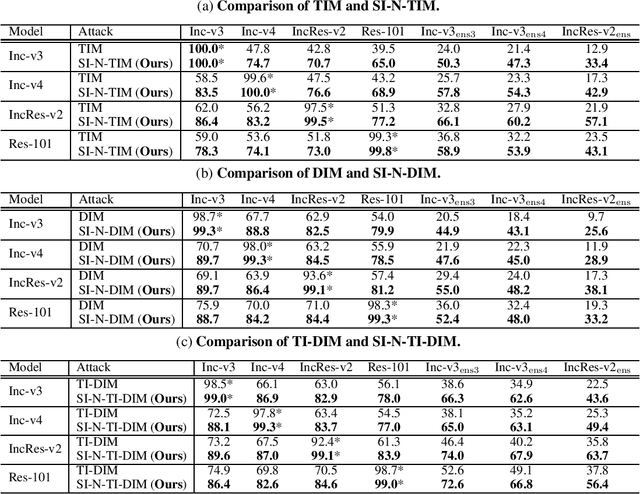

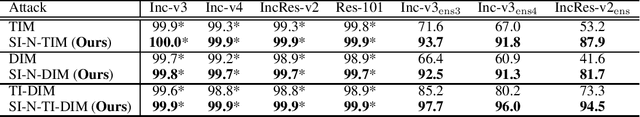

Nesterov Accelerated Gradient and Scale Invariance for Improving Transferability of Adversarial Examples

Aug 17, 2019

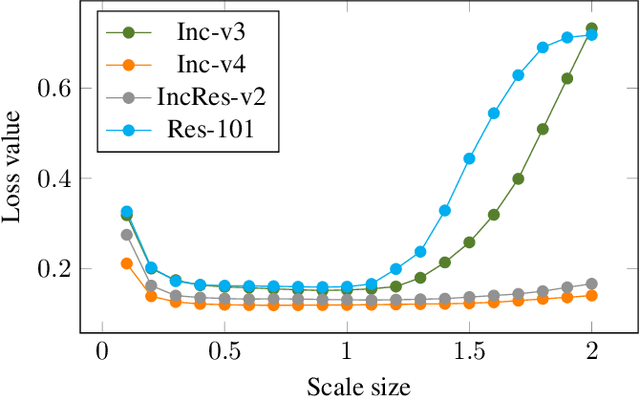

Abstract:Recent evidence suggests that deep neural networks (DNNs) are vulnerable to adversarial examples, which are crafted by adding human-imperceptible perturbations to legitimate examples. However, most of the existing adversarial attacks generate adversarial examples with weak transferability, making it difficult to evaluate the robustness of DNNs under the challenging black-box setting. To address this issue, we propose two methods: Nesterov momentum iterative fast gradient sign method (N-MI-FGSM) and scale-invariant attack method (SIM), to improve the transferability of adversarial examples. N-MI-FGSM tries a better optimizer by applying the idea of Nesterov accelerated gradient to gradient-based attack method. SIM leverages the scale-invariant property of DNNs and optimizes the generated adversarial example by a set of scaled images as the inputs. Further, the two methods can be naturally combined to form a strong attack and enhance existing gradient attack methods. Empirical results on ImageNet and NIPS 2017 adversarial competition show that the proposed methods can generate adversarial examples with higher transferability than existing competing baselines.
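The Nesterov-style update can be sketched as below. This is a minimal NumPy illustration under stated assumptions, not the authors' code: `grad_fn` is a hypothetical callable that returns the loss gradient with respect to the input, the step size is set to `eps / steps`, the gradient is L1-normalized before accumulation, and clipping to a valid pixel range is omitted.

```python
import numpy as np

def nesterov_iterative_fgsm(x, grad_fn, eps, steps, mu=1.0):
    """Iterative sign-gradient attack with a Nesterov look-ahead step."""
    alpha = eps / steps              # per-step size (assumed schedule)
    g = np.zeros_like(x)             # accumulated momentum
    x_adv = x.copy()
    for _ in range(steps):
        # Evaluate the gradient at the look-ahead point, not at x_adv.
        x_nes = x_adv + alpha * mu * g
        grad = grad_fn(x_nes)
        # Accumulate the L1-normalized gradient into the momentum term.
        g = mu * g + grad / (np.abs(grad).sum() + 1e-12)
        x_adv = x_adv + alpha * np.sign(g)
        # Project back into the L-infinity ball of radius eps around x.
        x_adv = np.clip(x_adv, x - eps, x + eps)
    return x_adv

# Toy linear loss w . x, whose gradient is w everywhere.
w = np.array([1.0, -2.0, 0.5])
x0 = np.zeros(3)
x_adv = nesterov_iterative_fgsm(x0, lambda x: w, eps=0.3, steps=10)
```

With a real model, `grad_fn` would come from automatic differentiation of the classification loss; the scale-invariant variant mentioned in the abstract would additionally average gradients over scaled copies of the input.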
