Liang Lin

FRAME Revisited: An Interpretation View Based on Particle Evolution

Jan 16, 2019

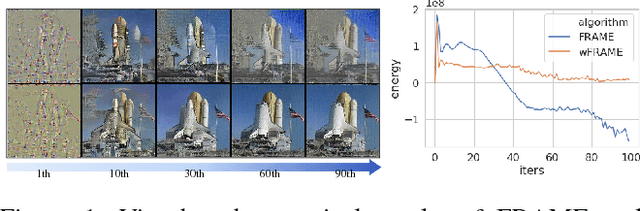

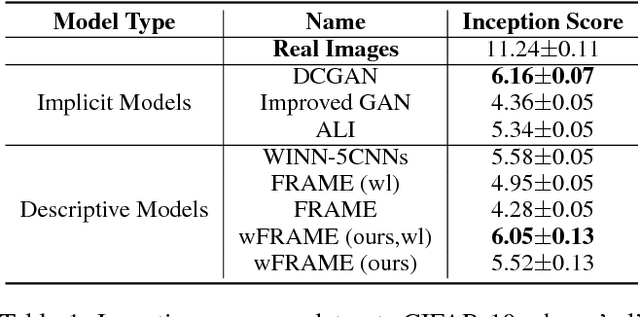

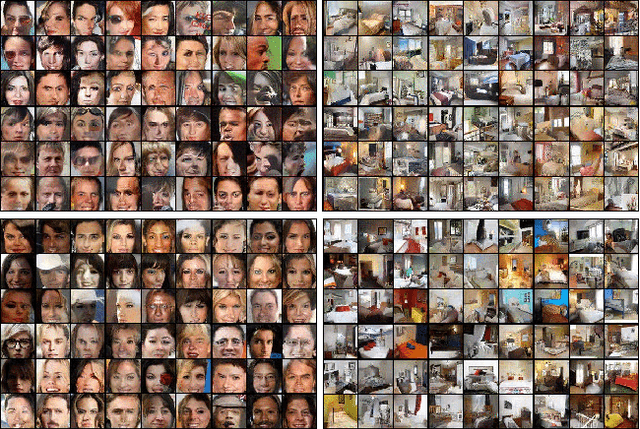

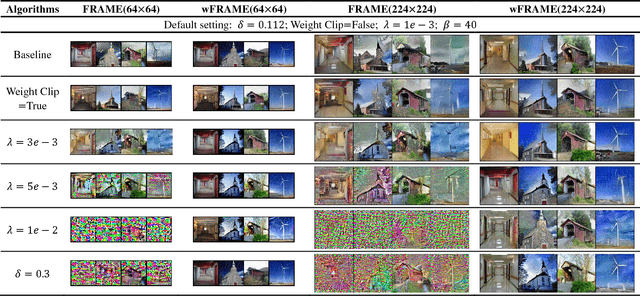

Abstract: FRAME (Filters, Random fields, And Maximum Entropy) is an energy-based descriptive model that synthesizes visual realism by capturing mutual patterns from structural input signals. Maximum likelihood estimation (MLE) is applied by default, yet it conventionally causes unstable training energy that wrecks the generated structures, a phenomenon that remains unexplained. In this paper, we provide a new theoretical insight to analyze FRAME from a particle-physics perspective, ascribing this phenomenon to the KL-vanishing issue. To stabilize the energy dissipation, we propose an alternative Wasserstein distance in discrete time, based on the conclusion that the Jordan-Kinderlehrer-Otto (JKO) discrete flow approximates the KL discrete flow when the time step size tends to 0. Moreover, this metric still maintains the model's statistical consistency. Quantitative and qualitative experiments have been conducted on several widely used datasets, and the empirical results demonstrate the effectiveness and superiority of our method.

3D Human Pose Machines with Self-supervised Learning

Jan 15, 2019

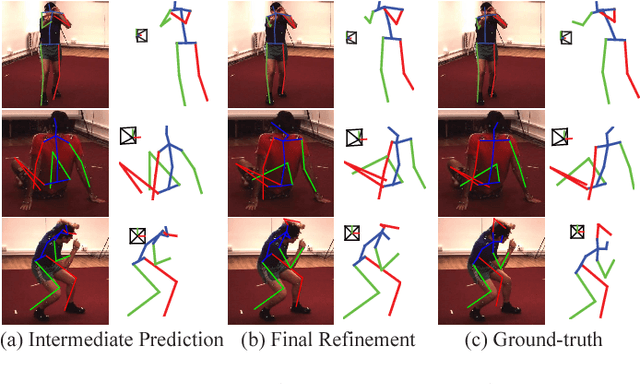

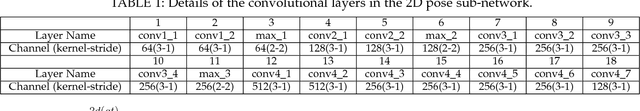

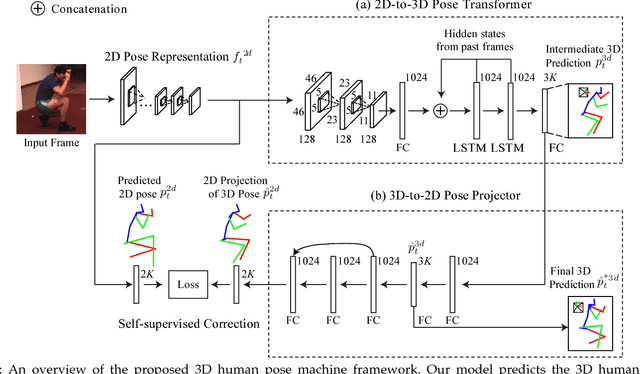

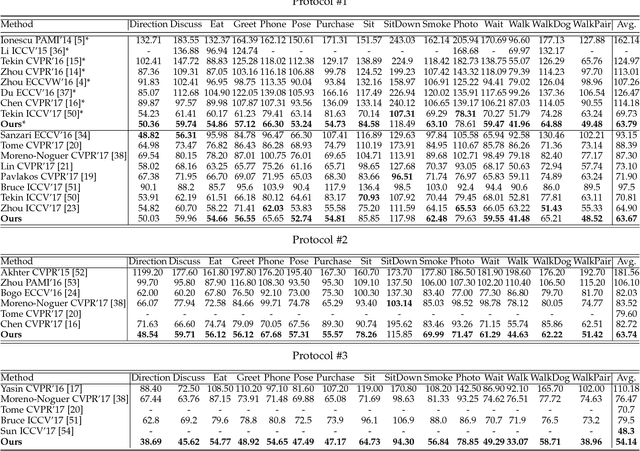

Abstract: Driven by recent computer vision and robotic applications, recovering 3D human poses has become increasingly important and has attracted growing interest. This task is quite challenging due to the diverse appearances, viewpoints, occlusions and inherent geometric ambiguities in monocular images. Most existing methods focus on designing elaborate priors/constraints to directly regress 3D human poses from the corresponding 2D human pose-aware features or 2D pose predictions. However, due to the insufficient 3D pose data for training and the domain gap between 2D space and 3D space, these methods have limited scalability in practical scenarios (e.g., outdoor scenes). To address this issue, this paper proposes a simple yet effective self-supervised correction mechanism to learn the intrinsic structures of human poses from abundant images. Specifically, the proposed mechanism involves two dual learning tasks, i.e., the 2D-to-3D pose transformation and the 3D-to-2D pose projection, which serve as a bridge between 3D and 2D human poses in a type of "free" self-supervision for accurate 3D human pose estimation. The 2D-to-3D pose transformation sequentially regresses intermediate 3D poses by transforming the pose representation from the 2D domain to the 3D domain under the sequence-dependent temporal context, while the 3D-to-2D pose projection refines the intermediate 3D poses by maintaining geometric consistency between the 2D projections of the 3D poses and the estimated 2D poses. We further apply our self-supervised correction mechanism to develop a 3D human pose machine, which jointly integrates the 2D spatial relationship, temporal smoothness of predictions and 3D geometric knowledge. Extensive evaluations demonstrate the superior performance and efficiency of our framework over all the compared competing methods.

SNAS: Stochastic Neural Architecture Search

Jan 12, 2019

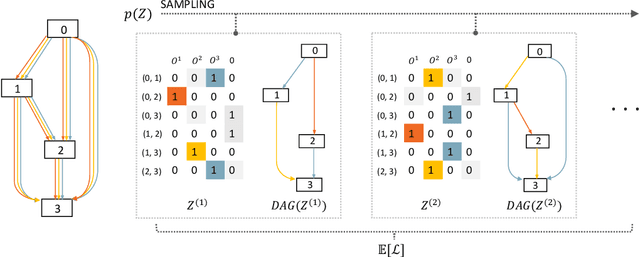

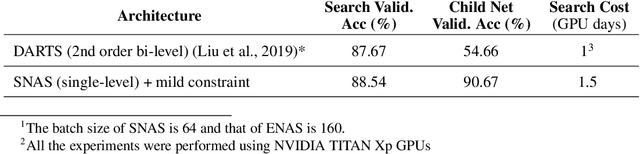

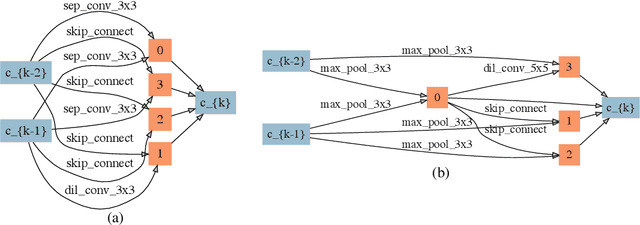

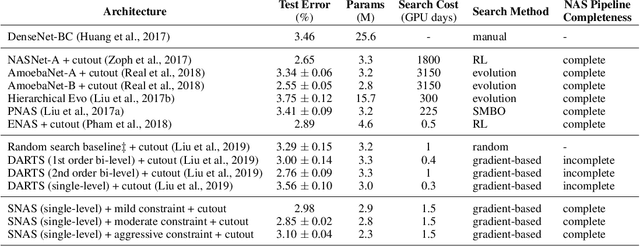

Abstract: We propose Stochastic Neural Architecture Search (SNAS), an economical end-to-end solution to Neural Architecture Search (NAS) that trains neural operation parameters and architecture distribution parameters in the same round of back-propagation, while maintaining the completeness and differentiability of the NAS pipeline. In this work, NAS is reformulated as an optimization problem on the parameters of a joint distribution for the search space in a cell. To leverage the gradient information in a generic differentiable loss for architecture search, a novel search gradient is proposed. We prove that this search gradient optimizes the same objective as reinforcement-learning-based NAS, but assigns credit to structural decisions more efficiently. This credit assignment is further augmented with a locally decomposable reward to enforce a resource-efficient constraint. In experiments on CIFAR-10, SNAS takes fewer epochs to find a cell architecture with state-of-the-art accuracy than non-differentiable evolution-based and reinforcement-learning-based NAS, and the architecture is also transferable to ImageNet. It is also shown that child networks of SNAS can maintain the validation accuracy reached during searching, whereas attention-based NAS requires parameter retraining to compete, which shows potential for efficient NAS on big datasets.

NADPEx: An on-policy temporally consistent exploration method for deep reinforcement learning

Dec 24, 2018

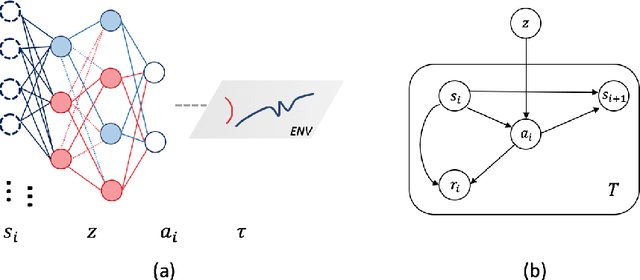

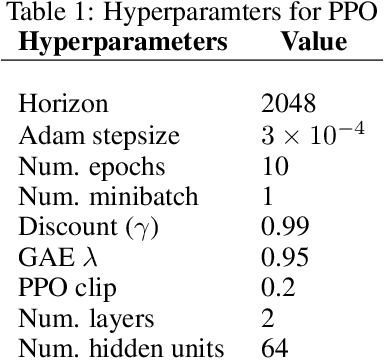

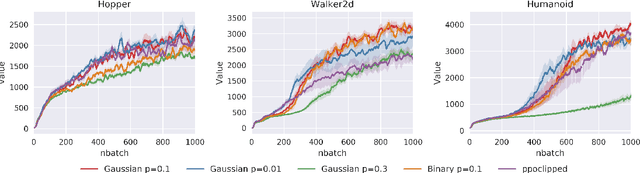

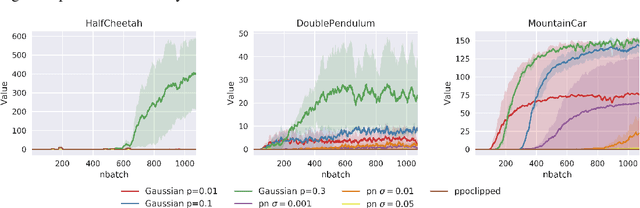

Abstract: Reinforcement learning agents need exploratory behaviors to escape from local optima. These behaviors may include both immediate dithering perturbation and temporally consistent exploration. To achieve these, a stochastic policy model that is inherently consistent over a period of time is desirable, especially for tasks with either sparse rewards or long-term information. In this work, we introduce a novel on-policy temporally consistent exploration strategy - Neural Adaptive Dropout Policy Exploration (NADPEx) - for deep reinforcement learning agents. Modeled as a global random variable for the conditional distribution, dropout is incorporated into reinforcement learning policies, equipping them with inherent temporal consistency, even when the reward signals are sparse. Two factors, the gradients' alignment with the objective and a KL constraint in policy space, are discussed to guarantee the stable improvement of the NADPEx policy. Our experiments demonstrate that NADPEx solves tasks with sparse rewards where naive exploration and parameter noise fail. It also yields comparable or even faster convergence on the standard MuJoCo benchmark for continuous control.

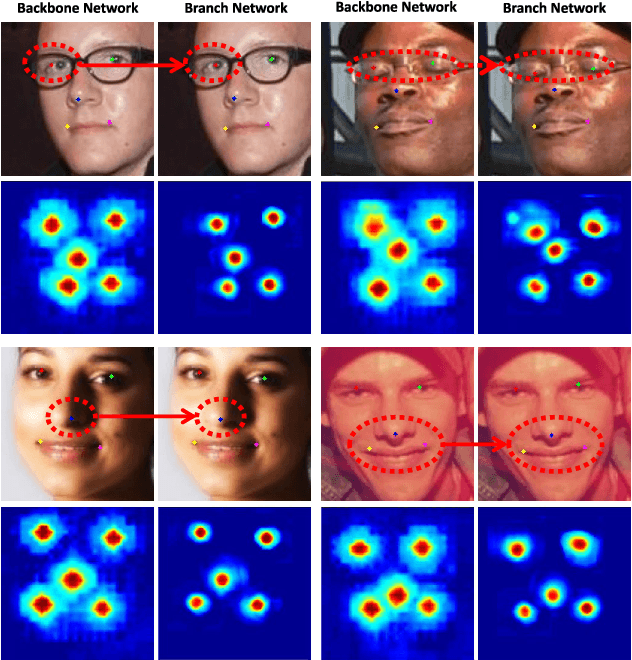

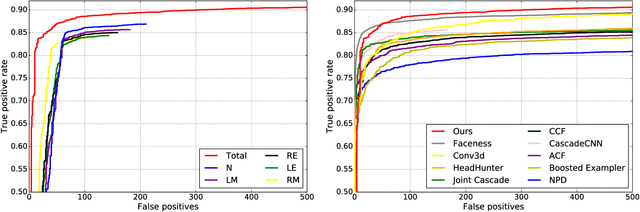

Facial Landmark Machines: A Backbone-Branches Architecture with Progressive Representation Learning

Dec 10, 2018

Abstract: Facial landmark localization plays a critical role in face recognition and analysis. In this paper, we propose a novel cascaded backbone-branches fully convolutional neural network (BB-FCN) for rapidly and accurately localizing facial landmarks in unconstrained and cluttered settings. Our proposed BB-FCN generates facial landmark response maps directly from raw images without any preprocessing. BB-FCN follows a coarse-to-fine cascaded pipeline, which consists of a backbone network for roughly detecting the locations of all facial landmarks and one branch network for each type of detected landmark for further refining their locations. Furthermore, to facilitate facial landmark localization under unconstrained settings, we propose a large-scale benchmark named SYSU16K, which contains 16000 faces with large variations in pose, expression, illumination and resolution. Extensive experimental evaluations demonstrate that our proposed BB-FCN can significantly outperform the state-of-the-art under both constrained (i.e., within detected facial regions only) and unconstrained settings. We further confirm that high-quality facial landmarks localized with our proposed network can also improve the precision and recall of face detection.

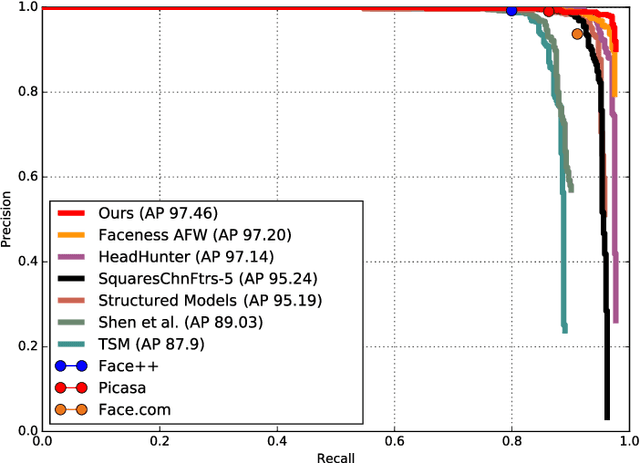

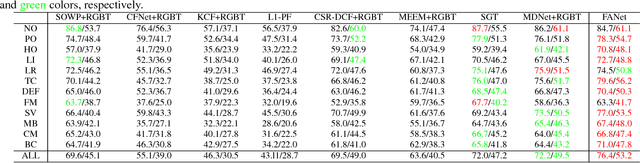

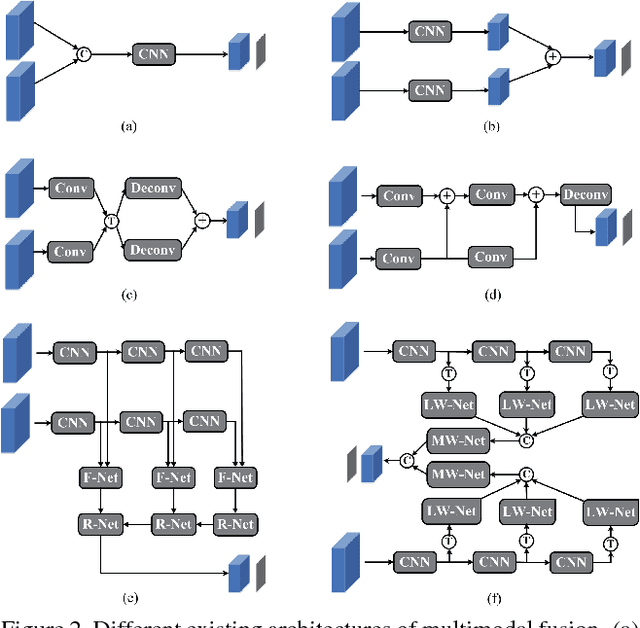

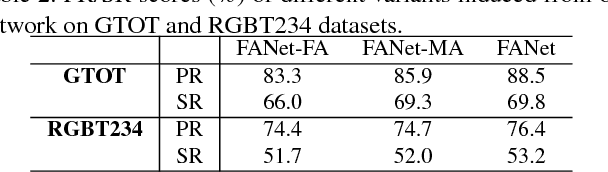

FANet: Quality-Aware Feature Aggregation Network for RGB-T Tracking

Nov 24, 2018

Abstract: This paper investigates how to perform robust visual tracking in adverse and challenging conditions using complementary visual and thermal infrared data (RGB-T tracking). We propose a novel deep network architecture, the quality-aware Feature Aggregation Network (FANet), to achieve quality-aware aggregation of both hierarchical features and multimodal information for robust online RGB-T tracking. Unlike existing works that directly concatenate hierarchical deep features, our FANet learns the layer weights to adaptively aggregate them, in order to handle significant appearance changes caused by deformation, abrupt motion, background clutter and occlusion within each modality. Moreover, we employ max pooling, interpolation upsampling and convolution to transform these hierarchical and multi-resolution features into a uniform space at the same resolution for more effective feature aggregation. Across modalities, we design a multimodal aggregation sub-network to integrate all modalities collaboratively based on the predicted reliability degrees. Extensive experiments on large-scale benchmark datasets demonstrate that our FANet significantly outperforms other state-of-the-art RGB-T tracking methods.

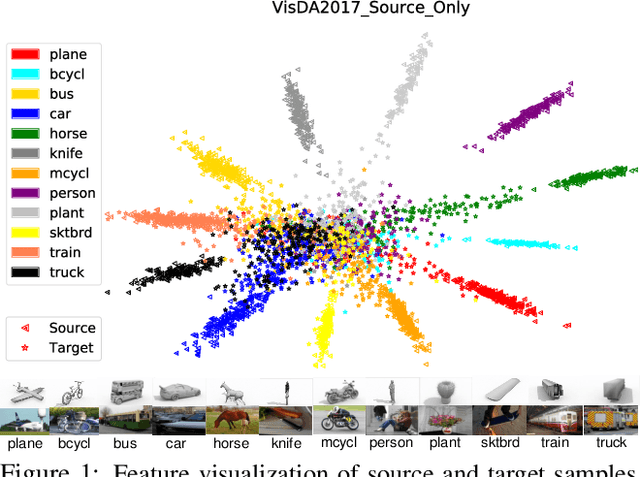

Unsupervised Domain Adaptation: An Adaptive Feature Norm Approach

Nov 19, 2018

Abstract: Unsupervised domain adaptation aims to mitigate the domain shift when transferring knowledge from a supervised source domain to an unsupervised target domain. Adversarial Feature Alignment has been successfully explored to minimize the domain discrepancy. However, existing methods usually struggle to optimize mixed learning objectives and are vulnerable to negative transfer when the two domains do not share an identical label space. In this paper, we empirically reveal that the erratic discrimination of the target domain is mainly reflected in its much lower feature norm value with respect to that of the source domain. We present a non-parametric Adaptive Feature Norm (AFN) approach, which is independent of the association between the label spaces of the two domains. We demonstrate that adapting the feature norms of the source and target domains to achieve equilibrium over a large range of values can result in significant domain transfer gains. Without bells and whistles but a few lines of code, our method largely lifts the discrimination of the target domain (23.7% from the Source Only in VisDA2017) and achieves the new state of the art under the vanilla setting. Furthermore, as our approach does not require deliberately aligning the feature distributions, it is robust to negative transfer and can outperform the existing approaches under the partial setting by an extremely large margin (9.8% on Office-Home and 14.1% on VisDA2017). Code is available at https://github.com/jihanyang/AFN. We are responsible for the reproducibility of our method.
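
The abstract does not spell out the loss, so the following is only a minimal sketch of the feature-norm idea, assuming that adaptation is done by pulling the mean L2 norm of each domain's features toward one shared scalar. The function name `feature_norm_penalty`, the target value `R` and the toy batches are hypothetical illustrations, not taken from the authors' code at the URL above.

```python
import math


def feature_norm_penalty(features, target_norm):
    """Squared gap between the batch-mean L2 feature norm and target_norm.

    Assumption for illustration only: both domains are pulled toward the
    same scalar norm; the actual AFN objective may differ.
    """
    norms = [math.sqrt(sum(x * x for x in f)) for f in features]
    mean_norm = sum(norms) / len(norms)
    return (mean_norm - target_norm) ** 2


# Toy batches: the target features have much smaller norms than the source
# features, mirroring the observation stated in the abstract.
source_batch = [[3.0, 4.0], [6.0, 8.0]]
target_batch = [[0.3, 0.4], [0.6, 0.8]]

R = 25.0  # hypothetical shared norm that both domains are adapted toward
source_penalty = feature_norm_penalty(source_batch, R)
target_penalty = feature_norm_penalty(target_batch, R)
total_penalty = source_penalty + target_penalty
```

In this sketch the penalty is larger for the low-norm target batch, so minimizing it alongside a classification loss would push the target features hardest, without any explicit alignment of the two feature distributions.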

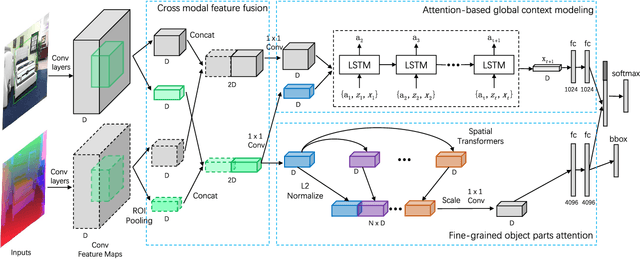

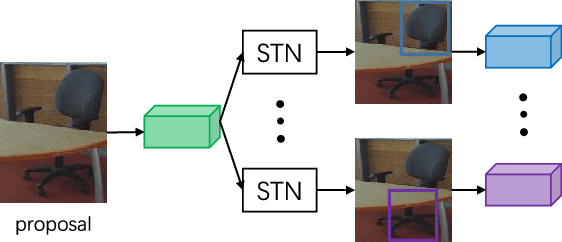

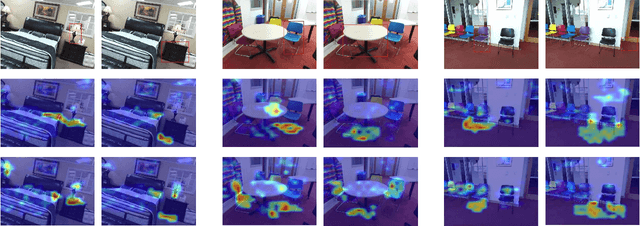

Cross-Modal Attentional Context Learning for RGB-D Object Detection

Oct 30, 2018

Abstract: Recognizing objects from simultaneously sensed photometric (RGB) and depth channels is a fundamental yet practical problem in many machine vision applications such as robot grasping and autonomous driving. In this paper, we address this problem by developing a Cross-Modal Attentional Context (CMAC) learning framework, which enables the full exploitation of the context information from both RGB and depth data. Compared to existing RGB-D object detection frameworks, our approach has several appealing properties. First, it consists of an attention-based global context model for exploiting adaptive contextual information and incorporating this information into a region-based CNN (e.g., Fast RCNN) framework to achieve improved object detection performance. Second, our CMAC framework further contains a fine-grained object part attention module to harness multiple discriminative object parts inside each possible object region for superior local feature representation. While greatly improving the accuracy of RGB-D object detection, the effective cross-modal information fusion as well as attentional context modeling in our proposed model provide an interpretable visualization scheme. Experimental results demonstrate that the proposed method significantly improves upon the state of the art on all public benchmarks.

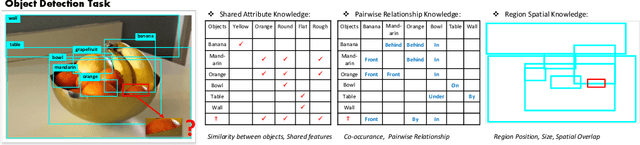

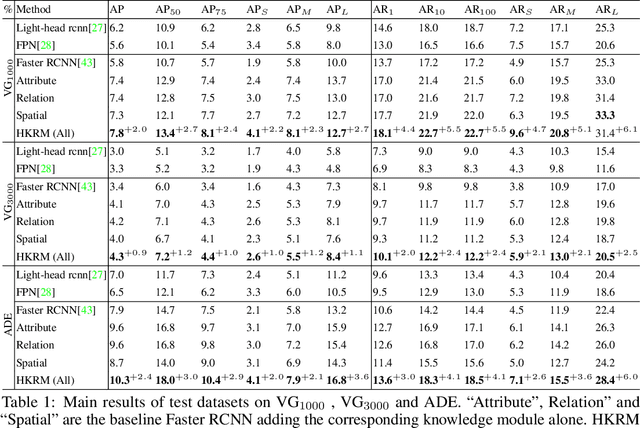

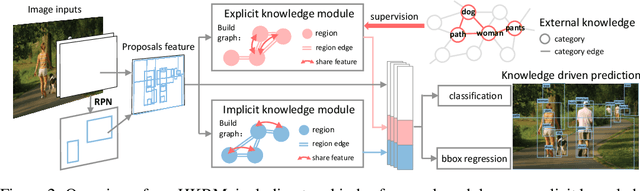

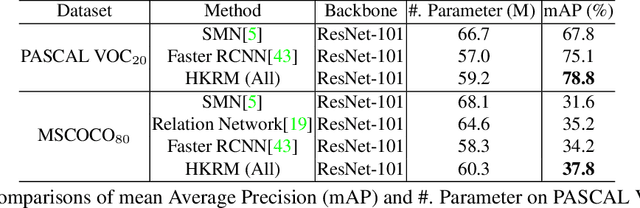

Hybrid Knowledge Routed Modules for Large-scale Object Detection

Oct 30, 2018

Abstract: The dominant object detection approaches treat the recognition of each region separately and overlook crucial semantic correlations between objects in one scene. This paradigm leads to a substantial performance drop when facing heavy long-tail problems, where very few samples are available for rare classes and plenty of confusing categories exist. We exploit diverse human commonsense knowledge for reasoning over large-scale object categories and reaching semantic coherency within one image. In particular, we present Hybrid Knowledge Routed Modules (HKRM) that incorporate the reasoning routed by two kinds of knowledge forms: an explicit knowledge module for structured constraints that are summarized with linguistic knowledge (e.g. shared attributes, relationships) about concepts; and an implicit knowledge module that depicts some implicit constraints (e.g. common spatial layouts). By functioning over a region-to-region graph, both modules can be individualized and adapted to coordinate with visual patterns in each image, guided by specific knowledge forms. HKRM are light-weight, general-purpose and extensible, easily incorporating multiple knowledge forms to endow any detection network with the ability of global semantic reasoning. Experiments on large-scale object detection benchmarks show that HKRM obtains around 34.5% improvement on VisualGenome (1000 categories) and 30.4% on ADE in terms of mAP. Code and trained models can be found at https://github.com/chanyn/HKRM.

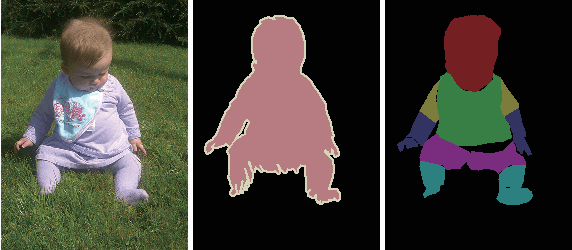

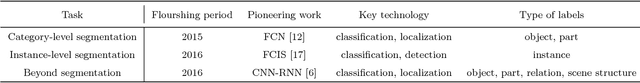

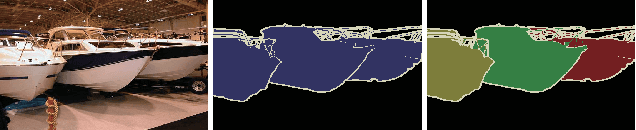

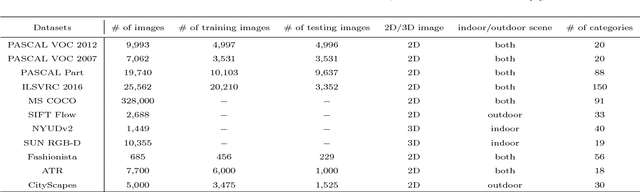

Learning Deep Representations for Semantic Image Parsing: a Comprehensive Overview

Oct 10, 2018

Abstract: Semantic image parsing, which refers to the process of decomposing images into semantic regions and constructing the structure representation of the input, has recently aroused widespread interest in the field of computer vision. The recent application of deep representation learning has driven this field into a new stage of development. In this paper, we summarize three aspects of the progress of research on semantic image parsing, i.e., category-level semantic segmentation, instance-level semantic segmentation, and beyond segmentation. Specifically, we first review the general frameworks for each task and introduce the relevant variants. The advantages and limitations of each method are also discussed. Moreover, we present a comprehensive comparison of different benchmark datasets and evaluation metrics. Finally, we explore the future trends and challenges of semantic image parsing.
