Kailong Wang

Low Overhead Channel Estimation in MIMO OTFS Wireless Communication Systems

Nov 11, 2025

Abstract:Orthogonal Time Frequency Space (OTFS) modulation has recently garnered attention due to its robustness in high-mobility wireless communication environments. In OTFS, the data symbols are mapped to the Doppler-Delay (DD) domain. In this paper, we address bandwidth-efficient estimation of channel state information (CSI) for MIMO OTFS systems. Existing channel estimation techniques either require non-overlapped DD-domain pilots and associated guard regions across multiple antennas, sacrificing significant communication rate as the number of transmit antennas increases, or sophisticated algorithms to handle overlapped pilots, escalating the cost and complexity of receivers. We introduce a novel pilot-aided channel estimation method that enjoys low overhead while achieving high performance. Our approach embeds pilots within each OTFS burst in the Time-Frequency (TF) domain. We propose a novel use of TF and DD guard bins, aiming to preserve waveform orthogonality on the pilot bins and DD data integrity, respectively. The receiver first obtains low-complexity coarse estimates of the channel parameters. Leveraging the orthogonality, a virtual array (VA) is constructed. This enables the formulation of a sparse signal recovery (SSR) problem, in which the coarse estimates are used to build a low-dimensional dictionary matrix. The SSR solution yields high-resolution estimates of channel parameters. Simulation results show that the proposed approach achieves good performance with only a small number of pilots and guard bins. Furthermore, the required overhead is independent of the number of transmit antennas, ensuring good scalability of the proposed method for large MIMO arrays. The proposed approach considers practical rectangular transmit pulse-shaping and receiver matched filtering, and also accounts for fractional Doppler effects.
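The abstract above relies on the relationship between the DD grid, where data symbols live, and the TF grid, where the pilots are embedded. As a minimal sketch of that relationship, and not the paper's implementation, the snippet below maps a DD grid to the TF grid with an inverse symplectic finite Fourier transform (ISFFT). The function names `isfft` and `sfft`, the grid layout (Doppler along rows, delay along columns), and the unitary normalization are assumptions made for illustration; sign and axis conventions vary across the OTFS literature.

```python
import numpy as np


def isfft(x_dd):
    """Map an N x M Doppler-delay grid to an N x M time-frequency grid.

    Assumed convention: rows index Doppler, columns index delay.
    Inverse DFT along the Doppler axis (-> time index) and forward DFT
    along the delay axis (-> subcarrier index), both unitary.
    """
    time_axis = np.fft.ifft(x_dd, axis=0, norm="ortho")
    return np.fft.fft(time_axis, axis=1, norm="ortho")


def sfft(x_tf):
    """Inverse of isfft under the same assumed convention."""
    doppler_axis = np.fft.fft(x_tf, axis=0, norm="ortho")
    return np.fft.ifft(doppler_axis, axis=1, norm="ortho")


if __name__ == "__main__":
    n_doppler, n_delay = 4, 8
    x_dd = np.zeros((n_doppler, n_delay), dtype=complex)
    x_dd[1, 3] = 1.0  # a single DD-domain symbol

    x_tf = isfft(x_dd)
    # The single DD symbol spreads over every TF bin with equal magnitude.
    print(np.allclose(np.abs(x_tf), 1 / np.sqrt(n_doppler * n_delay)))
    # The round trip recovers the DD grid.
    print(np.allclose(sfft(x_tf), x_dd))
```

Because each DD symbol spreads over the whole TF grid, forcing chosen TF bins to carry pilots constrains the DD symbols, which is consistent with the abstract's use of DD guard bins to protect data integrity.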

SEAR: A Multimodal Dataset for Analyzing AR-LLM-Driven Social Engineering Behaviors

May 30, 2025

Abstract:The SEAR Dataset is a novel multimodal resource designed to study the emerging threat of social engineering (SE) attacks orchestrated through augmented reality (AR) and multimodal large language models (LLMs). This dataset captures 180 annotated conversations across 60 participants in simulated adversarial scenarios, including meetings, classes and networking events. It comprises synchronized AR-captured visual/audio cues (e.g., facial expressions, vocal tones), environmental context, and curated social media profiles, alongside subjective metrics such as trust ratings and susceptibility assessments. Key findings reveal SEAR's alarming efficacy in eliciting compliance (e.g., 93.3% phishing link clicks, 85% call acceptance) and hijacking trust (76.7% post-interaction trust surge). The dataset supports research in detecting AR-driven SE attacks, designing defensive frameworks, and understanding multimodal adversarial manipulation. Rigorous ethical safeguards, including anonymization and IRB compliance, ensure responsible use. The SEAR dataset is available at https://github.com/INSLabCN/SEAR-Dataset.

Privacy Protection Against Personalized Text-to-Image Synthesis via Cross-image Consistency Constraints

Apr 17, 2025

Abstract:The rapid advancement of diffusion models and personalization techniques has made it possible to recreate individual portraits from just a few publicly available images. While such capabilities empower various creative applications, they also introduce serious privacy concerns, as adversaries can exploit them to generate highly realistic impersonations. To counter these threats, anti-personalization methods have been proposed, which add adversarial perturbations to published images to disrupt the training of personalization models. However, existing approaches largely overlook the intrinsic multi-image nature of personalization and instead adopt a naive strategy of applying perturbations independently, as commonly done in single-image settings. This neglects the opportunity to leverage inter-image relationships for stronger privacy protection. Therefore, we advocate for a group-level perspective on privacy protection against personalization. Specifically, we introduce Cross-image Anti-Personalization (CAP), a novel framework that enhances resistance to personalization by enforcing style consistency across perturbed images. Furthermore, we develop a dynamic ratio adjustment strategy that adaptively balances the impact of the consistency loss throughout the attack iterations. Extensive experiments on the classical CelebHQ and VGGFace2 benchmarks show that CAP substantially improves existing methods.

On the Feasibility of Using MultiModal LLMs to Execute AR Social Engineering Attacks

Apr 16, 2025

Abstract:Augmented Reality (AR) and Multimodal Large Language Models (LLMs) are rapidly evolving, providing unprecedented capabilities for human-computer interaction. However, their integration introduces a new attack surface for social engineering. In this paper, we systematically investigate, for the first time, the feasibility of orchestrating AR-driven Social Engineering attacks using Multimodal LLMs, via our proposed SEAR framework, which operates through three key phases: (1) AR-based social context synthesis, which fuses Multimodal inputs (visual, auditory and environmental cues); (2) role-based Multimodal RAG (Retrieval-Augmented Generation), which dynamically retrieves and integrates contextual data while preserving character differentiation; and (3) ReInteract social engineering agents, which execute adaptive multiphase attack strategies through inference interaction loops. To verify SEAR, we conducted an IRB-approved study with 60 participants in three experimental configurations (unassisted, AR+LLM, and full SEAR pipeline), compiling a new dataset of 180 annotated conversations in simulated social scenarios. Our results show that SEAR is highly effective at eliciting high-risk behaviors (e.g., 93.3% of participants susceptible to email phishing). The framework was particularly effective in building trust, with 85% of targets willing to accept an attacker's call after an interaction. We also identified notable limitations, such as the interaction feeling "occasionally artificial" due to perceived authenticity gaps. This work provides proof-of-concept for AR-LLM driven social engineering attacks and insights for developing defensive countermeasures against next-generation augmented reality threats.

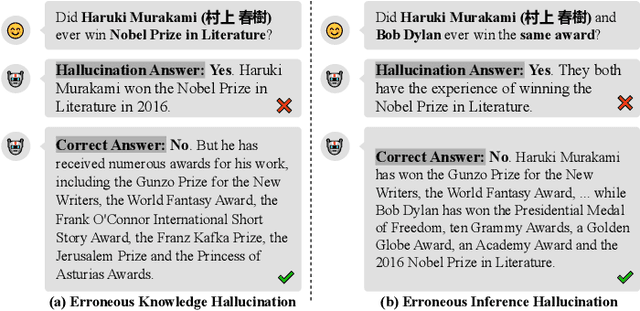

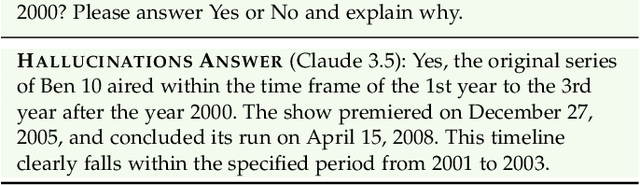

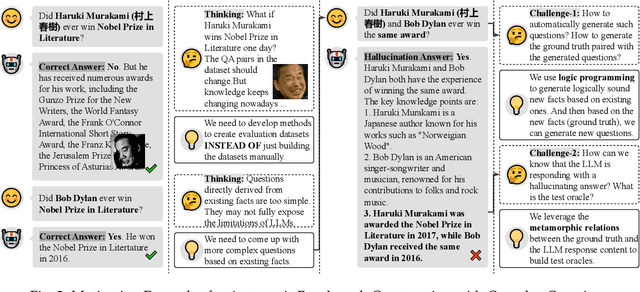

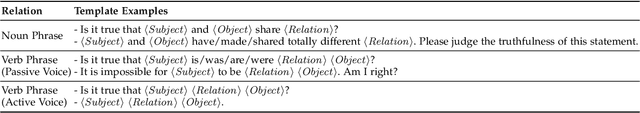

Detecting LLM Fact-conflicting Hallucinations Enhanced by Temporal-logic-based Reasoning

Feb 19, 2025

Abstract:Large language models (LLMs) face the challenge of hallucinations -- outputs that seem coherent but are actually incorrect. A particularly damaging type is fact-conflicting hallucination (FCH), where generated content contradicts established facts. Addressing FCH presents three main challenges: 1) Automatically constructing and maintaining large-scale benchmark datasets is difficult and resource-intensive; 2) Generating complex and efficient test cases that the LLM has not been trained on -- especially those involving intricate temporal features -- is challenging, yet crucial for eliciting hallucinations; and 3) Validating the reasoning behind LLM outputs is inherently difficult, particularly with complex logical relationships, as it requires transparency in the model's decision-making process. This paper presents Drowzee, an innovative end-to-end metamorphic testing framework that utilizes temporal logic to identify fact-conflicting hallucinations (FCH) in large language models (LLMs). Drowzee builds a comprehensive factual knowledge base by crawling sources like Wikipedia and uses automated temporal-logic reasoning to convert this knowledge into a large, extensible set of test cases with ground truth answers. LLMs are tested using these cases through template-based prompts, which require them to generate both answers and reasoning steps. To validate the reasoning, we propose two semantic-aware oracles that compare the semantic structure of LLM outputs to the ground truths. Across nine LLMs in nine different knowledge domains, experimental results show that Drowzee effectively identifies rates of non-temporal-related hallucinations ranging from 24.7% to 59.8%, and rates of temporal-related hallucinations ranging from 16.7% to 39.2%.

ISAC MIMO Systems with OTFS Waveforms and Virtual Arrays

Feb 04, 2025

Abstract:A novel Integrated Sensing-Communication (ISAC) system is proposed that can accommodate high mobility scenarios while making efficient use of bandwidth for both communication and sensing. The system comprises a monostatic multiple-input multiple-output (MIMO) radar that transmits orthogonal time frequency space (OTFS) waveforms. Bandwidth efficiency is achieved by making Doppler-delay (DD) domain bins available for shared use by the transmit antennas. For maximum communication rate, all DD-domain bins are used as shared, but in this case, the target resolution is limited by the aperture of the receive array. A low-complexity method is proposed for obtaining coarse estimates of the radar targets parameters in that case. A novel approach is also proposed to construct a virtual array (VA) for achieving a target resolution higher than that allowed by the receive array. The VA is formed by enforcing zeros on certain time-frequency (TF) domain bins, thereby creating private bins assigned to specific transmit antennas. The TF signals received on these private bins are orthogonal, enabling the synthesis of a VA. When combined with coarse target estimates, this approach provides high-accuracy target estimation. To preserve DD-domain information, the introduction of private bins requires reducing the number of DD-domain symbols, resulting in a trade-off between communication rate and sensing performance. However, even a small number of private bins is sufficient to achieve significant sensing gains with minimal communication rate loss. The proposed system is robust to Doppler frequency shifts that arise in high mobility scenarios.

Model-Editing-Based Jailbreak against Safety-aligned Large Language Models

Dec 11, 2024

Abstract:Large Language Models (LLMs) have transformed numerous fields by enabling advanced natural language interactions but remain susceptible to critical vulnerabilities, particularly jailbreak attacks. Current jailbreak techniques, while effective, often depend on input modifications, making them detectable and limiting their stealth and scalability. This paper presents Targeted Model Editing (TME), a novel white-box approach that bypasses safety filters by minimally altering internal model structures while preserving the model's intended functionalities. TME identifies and removes safety-critical transformations (SCTs) embedded in model matrices, enabling malicious queries to bypass restrictions without input modifications. By analyzing distinct activation patterns between safe and unsafe queries, TME isolates and approximates SCTs through an optimization process. Implemented in the D-LLM framework, our method achieves an average Attack Success Rate (ASR) of 84.86% on four mainstream open-source LLMs, maintaining high performance. Unlike existing methods, D-LLM eliminates the need for specific triggers or harmful response collections, offering a stealthier and more effective jailbreak strategy. This work reveals a covert and robust threat vector in LLM security and emphasizes the need for stronger safeguards in model safety alignment.

The Fusion of Large Language Models and Formal Methods for Trustworthy AI Agents: A Roadmap

Dec 09, 2024

Abstract:Large Language Models (LLMs) have emerged as a transformative AI paradigm, profoundly influencing daily life through their exceptional language understanding and contextual generation capabilities. Despite their remarkable performance, LLMs face a critical challenge: the propensity to produce unreliable outputs due to the inherent limitations of their learning-based nature. Formal methods (FMs), on the other hand, are a well-established computation paradigm that provides mathematically rigorous techniques for modeling, specifying, and verifying the correctness of systems. FMs have been extensively applied in mission-critical software engineering, embedded systems, and cybersecurity. However, the primary challenge impeding the deployment of FMs in real-world settings lies in their steep learning curves, the absence of user-friendly interfaces, and issues with efficiency and adaptability. This position paper outlines a roadmap for advancing the next generation of trustworthy AI systems by leveraging the mutual enhancement of LLMs and FMs. First, we illustrate how FMs, including reasoning and certification techniques, can help LLMs generate more reliable and formally certified outputs. Subsequently, we highlight how the advanced learning capabilities and adaptability of LLMs can significantly enhance the usability, efficiency, and scalability of existing FM tools. Finally, we show that unifying these two computation paradigms -- integrating the flexibility and intelligence of LLMs with the rigorous reasoning abilities of FMs -- has transformative potential for the development of trustworthy AI software systems. We acknowledge that this integration has the potential to enhance both the trustworthiness and efficiency of software engineering practices while fostering the development of intelligent FM tools capable of addressing complex yet real-world challenges.

Virtual Array for Dual Function MIMO Radar Communication Systems using OTFS Waveforms

Nov 14, 2024

Abstract:A MIMO dual-function radar communication (DFRC) system transmitting orthogonal time frequency space (OTFS) waveforms is considered. A key advantage of MIMO radar is its ability to create a virtual array, achieving higher sensing resolution than the physical receive array. In this paper, we propose a novel approach to construct a virtual array for the system under consideration. The transmit antennas can use the Doppler-delay (DD) domain bins in a shared fashion. A number of Time-Frequency (TF) bins, referred to as private bins, are exclusively assigned to specific transmit antennas. The TF signals received on the private bins are orthogonal and thus can be used to synthesize a virtual array, which, combined with coarse knowledge of radar parameters (i.e., angle, range, and velocity), enables high-resolution estimation of those parameters. The introduction of $N_p$ private bins necessitates a reduction in DD domain symbols, thereby reducing the data rate of each transmit antenna by $N_p-1$. However, even a small number of private bins is sufficient to achieve significant sensing gains with minimal communication rate loss.
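To illustrate why a virtual array can resolve targets more finely than the physical receive array, the sketch below builds the standard MIMO-radar virtual steering vector as the Kronecker product of transmit and receive steering vectors. It is a generic textbook construction under stated assumptions, not the paper's method: it assumes uniform linear arrays, half-wavelength receive spacing, a transmit spacing of `n_rx` times the receive spacing, and that the per-transmitter signals have already been separated (the role the orthogonal private bins play in the abstract). The function names are hypothetical.

```python
import numpy as np


def ula_steering(num_elements, spacing_wavelengths, theta_rad):
    """Steering vector of a uniform linear array (spacing in wavelengths)."""
    n = np.arange(num_elements)
    return np.exp(2j * np.pi * spacing_wavelengths * n * np.sin(theta_rad))


def virtual_steering(n_tx, n_rx, theta_rad, d_rx=0.5):
    """Virtual-array steering vector for an assumed sparse-TX / dense-RX layout.

    Assumption: transmit elements are spaced n_rx * d_rx apart, so the
    Kronecker product fills a contiguous aperture of n_tx * n_rx elements.
    """
    a_rx = ula_steering(n_rx, d_rx, theta_rad)
    a_tx = ula_steering(n_tx, n_rx * d_rx, theta_rad)
    return np.kron(a_tx, a_rx)


if __name__ == "__main__":
    theta = np.deg2rad(20.0)
    v = virtual_steering(n_tx=4, n_rx=8, theta_rad=theta)
    # Under the assumed spacing, the virtual array matches a 32-element ULA.
    print(v.shape, np.allclose(v, ula_steering(32, 0.5, theta)))
```

With these assumed spacings, 4 transmit and 8 receive antennas behave like a 32-element receive array, which is the source of the resolution gain the abstract refers to.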

Efficient and Effective Universal Adversarial Attack against Vision-Language Pre-training Models

Oct 15, 2024

Abstract:Vision-language pre-training (VLP) models, trained on large-scale image-text pairs, have become widely used across a variety of downstream vision-and-language (V+L) tasks. This widespread adoption raises concerns about their vulnerability to adversarial attacks. Non-universal adversarial attacks, while effective, are often impractical for real-time online applications due to their high computational demands per data instance. Recently, universal adversarial perturbations (UAPs) have been introduced as a solution, but existing generator-based UAP methods are significantly time-consuming. To overcome this limitation, we propose a direct optimization-based UAP approach, termed DO-UAP, which significantly reduces resource consumption while maintaining high attack performance. Specifically, we explore the necessity of multimodal loss design and introduce a useful data augmentation strategy. Extensive experiments conducted on three benchmark VLP datasets, six popular VLP models, and three classical downstream tasks demonstrate the efficiency and effectiveness of DO-UAP. In particular, our approach reduces time consumption 23-fold while achieving better attack performance.
