Ting Bi

SEAR: A Multimodal Dataset for Analyzing AR-LLM-Driven Social Engineering Behaviors

May 30, 2025

Abstract: The SEAR Dataset is a novel multimodal resource designed to study the emerging threat of social engineering (SE) attacks orchestrated through augmented reality (AR) and multimodal large language models (LLMs). This dataset captures 180 annotated conversations across 60 participants in simulated adversarial scenarios, including meetings, classes and networking events. It comprises synchronized AR-captured visual/audio cues (e.g., facial expressions, vocal tones), environmental context, and curated social media profiles, alongside subjective metrics such as trust ratings and susceptibility assessments. Key findings reveal SEAR's alarming efficacy in eliciting compliance (e.g., 93.3% phishing link clicks, 85% call acceptance) and hijacking trust (76.7% post-interaction trust surge). The dataset supports research in detecting AR-driven SE attacks, designing defensive frameworks, and understanding multimodal adversarial manipulation. Rigorous ethical safeguards, including anonymization and IRB compliance, ensure responsible use. The SEAR dataset is available at https://github.com/INSLabCN/SEAR-Dataset.

On the Feasibility of Using MultiModal LLMs to Execute AR Social Engineering Attacks

Apr 16, 2025

Abstract: Augmented Reality (AR) and Multimodal Large Language Models (LLMs) are rapidly evolving, providing unprecedented capabilities for human-computer interaction. However, their integration introduces a new attack surface for social engineering. In this paper, we present the first systematic investigation of the feasibility of orchestrating AR-driven social engineering attacks using multimodal LLMs. Our proposed SEAR framework operates through three key phases: (1) AR-based social context synthesis, which fuses multimodal inputs (visual, auditory and environmental cues); (2) role-based multimodal RAG (Retrieval-Augmented Generation), which dynamically retrieves and integrates contextual data while preserving character differentiation; and (3) ReInteract social engineering agents, which execute adaptive multiphase attack strategies through inference interaction loops. To evaluate SEAR, we conducted an IRB-approved study with 60 participants in three experimental configurations (unassisted, AR+LLM, and full SEAR pipeline), compiling a new dataset of 180 annotated conversations in simulated social scenarios. Our results show that SEAR is highly effective at eliciting high-risk behaviors (e.g., 93.3% of participants were susceptible to email phishing). The framework was particularly effective in building trust, with 85% of targets willing to accept an attacker's call after an interaction. We also identified notable limitations, such as interactions being perceived as "occasionally artificial" due to authenticity gaps. This work provides a proof of concept for AR-LLM-driven social engineering attacks and insights for developing defensive countermeasures against next-generation augmented reality threats.

A Map-matching Algorithm with Extraction of Multi-group Information for Low-frequency Data

Sep 18, 2022

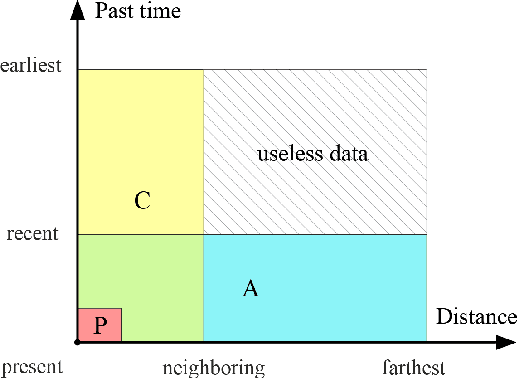

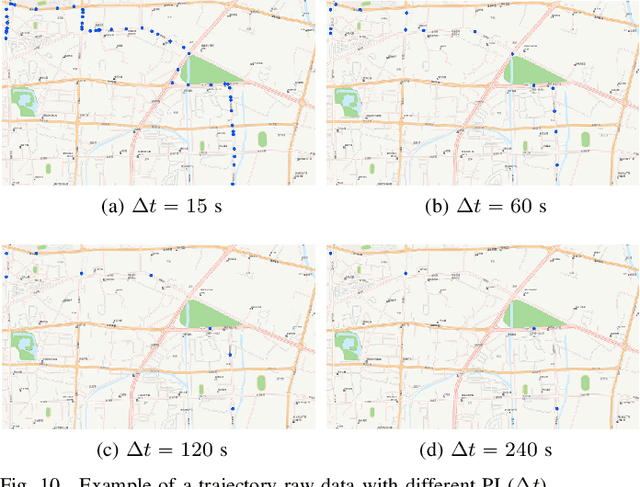

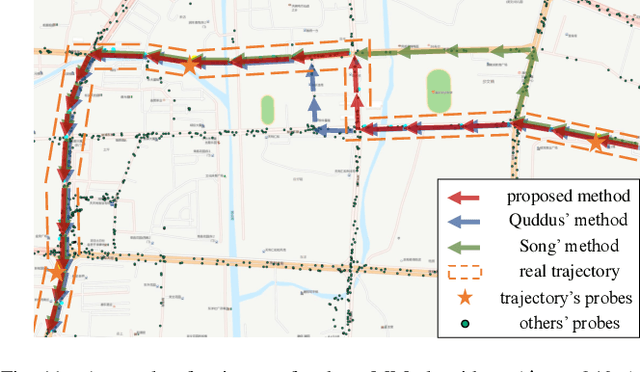

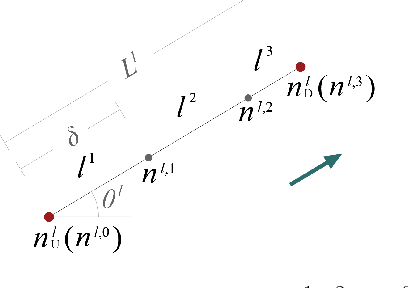

Abstract: The growing use of probe vehicles generates a huge amount of GNSS data. Because satellite positioning technology is limited in accuracy, further improving map-matching accuracy is challenging, especially for low-frequency trajectories. When matching a trajectory, the ego vehicle's spatial-temporal information from the present trip is the most useful and requires the least data. In addition, there is a large amount of other data, e.g., other vehicles' states and past prediction results, but it is hard to extract useful information from it for map matching and path inference. Most map-matching studies use only the ego vehicle's data and ignore other vehicles' data. To address this gap, this paper designs a new map-matching method that makes full use of "Big data". We first sort all data into four groups according to their spatial and temporal distance from the probe currently being matched, which allows us to rank them by usefulness. Then we design three different methods to extract valuable information (scores) from them: a score for speed and bearing, a score for historical usage, and a score for traffic state using the spectral graph Markov neural network. Finally, we use a modified top-K shortest-path method to search for candidate paths within an elliptical region and then use the fused score to infer the path (projected location). We test the proposed method against baseline algorithms using a real-world dataset from China. The results show that all scoring methods can enhance map-matching accuracy. Furthermore, our method outperforms the others, especially when the GNSS probing frequency is less than 0.01 Hz.
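The final step of the abstract (restricting candidate paths to an elliptical region, then ranking them by a fused score) can be illustrated with a minimal sketch. Everything specific here is an assumption, not the paper's method: the ellipse is taken to have two consecutive GNSS probes as foci, coordinates are planar, the fusion is an equal-weight sum of the three scores, and the names `in_ellipse`, `fused_score`, and `best_candidate` are hypothetical.

```python
import math


def in_ellipse(point, focus_a, focus_b, major_axis):
    """Return True if `point` lies inside the ellipse defined by two foci
    and a major-axis length (sum of distances to the foci <= major axis)."""
    return math.dist(point, focus_a) + math.dist(point, focus_b) <= major_axis


def fused_score(speed_bearing, historical, traffic, weights=(1 / 3, 1 / 3, 1 / 3)):
    """Hypothetical fusion rule: a weighted sum of the three scores.
    The abstract does not state how the scores are actually fused."""
    w_sb, w_hist, w_traffic = weights
    return w_sb * speed_bearing + w_hist * historical + w_traffic * traffic


def best_candidate(candidates, focus_a, focus_b, major_axis):
    """Keep candidate paths whose points all fall inside the ellipse,
    then return the one with the highest fused score (or None)."""
    inside = [
        c for c in candidates
        if all(in_ellipse(p, focus_a, focus_b, major_axis) for p in c["path"])
    ]
    if not inside:
        return None
    return max(inside, key=lambda c: fused_score(*c["scores"]))


# Illustrative data only: two consecutive probes as foci, three candidate paths.
probe_a, probe_b = (0.0, 0.0), (10.0, 0.0)
candidates = [
    {"name": "A", "path": [(0, 0), (5, 3), (10, 0)], "scores": (0.5, 0.5, 0.5)},
    {"name": "B", "path": [(0, 0), (5, 1), (10, 0)], "scores": (0.9, 0.8, 0.7)},
    {"name": "C", "path": [(0, 0), (5, 8), (10, 0)], "scores": (1.0, 1.0, 1.0)},
]
chosen = best_candidate(candidates, probe_a, probe_b, major_axis=14.0)
```

In this toy input, path C has the highest raw scores but leaves the ellipse, so it is discarded before ranking. A real implementation would generate the candidates with a top-K shortest-path search on the road network rather than take them as given.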
