Jie Cheng

STEP3-VL-10B Technical Report

Jan 15, 2026

Abstract: We present STEP3-VL-10B, a lightweight open-source foundation model designed to redefine the trade-off between compact efficiency and frontier-level multimodal intelligence. STEP3-VL-10B is realized through two strategic shifts: first, a unified, fully unfrozen pre-training strategy on 1.2T multimodal tokens that integrates a language-aligned Perception Encoder with a Qwen3-8B decoder to establish intrinsic vision-language synergy; and second, a scaled post-training pipeline featuring over 1k iterations of reinforcement learning. Crucially, we implement Parallel Coordinated Reasoning (PaCoRe) to scale test-time compute, allocating resources to scalable perceptual reasoning that explores and synthesizes diverse visual hypotheses. Consequently, despite its compact 10B footprint, STEP3-VL-10B rivals or surpasses models 10$\times$-20$\times$ larger (e.g., GLM-4.6V-106B, Qwen3-VL-235B) and top-tier proprietary flagships like Gemini 2.5 Pro and Seed-1.5-VL. Delivering best-in-class performance, it records 92.2% on MMBench and 80.11% on MMMU, while excelling in complex reasoning with 94.43% on AIME2025 and 75.95% on MathVision. We release the full model suite to provide the community with a powerful, efficient, and reproducible baseline.

PaCoRe: Learning to Scale Test-Time Compute with Parallel Coordinated Reasoning

Jan 09, 2026

Abstract: We introduce Parallel Coordinated Reasoning (PaCoRe), a training-and-inference framework designed to overcome a central limitation of contemporary language models: their inability to scale test-time compute (TTC) far beyond sequential reasoning under a fixed context window. PaCoRe departs from the traditional sequential paradigm by driving TTC through massive parallel exploration coordinated via a message-passing architecture in multiple rounds. Each round launches many parallel reasoning trajectories, compacts their findings into context-bounded messages, and synthesizes these messages to guide the next round and ultimately produce the final answer. Trained end-to-end with large-scale, outcome-based reinforcement learning, the model masters the synthesis abilities required by PaCoRe and scales to multi-million-token effective TTC without exceeding context limits. The approach yields strong improvements across diverse domains, and notably pushes reasoning beyond frontier systems in mathematics: an 8B model reaches 94.5% on HMMT 2025, surpassing GPT-5's 93.2% by scaling effective TTC to roughly two million tokens. We open-source model checkpoints, training data, and the full inference pipeline to accelerate follow-up work.
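
The round structure described in the abstract above can be illustrated with a small control-flow sketch. This is only an assumption-laden toy, not the released PaCoRe pipeline: `run_rounds`, `toy_explore`, `toy_compact`, and `toy_synthesize` are hypothetical names, the trajectories run sequentially rather than in parallel, and a majority vote stands in for the trained model's synthesis step.

```python
from collections import Counter
from typing import Callable, List


def run_rounds(
    problem: str,
    explore: Callable[[str, List[str], int], str],
    compact: Callable[[str, int], str],
    synthesize: Callable[[str, List[str]], str],
    rounds: int,
    width: int,
    budget: int,
) -> str:
    """Run `rounds` rounds of `width` trajectories, forwarding only compact messages."""
    messages: List[str] = []  # the first round starts with no messages
    for _ in range(rounds):
        # Each trajectory sees the problem plus the previous round's messages.
        trajectories = [explore(problem, messages, i) for i in range(width)]
        # Only bounded-size messages survive, so the next round's input stays
        # at most width * budget characters however long the trajectories were.
        messages = [compact(t, budget) for t in trajectories]
        assert all(len(m) <= budget for m in messages)
    return synthesize(problem, messages)


# Hypothetical stand-ins for the model calls (assumptions for illustration).
def toy_explore(problem: str, messages: List[str], index: int) -> str:
    guess = 41 if index == 0 else 42  # one trajectory is deliberately wrong
    return "long reasoning " * 50 + f"answer={guess}"


def toy_compact(trajectory: str, budget: int) -> str:
    return trajectory[-budget:]  # keep only the tail that holds the conclusion


def toy_synthesize(problem: str, messages: List[str]) -> str:
    votes = Counter(m.rsplit("answer=", 1)[-1] for m in messages)
    return votes.most_common(1)[0][0]


final = run_rounds("toy problem", toy_explore, toy_compact, toy_synthesize,
                   rounds=2, width=4, budget=16)
print(final)  # prints 42
```

The point of the sketch is the bookkeeping: total generated text grows with `rounds * width`, while the text carried between rounds is capped by `width * budget`.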

ZTree: A Subgroup Identification Based Decision Tree Learning Framework

Sep 16, 2025

Abstract: Decision trees are a commonly used class of machine learning models valued for their interpretability and versatility, capable of both classification and regression. We propose ZTree, a novel decision tree learning framework that replaces CART's traditional purity-based splitting with statistically principled subgroup identification. At each node, ZTree applies hypothesis testing (e.g., z-tests, t-tests, Mann-Whitney U, log-rank) to assess whether a candidate subgroup differs meaningfully from the complement. To adjust for the complication of multiple testing, we employ a cross-validation-based approach to determine if further node splitting is needed. This robust stopping criterion eliminates the need for post-pruning and makes the test threshold (z-threshold) the only parameter for controlling tree complexity. Because of the simplicity of the tree growing procedure, once a detailed tree is learned using the most lenient z-threshold, all simpler trees can be derived by simply removing nodes that do not meet the larger z-thresholds. This makes parameter tuning intuitive and efficient. Furthermore, this z-threshold is essentially a p-value, allowing users to easily plug in appropriate statistical tests into our framework without adjusting the range of parameter search. Empirical evaluation on five large-scale UCI datasets demonstrates that ZTree consistently delivers strong performance, especially in low-data regimes. Compared to CART, ZTree also tends to grow simpler trees without sacrificing performance. ZTree introduces a statistically grounded alternative to traditional decision tree splitting by leveraging hypothesis testing and a cross-validation approach to multiple testing correction, resulting in an efficient and flexible framework.
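
To make the idea of test-based splitting concrete, here is a minimal sketch of choosing a split on a single numeric feature with a two-sample z statistic. It is an illustration under stated assumptions, not the authors' implementation: `two_sample_z` and `best_split` are hypothetical names, the threshold is applied to the absolute z statistic, and the cross-validation-based stopping criterion from the abstract is omitted.

```python
import math
from statistics import mean, variance
from typing import List, Optional, Tuple


def two_sample_z(a: List[float], b: List[float]) -> float:
    """z statistic for the difference in means (unequal variances allowed)."""
    diff = mean(a) - mean(b)
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    if se == 0:
        return 0.0 if diff == 0 else math.copysign(math.inf, diff)
    return diff / se


def best_split(
    xs: List[float], ys: List[float], z_threshold: float, min_size: int = 2
) -> Optional[Tuple[float, float]]:
    """Return (cut, |z|) for the strongest split meeting the threshold, else None."""
    best: Optional[Tuple[float, float]] = None
    for cut in sorted(set(xs))[:-1]:
        # Candidate subgroup: x <= cut; complement: x > cut.
        left = [y for x, y in zip(xs, ys) if x <= cut]
        right = [y for x, y in zip(xs, ys) if x > cut]
        if len(left) < min_size or len(right) < min_size:
            continue  # sample variance needs at least two points per side
        z = abs(two_sample_z(left, right))
        if z >= z_threshold and (best is None or z > best[1]):
            best = (cut, z)
    return best


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 3.1]
print(best_split(xs, ys, z_threshold=3.0))    # selects the cut at x = 4
print(best_split(xs, ys, z_threshold=100.0))  # no split is strong enough
```

Because each node records the statistic it achieved, raising `z_threshold` afterwards only removes nodes, which matches the abstract's remark that simpler trees can be derived from the most detailed one.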

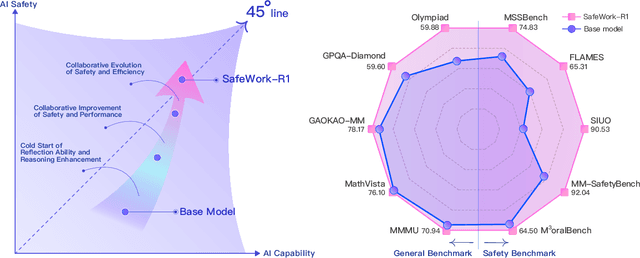

SafeWork-R1: Coevolving Safety and Intelligence under the AI-45$^{\circ}$ Law

Jul 24, 2025

Abstract: We introduce SafeWork-R1, a cutting-edge multimodal reasoning model that demonstrates the coevolution of capabilities and safety. It is developed by our proposed SafeLadder framework, which incorporates large-scale, progressive, safety-oriented reinforcement learning post-training, supported by a suite of multi-principled verifiers. Unlike previous alignment methods such as RLHF that simply learn human preferences, SafeLadder enables SafeWork-R1 to develop intrinsic safety reasoning and self-reflection abilities, giving rise to safety 'aha' moments. Notably, SafeWork-R1 achieves an average improvement of $46.54\%$ over its base model Qwen2.5-VL-72B on safety-related benchmarks without compromising general capabilities, and delivers state-of-the-art safety performance compared to leading proprietary models such as GPT-4.1 and Claude Opus 4. To further bolster its reliability, we implement two distinct inference-time intervention methods and a deliberative search mechanism, enforcing step-level verification. Finally, we further develop SafeWork-R1-InternVL3-78B, SafeWork-R1-DeepSeek-70B, and SafeWork-R1-Qwen2.5VL-7B. All resulting models demonstrate that safety and capability can co-evolve synergistically, highlighting the generalizability of our framework in building robust, reliable, and trustworthy general-purpose AI.

HAMF: A Hybrid Attention-Mamba Framework for Joint Scene Context Understanding and Future Motion Representation Learning

May 21, 2025

Abstract: Motion forecasting represents a critical challenge in autonomous driving systems, requiring accurate prediction of surrounding agents' future trajectories. While existing approaches predict future motion states with the extracted scene context feature from historical agent trajectories and road layouts, they suffer from information degradation during scene feature encoding. To address the limitation, we propose HAMF, a novel motion forecasting framework that learns future motion representations with the scene context encoding jointly, to coherently combine the scene understanding and future motion state prediction. We first embed the observed agent states and map information into 1D token sequences, together with the target multi-modal future motion features as a set of learnable tokens. Then we design a unified Attention-based encoder, which synergistically combines self-attention and cross-attention mechanisms to model the scene context information and aggregate future motion features jointly. Complementing the encoder, we implement the Mamba module in the decoding stage to further preserve the consistency and correlations among the learned future motion representations, to generate accurate and diverse final trajectories. Extensive experiments on the Argoverse 2 benchmark demonstrate that our hybrid Attention-Mamba model achieves state-of-the-art motion forecasting performance with a simple and lightweight architecture.

Stop Summation: Min-Form Credit Assignment Is All Process Reward Model Needs for Reasoning

Apr 21, 2025

Abstract: Process reward models (PRMs) have proven effective for test-time scaling of Large Language Models (LLMs) on challenging reasoning tasks. However, reward hacking issues with PRMs limit their successful application in reinforcement fine-tuning. In this paper, we identify the main cause of PRM-induced reward hacking: the canonical summation-form credit assignment in reinforcement learning (RL), which defines the value as cumulative gamma-decayed future rewards, easily induces LLMs to hack steps with high rewards. To address this, we propose PURE: Process sUpervised Reinforcement lEarning. The key innovation of PURE is a min-form credit assignment that formulates the value function as the minimum of future rewards. This method significantly alleviates reward hacking by limiting the value function range and distributing advantages more reasonably. Through extensive experiments on 3 base models, we show that PRM-based approaches enabling min-form credit assignment achieve comparable reasoning performance to verifiable reward-based methods in only 30% of the steps. In contrast, the canonical sum-form credit assignment collapses training even at the beginning! Additionally, when we supplement PRM-based fine-tuning with just 10% verifiable rewards, we further alleviate reward hacking and produce the best fine-tuned model based on Qwen2.5-Math-7B in our experiments, achieving 82.5% accuracy on AMC23 and 53.3% average accuracy across 5 benchmarks. Moreover, we summarize the observed reward hacking cases and analyze the causes of training collapse. Code and models are available at https://github.com/CJReinforce/PURE.
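
The contrast between the two credit-assignment forms named in the abstract above can be shown on a toy sequence of per-step process rewards. This is a sketch of the two definitions only, with hypothetical function names and made-up reward values; how PURE turns these values into advantages during fine-tuning is not described in the abstract and is not modeled here.

```python
from typing import List


def sum_form_returns(rewards: List[float], gamma: float = 1.0) -> List[float]:
    """Value at step t = sum over k >= t of gamma**(k - t) * rewards[k]."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in range(len(rewards) - 1, -1, -1):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def min_form_returns(rewards: List[float]) -> List[float]:
    """Value at step t = minimum of rewards[t], rewards[t + 1], ..."""
    returns = [0.0] * len(rewards)
    running = float("inf")
    for t in range(len(rewards) - 1, -1, -1):
        running = min(running, rewards[t])
        returns[t] = running
    return returns


# Illustrative per-step rewards: the third reasoning step is judged bad.
step_rewards = [0.75, 0.5, -0.5, 0.75]
print(sum_form_returns(step_rewards))  # [1.5, 0.75, 0.25, 0.75]
print(min_form_returns(step_rewards))  # [-0.5, -0.5, -0.5, 0.75]
```

In the sum form, the first step's value (1.5) exceeds any single step reward and grows with the number of high-reward steps, whereas the min form stays within the range of the per-step rewards and is dominated by the worst later step.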

ElimPCL: Eliminating Noise Accumulation with Progressive Curriculum Labeling for Source-Free Domain Adaptation

Mar 31, 2025

Abstract: Source-Free Domain Adaptation (SFDA) aims to train a target model without source data, and the key is to generate pseudo-labels using a pre-trained source model. However, we observe that the source model often produces highly uncertain pseudo-labels for hard samples, particularly those heavily affected by domain shifts, leading to these noisy pseudo-labels being introduced even before adaptation and further reinforced through parameter updates. Additionally, they continuously influence neighbor samples through propagation in the feature space. To eliminate the issue of noise accumulation, we propose a novel Progressive Curriculum Labeling (ElimPCL) method, which iteratively filters trustworthy pseudo-labeled samples based on prototype consistency to exclude high-noise samples from training. Furthermore, a Dual MixUP technique is designed in the feature space to enhance the separability of hard samples, thereby mitigating the interference of noisy samples on their neighbors. Extensive experiments validate the effectiveness of ElimPCL, achieving up to a 3.4% improvement on challenging tasks compared to state-of-the-art methods.

Offline Reinforcement Learning with Discrete Diffusion Skills

Mar 26, 2025

Abstract: Skills have been introduced to offline reinforcement learning (RL) as temporal abstractions to tackle complex, long-horizon tasks, promoting consistent behavior and enabling meaningful exploration. While skills in offline RL are predominantly modeled within a continuous latent space, the potential of discrete skill spaces remains largely underexplored. In this paper, we propose a compact discrete skill space for offline RL tasks supported by a state-of-the-art transformer-based encoder and a diffusion-based decoder. Coupled with a high-level policy trained via offline RL techniques, our method establishes a hierarchical RL framework where the trained diffusion decoder plays a pivotal role. Empirical evaluations show that the proposed algorithm, Discrete Diffusion Skill (DDS), is a powerful offline RL method. DDS performs competitively on Locomotion and Kitchen tasks and excels on long-horizon tasks, achieving at least a 12 percent improvement on AntMaze-v2 benchmarks compared to existing offline RL approaches. Furthermore, DDS offers improved interpretability, training stability, and online exploration compared to previous skill-based methods.

Establishing Reality-Virtuality Interconnections in Urban Digital Twins for Superior Intelligent Road Inspection

Dec 23, 2024

Abstract: Road inspection is essential for ensuring road maintenance and traffic safety, as road defects gradually emerge and compromise road functionality. Traditional methods, which rely on manual evaluations, are labor-intensive, costly, and time-consuming. Although data-driven approaches are gaining traction, the scarcity and spatial sparsity of road defects in the real world pose significant challenges in acquiring high-quality datasets. Existing simulators designed to generate detailed synthetic driving scenes, however, lack models for road defects. Furthermore, advanced driving tasks involving interactions with road surfaces, such as planning and control in defective areas, remain underexplored. To address these limitations, we propose a system based on Urban Digital Twin (UDT) technology for intelligent road inspection. First, hierarchical road models are constructed from real-world driving data, creating highly detailed representations of road defect structures and surface elevations. Next, digital road twins are generated to create simulation environments for comprehensive analysis and evaluation. These scenarios are subsequently imported into a simulator to enable both data acquisition and physical simulation. Experimental results demonstrate that driving tasks, including perception and decision-making, can be significantly improved using the high-fidelity road defect scenes generated by our system.

LHPF: Look back the History and Plan for the Future in Autonomous Driving

Nov 26, 2024

Abstract: Decision-making and planning in autonomous driving critically reflect the safety of the system, making effective planning imperative. Current imitation learning-based planning algorithms often merge historical trajectories with present observations to predict future candidate paths. However, these algorithms typically assess the current and historical plans independently, leading to discontinuities in driving intentions and an accumulation of errors with each step in a discontinuous plan. To tackle this challenge, this paper introduces LHPF, an imitation learning planner that integrates historical planning information. Our approach employs a historical intention aggregation module that pools historical planning intentions, which are then combined with a spatial query vector to decode the final planning trajectory. Furthermore, we incorporate a comfort auxiliary task to enhance the human-like quality of the driving behavior. Extensive experiments using both real-world and synthetic data demonstrate that LHPF not only surpasses existing advanced learning-based planners in planning performance but also marks the first instance of a purely learning-based planner outperforming the expert. Additionally, the application of the historical intention aggregation module across various backbones highlights the considerable potential of the proposed method. The code will be made publicly available.
