Huiyu Zhou

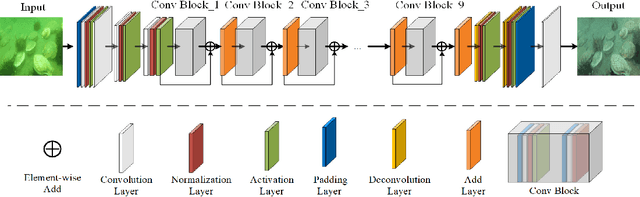

Gaussian Dynamic Convolution for Efficient Single-Image Segmentation

May 23, 2021

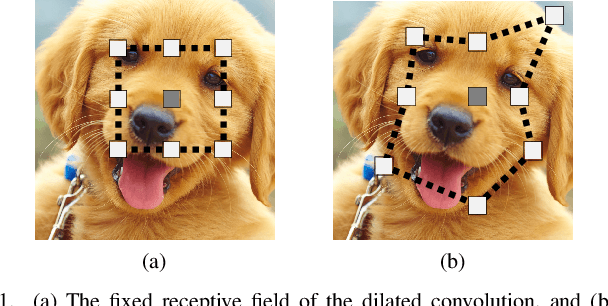

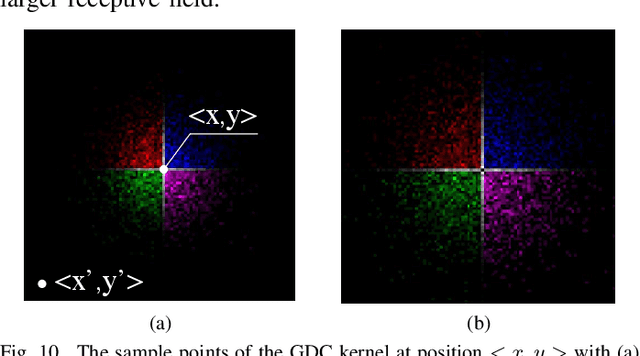

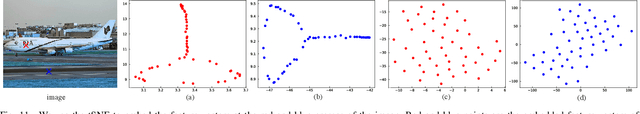

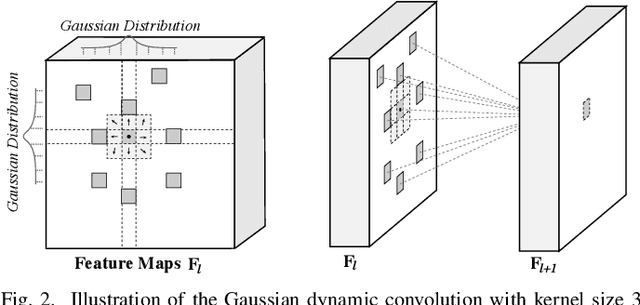

Abstract: Interactive single-image segmentation is ubiquitous in scientific and commercial imaging software. In this work, we focus on the single-image segmentation problem given only a few seeds such as scribbles. Inspired by the dynamic receptive field of the human visual system, we propose Gaussian dynamic convolution (GDC) to aggregate contextual information quickly and efficiently in neural networks. The core idea is to select the spatial sampling area randomly according to offsets drawn from a Gaussian distribution. GDC can easily be used as a module to build lightweight or complex segmentation networks. We adopt the proposed GDC to address typical single-image segmentation tasks. Furthermore, we build a Gaussian dynamic pyramid pooling module to show its potential and generality in common semantic segmentation. Experiments demonstrate that GDC outperforms other existing convolutions on three benchmark segmentation datasets: Pascal-Context, Pascal-VOC 2012, and Cityscapes. Additional experiments illustrate that GDC can produce richer and more vivid features than other convolutions. In general, GDC helps convolutional neural networks form an overall impression of the image.
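The sampling idea in this abstract can be illustrated with a minimal pure-Python sketch. It is one possible reading, not the authors' implementation: the function names, the 3x3 kernel, the single spacing per call, and the border clamping are all assumptions made for illustration.

```python
import random


def gaussian_dynamic_sample(feature, row, col, sigma, rng):
    """Gather a 3x3 neighbourhood whose spacing is drawn from a Gaussian."""
    height, width = len(feature), len(feature[0])
    # Assumption: one spacing per call, |N(0, sigma^2)| rounded, at least 1.
    spacing = max(1, round(abs(rng.gauss(0.0, sigma))))
    samples = []
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            # Clamp to the border so every sample stays inside the map.
            r = min(max(row + dr * spacing, 0), height - 1)
            c = min(max(col + dc * spacing, 0), width - 1)
            samples.append(feature[r][c])
    return spacing, samples


def gdc_response(feature, weights, row, col, sigma, rng):
    """Weighted sum of the randomly spaced samples (one output value)."""
    _, samples = gaussian_dynamic_sample(feature, row, col, sigma, rng)
    return sum(w * s for w, s in zip(weights, samples))
```

Because the spacing changes from call to call, the same nine weights see near and far context over the course of training, which is the intuition behind a dynamic receptive field.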

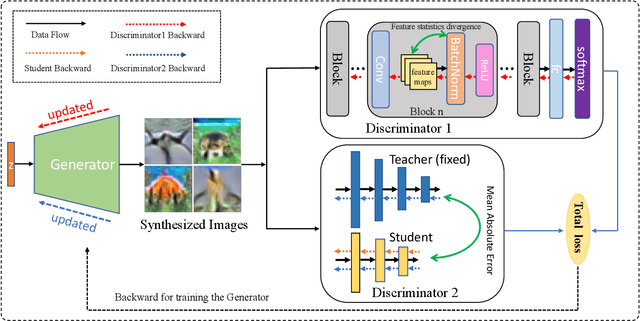

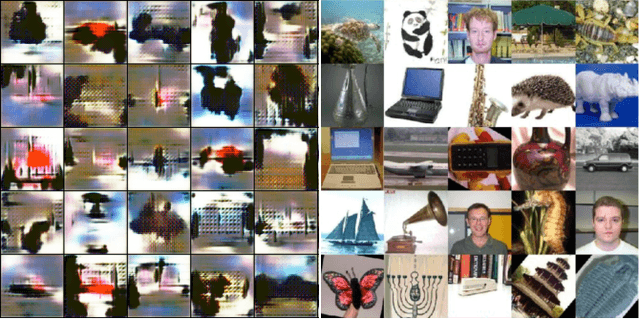

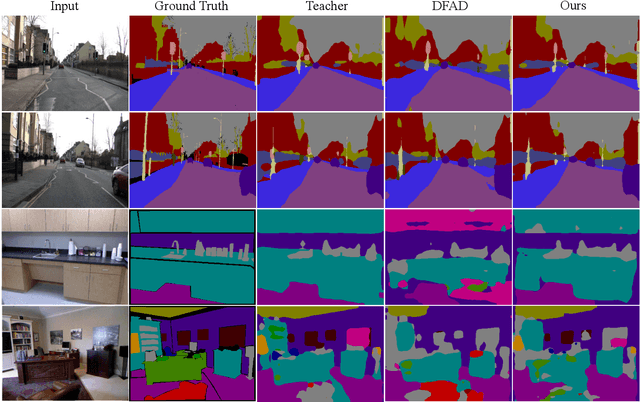

Dual Discriminator Adversarial Distillation for Data-free Model Compression

Apr 12, 2021

Abstract: Knowledge distillation has been widely used to produce portable and efficient neural networks that can be deployed on edge devices for computer vision tasks. However, almost all top-performing knowledge distillation methods need access to the original training data, which is usually very large and often unavailable. To tackle this problem, we propose a novel data-free approach, named Dual Discriminator Adversarial Distillation (DDAD), to distill a neural network without any training data or meta-data. Specifically, we use a generator to create samples, through dual discriminator adversarial distillation, that mimic the original training data. The generator not only uses the pre-trained teacher's intrinsic statistics in existing batch normalization layers but also obtains the maximum discrepancy from the student model. The generated samples are then used to train the compact student network under the supervision of the teacher. The proposed method obtains an efficient student network that closely approximates its teacher network, despite using no original training data. Extensive experiments are conducted to demonstrate the effectiveness of the proposed approach on the CIFAR-10, CIFAR-100 and Caltech101 datasets for classification tasks. Moreover, we extend our method to semantic segmentation tasks on several public datasets such as CamVid and NYUv2. All experiments show that our method outperforms all baselines for data-free knowledge distillation.

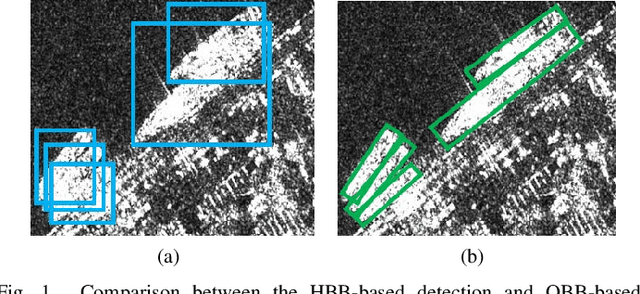

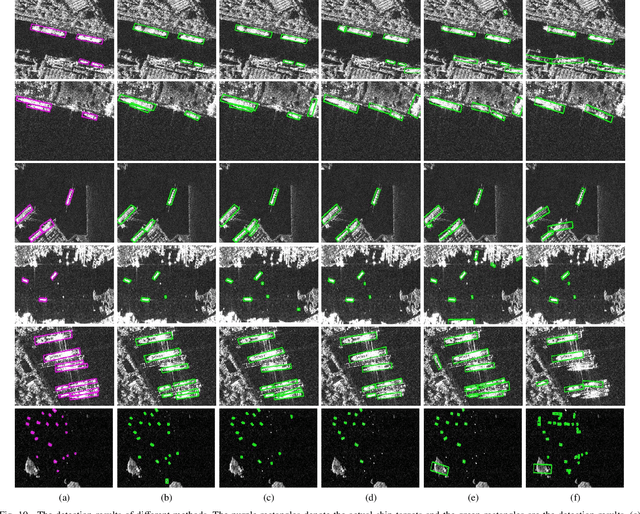

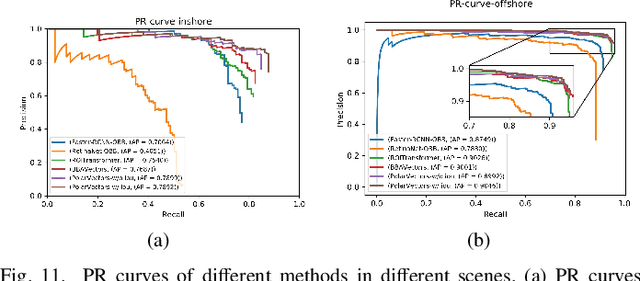

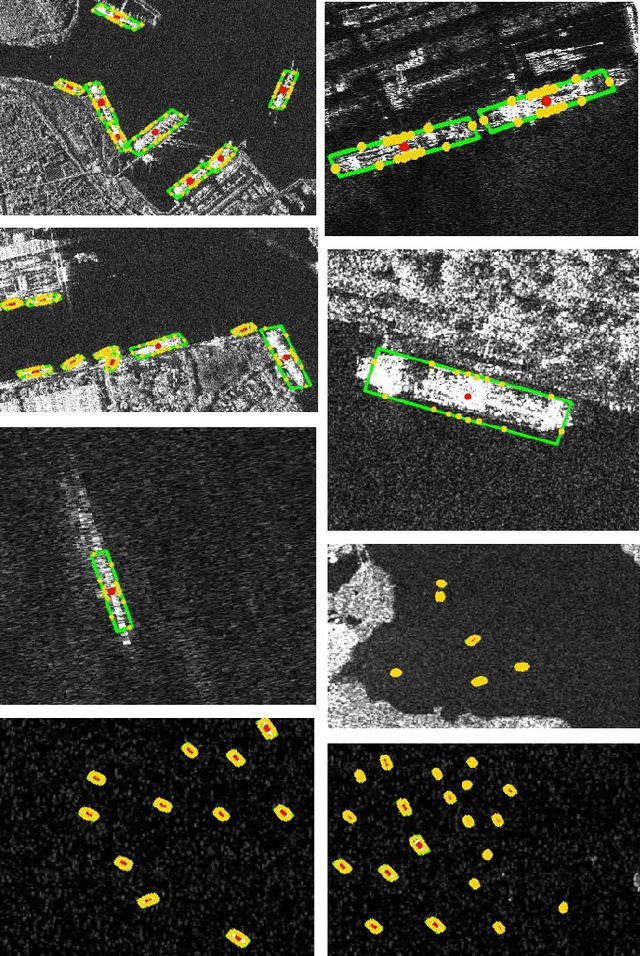

Learning Polar Encodings for Arbitrary-Oriented Ship Detection in SAR Images

Mar 24, 2021

Abstract: Common horizontal bounding box (HBB)-based methods cannot accurately locate slender ship targets with arbitrary orientations in synthetic aperture radar (SAR) images. Therefore, in recent years, methods based on oriented bounding boxes (OBB) have gradually received attention from researchers. However, most of the recently proposed deep learning-based methods for OBB detection encounter the boundary discontinuity problem in angle or key point regression. To alleviate this problem, researchers have introduced manually set parameters or extra network branches for distinguishing the boundary cases, which make training more difficult and lead to performance degradation. In this paper, to solve the boundary discontinuity problem in OBB regression, we propose to detect SAR ships by learning polar encodings. The encoding scheme represents an OBB with a group of vectors pointing from the center of the ship target to the boundary points. The boundary discontinuity problem is avoided by training and inferring directly on the polar encodings. In addition, we propose an Intersection over Union (IOU)-weighted regression loss, which further guides the training of polar encodings through the IOU metric and improves detection performance. Experiments on the Rotating SAR Ship Detection Dataset (RSSDD) show that the proposed method achieves better detection performance than the comparison algorithms and other OBB encoding schemes, demonstrating the effectiveness of our method.
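To make the encoding idea concrete, here is a small sketch that represents an oriented box by vectors from its center to its boundary along equally spaced directions. The ray layout, the `(cx, cy, w, h, theta)` parameterisation, and the name `polar_encode` are assumptions for illustration; the paper's exact scheme may differ.

```python
import math


def polar_encode(cx, cy, w, h, theta, n_rays=8):
    """Return n_rays (dx, dy) vectors from the box center to its boundary.

    The center (cx, cy) is not needed for the vectors themselves; it is
    kept so a decoder can place the boundary points at (cx+dx, cy+dy).
    """
    vectors = []
    for k in range(n_rays):
        angle = 2.0 * math.pi * k / n_rays   # ray direction in the image frame
        local = angle - theta                # same direction in the box frame
        cos_l, sin_l = abs(math.cos(local)), abs(math.sin(local))
        # Distance at which the ray leaves the box through each pair of sides.
        dist_w = (w / 2.0) / cos_l if cos_l > 1e-12 else math.inf
        dist_h = (h / 2.0) / sin_l if sin_l > 1e-12 else math.inf
        d = min(dist_w, dist_h)
        vectors.append((d * math.cos(angle), d * math.sin(angle)))
    return vectors
```

In this sketch a box rotated by `theta` and the same box rotated by `theta + math.pi` yield the same vectors, so the regression target does not jump at the angular boundary.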

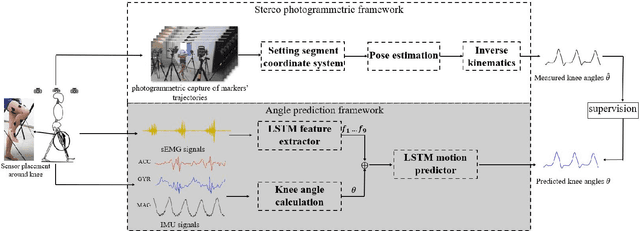

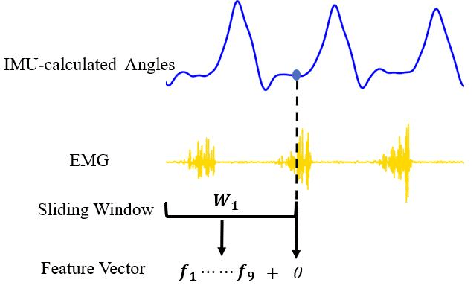

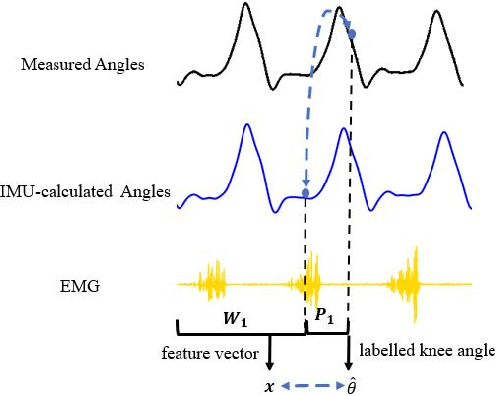

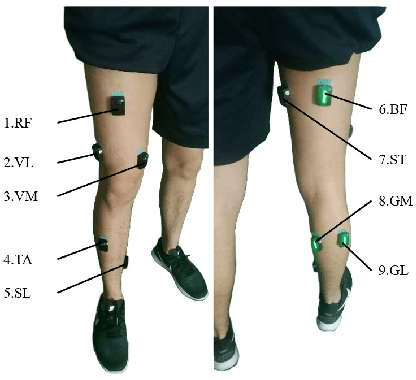

Continuous Prediction of Lower-Limb Kinematics From Multi-Modal Biomedical Signals

Mar 22, 2021

Abstract: The fast-growing techniques of measuring and fusing multi-modal biomedical signals enable advanced motor intent decoding schemes for lower-limb exoskeletons, meeting the increasing demand for rehabilitative or assistive applications in take-home healthcare. A remaining challenge for exoskeleton motor intent decoding schemes is making a continuous prediction that compensates for the hysteretic response caused by mechanical transmission. In this paper, we address this problem by proposing an ahead-of-time continuous prediction of lower-limb kinematics, with the prediction of knee angles during level walking as a case study. Firstly, an end-to-end kinematics prediction network (KinPreNet), consisting of a feature extractor and an angle predictor, is proposed and experimentally compared with features and methods traditionally used in ahead-of-time prediction of gait phases. Secondly, inspired by the electromechanical delay (EMD), we further explore our algorithm's capability of compensating for the response delay of mechanical transmission by validating performance over different sections of prediction time. We also experimentally reveal the time boundary of compensating for the hysteretic response. Thirdly, a comparison with and without EMG signals is performed to reveal the joint contribution of EMG and kinematic signals to the continuous prediction. During the experiments, EMG signals of nine muscles and knee angles calculated from inertial measurement unit (IMU) signals are recorded from ten healthy subjects. To the best of our knowledge, this is the first study of continuously predicting lower-limb kinematics in an ahead-of-time manner based on the electromechanical delay (EMD).

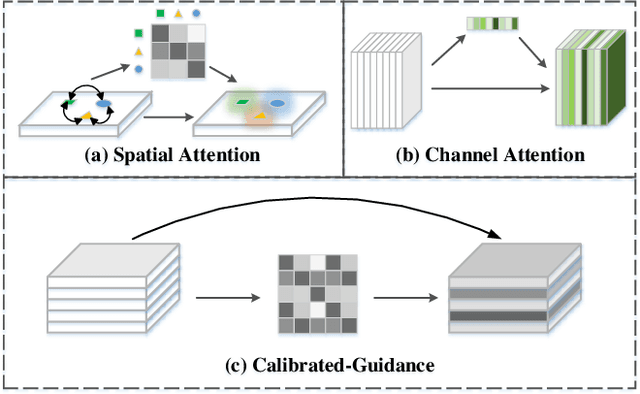

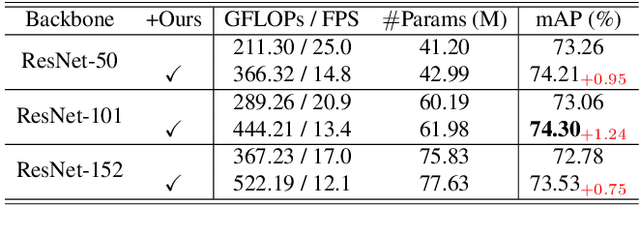

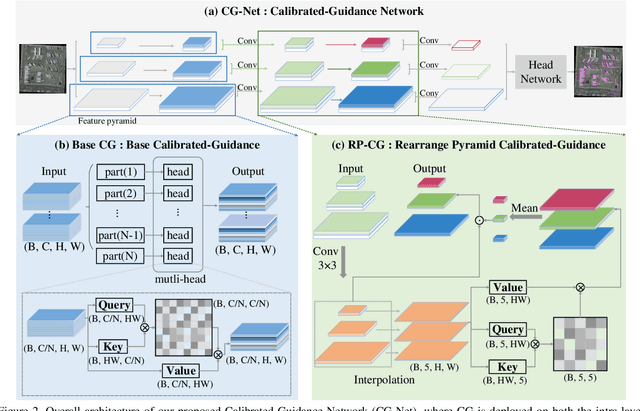

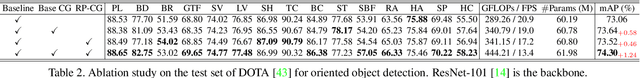

Learning Calibrated-Guidance for Object Detection in Aerial Images

Mar 21, 2021

Abstract: Recently, the study of object detection in aerial images has made tremendous progress in the computer vision community. However, most state-of-the-art methods tend to develop elaborate attention mechanisms for space-time feature calibration with high computational complexity, while surprisingly ignoring the importance of feature calibration across channels. In this work, we propose a simple yet effective Calibrated-Guidance (CG) scheme to enhance channel communications in a feature transformer fashion, which adaptively determines the calibration weights for each channel based on the global feature affinity-pairs. Specifically, given a set of feature maps, CG first computes the feature similarity between each channel and the remaining channels as the intermediary calibration guidance. It then re-represents each channel by aggregating all the channels, weighted by the guidance. CG can be plugged into any deep neural network; the resulting network is named CG-Net. To demonstrate its effectiveness and efficiency, extensive experiments are carried out on both oriented and horizontal object detection tasks in aerial images. Results on two challenging benchmarks (i.e., DOTA and HRSC2016) demonstrate that CG-Net achieves state-of-the-art accuracy with a fair computational overhead. https://github.com/WeiZongqi/CG-Net
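The two CG steps described above (channel similarity, then re-representation) can be sketched in a few lines. This is an illustrative reading only: dot-product similarity, softmax normalisation, the inclusion of each channel's similarity with itself, and the name `calibrate_channels` are assumptions, not details taken from the CG-Net code.

```python
import math


def calibrate_channels(channels):
    """Re-represent each flattened channel as a weighted mix of all channels."""
    n = len(channels)
    calibrated = []
    for i in range(n):
        # Step 1: similarity of channel i to every channel (assumed dot product).
        scores = [sum(a * b for a, b in zip(channels[i], channels[j]))
                  for j in range(n)]
        # Assumed normalisation: numerically stable softmax over the scores.
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Step 2: aggregate all channels, weighted by the guidance.
        length = len(channels[i])
        calibrated.append([sum(weights[j] * channels[j][p] for j in range(n))
                           for p in range(length)])
    return calibrated
```

The output has the same shape as the input, which is what lets a block of this kind be dropped between existing layers.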

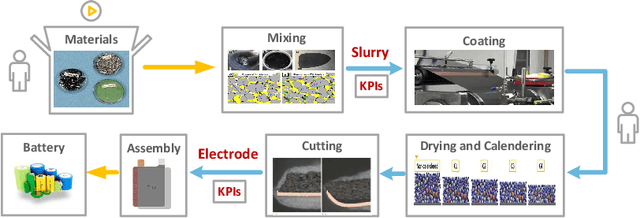

Feature Analyses and Modelling of Lithium-ion Batteries Manufacturing based on Random Forest Classification

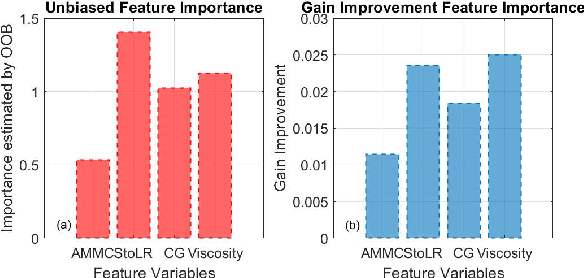

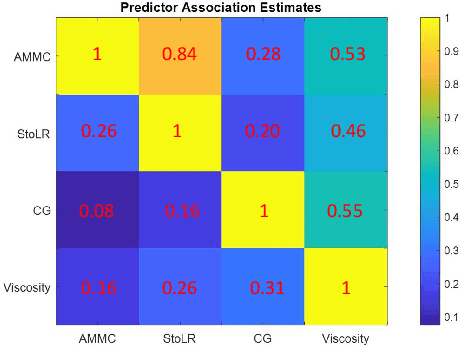

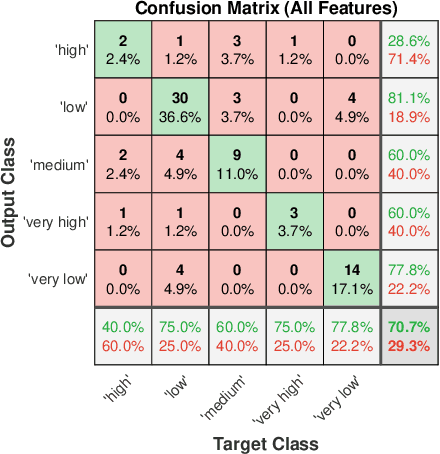

Feb 10, 2021

Abstract: Lithium-ion battery manufacturing is a highly complicated process with strongly coupled feature interdependencies; a feasible solution that can analyse feature variables within the manufacturing chain and achieve reliable classification is thus urgently needed. This article proposes a random forest (RF)-based classification framework that uses out-of-bag (OOB) predictions, Gini changes and the predictive measure of association (PMOA) to effectively quantify the importance and correlations of battery manufacturing features and their effects on the classification of electrode properties. Battery manufacturing data containing three intermediate product features from the mixing stage and one product parameter from the coating stage are analysed by the designed RF framework to investigate their effects on both the battery electrode active material mass load and porosity. Illustrative results demonstrate that the proposed RF framework not only achieves reliable classification of electrode properties but also effectively quantifies both manufacturing feature importance and correlations. This is the first systematic RF framework designed to simultaneously quantify battery production feature importance and correlations through three quantitative indicators, namely the unbiased feature importance (FI), gain improvement FI and PMOA, offering a promising way to reduce model dimension and conduct efficient sensitivity analysis of battery manufacturing.
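As background for the "Gini changes" indicator mentioned above, the following sketch computes the standard Gini impurity and the decrease produced by one split. It shows the textbook quantity only; how the article aggregates it across trees and features is not reproduced here, and the function names are illustrative.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels: 1 - sum(p_k^2)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def gini_decrease(parent, left, right):
    """Impurity of the parent minus the size-weighted impurity of its children."""
    n = len(parent)
    weighted = (len(left) / n) * gini(left) + (len(right) / n) * gini(right)
    return gini(parent) - weighted
```

A feature whose splits produce large decreases on average is ranked as more important by this kind of measure.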

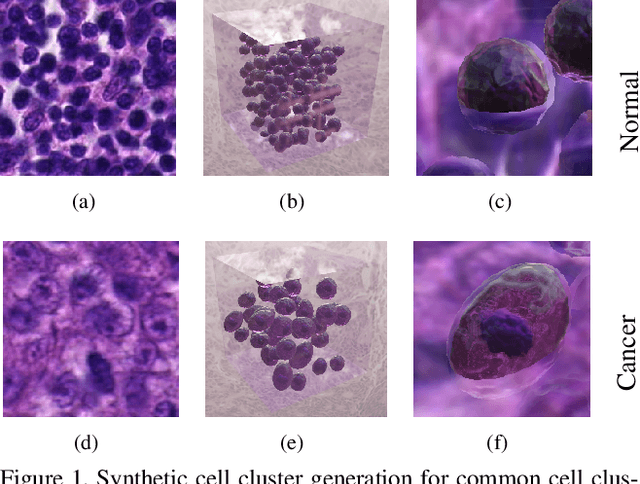

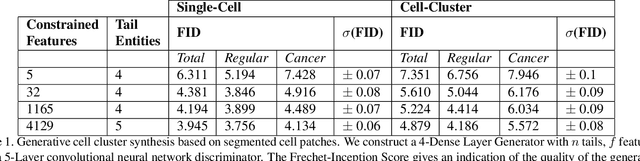

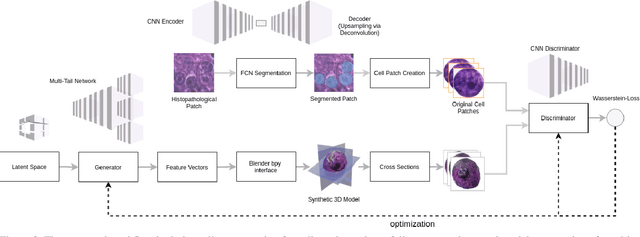

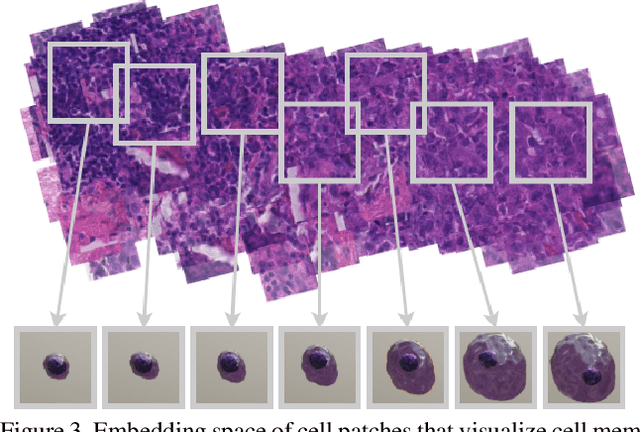

Synthetic Generation of Three-Dimensional Cancer Cell Models from Histopathological Images

Feb 08, 2021

Abstract: Synthetic generation of three-dimensional cell models from histopathological images aims to enhance understanding of cell mutation and the progression of cancer, which is necessary for clinical assessment and optimal treatment. Classical reconstruction algorithms based on image registration of consecutive slides of stained tissues are prone to errors and often not suitable for training three-dimensional segmentation algorithms. We propose a novel framework to generate synthetic three-dimensional histological models based on a generator-discriminator pattern optimizing constrained features that construct a 3D model via a Blender interface, exploiting the smooth shape continuity typical of biological specimens. To capture the spatial context of entire cell clusters, we deploy a novel deep topology transformer that implements an attention mechanism on cell group images to extract features for the frozen feature decoder. The proposed algorithm achieves high quantitative and qualitative synthesis quality, as evidenced by comparative evaluation metrics such as a low Frechet-Inception score.

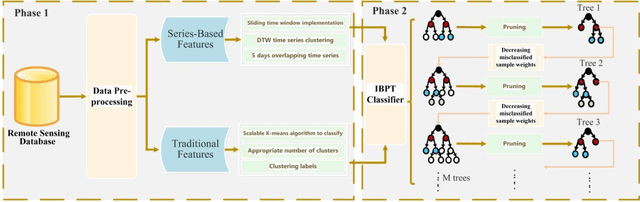

Towards advancing the earthquake forecasting by machine learning of satellite data

Jan 31, 2021

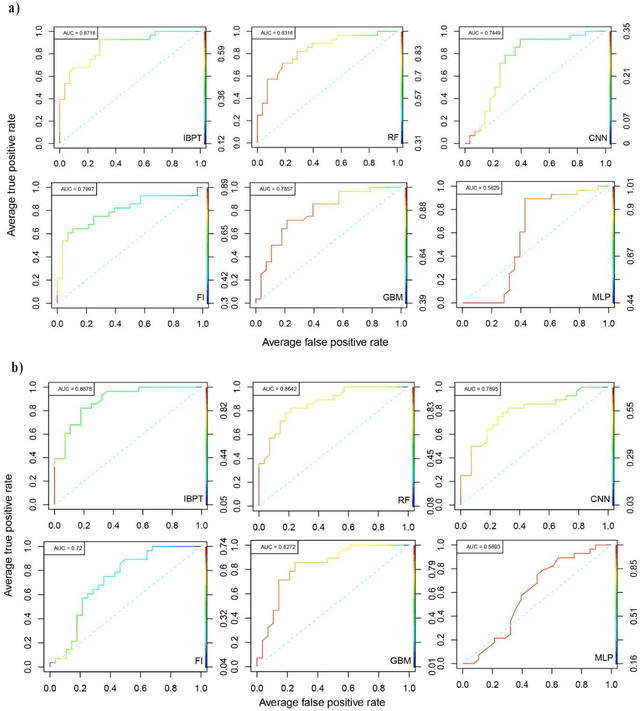

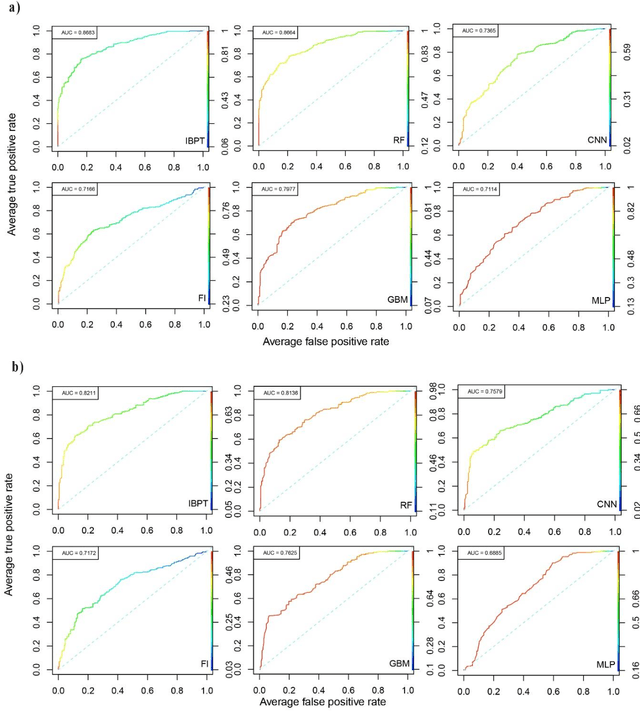

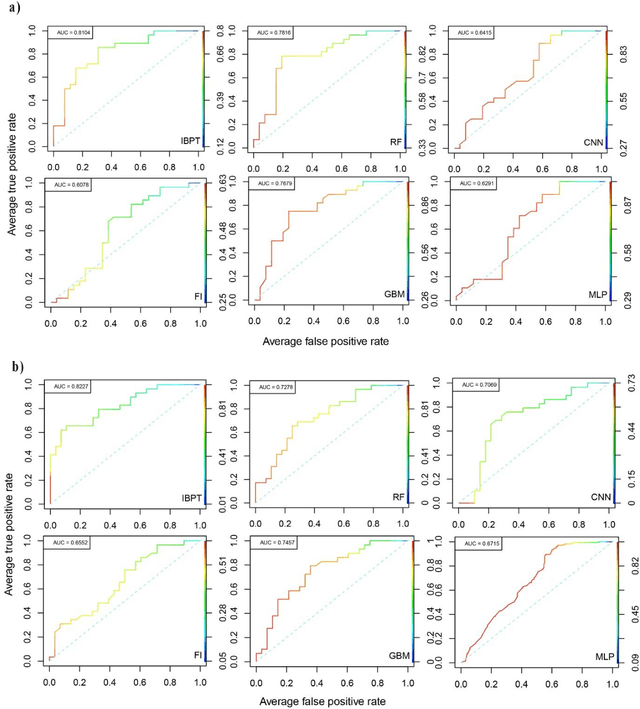

Abstract: Amongst the available technologies for earthquake research, remote sensing has been commonly used due to its unique features such as fast imaging and wide image-acquisition range. Nevertheless, early studies on pre-earthquake and remote-sensing anomalies are mostly oriented towards anomaly identification and analysis of a single physical parameter. Many analyses are based on singular events, which provide limited understanding of this complex natural phenomenon because the earthquake signals are usually hidden in environmental noise. The universality of such analysis has still not been demonstrated on a worldwide scale. In this paper, we investigate physical and dynamic changes of seismic data and thereby develop a novel machine learning method, namely Inverse Boosting Pruning Trees (IBPT), to issue short-term forecasts based on the satellite data of 1,371 earthquakes of magnitude six or above, chosen due to their impact on the environment. We have analyzed and compared our proposed framework against several state-of-the-art machine learning methods using ten different infrared and hyperspectral measurements collected between 2006 and 2013. Our proposed method outperforms all six selected baselines and shows a strong capability in improving the likelihood of earthquake forecasting across different earthquake databases.

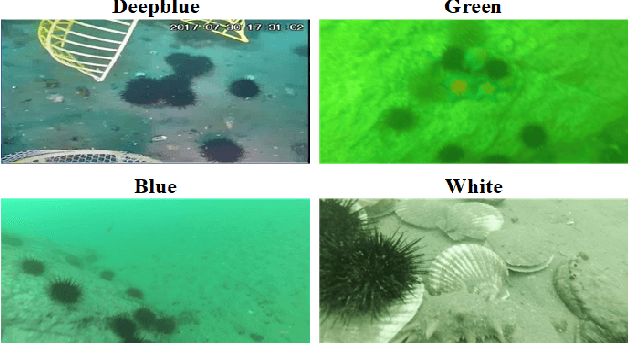

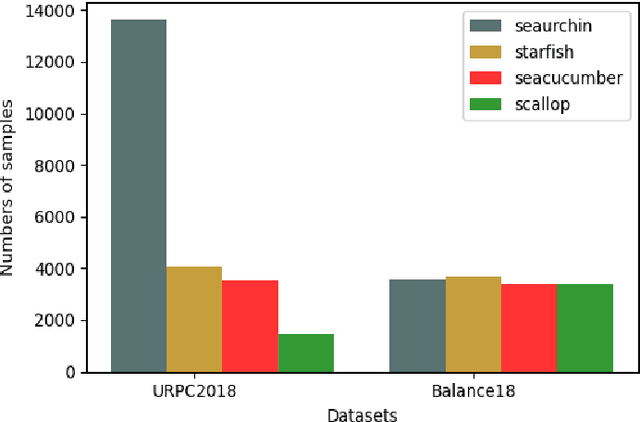

Class balanced underwater object detection dataset generated by class-wise style augmentation

Jan 20, 2021

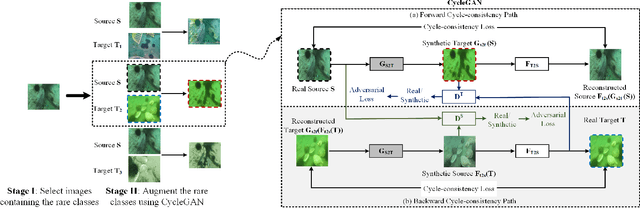

Abstract: Underwater object detection is of great significance for various applications in underwater scenes. However, class imbalance is still an unsolved bottleneck for current underwater object detection algorithms. It leads to large precision discrepancies among classes: the dominant classes with more training data achieve higher detection precision, while the minority classes with fewer training data achieve much lower detection precision. In this paper, we propose a novel class-wise style augmentation (CWSA) algorithm to generate a class-balanced underwater dataset, Balance18, from the public contest underwater dataset URPC2018. CWSA is a new kind of data augmentation technique that augments the training data for the minority classes by generating various colors, textures and contrasts for them. Compared with previous data augmentation algorithms such as flipping, cropping and rotation, CWSA is able to generate a class-balanced underwater dataset with diverse color distortions and haze effects.

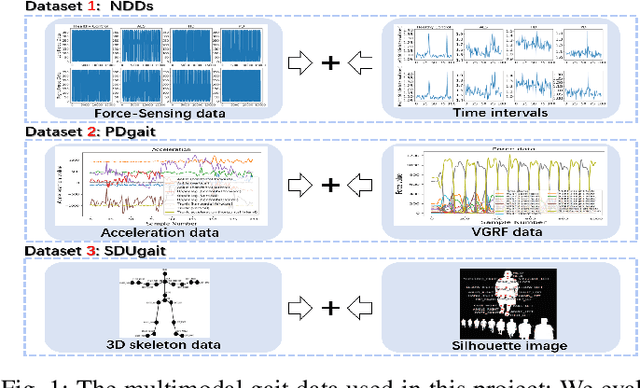

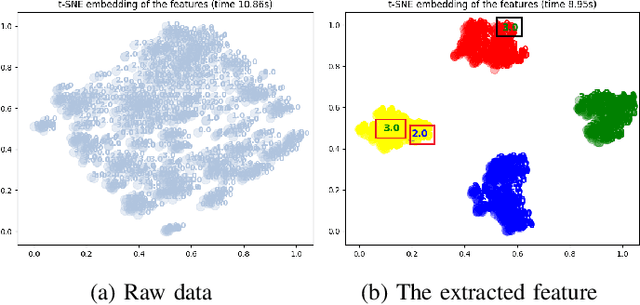

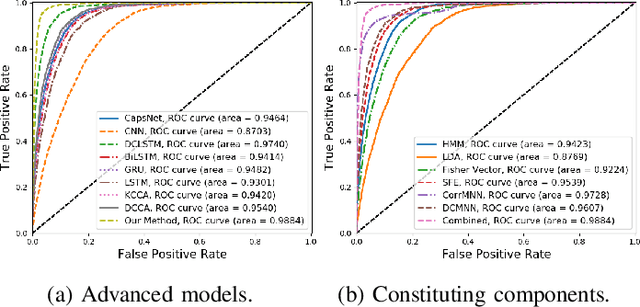

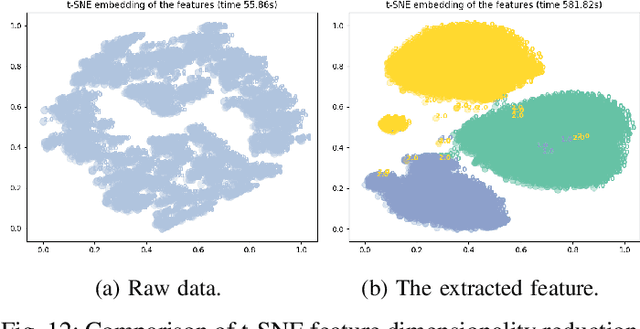

Multimodal Gait Recognition for Neurodegenerative Diseases

Jan 07, 2021

Abstract: In recent years, single-modality gait recognition has been extensively explored in the analysis of medical images or other sensory data, and it is recognised that each of the established approaches has different strengths and weaknesses. As an important motor symptom, gait disturbance is usually used for the diagnosis and evaluation of diseases; moreover, multi-modality analysis of the patient's walking pattern compensates for the one-sidedness of single-modality gait recognition methods that only learn gait changes in a single measurement dimension. The fusion of multiple measurement resources has demonstrated promising performance in the identification of gait patterns associated with individual diseases. In this paper, we propose a novel hybrid model to learn the gait differences between three neurodegenerative diseases, between patients with different severity levels of Parkinson's disease, and between healthy individuals and patients, by fusing and aggregating data from multiple sensors. A spatial feature extractor (SFE) is applied to generate representative features of images or signals. To capture temporal information from the data of the two modalities, a new correlative memory neural network (CorrMNN) architecture is designed for extracting temporal features. Afterwards, we embed a multi-switch discriminator to associate the observations with individual state estimations. Compared with several state-of-the-art techniques, our proposed framework shows more accurate classification results.
