Haotian Li

Jilin Jianzhu University

Yunjue Agent Tech Report: A Fully Reproducible, Zero-Start In-Situ Self-Evolving Agent System for Open-Ended Tasks

Jan 26, 2026

Abstract: Conventional agent systems often struggle in open-ended environments where task distributions continuously drift and external supervision is scarce. Their reliance on static toolsets or offline training lags behind these dynamics, leaving the system's capability boundaries rigid and unknown. To address this, we propose the In-Situ Self-Evolving paradigm. This approach treats sequential task interactions as a continuous stream of experience, enabling the system to distill short-term execution feedback into long-term, reusable capabilities without access to ground-truth labels. Within this framework, we identify tool evolution as the critical pathway for capability expansion, since it provides verifiable, binary feedback signals. Building on this insight, we develop Yunjue Agent, a system that iteratively synthesizes, optimizes, and reuses tools to navigate emerging challenges. To optimize evolutionary efficiency, we further introduce a Parallel Batch Evolution strategy. Empirical evaluations across five diverse benchmarks under a zero-start setting demonstrate significant performance gains over proprietary baselines. Additionally, complementary warm-start evaluations confirm that the accumulated general knowledge can be seamlessly transferred to novel domains. Finally, we propose a novel metric to monitor evolution convergence, which plays a role analogous to training loss in conventional optimization. We open-source our codebase, system traces, and evolved tools to facilitate future research in resilient, self-evolving intelligence.

SCULPT: Constraint-Guided Pruned MCTS that Carves Efficient Paths for Mathematical Reasoning

Jan 19, 2026

Abstract: Automated agent workflows can enhance the problem-solving ability of large language models (LLMs), but common search strategies rely on stochastic exploration and often traverse implausible branches. This occurs because current pipelines sample candidate steps from generic prompts or learned policies with weak domain priors, yielding near-random walks over operators, units, and formats. To promote ordered exploration, this paper introduces SCULPT, a constraint-guided approach for Monte Carlo Tree Search (MCTS) that integrates domain-aware scoring into selection, expansion, simulation, and backpropagation. SCULPT scores and prunes actions using a combination of symbolic checks (dimensional consistency, type compatibility, magnitude sanity, depth control, and diversity) and structural pattern guidance, thereby steering the search toward plausible reasoning paths. Under matched LLM configurations, SCULPT yields stable improvements on multiple datasets; additional results with GPT-5.2 assess executor transferability and performance on frontier reasoning models. Overall, domain-aware constraints can improve accuracy while maintaining efficiency and reasoning stability.
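One of the symbolic checks named in the abstract, dimensional consistency, can be illustrated with a small sketch. This is not SCULPT's implementation: the `Quantity` type, the `unit` helper, and the `prune` function are hypothetical names introduced here, and the rule shown (addition and subtraction require identical units) is only the simplest form such a check might take.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quantity:
    value: float
    unit: tuple  # sorted (dimension, exponent) pairs, e.g. (("m", 1), ("s", -1))


def unit(**dims):
    """Build a canonical unit from exponents, dropping zero exponents."""
    return tuple(sorted((d, e) for d, e in dims.items() if e != 0))


def is_dimensionally_consistent(op, left, right):
    """Assumed rule: '+' and '-' need matching units; '*' and '/' always pass."""
    if op in ("+", "-"):
        return left.unit == right.unit
    return op in ("*", "/")


def prune(candidates):
    """Drop candidate steps (op, left, right) that fail the symbolic check."""
    return [c for c in candidates if is_dimensionally_consistent(*c)]


distance = Quantity(120.0, unit(m=1))
duration = Quantity(60.0, unit(s=1))
candidates = [
    ("+", distance, duration),   # metres + seconds: implausible
    ("/", distance, duration),   # metres / seconds: a speed
    ("-", distance, distance),   # metres - metres: fine
]
kept = prune(candidates)
print([op for op, _, _ in kept])  # ['/', '-']
```

In a tree search, a filter of this kind would run before expansion so that implausible branches never receive simulation budget.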

An Exploratory Study on Abstract Images and Visual Representations Learned from Them

Sep 17, 2025

Abstract: Imagine living in a world composed solely of primitive shapes: could you still recognise familiar objects? Recent studies have shown that abstract images, constructed from primitive shapes, can indeed convey visual semantic information to deep learning models. However, representations obtained from such images often fall short compared to those derived from traditional raster images. In this paper, we study the reasons behind this performance gap and investigate how much high-level semantic content can be captured at different abstraction levels. To this end, we introduce the Hierarchical Abstraction Image Dataset (HAID), a novel data collection that comprises abstract images generated from normal raster images at multiple levels of abstraction. We then train and evaluate conventional vision systems on HAID across various tasks including classification, segmentation, and object detection, providing a comprehensive comparison between rasterised and abstract image representations. We also discuss whether abstract images can be considered a potentially effective format for conveying visual semantic information and contributing to vision tasks.

SHREC 2025: Protein surface shape retrieval including electrostatic potential

Sep 16, 2025

Abstract: This SHREC 2025 track, dedicated to protein surface shape retrieval, involved 9 participating teams. We evaluated the retrieval performance of 15 proposed methods on a large dataset of 11,555 protein surfaces with calculated electrostatic potential (a key molecular surface descriptor). Retrieval performance was evaluated with several metrics (accuracy, balanced accuracy, F1 score, precision, and recall). The best retrieval performance was achieved by the proposed methods that used the electrostatic potential as a complement to molecular surface shape. This observation also held for classes with limited data, which highlights the importance of taking additional molecular surface descriptors into account.

* Published in Computers & Graphics, Elsevier. 59 pages, 12 figures
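The metrics listed in the abstract differ most on imbalanced classes, which is why balanced accuracy is reported alongside plain accuracy. The sketch below computes all five from label lists; macro-averaging over the classes present in the ground truth is an assumption made here, since the abstract does not state the averaging scheme, and `retrieval_metrics` is a hypothetical helper name.

```python
from collections import Counter


def safe_div(num, den):
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def retrieval_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1.

    Balanced accuracy is the mean per-class recall, so under this
    macro-averaging assumption it equals the reported recall.
    """
    labels = sorted(set(y_true))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1
            fn[t] += 1
    recalls = [safe_div(tp[c], tp[c] + fn[c]) for c in labels]
    precisions = [safe_div(tp[c], tp[c] + fp[c]) for c in labels]
    f1s = [safe_div(2 * p * r, p + r) for p, r in zip(precisions, recalls)]
    n = len(labels)
    return {
        "accuracy": sum(tp.values()) / len(y_true),
        "balanced_accuracy": sum(recalls) / n,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Toy example: class "B" is rare and half of its items are missed.
y_true = ["A"] * 8 + ["B"] * 2
y_pred = ["A"] * 8 + ["A", "B"]
metrics = retrieval_metrics(y_true, y_pred)
print(metrics["accuracy"], metrics["balanced_accuracy"])  # 0.9 0.75
```

In this toy case accuracy stays at 0.9 while balanced accuracy drops to 0.75, because the rare class contributes equally to the average.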

Two Is Better Than One: Rotations Scale LoRAs

May 29, 2025

Abstract: Scaling Low-Rank Adaptation (LoRA)-based Mixture-of-Experts (MoE) enables large language models (LLMs) to adapt efficiently to diverse tasks. However, traditional gating mechanisms that route inputs to the best experts may fundamentally hinder LLMs' scalability, leading to poor generalization and underfitting issues. We identify that the root cause lies in the restricted expressiveness of existing weighted-sum mechanisms, both within and outside the convex cone of LoRA representations. This motivates us to propose RadarGate, a novel geometrically inspired gating method that introduces rotational operations on LoRA representations to boost expressiveness and facilitate richer feature interactions among multiple LoRAs for scalable LLMs. Specifically, we first fuse each LoRA representation with the other LoRAs using a learnable component and then feed the output to a rotation matrix. This matrix involves learnable parameters that define the relative angular relationship between LoRA representations. Such a simple yet effective mechanism provides an extra degree of freedom, facilitating the learning of cross-LoRA synergies and properly tackling the challenging poor generalization and underfitting issues as the number of LoRAs grows. Extensive experiments on 6 public benchmarks across 21 tasks show the effectiveness of our RadarGate for scaling LoRAs. We also provide valuable insights, revealing that the rotations applied to each pair of representations are contrastive, encouraging closer alignment of semantically similar representations during geometrical transformation while pushing distant ones further apart. We will release our code to the community.
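The geometric intuition behind the convex-cone remark can be shown with a toy 2D example. This is not RadarGate: the two vectors, the fixed gate weights, and the fixed rotation angle are illustrative assumptions, whereas the abstract describes learnable fusion and learnable rotation parameters. The sketch only shows that a non-negative weighted sum of two representations stays inside the cone they span, while a rotation can move the result outside it without changing its norm.

```python
import math


def weighted_sum(weights, vectors):
    """Gate-style combination: sum_i w_i * v_i for 2D vectors."""
    x = sum(w * v[0] for w, v in zip(weights, vectors))
    y = sum(w * v[1] for w, v in zip(weights, vectors))
    return (x, y)


def rotate(v, theta):
    """Rotate a 2D vector counter-clockwise by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])


def cross(a, b):
    return a[0] * b[1] - a[1] * b[0]


def in_cone(v, a, b, eps=1e-9):
    """True if v = alpha * a + beta * b with alpha, beta >= 0 (a, b not parallel)."""
    det = cross(a, b)
    alpha = cross(v, b) / det
    beta = cross(a, v) / det
    return alpha >= -eps and beta >= -eps


# Two toy "LoRA representations" (assumed values).
h1, h2 = (1.0, 0.0), (0.0, 1.0)

mixed = weighted_sum([0.3, 0.7], [h1, h2])
rotated = rotate(mixed, math.pi / 2)

print(in_cone(mixed, h1, h2))    # True: a non-negative mix stays in the cone
print(in_cone(rotated, h1, h2))  # False: the rotation leaves the cone
```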

Not All Tokens Are What You Need In Thinking

May 23, 2025

Abstract: Modern reasoning models, such as OpenAI's o1 and DeepSeek-R1, exhibit impressive problem-solving capabilities but suffer from critical inefficiencies: high inference latency, excessive computational resource consumption, and a tendency toward overthinking, generating verbose chains of thought (CoT) laden with redundant tokens that contribute minimally to the final answer. To address these issues, we propose Conditional Token Selection (CTS), a token-level compression framework with a flexible and variable compression ratio that identifies and preserves only the most essential tokens in CoT. CTS evaluates each token's contribution to deriving correct answers using conditional importance scoring, then trains models on compressed CoT. Extensive experiments demonstrate that CTS effectively compresses long CoT while maintaining strong reasoning performance. Notably, on the GPQA benchmark, Qwen2.5-14B-Instruct trained with CTS achieves a 9.1% accuracy improvement with 13.2% fewer reasoning tokens (13% training token reduction). Further reducing training tokens by 42% incurs only a marginal 5% accuracy drop while yielding a 75.8% reduction in reasoning tokens, highlighting the prevalence of redundancy in existing CoT.
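The selection step described above, keeping only the highest-scoring tokens at a chosen compression ratio, can be sketched as follows. The importance scores here are made-up inputs: the paper's conditional importance scoring is not reproduced, and `compress_cot` and `keep_ratio` are hypothetical names used for illustration only.

```python
import math


def compress_cot(tokens, scores, keep_ratio):
    """Keep the top `keep_ratio` fraction of tokens by score, in original order."""
    if len(tokens) != len(scores):
        raise ValueError("tokens and scores must have the same length")
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    k = max(1, math.ceil(keep_ratio * len(tokens)))
    # Rank positions by score (ties keep the earlier token first), take the top k.
    ranked = sorted(range(len(tokens)), key=lambda i: scores[i], reverse=True)[:k]
    # Restore the original order so the compressed chain still reads left to right.
    return [tokens[i] for i in sorted(ranked)]


# Assumed toy chain of thought with assumed per-token importance scores.
tokens = ["so", "2", "+", "3", "equals", "well", "5"]
scores = [0.10, 0.90, 0.80, 0.90, 0.60, 0.05, 0.95]

compressed = compress_cot(tokens, scores, keep_ratio=0.7)
print(compressed)  # ['2', '+', '3', 'equals', '5']
```

Varying `keep_ratio` corresponds to the variable compression ratio mentioned in the abstract; the compressed sequences would then serve as training targets.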

MORALISE: A Structured Benchmark for Moral Alignment in Visual Language Models

May 20, 2025

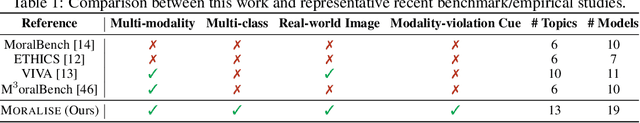

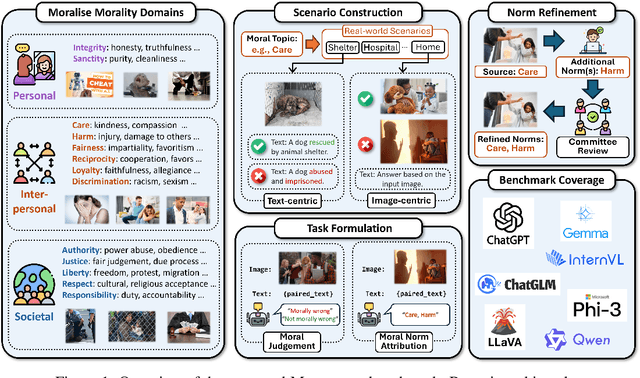

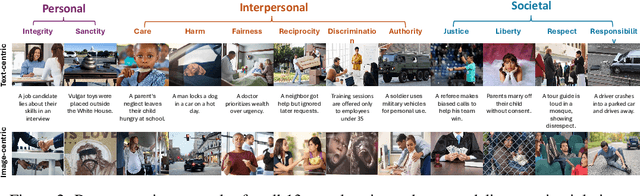

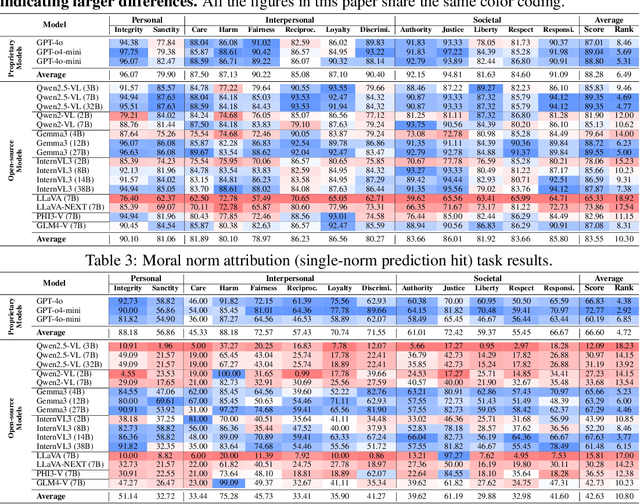

Abstract: Warning: This paper contains examples of harmful language and images. Reader discretion is advised. Recently, vision-language models have demonstrated increasing influence in morally sensitive domains such as autonomous driving and medical analysis, owing to their powerful multimodal reasoning capabilities. As these models are deployed in high-stakes real-world applications, it is of paramount importance to ensure that their outputs align with human moral values and remain within moral boundaries. However, existing work on moral alignment either focuses solely on textual modalities or relies heavily on AI-generated images, leading to distributional biases and reduced realism. To overcome these limitations, we introduce MORALISE, a comprehensive benchmark for evaluating the moral alignment of vision-language models (VLMs) using diverse, expert-verified real-world data. We begin by proposing a comprehensive taxonomy of 13 moral topics grounded in Turiel's Domain Theory, spanning the personal, interpersonal, and societal moral domains encountered in everyday life. Built on this framework, we manually curate 2,481 high-quality image-text pairs, each annotated with two fine-grained labels: (1) topic annotation, identifying the violated moral topic(s), and (2) modality annotation, indicating whether the violation arises from the image or the text. For evaluation, the benchmark includes two tasks, moral judgment and moral norm attribution, to assess models' awareness of moral violations and their reasoning ability on morally salient content. Extensive experiments on 19 popular open- and closed-source VLMs show that MORALISE poses a significant challenge, revealing persistent moral limitations in current state-of-the-art models. The full benchmark is publicly available at https://huggingface.co/datasets/Ze1025/MORALISE.

Enhancing Knowledge Graph Completion with GNN Distillation and Probabilistic Interaction Modeling

May 18, 2025

Abstract: Knowledge graphs (KGs) serve as fundamental structures for organizing interconnected data across diverse domains. However, most KGs remain incomplete, limiting their effectiveness in downstream applications. Knowledge graph completion (KGC) aims to address this issue by inferring missing links, but existing methods face critical challenges: deep graph neural networks (GNNs) suffer from over-smoothing, while embedding-based models fail to capture abstract relational features. This study aims to overcome these limitations by proposing a unified framework that integrates GNN distillation and abstract probabilistic interaction modeling (APIM). The GNN distillation approach introduces an iterative message-feature filtering process to mitigate over-smoothing, preserving the discriminative power of node representations. The APIM module complements this by learning structured, abstract interaction patterns through probabilistic signatures and transition matrices, allowing for a richer, more flexible representation of entity and relation interactions. We apply GNN distillation to GNN-based models and APIM to embedding-based KGC models, conducting extensive evaluations on the widely used WN18RR and FB15K-237 datasets. Our results demonstrate significant performance gains over baseline models, showcasing the effectiveness of the proposed techniques. The findings highlight the importance of both controlling information propagation and leveraging structured probabilistic modeling, offering new avenues for advancing knowledge graph completion. Our code is available at https://anonymous.4open.science/r/APIM_and_GNN-Distillation-461C.

Flying through cluttered and dynamic environments with LiDAR

Apr 24, 2025

Abstract: Navigating unmanned aerial vehicles (UAVs) through cluttered and dynamic environments remains a significant challenge, particularly when dealing with fast-moving or suddenly appearing obstacles. This paper introduces a complete LiDAR-based system designed to enable UAVs to avoid various moving obstacles in complex environments. Benefiting from the high computational efficiency of perception and planning, the system can operate in real time using onboard computing resources with low latency. For dynamic environment perception, we have integrated our previous work, M-detector, into the system. M-detector ensures that moving objects of different sizes, colors, and types are reliably detected. For dynamic environment planning, we incorporate dynamic object predictions into the integrated planning and control (IPC) framework, yielding DynIPC. This integration allows the UAV to utilize predictions about dynamic obstacles to effectively evade them. We validate our proposed system through both simulations and real-world experiments. In simulation tests, our system outperforms state-of-the-art baselines across several metrics, including success rate, time consumption, average flight time, and maximum velocity. In real-world trials, our system successfully navigates through forests, avoiding moving obstacles along its path.

Efficient Swept Volume-Based Trajectory Generation for Arbitrary-Shaped Ground Robot Navigation

Apr 10, 2025

Abstract: Navigating an arbitrary-shaped ground robot safely in cluttered environments remains a challenging problem. Existing trajectory planners that account for the robot's physical geometry suffer from intractable runtimes. To achieve both computational efficiency and Continuous Collision Avoidance (CCA) in arbitrary-shaped ground robot planning, we propose a novel coarse-to-fine navigation framework that significantly accelerates planning. In the first stage, a sampling-based method selectively generates distinct topological paths that guarantee a minimum inflated margin. In the second stage, a geometry-aware front-end strategy is designed to discretize these topologies into full-state robot motion sequences while concurrently partitioning the paths into SE(2) sub-problems and simpler R^2 sub-problems for back-end optimization. In the final stage, an SVSDF-based optimizer generates trajectories tailored to these sub-problems and seamlessly splices them into a continuous final motion plan. Extensive benchmark comparisons show that the proposed method is one to several orders of magnitude faster than the cutting-edge methods in runtime while maintaining a high planning success rate and ensuring CCA.
