Gui-Song Xia

HoW-3D: Holistic 3D Wireframe Perception from a Single Image

Aug 19, 2022

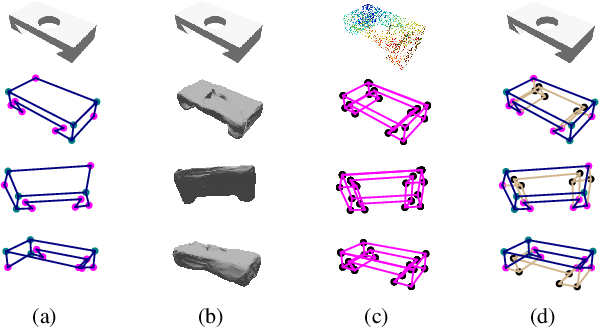

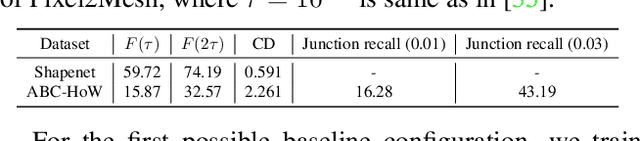

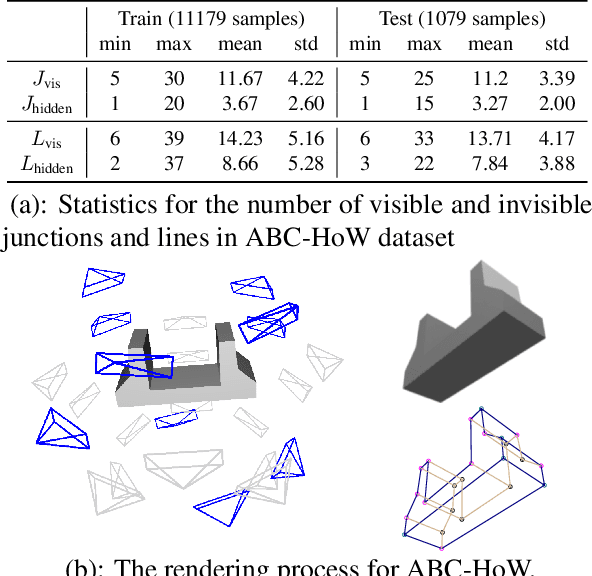

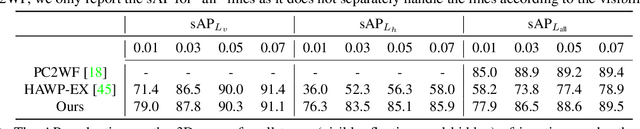

Abstract: This paper studies the problem of holistic 3D wireframe perception (HoW-3D), a new task of perceiving both the visible 3D wireframes and the invisible ones from single-view 2D images. As the non-front surfaces of an object cannot be directly observed in a single view, estimating the non-line-of-sight (NLOS) geometries in HoW-3D is a fundamentally challenging problem and remains open in computer vision. We study the problem of HoW-3D by proposing an ABC-HoW benchmark, which is created on top of CAD models sourced from the ABC-dataset with 12k single-view images and the corresponding holistic 3D wireframe models. With our large-scale ABC-HoW benchmark available, we present a novel Deep Spatial Gestalt (DSG) model to learn the visible junctions and line segments as the basis and then infer the NLOS 3D structures from the visible cues by following the Gestalt principles of the human visual system. In our experiments, we demonstrate that our DSG model performs very well in inferring the holistic 3D wireframes from single-view images. Compared with the strong baseline methods, our DSG model outperforms the previous wireframe detectors in detecting the invisible line geometry in single-view images and is even very competitive with prior art that takes high-fidelity point clouds as input for reconstructing 3D wireframes.

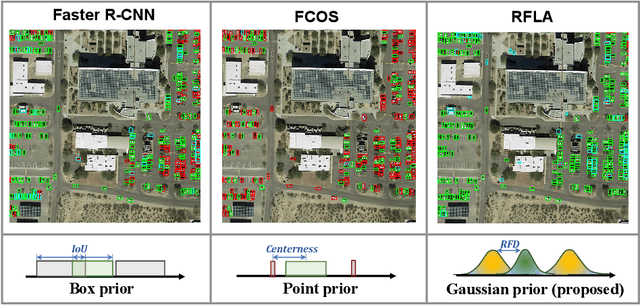

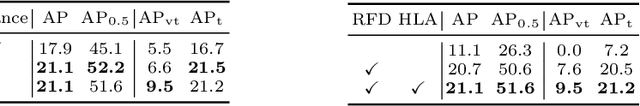

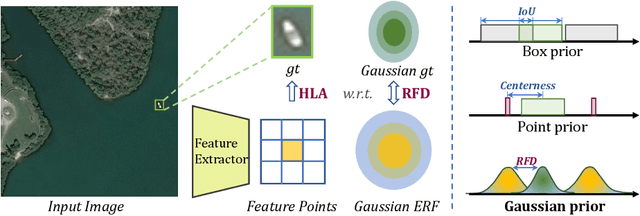

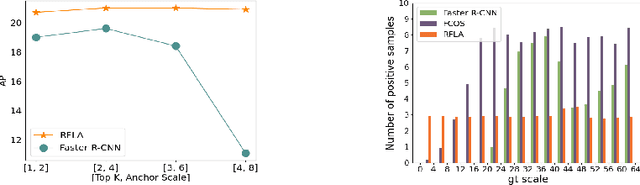

RFLA: Gaussian Receptive Field based Label Assignment for Tiny Object Detection

Aug 18, 2022

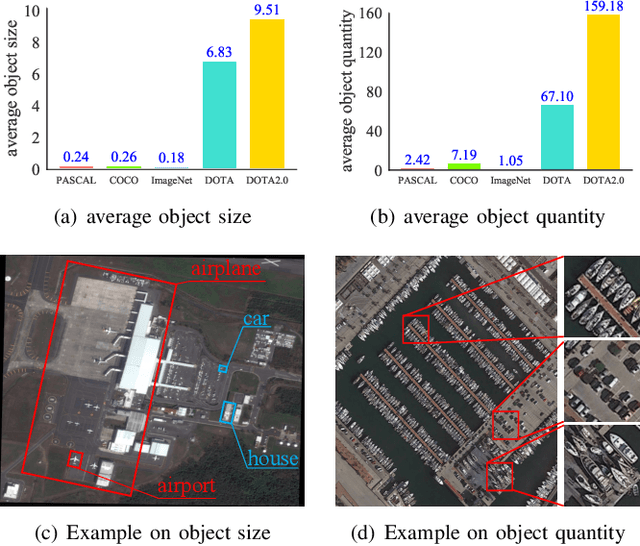

Abstract: Detecting tiny objects is one of the main obstacles hindering the development of object detection. The performance of generic object detectors tends to drastically deteriorate on tiny object detection tasks. In this paper, we point out that both the box prior in anchor-based detectors and the point prior in anchor-free detectors are sub-optimal for tiny objects. Our key observation is that the current anchor-based or anchor-free label assignment paradigms will incur many outlier tiny-sized ground truth samples, leading to detectors imposing less focus on the tiny objects. To this end, we propose a Gaussian Receptive Field based Label Assignment (RFLA) strategy for tiny object detection. Specifically, RFLA first utilizes the prior information that the feature receptive field follows a Gaussian distribution. Then, instead of assigning samples with IoU or center sampling strategy, a new Receptive Field Distance (RFD) is proposed to directly measure the similarity between the Gaussian receptive field and ground truth. Considering that the IoU-threshold based and center sampling strategies are skewed to large objects, we further design a Hierarchical Label Assignment (HLA) module based on RFD to achieve balanced learning for tiny objects. Extensive experiments on four datasets demonstrate the effectiveness of the proposed methods. In particular, our approach outperforms state-of-the-art competitors by 4.0 AP points on the AI-TOD dataset. Code is available at https://github.com/Chasel-Tsui/mmdet-rfla
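To make the idea of a distribution-based similarity concrete, here is a minimal Python sketch. It is not taken from the RFLA code base: the abstract does not specify how RFD is computed, so the choice of KL divergence, its direction, the `1 / (1 + KL)` normalization, and the way the box and receptive field are turned into Gaussians are all assumptions made for illustration. The names `kl_diag_gauss` and `rf_similarity` are hypothetical.

```python
import math


def kl_diag_gauss(mu0, sig0, mu1, sig1):
    """KL(N0 || N1) for Gaussians with diagonal covariance.

    mu* are per-axis means and sig* are per-axis standard deviations.
    """
    kl = 0.0
    for m0, s0, m1, s1 in zip(mu0, sig0, mu1, sig1):
        kl += 0.5 * ((s0 / s1) ** 2 + ((m1 - m0) / s1) ** 2 - 1.0) + math.log(s1 / s0)
    return kl


def rf_similarity(point, rf_radius, gt_box):
    """Similarity in (0, 1] between a feature point's receptive field and a box.

    Assumptions (illustrative only):
      * the receptive field is an isotropic Gaussian centred on `point`
        with standard deviation `rf_radius`;
      * the ground-truth box (cx, cy, w, h) is a Gaussian centred on the
        box centre with standard deviations (w / 2, h / 2);
      * the distance is KL(box || receptive field), mapped to 1 / (1 + KL).
    """
    cx, cy, w, h = gt_box
    kl = kl_diag_gauss((cx, cy), (w / 2, h / 2), point, (rf_radius, rf_radius))
    return 1.0 / (1.0 + kl)


if __name__ == "__main__":
    tiny_gt = (10.0, 10.0, 8.0, 8.0)
    for point in [(10.0, 10.0), (14.0, 10.0), (30.0, 10.0)]:
        print(point, rf_similarity(point, 4.0, tiny_gt))
```

Unlike an IoU threshold, this kind of score stays positive and varies smoothly even when the feature point lies outside the box, so candidates for a tiny ground truth can be ranked rather than all rejected.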

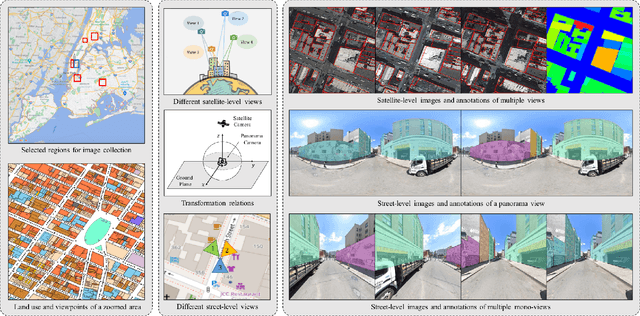

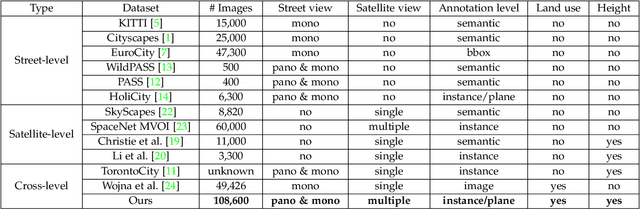

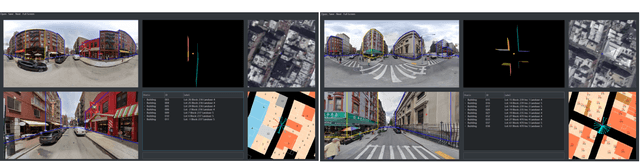

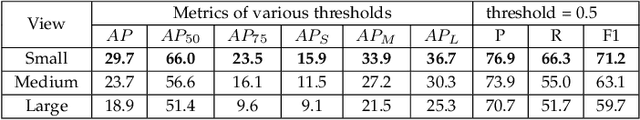

OmniCity: Omnipotent City Understanding with Multi-level and Multi-view Images

Aug 04, 2022

Abstract: This paper presents OmniCity, a new dataset for omnipotent city understanding from multi-level and multi-view images. More precisely, OmniCity contains multi-view satellite images as well as street-level panorama and mono-view images, constituting over 100K pixel-wise annotated images that are well-aligned and collected from 25K geo-locations in New York City. To alleviate the substantial pixel-wise annotation efforts, we propose an efficient street-view image annotation pipeline that leverages the existing label maps of satellite view and the transformation relations between different views (satellite, panorama, and mono-view). With the new OmniCity dataset, we provide benchmarks for a variety of tasks including building footprint extraction, height estimation, and building plane/instance/fine-grained segmentation. Compared with the existing multi-level and multi-view benchmarks, OmniCity contains a larger number of images with richer annotation types and more views, provides more benchmark results of state-of-the-art models, and introduces a novel task for fine-grained building instance segmentation on street-level panorama images. Moreover, OmniCity provides new problem settings for existing tasks, such as cross-view image matching, synthesis, segmentation, detection, etc., and facilitates the development of new methods for large-scale city understanding, reconstruction, and simulation. The OmniCity dataset as well as the benchmarks will be available at https://city-super.github.io/omnicity.

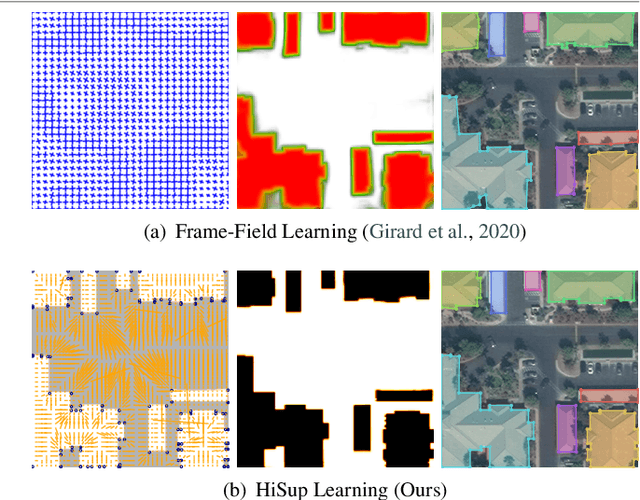

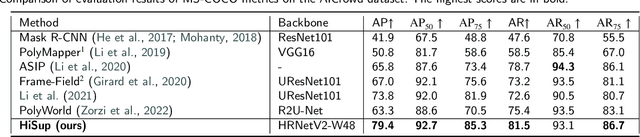

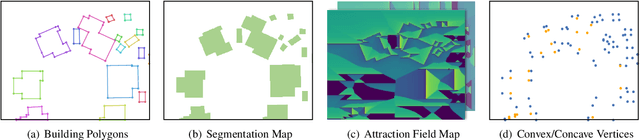

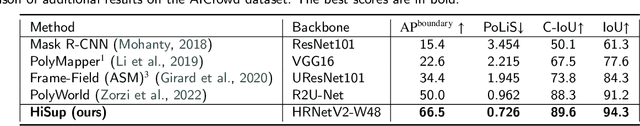

Accurate Polygonal Mapping of Buildings in Satellite Imagery

Aug 01, 2022

Abstract: This paper studies the problem of polygonal mapping of buildings by tackling the issue of mask reversibility that leads to a notable performance gap between the predicted masks and polygons from the learning-based methods. We address this issue by exploiting hierarchical supervision (of bottom-level vertices, mid-level line segments and high-level regional masks) and propose a novel interaction mechanism of feature embedding sourced from different levels of supervision signals to obtain reversible building masks for polygonal mapping of buildings. As a result, we show that the learned reversible building masks inherit all the merits of the advances of deep convolutional neural networks for high-performing polygonal mapping of buildings. In the experiments, we evaluate our method on the two public benchmarks of AICrowd and Inria. On the AICrowd dataset, our proposed method obtains unanimous improvements on the metrics of AP, APboundary and PoLiS. For the Inria dataset, our proposed method also obtains very competitive results on the metrics of IoU and Accuracy. The models and source code are available at https://github.com/SarahwXU.

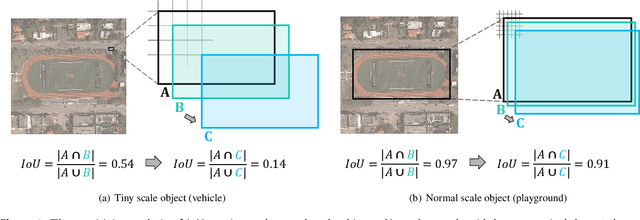

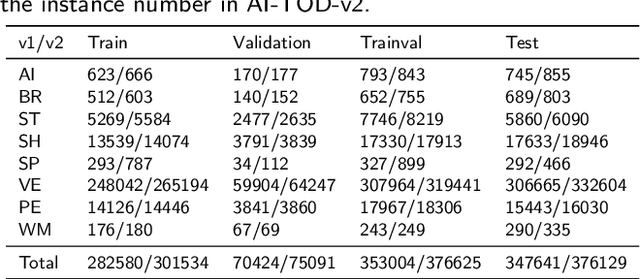

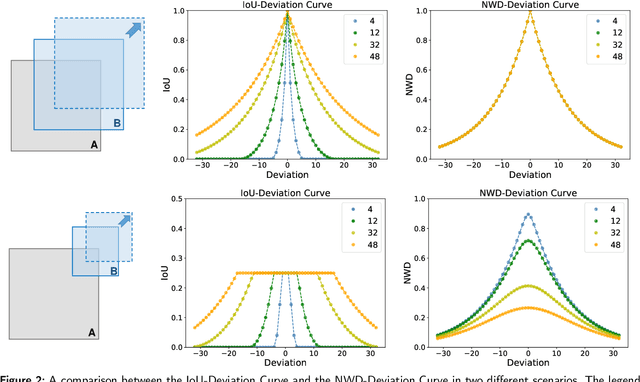

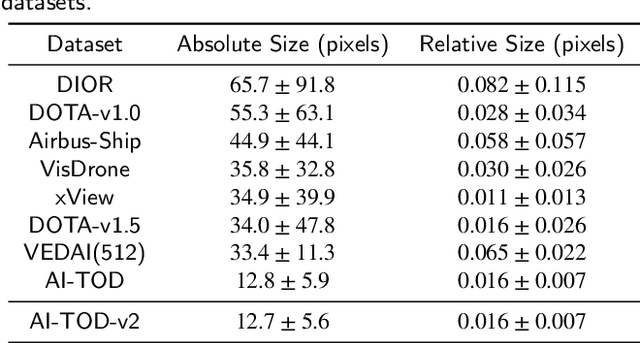

Detecting tiny objects in aerial images: A normalized Wasserstein distance and a new benchmark

Jun 28, 2022

Abstract: Tiny object detection (TOD) in aerial images is challenging since a tiny object only contains a few pixels. State-of-the-art object detectors do not provide satisfactory results on tiny objects due to the lack of supervision from discriminative features. Our key observation is that the Intersection over Union (IoU) metric and its extensions are very sensitive to the location deviation of the tiny objects, which drastically deteriorates the quality of label assignment when used in anchor-based detectors. To tackle this problem, we propose a new evaluation metric dubbed Normalized Wasserstein Distance (NWD) and a new RanKing-based Assigning (RKA) strategy for tiny object detection. The proposed NWD-RKA strategy can be easily embedded into all kinds of anchor-based detectors to replace the standard IoU threshold-based one, significantly improving label assignment and providing sufficient supervision information for network training. Tested on four datasets, NWD-RKA can consistently improve tiny object detection performance by a large margin. Besides, observing prominent noisy labels in the Tiny Object Detection in Aerial Images (AI-TOD) dataset, we are motivated to meticulously relabel it and release AI-TOD-v2 and its corresponding benchmark. In AI-TOD-v2, the missing annotation and location error problems are considerably mitigated, facilitating more reliable training and validation processes. When NWD-RKA is embedded into DetectoRS, the detection performance improves by 4.3 AP points over state-of-the-art competitors on AI-TOD-v2. Datasets, code, and more visualizations are available at: https://chasel-tsui.github.io/AI-TOD-v2/
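The sensitivity of IoU to small location deviations can be checked numerically. The sketch below compares plain IoU with a Wasserstein-style similarity for a 2-pixel shift applied to a tiny box and to a large one. The abstract does not give the NWD formula, so the Gaussian modeling of a box (mean at the centre, covariance `diag(w^2/4, h^2/4)`), the exponential normalization, and the constant `c=12.8` are assumptions made for illustration. The function names are hypothetical.

```python
import math


def iou(a, b):
    """Intersection over Union of two boxes given as (cx, cy, w, h)."""
    ax1, ay1 = a[0] - a[2] / 2, a[1] - a[3] / 2
    ax2, ay2 = a[0] + a[2] / 2, a[1] + a[3] / 2
    bx1, by1 = b[0] - b[2] / 2, b[1] - b[3] / 2
    bx2, by2 = b[0] + b[2] / 2, b[1] + b[3] / 2
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def nwd(a, b, c=12.8):
    """Wasserstein-style similarity in (0, 1] between two (cx, cy, w, h) boxes.

    Assumption: each box is a 2D Gaussian with mean (cx, cy) and covariance
    diag(w^2 / 4, h^2 / 4). For such Gaussians the 2-Wasserstein distance is
    the Euclidean distance between the vectors (cx, cy, w / 2, h / 2).
    `c` is a hypothetical normalization constant; tune it per dataset.
    """
    w2 = math.sqrt(
        (a[0] - b[0]) ** 2
        + (a[1] - b[1]) ** 2
        + ((a[2] - b[2]) / 2) ** 2
        + ((a[3] - b[3]) / 2) ** 2
    )
    return math.exp(-w2 / c)


if __name__ == "__main__":
    tiny, tiny_shifted = (10, 10, 4, 4), (12, 10, 4, 4)
    large, large_shifted = (100, 100, 64, 64), (102, 100, 64, 64)
    print("tiny  IoU:", iou(tiny, tiny_shifted), "NWD:", nwd(tiny, tiny_shifted))
    print("large IoU:", iou(large, large_shifted), "NWD:", nwd(large, large_shifted))
```

The same 2-pixel shift cuts the IoU of the 4x4 box to one third while the 64x64 box stays above 0.9. The Gaussian-based score depends only on the shift and the size difference, so it is identical in both cases and remains positive even for boxes that do not overlap at all.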

* Accepted by ISPRS Journal of Photogrammetry and Remote Sensing

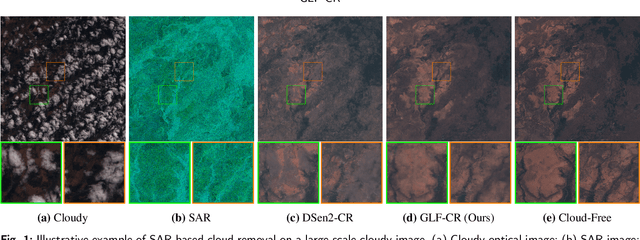

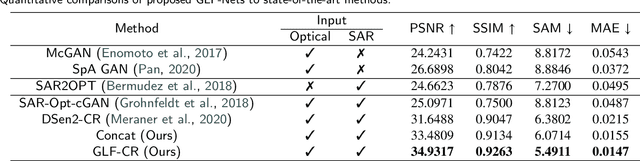

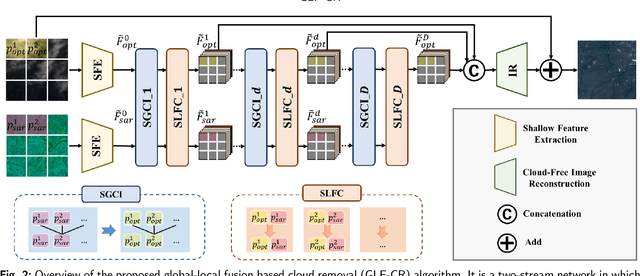

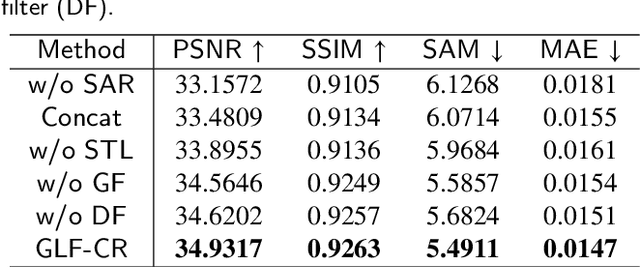

Exploring the Potential of SAR Data for Cloud Removal in Optical Satellite Imagery

Jun 06, 2022

Abstract: The challenge of the cloud removal task can be alleviated with the aid of Synthetic Aperture Radar (SAR) images that can penetrate cloud cover. However, the large domain gap between optical and SAR images as well as the severe speckle noise of SAR images may cause significant interference in SAR-based cloud removal, resulting in performance degradation. In this paper, we propose a novel global-local fusion based cloud removal (GLF-CR) algorithm to leverage the complementary information embedded in SAR images. Exploiting the power of SAR information to promote cloud removal entails two aspects. The first, global fusion, guides the relationship among all local optical windows to keep the structure of the recovered region consistent with the remaining cloud-free regions. The second, local fusion, transfers complementary information embedded in the SAR image that corresponds to cloudy areas to generate reliable texture details of the missing regions, and uses dynamic filtering to alleviate the performance degradation caused by speckle noise. Extensive evaluation demonstrates that the proposed algorithm can yield high-quality cloud-free images and performs favorably against state-of-the-art cloud removal algorithms.

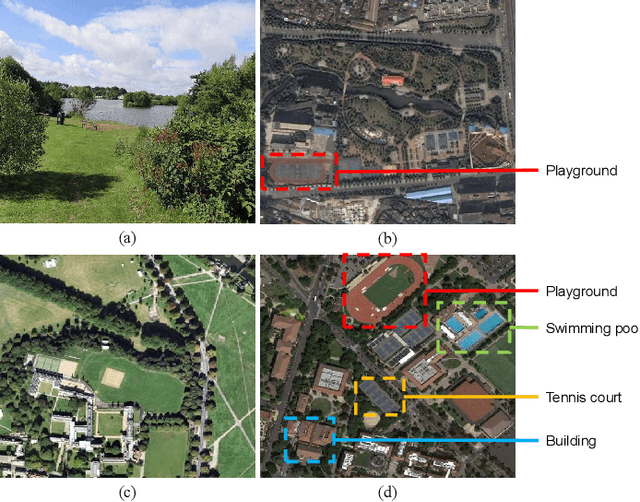

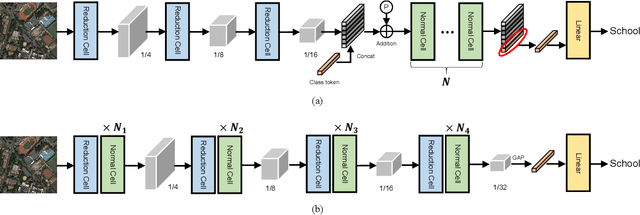

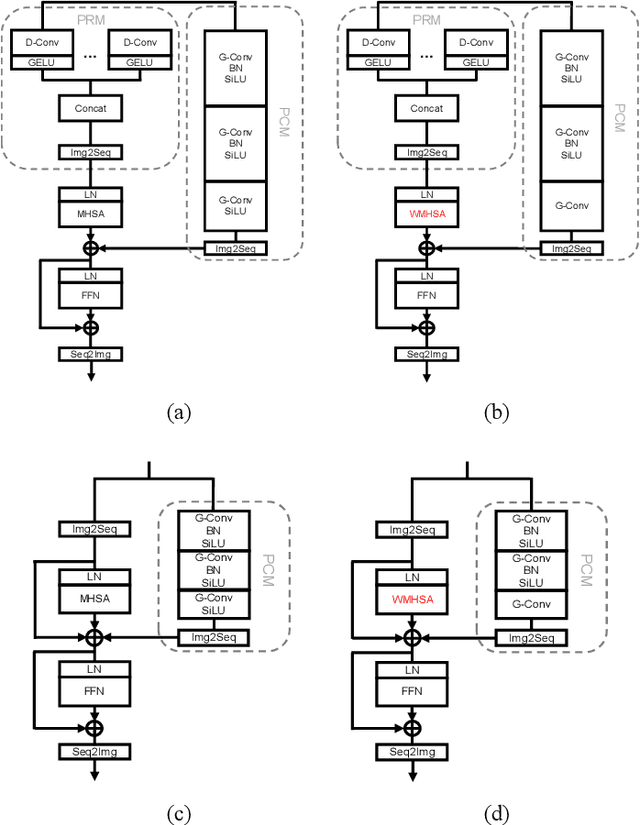

All Grains, One Scheme: Learning Multi-grain Instance Representation for Aerial Scene Classification

May 06, 2022

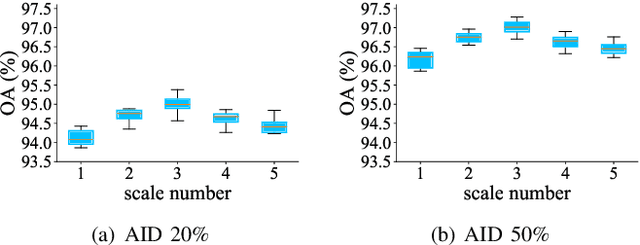

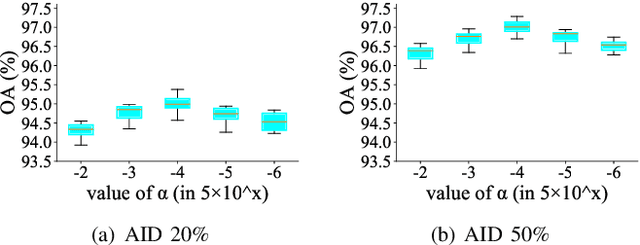

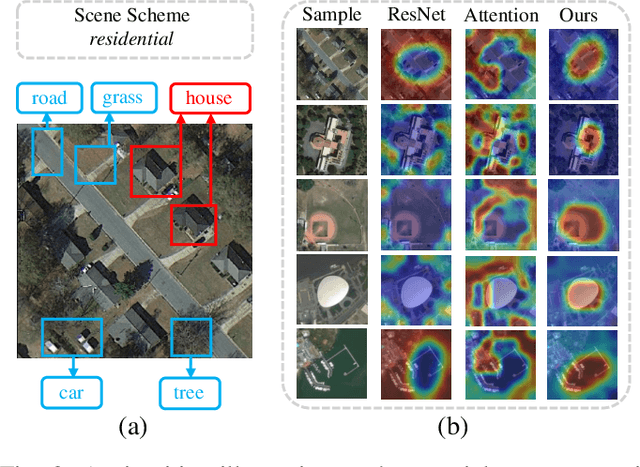

Abstract: Aerial scene classification remains challenging as: 1) the size of key objects in determining the scene scheme varies greatly; 2) objects irrelevant to the scene scheme often flood the image. Hence, how to effectively perceive the regions of interest (RoIs) from a variety of sizes and build more discriminative representation from such complicated object distribution is vital to understand an aerial scene. In this paper, we propose a novel all grains, one scheme (AGOS) framework to tackle these challenges. To the best of our knowledge, it is the first work to extend classic multiple instance learning (MIL) to a multi-grain formulation. Specifically, it consists of a multi-grain perception module (MGP), a multi-branch multi-instance representation module (MBMIR) and a self-aligned semantic fusion (SSF) module. Firstly, our MGP preserves the differential dilated convolutional features from the backbone, which magnifies the discriminative information from multi-grains. Then, our MBMIR highlights the key instances in the multi-grain representation under the MIL formulation. Finally, our SSF allows our framework to learn the same scene scheme from multi-grain instance representations and fuses them, so that the entire framework is optimized as a whole. Notably, our AGOS is flexible and can be easily adapted to existing CNNs in a plug-and-play manner. Extensive experiments on UCM, AID and NWPU benchmarks demonstrate that our AGOS achieves performance comparable to the state-of-the-art methods.

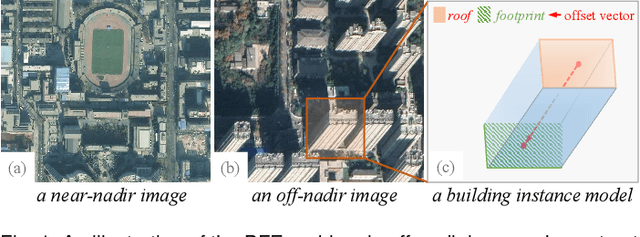

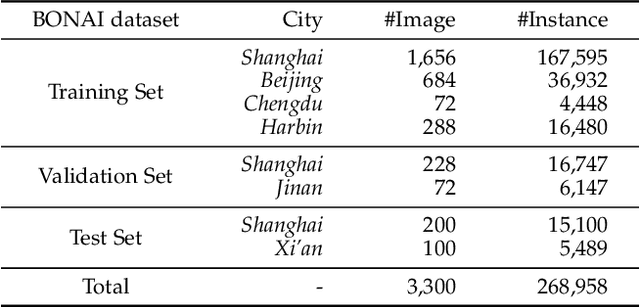

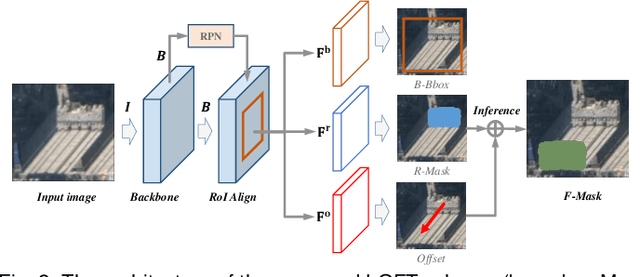

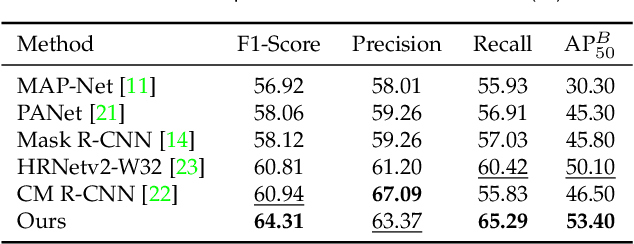

Learning to Extract Building Footprints from Off-Nadir Aerial Images

Apr 28, 2022

Abstract: Extracting building footprints from aerial images is essential for precise urban mapping with photogrammetric computer vision technologies. Existing approaches mainly assume that the roof and footprint of a building are well overlapped, which may not hold in off-nadir aerial images as there is often a big offset between them. In this paper, we propose an offset vector learning scheme, which turns the building footprint extraction problem in off-nadir images into an instance-level joint prediction problem of the building roof and its corresponding "roof to footprint" offset vector. Thus the footprint can be estimated by translating the predicted roof mask according to the predicted offset vector. We further propose a simple but effective feature-level offset augmentation module, which can significantly refine the offset vector prediction at little extra cost. Moreover, a new dataset, Buildings in Off-Nadir Aerial Images (BONAI), is created and released in this paper. It contains 268,958 building instances across 3,300 aerial images with fully annotated instance-level roof, footprint, and corresponding offset vector for each building. Experiments on the BONAI dataset demonstrate that our method achieves state-of-the-art performance, outperforming other competitors by 3.37 to 7.39 points in F1-score. The code, datasets, and trained models are available at https://github.com/jwwangchn/BONAI.git.
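The final step described above, translating a predicted roof mask by a predicted offset vector, can be sketched in a few lines of Python. This is an illustration only, not code from the BONAI repository: the mask representation (a list of rows of 0/1 values), the `(dx, dy)` pixel convention, the restriction to integer offsets, and the name `translate_mask` are assumptions.

```python
def translate_mask(mask, offset):
    """Shift a binary mask by an integer pixel offset.

    mask   -- list of rows, each a list of 0/1 values (hypothetical format)
    offset -- (dx, dy): columns to the right, rows downward (assumed convention)

    Pixels shifted outside the image are dropped.
    """
    height = len(mask)
    width = len(mask[0]) if height else 0
    dx, dy = offset
    shifted = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if mask[y][x]:
                nx, ny = x + dx, y + dy
                if 0 <= nx < width and 0 <= ny < height:
                    shifted[ny][nx] = 1
    return shifted


if __name__ == "__main__":
    roof = [
        [0, 1, 1, 0, 0],
        [0, 1, 1, 0, 0],
        [0, 0, 0, 0, 0],
        [0, 0, 0, 0, 0],
    ]
    # Hypothetical predicted "roof to footprint" offset: 1 px right, 2 px down.
    footprint = translate_mask(roof, (1, 2))
    for row in footprint:
        print(row)
```

A real pipeline would predict a sub-pixel offset per building instance and would need rounding or interpolation. The sketch keeps integer offsets to show only the translation step.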

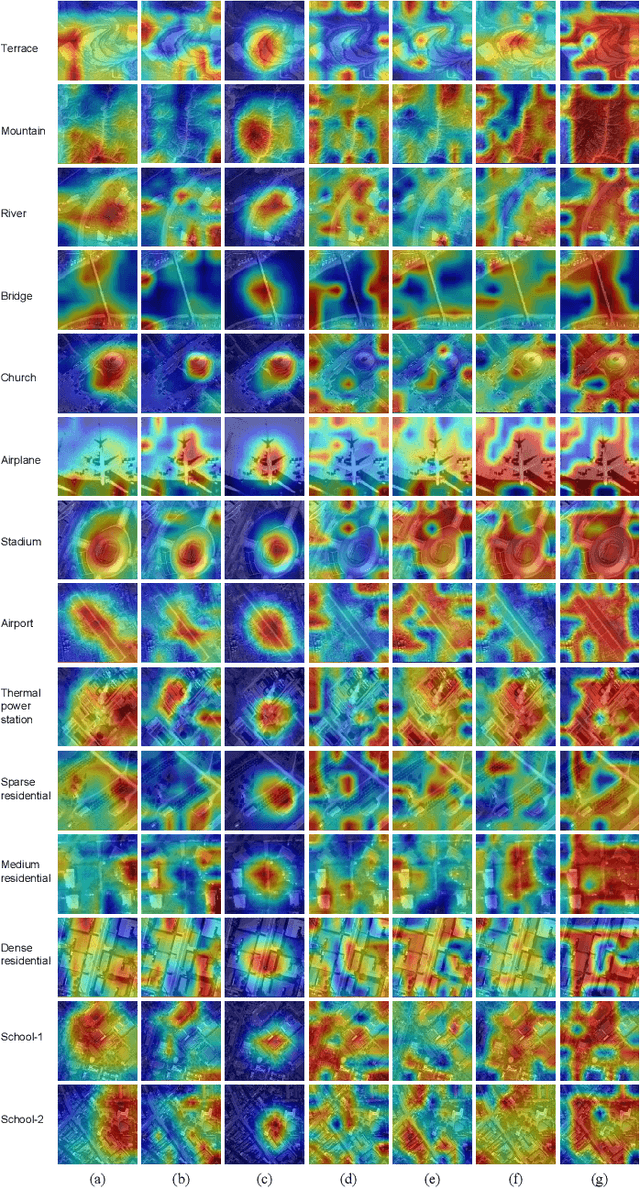

An Empirical Study of Remote Sensing Pretraining

Apr 06, 2022

Abstract: Deep learning has largely reshaped remote sensing research for aerial image understanding. Nevertheless, most existing deep models are initialized with ImageNet pretrained weights, and natural images inevitably present a large domain gap relative to aerial images, probably limiting the finetuning performance on downstream aerial scene tasks. This issue motivates us to conduct an empirical study of remote sensing pretraining (RSP). To this end, we train different networks from scratch with the help of the largest remote sensing scene recognition dataset to date, MillionAID, to obtain the remote sensing pretrained backbones, including both convolutional neural networks (CNN) and vision transformers such as Swin and ViTAE, which have shown promising performance on computer vision tasks. Then, we investigate the impact of ImageNet pretraining (IMP) and RSP on a series of downstream tasks including scene recognition, semantic segmentation, object detection, and change detection using the CNN and vision transformer backbones. We have some empirical findings as follows. First, vision transformers generally outperform CNN backbones, where ViTAE achieves the best performance, owing to its strong representation capacity by introducing intrinsic inductive bias from convolutions to transformers. Second, both IMP and RSP help deliver better performance, where IMP enjoys versatility by learning more universal representations from diverse images belonging to many more categories while RSP is distinctive in perceiving remote sensing related semantics. Third, RSP mitigates the data discrepancy of IMP for remote sensing but may still suffer from the task discrepancy, where downstream tasks require different representations from the scene recognition task. These findings call for further research efforts on both large-scale pretraining datasets and effective pretraining methods.

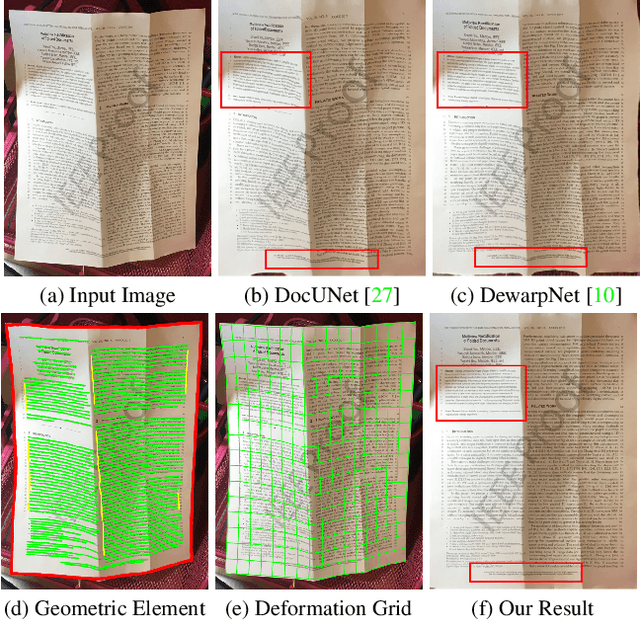

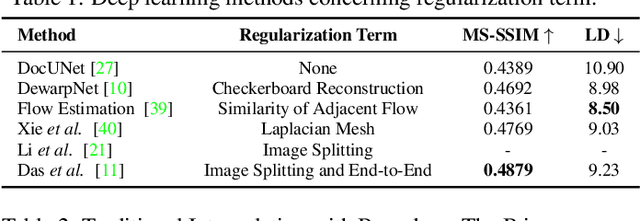

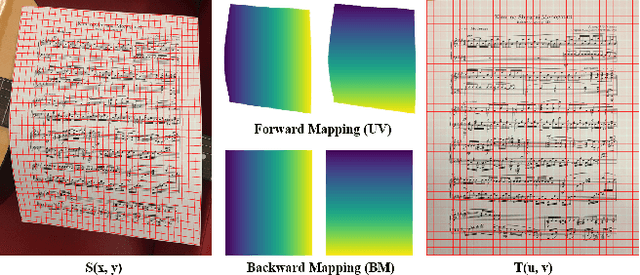

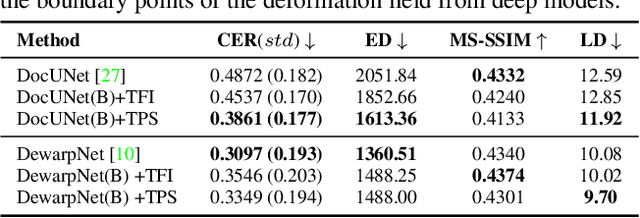

Revisiting Document Image Dewarping by Grid Regularization

Mar 31, 2022

Abstract: This paper addresses the problem of document image dewarping, which aims at eliminating the geometric distortion in document images for document digitization. Instead of designing a better neural network to approximate the optical flow fields between the inputs and outputs, we pursue the best readability by taking the text lines and the document boundaries into account from a constrained optimization perspective. Specifically, our proposed method first learns the boundary points and the pixels in the text lines and then follows the simple observation that the boundaries and text lines in both horizontal and vertical directions should be kept after dewarping to introduce a novel grid regularization scheme. To obtain the final forward mapping for dewarping, we solve an optimization problem with our proposed grid regularization. The experiments comprehensively demonstrate that our proposed approach outperforms the prior art by large margins in terms of readability (with the metrics of Character Error Rate and Edit Distance) while maintaining the best image quality on the publicly available DocUNet benchmark.
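The two readability metrics named above can be illustrated with a short Python sketch. The abstract does not define them, so this assumes the standard definitions: Edit Distance as the Levenshtein distance between the recognized text and the reference text, and Character Error Rate as that distance divided by the reference length. How the text is recognized from a dewarped image (for example, which OCR engine is used) is outside the scope of the sketch, and the function names are hypothetical.

```python
def edit_distance(reference, hypothesis):
    """Levenshtein distance: minimum insertions, deletions and substitutions."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        current = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            substitution = previous[j - 1] + (ref_char != hyp_char)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1]


def character_error_rate(reference, hypothesis):
    """Edit distance normalized by the reference length (assumed definition)."""
    if not reference:
        raise ValueError("reference text must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


if __name__ == "__main__":
    reference = "document"
    recognized = "docunent"  # hypothetical OCR output from a dewarped image
    print(edit_distance(reference, recognized))
    print(character_error_rate(reference, recognized))
```

Lower values of both metrics mean the text recognized from the dewarped image is closer to the ground-truth text.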
